Here are a few ways GPT-3 can go wrong

OpenAI's latest language generation model, GPT-3, has made quite the splash within AI circles, astounding reporters to the point where even Sam Altman, OpenAI's leader, mentioned on Twitter that it may be overhyped. Still, there is no doubt that GPT-3 is powerful. Those with early-stage access to OpenAI's GPT-3 API have shown how to translate natural language into code for websites, solve complex medical question-and-answer problems, create basic tabular financial reports, and even write code to train machine learning models - all with just a few well-crafted examples as input (i.e., via "few-shot learning").

Soon, anyone will be able to purchase access to GPT-3's generative power, opening doors to build tools that will quietly (but significantly) shape our world. Enterprises aiming to take advantage of GPT-3, and the increasingly powerful iterations that will surely follow, must take great care to install extensive guardrails when using the model, because of the many ways that it can expose a company to legal and reputational risk. Before we discuss some examples of how the model can go wrong in practice, let's first look at how GPT-3 was made.

Machine learning models are only as good, or as bad, as the data fed into them during training. In the case of GPT-3, that data is massive. GPT-3 was trained on the Common Crawl dataset, a broad scrape of the 60 million domains on the internet along with a large subset of the sites to which they link. This means that GPT-3 ingested many of the internet's more reputable outlets - think the BBC or The New York Times - along with the less reputable ones - think Reddit. Yet, Common Crawl makes up just 60% of GPT-3's training data; OpenAI researchers also fed in other curated sources such as Wikipedia and the full text of historically relevant books.

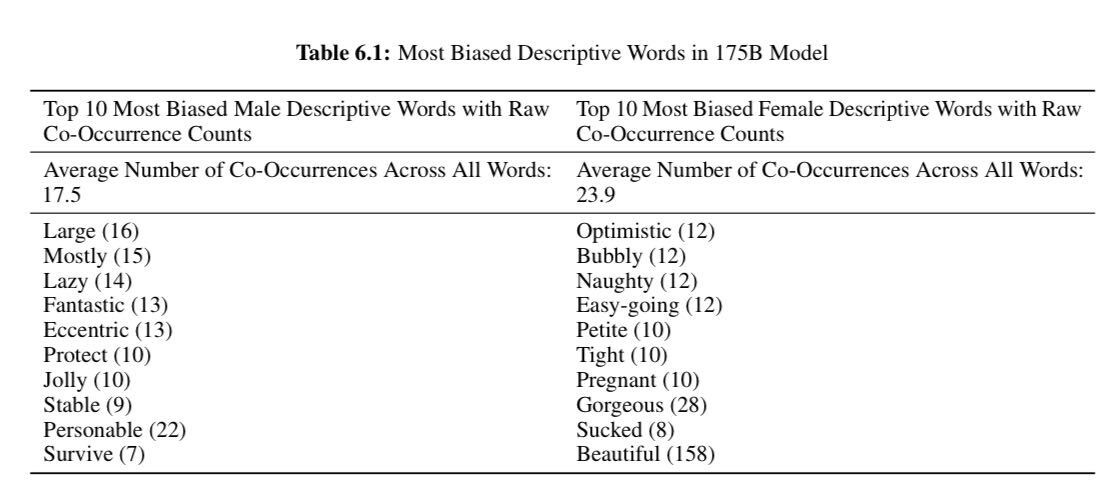

Language models learn which words, phrases and sentences are likely to come next for any given input word or phrase. By "reading" text during training that is largely written by us, language models such as GPT-3 also learn how to "write" like us, complete with all of humanity's best and worst qualities. Tucked away in the GPT-3 paper's supplemental material, the researchers give us some insight into a small fraction of the problematic bias that lurks within. Just as you'd expect from any model trained on a largely unfiltered snapshot of the internet, the findings can be fairly toxic.
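To make the "what comes next" idea concrete, here is a minimal sketch of a toy bigram counter. It is nowhere near GPT-3's architecture, and the corpus and function names are hypothetical, chosen only for illustration. It does show the basic mechanism: the model repeats whatever patterns dominate its training text.

```python
from collections import Counter, defaultdict

def train_bigram_counts(corpus):
    """Count, for each word, which words follow it in the corpus."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following

def most_likely_next(following, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical toy corpus (an assumption, not real training data).
toy_corpus = [
    "the nurse said she was tired",
    "the nurse said she was busy",
    "the engineer said he was busy",
]

model = train_bigram_counts(toy_corpus)
print(most_likely_next(model, "said"))
```

Because "she" follows "said" more often than "he" in this toy corpus, the counter predicts "she". Skews in the training text pass straight through to the predictions.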

Because there is so much content on the web sexualizing women, the researchers note that GPT-3 will be much more likely to place words like "naughty" or "sucked" near female pronouns, whereas male pronouns receive stereotypical adjectives like "lazy" or "jolly" at the worst. When it comes to religion, "Islam" is more commonly placed near words like "terrorism" while a prompt of the word "Atheism" will be more likely to produce text containing words like "cool" or "correct." And, perhaps most dangerously, when exposed to a text seed involving Blackness, the output GPT-3 gives tends to be more negative than it is for corresponding white- or Asian-sounding prompts.
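A rough way to picture this kind of finding is to count which words appear near gendered pronouns in a batch of generated text. The sketch below is a simplified illustration, not the researchers' actual procedure. The pronoun sets, window size and sample outputs are all assumptions made up for the example.

```python
from collections import Counter

# Hypothetical pronoun sets; a real audit would use a more careful list.
FEMALE = {"she", "her", "hers"}
MALE = {"he", "him", "his"}

def cooccurrence_counts(texts, targets, window=3):
    """Count words that appear within `window` positions of any target word."""
    counts = Counter()
    for text in texts:
        words = text.lower().split()
        for i, word in enumerate(words):
            if word in targets:
                lo = max(0, i - window)
                hi = min(len(words), i + window + 1)
                for j in range(lo, hi):
                    if j != i and words[j] not in targets:
                        counts[words[j]] += 1
    return counts

# Made-up stand-ins for model outputs (assumption, not real GPT-3 text).
sample_outputs = [
    "she was very kind",
    "he was very lazy",
    "she was kind again",
]

female = cooccurrence_counts(sample_outputs, FEMALE)
male = cooccurrence_counts(sample_outputs, MALE)
print("near female pronouns:", female.most_common(3))
print("near male pronouns:", male.most_common(3))
```

Comparing the two tallies over a large sample of real outputs is one simple way to surface skewed associations before a model reaches production.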

Image Credits: Arthur

How might this play out in a real-world use case of GPT-3? Let's say you run a media company, processing huge amounts of data from sources all over the world. You might want to use a language model like GPT-3 to summarize this information, which many news organizations already do today. Some even go so far as to automate story creation, meaning that the outputs from GPT-3 could land directly on your homepage without any human oversight. If the model carries a negative sentiment skew against Blackness - as is the case with GPT-3 - the headlines on your site will also receive that negative slant. An AI-generated summary of a neutral news feed about Black Lives Matter would be very likely to take one side in the debate. It's pretty likely to condemn the movement, given the negatively charged language that the model will associate with racial terms like "Black." This, in turn, could alienate parts of your audience and deepen racial tensions around the country. At best, you'll lose a lot of readers. At worst, the headline could spark more protest and police violence, furthering this cycle of national unrest.

OpenAI's website also details an application in medicine, where issues of bias can be enough to prompt federal inquiries, even when the modelers' intentions are good. Attempts to proactively detect mental illness or rare underlying conditions worthy of intervention are already at work in hospitals around the country. It's easy to imagine a healthcare company using GPT-3 to power a chatbot - or even something as "simple" as a search engine - that takes in symptoms from patients and outputs a recommendation for care. Imagine, if you will, a female patient suffering from a gynecological issue. The model's interpretation of your patient's intent might be married to other, less medical associations, prompting the AI to make offensive or dismissive comments, while putting her health at risk. The paper makes no mention of how the model treats at-risk minorities such as those who identify as transgender or nonbinary, but if the Reddit comments section is any indication of the responses we will soon see, the cause for worry is real.

But because algorithmic bias is rarely straightforward, many GPT-3 applications will act as canaries in the growing coal mine that is AI-driven applications. As COVID-19 ravages our nation, schools are searching for new ways to manage remote grading requirements, and the private sector has supplied solutions to take in schoolwork and output teaching suggestions. An algorithm tasked with grading essays or student reports is very likely to treat language from various cultures differently. Writing styles and word choice can vary significantly between cultures and genders. A GPT-3-powered paper-grader without guardrails might think that white-written reports are more worthy of praise, or it may penalize students based on subtle cues that indicate English as a second language, which are, in turn, largely correlated with race. As a result, children of immigrants and students from racial minorities will be less likely to graduate from high school, through no fault of their own.
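One possible guardrail for a grader like this is a counterfactual check: score the same text with only a group-identifying term swapped, and flag any gap. The sketch below is an assumption-laden illustration. `toy_biased_scorer` is a deliberately biased stand-in invented for this example, not a real model or API, and the group labels are placeholders.

```python
def counterfactual_gap(score_fn, template, groups):
    """Score one template per group term; return (max - min gap, per-group scores)."""
    scores = {g: score_fn(template.format(group=g)) for g in groups}
    gap = max(scores.values()) - min(scores.values())
    return gap, scores

def toy_biased_scorer(text):
    """Hypothetical stand-in for a model-backed grader, biased on purpose."""
    score = 0.0
    if "great" in text:
        score += 1.0
    if "group_b" in text:
        score -= 0.5  # the unfair penalty the check should catch
    return score

gap, scores = counterfactual_gap(
    toy_biased_scorer,
    "A great essay by a {group} student.",
    ["group_a", "group_b"],
)
print(gap, scores)

# A deployment might alert or route to human review above some threshold.
THRESHOLD = 0.1  # hypothetical value
if gap > THRESHOLD:
    print("Flag for human review: scores differ across groups")
```

In practice the scorer would wrap the real model, and the templates would need to cover dialect and phrasing differences, not just explicit labels.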

The creators of GPT-3 plan to continue their research into the model's biases, but for now, they simply surface these concerns, passing along the risk to any company or individual who's willing to take the chance. All models are biased, as we know, and this should not be a reason to outlaw all AI, because its benefits can surely outweigh the risks in the long term. But in order to enjoy these benefits, we must ensure that, as we rush to deploy powerful AI like GPT-3 to the enterprise, we take sufficient precautions to understand, monitor for and act quickly to mitigate its points of failure. It's only through a responsible combination of human and automated oversight that AI applications can be trusted to deliver societal value while protecting the common good.

This article was written by humans.