Deep Vision announces its low-latency AI processor for the edge

Deep Vision, a new AI startup that is building an AI inferencing chip for edge computing solutions, is coming out of stealth today. The six-year-old company's new ARA-1 processors promise to strike the right balance between low latency, energy efficiency and compute power for use in anything from sensors to cameras and full-fledged edge servers.

Because of its strength in real-time video analysis, the company is aiming its chip at solutions around smart retail, including cashier-less stores, smart cities and Industry 4.0/robotics. The company is also working with suppliers to the automotive industry, but less around autonomous driving than monitoring in-cabin activity to ensure that drivers are paying attention to the road and aren't distracted or sleepy.

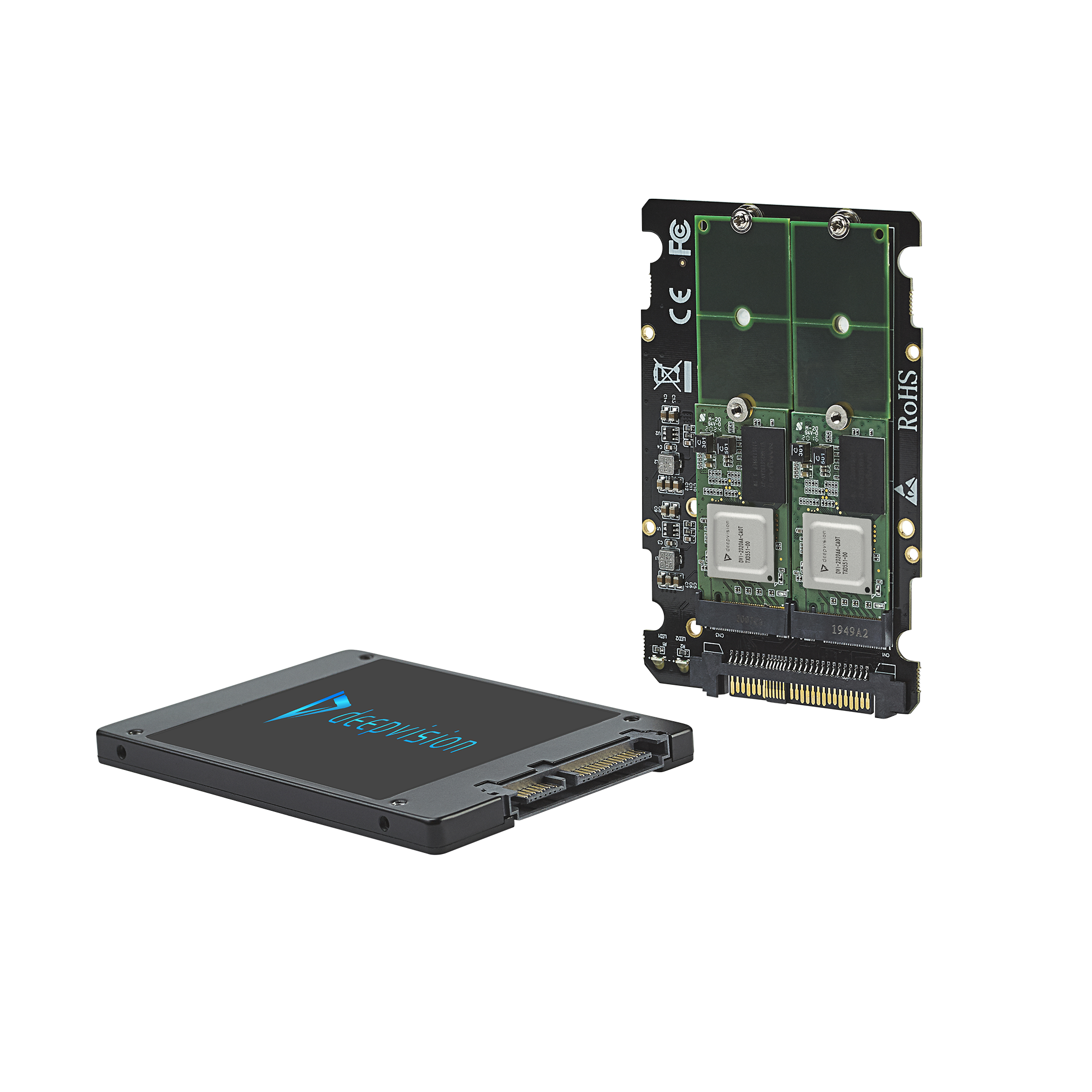

Image Credits: Deep Vision

The company was founded by its CTO Rehan Hameed and its Chief Architect Wajahat Qadeer, who recruited Ravi Annavajjhala, who previously worked at Intel and SanDisk, as the company's CEO. Hameed and Qadeer developed Deep Vision's architecture as part of a Ph.D. thesis at Stanford.

"They came up with a very compelling architecture for AI that minimizes data movement within the chip," Annavajjhala explained. "That gives you extraordinary efficiency - both in terms of performance per dollar and performance per watt - when looking at AI workloads."

Long before the team had working hardware, though, the company focused on building its compiler to ensure that its solution could actually address its customers' needs. Only then did they finalize the chip design.

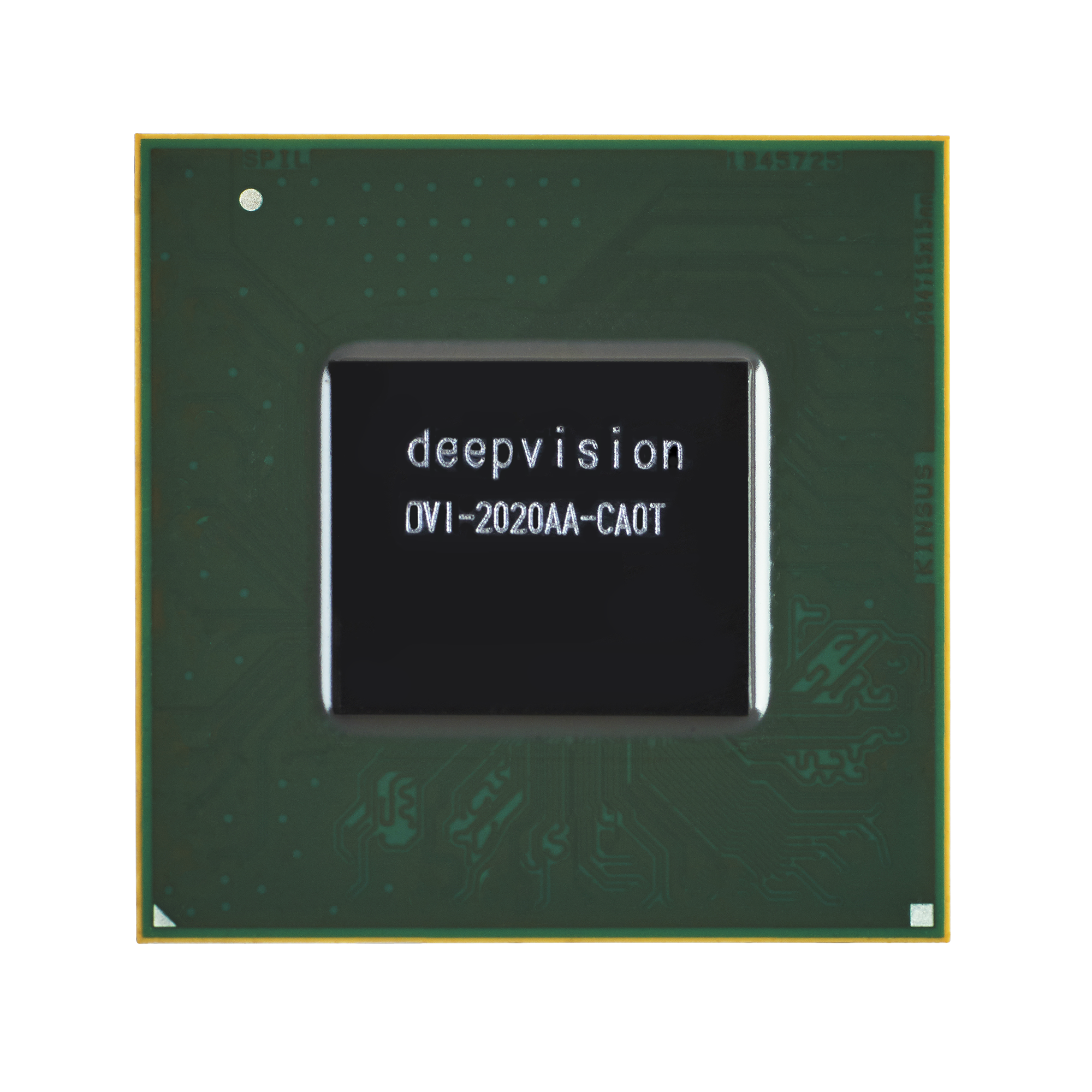

Image Credits: Deep Vision

As Hameed told me, Deep Vision's focus was always on reducing latency. While its competitors often emphasize throughput, the team believes that for edge solutions, latency is the more important metric. Architectures that focus on throughput make sense in the data center, Hameed argues, but that doesn't necessarily make them a good fit at the edge.

"[Throughput architectures] require a large number of streams being processed by the accelerator at the same time to fully utilize the hardware, whether it's through batching or pipeline execution," he explained. "That's the only way for them to get their big throughput. The result, of course, is high latency for individual tasks and that makes them a poor fit in our opinion for an edge use case where real-time performance is key."
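To make the trade-off Hameed describes concrete, here is a minimal back-of-the-envelope sketch in Python. The function names and all of the numbers (a 25 fps camera, 10 ms to process one frame, 40 ms to process a batch of eight) are illustrative assumptions, not measurements of Deep Vision's chip or any competitor's. The sketch only shows why batching can raise peak throughput while making each individual frame wait longer.

```python
def worst_case_latency_ms(batch_size, frame_interval_ms, compute_ms_per_batch):
    """Latency of the first frame in a batch from a single camera stream.

    That frame has to wait for the remaining (batch_size - 1) frames to
    arrive before the accelerator starts, then wait for the whole batch
    to be processed. Simplified model; all inputs are hypothetical.
    """
    wait_for_batch_ms = (batch_size - 1) * frame_interval_ms
    return wait_for_batch_ms + compute_ms_per_batch


def peak_throughput_fps(batch_size, compute_ms_per_batch):
    """Frames per second the accelerator could sustain if always kept busy."""
    return 1000 * batch_size / compute_ms_per_batch


# Hypothetical numbers: a 25 fps camera delivers one frame every 40 ms.
FRAME_INTERVAL_MS = 40

# Assumed compute times: 10 ms for one frame, 40 ms for a batch of eight.
for batch_size, compute_ms in [(1, 10), (8, 40)]:
    latency = worst_case_latency_ms(batch_size, FRAME_INTERVAL_MS, compute_ms)
    throughput = peak_throughput_fps(batch_size, compute_ms)
    print(f"batch={batch_size}: latency={latency} ms, peak={throughput:.0f} fps")
```

Under these assumed numbers, the batched configuration doubles peak throughput but the first frame in each batch waits far longer for its result, which is the pattern Hameed argues is a poor fit for real-time edge workloads.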

To enable this performance - and Deep Vision claims that its processor offers far lower latency than Google's Edge TPUs and Movidius' MyriadX, for example - the team is using an architecture that reduces data movement on the chip to a minimum. In addition, its software optimizes the overall data flow inside the architecture based on the specific workload.


"In our design, instead of baking in a particular acceleration strategy into the hardware, we have instead built the right programmable primitives into our own processor, which allows the software to map any type of data flow or any execution flow that you might find in a neural network graph efficiently on top of the same set of basic primitives," said Hameed.

With this, the compiler can then look at the model and figure out how to best map it on the hardware to optimize for data flow and minimize data movement. Thanks to this, the processor and compiler can also support virtually any neural network framework and optimize their models without the developers having to think about the specific hardware constraints that often make working with other chips hard.

"Every aspect of our hardware/software stack has been architected with the same two high-level goals in mind," Hameed said. "One is to minimize the data movement to drive efficiency. And then also to keep every part of the design flexible in a way where the right execution plan can be used for every type of problem."

Since its founding, the company has raised about $19 million and filed nine patents. The new chip has been sampling for a while, and even though the company already has a couple of customers, it chose to remain under the radar until now. The company obviously hopes that its unique architecture can give it an edge in this market, which is getting increasingly competitive. Besides Intel's Movidius chips (and custom chips from Google and AWS for their own clouds), there are also plenty of startups in this space, including Hailo, which raised a $60 million Series B round earlier this year and recently launched its new chips, too.
