To Really Judge an AI's Smarts, Give It One of These IQ Tests

Chess was once seen as an ultimate test of intelligence, until computers defeated humans while showing none of the other broad capabilities we associate with smarts. Artificial intelligence has since bested humans at Go, some types of poker, and many video games.

So researchers are developing AI IQ tests meant to assess deeper humanlike aspects of intelligence, such as concept learning and analogical reasoning. So far, computers have struggled on many of these tasks, which is exactly the point. The test-makers hope their challenges will highlight what's missing in AI, and guide the field toward machines that can finally think like us.

A common human IQ test is Raven's Progressive Matrices, in which one needs to complete an arrangement of nine abstract drawings by deciphering the underlying structure and selecting the missing drawing from a group of options. Neural networks have gotten pretty good at that task. But a paper presented in December at the massive AI conference known as NeurIPS offers a new challenge: The AI system must generate a fitting image from scratch, an ultimate test of understanding the pattern.

"If you are developing a computer vision system, usually it recognizes without really understanding what's in the scene," says Lior Wolf, a computer scientist at Tel Aviv University and Facebook, and the paper's senior author. "This task requires understanding composition and rules, so it's a very neat problem." The researchers also designed a neural network to tackle the task; according to human judges, it gets about 70 percent correct, leaving plenty of room for improvement.

Other tests are harder still. Another NeurIPS paper presented a software-generated dataset of so-called Bongard Problems, a classic test for humans and computers. In their version, called Bongard-LOGO, one sees a few abstract sketches that match a pattern and a few that don't, and one must decide if new sketches match the pattern.
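To make the Bongard format concrete, here is a toy Python sketch of a single episode. It assumes, purely for illustration, that each sketch has already been reduced to a set of symbolic attributes (the attribute names such as `zigzag` and `closed`, and the functions `induce_rule` and `matches`, are hypothetical). Real Bongard-LOGO problems are drawings, and breaking pixels into such parts is much of what makes them hard. Here the inferred "pattern" is just the attributes every positive example shares, rejected if a negative example also has them all. Because each query gets a yes-or-no answer, blind guessing is right half the time.

```python
from typing import FrozenSet, List, Optional

# Hypothetical encoding: each sketch is reduced to a set of symbolic stroke
# attributes. Bongard-LOGO itself supplies drawings, not attribute sets.
Sketch = FrozenSet[str]


def induce_rule(positives: List[Sketch], negatives: List[Sketch]) -> Optional[Sketch]:
    """Guess the concept as the attributes shared by every positive example
    (requires at least one positive).

    Returns None if some negative example also has all of those attributes,
    i.e. this simple conjunctive hypothesis fails to separate the groups.
    """
    rule = frozenset.intersection(*positives)
    if any(rule <= neg for neg in negatives):
        return None
    return rule


def matches(rule: Optional[Sketch], query: Sketch) -> bool:
    """A new sketch fits the pattern if it has every attribute in the rule."""
    return rule is not None and rule <= query


positives = [
    frozenset({"zigzag", "closed"}),
    frozenset({"zigzag", "open", "long"}),
    frozenset({"zigzag", "closed", "short"}),
]
negatives = [
    frozenset({"straight", "closed"}),
    frozenset({"arc", "open"}),
    frozenset({"straight", "open", "long"}),
]
rule = induce_rule(positives, negatives)
print(matches(rule, frozenset({"zigzag", "open"})))  # True
print(matches(rule, frozenset({"arc", "closed"})))   # False
```

The part-based representation is what makes the concept easy here; the point of the benchmark is that a system must discover such parts on its own.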

"The puzzles test compositionality," or the ability to break a pattern down into its component parts, which is a critical piece of intelligence, says Anima Anandkumar, a computer scientist at the California Institute of Technology and the paper's senior author. Humans got the correct answer more than 90 percent of the time, the researchers found, but state-of-the-art visual processing algorithms topped out at around 65 percent (chance is 50 percent). "That's the beauty of it," Anandkumar said of the test, "that something so simple can still be so challenging for AI." The researchers are now developing a version of the test with real images.

Compositional thinking might help machines perform in the real world. Imagine a street scene, Anandkumar says. An autonomous vehicle needs to break it down into general concepts like cars and pedestrians to predict what will happen next. Compositional thinking would also make AI more interpretable and trustworthy, she added. One might peer inside to see how it pieces evidence together.

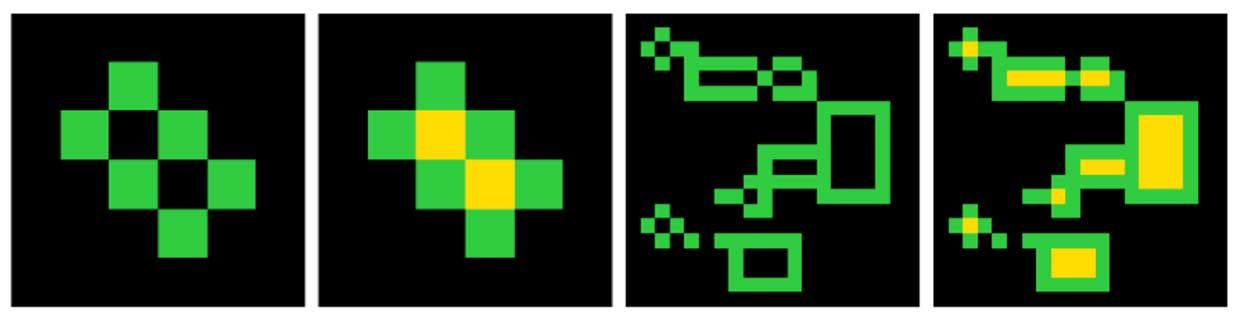

Still harder tests are out there. In 2019, François Chollet, an AI researcher at Google, created the Abstraction and Reasoning Corpus (ARC), a set of visual puzzles tapping into core human knowledge of geometry, numbers, physics, and even goal-directedness. In each puzzle, one sees one or more pairs of grids filled with colored squares, each pair a sort of before-and-after grid. One also sees a new grid and fills in its partner according to whatever rule one has inferred.
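The before-and-after structure can be sketched in a few lines of Python. This is a toy illustration, not Chollet's code or any competition entry: the `task` dictionary is only loosely modeled on how ARC tasks are laid out (training pairs plus a test input), and the five-rule library and the `solve` function are hypothetical. The solver tries each candidate rule, keeps the first one that turns every training "before" grid into its "after" grid exactly, and applies it to the new grid.

```python
from typing import Callable, Dict, List, Optional, Tuple

Grid = List[List[int]]  # each cell holds a color index (ARC uses 0-9)


def flip_h(g: Grid) -> Grid:
    """Mirror left-to-right."""
    return [row[::-1] for row in g]


def flip_v(g: Grid) -> Grid:
    """Mirror top-to-bottom."""
    return [row[:] for row in g[::-1]]


def transpose(g: Grid) -> Grid:
    """Swap rows and columns."""
    return [list(col) for col in zip(*g)]


def rotate_cw(g: Grid) -> Grid:
    """Rotate 90 degrees clockwise."""
    return [list(row) for row in zip(*g[::-1])]


# Hypothetical, tiny rule library; real ARC tasks need far richer rules.
RULES: Dict[str, Callable[[Grid], Grid]] = {
    "identity": lambda g: [row[:] for row in g],
    "flip_h": flip_h,
    "flip_v": flip_v,
    "transpose": transpose,
    "rotate_cw": rotate_cw,
}


def solve(task: dict) -> Optional[Tuple[str, Grid]]:
    """Return the first rule consistent with every before/after training
    pair, together with its output for the test grid, or None."""
    for name, rule in RULES.items():
        if all(rule(pair["input"]) == pair["output"] for pair in task["train"]):
            return name, rule(task["test"][0]["input"])
    return None


task = {
    "train": [
        {"input": [[1, 0], [2, 3]], "output": [[0, 1], [3, 2]]},
        {"input": [[5, 5, 0]], "output": [[0, 5, 5]]},
    ],
    "test": [{"input": [[4, 0, 0], [0, 7, 0]]}],
}
print(solve(task))  # ('flip_h', [[0, 0, 4], [0, 7, 0]])
```

Note that an answer counts only if the whole predicted grid matches, which is why a near-miss earns no credit. A fixed list this small would solve almost none of the real puzzles; the difficulty lies in inferring a rule nobody anticipated.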

The data-science competition platform Kaggle held a competition with the puzzles and awarded $20,000 last May to the three teams with the best-performing algorithms. The puzzles are pretty easy for humans, but the top AI barely reached 20 percent. "That's a big red flag that tells you there's something interesting there," Chollet says, "that we're missing something."

Images: Kaggle. The Kaggle competition tasked participants with creating an AI system that could solve reasoning tasks it had never seen before. Each ARC task contained a few pairs of training inputs and outputs, and a test input for which the AI needed to predict the corresponding output using the pattern learned from the training examples.

The current wave of advancement in AI is driven largely by multi-layered neural networks, also known as deep learning. But, Chollet says, these neural nets perform "abysmally" on ARC. The Kaggle winners used old-school methods that combine handwritten rules rather than learning subtle patterns from gobs of data. Still, he sees a role for both paradigms working in tandem: a neural net might translate messy perceptual data into a structured form that symbolic processing can handle.
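That division of labor can be sketched as a two-stage pipeline. In this toy Python version, `perceive` stands in for a trained neural network: instead of running a real model, it is handed made-up per-cell color probabilities of the kind a softmax layer might output, and it collapses them into a clean grid of color indices. `count_objects` is a handwritten symbolic routine that works on that grid by flood-filling connected regions of the same color. Both function names and all the numbers are hypothetical.

```python
from typing import List, Sequence

Grid = List[List[int]]


def perceive(cell_probs: Sequence[Sequence[Sequence[float]]]) -> Grid:
    """Stand-in for a neural front end: pick the most probable color index
    for each cell, turning fuzzy scores into a discrete, structured grid."""
    return [[max(range(len(p)), key=p.__getitem__) for p in row] for row in cell_probs]


def count_objects(grid: Grid, background: int = 0) -> int:
    """Symbolic back end: count 4-connected regions of one non-background color."""
    if not grid:
        return 0
    rows, cols = len(grid), len(grid[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or grid[r][c] == background:
                continue
            count += 1  # found an unvisited object; flood-fill all of it
            color = grid[r][c]
            seen[r][c] = True
            stack = [(r, c)]
            while stack:
                y, x = stack.pop()
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and grid[ny][nx] == color):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
    return count


# Made-up scores for a 2x3 image over 3 colors (0 = background).
probs = [
    [[0.9, 0.05, 0.05], [0.1, 0.8, 0.1], [0.2, 0.1, 0.7]],
    [[0.1, 0.85, 0.05], [0.6, 0.3, 0.1], [0.3, 0.1, 0.6]],
]
grid = perceive(probs)
print(grid)                 # [[0, 1, 2], [1, 0, 2]]
print(count_objects(grid))  # 3
```

The appeal of the split is that the symbolic stage never sees noisy scores, only discrete symbols it can reason about exactly, while the learned stage handles the messy perception.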

Anandkumar agrees with the need for a hybrid approach. Much of deep learning's progress now comes from making it deeper and deeper, with bigger and bigger neural nets, she says. "The scale now is so enormous that I think we'll see more work trying to do more with less."

Anandkumar and Chollet point out one misconception about intelligence: People confuse it with skill. Instead, they say, it's the ability to pick up new skills easily. That may be why deep learning so often falters. It typically requires lots of training and doesn't generalize to new tasks, whereas the Bongard and ARC problems require solving a variety of puzzles with only a few examples of each. Maybe a good test of AI IQ would be for a computer to read this article and come up with a new IQ test.