How NASA Designed a Helicopter That Could Fly Autonomously on Mars

Tucked under the belly of the Perseverance rover that will be landing on Mars in just a few days is a little helicopter called Ingenuity. Its body is the size of a box of tissues, slung underneath a pair of 1.2-meter carbon-fiber rotors on top of four spindly legs. It weighs just 1.8 kg, but the importance of its mission is massive. If everything goes according to plan, Ingenuity will become the first aircraft to fly on Mars.

In order for this to work, Ingenuity has to survive frigid temperatures, manage merciless power constraints, and attempt a series of 90-second flights while separated from Earth by 10 light-minutes, which means that real-time communication or control is impossible. To understand how NASA is making this happen, read on for our conversation with Tim Canham, Mars Helicopter Operations Lead at NASA's Jet Propulsion Laboratory (JPL).
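For a sense of scale, here is the arithmetic behind that claim, using the 10-light-minute figure above (the real Earth-Mars distance changes constantly as the planets move, so treat this as a rough snapshot). Even a single command-and-response round trip takes far longer than an entire flight:

```latex
\begin{aligned}
d &= c\,t \approx (3.0 \times 10^{5}\ \text{km/s})(600\ \text{s}) \approx 1.8 \times 10^{8}\ \text{km} \\
t_{\text{round trip}} &= 2 \times 600\ \text{s} = 1200\ \text{s} = 20\ \text{min} \approx 13 \times (90\ \text{s flight})
\end{aligned}
```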

It's important to keep the Mars Helicopter mission in context, because this is a technology demonstration. The primary goal here is to fly on Mars, full stop. Ingenuity won't be doing any of the same sort of science that the Perseverance rover is designed to do. If we're lucky, the helicopter will take a couple of in-flight pictures, but that's about it. The importance and the value of the mission is to show that flight on Mars is possible, and to collect data that will enable the next generation of Martian rotorcraft, which will be able to do more ambitious and exciting things.

Here's an animation from JPL showing the most complex mission that's planned right now:

Ingenuity isn't intended to do anything complicated because everything about the Mars helicopter itself is inherently complicated already. Flying a helicopter on Mars is incredibly challenging for a bunch of reasons, including the very thin atmosphere (just 1% the density of Earth's), the power requirements, and the communications limitations.
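To see why the thin air matters so much, here is a back-of-envelope sketch based on the standard lift relation L = ½ρv²SC_L, holding rotor area and lift coefficient fixed. It assumes Mars air at about 1% of Earth's sea-level density and Mars gravity at about 38% of Earth's; it ignores real-world effects such as blade-tip Mach limits, so it illustrates the scaling rather than reproducing JPL's rotor design numbers.

```python
import math

# Approximate ratios for illustration, not mission data.
MARS_DENSITY_RATIO = 0.01   # Mars air density / Earth sea-level air density
MARS_GRAVITY_RATIO = 0.38   # Mars surface gravity / Earth surface gravity


def required_speed_ratio(density_ratio: float, gravity_ratio: float) -> float:
    """Factor by which blade speed must increase to lift the same mass.

    From L = 0.5 * rho * v**2 * S * C_L with S and C_L held fixed, lifting a
    weight proportional to gravity requires v to scale as sqrt(g / rho).
    """
    if density_ratio <= 0 or gravity_ratio <= 0:
        raise ValueError("ratios must be positive")
    return math.sqrt(gravity_ratio / density_ratio)


if __name__ == "__main__":
    # Thin air alone: sqrt(1 / 0.01), i.e. blades about 10x faster.
    print(f"density only: {required_speed_ratio(MARS_DENSITY_RATIO, 1.0):.2f}x")
    # Lower gravity helps, since there is less weight to hold up.
    print(f"density and gravity: {required_speed_ratio(MARS_DENSITY_RATIO, MARS_GRAVITY_RATIO):.2f}x")
```

Even with Mars's weaker gravity helping, the blades would need to move roughly six times faster than they would to lift the same craft on Earth.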

With all this in mind, getting Ingenuity to Mars in one piece and having it take off and land even once is a definite victory for NASA, JPL's Tim Canham tells us. Canham helped develop the software architecture that runs Ingenuity. As the Ingenuity operations lead, he's now focused on flight planning and coordinating with the Perseverance rover team. We spoke with Canham to get a better understanding of how Ingenuity will be relying on autonomy for its upcoming flights on Mars.

IEEE Spectrum: What can you tell us about Ingenuity's hardware?

Tim Canham: Since Ingenuity is classified as a technology demo, JPL is willing to accept more risk. The main unmanned projects like rovers and deep space explorers are what's called Class B missions, in which there are many people working on ruggedized hardware and software over many years. With a technology demo, JPL is willing to try new ways of doing things. So we essentially went out and used a lot of off-the-shelf consumer hardware.

There are some avionics components that are very tough and radiation resistant, but much of the technology is commercial grade. The processor that we used, for instance, is a Snapdragon 801, which is manufactured by Qualcomm. It's essentially a cellphone-class processor, and the board is very small. But ironically, because it's relatively modern technology, it's vastly more powerful than the processors that are flying on the rover. We actually have a couple of orders of magnitude more computing power than the rover does, because we need it. Our guidance loops are running at 500 Hz in order to maintain control in the atmosphere that we're flying in. And on top of that, we're capturing images and analyzing features and tracking them from frame to frame at 30 Hz, so there's some pretty serious computing power needed for that. None of the avionics that NASA is currently flying are anywhere near powerful enough. In some cases we literally ordered parts from SparkFun [Electronics]. Our philosophy was, this is commercial hardware, but we'll test it, and if it works well, we'll use it.
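Running a 500 Hz guidance loop alongside 30 Hz image processing is a classic multirate problem. The sketch below is a hypothetical illustration, not Ingenuity's flight software: it schedules both tasks on one integer-microsecond clock and measures how stale the newest vision update is each time the controller runs. The function and constant names are invented for this example; only the two rates come from the interview.

```python
CONTROL_HZ = 500  # guidance-loop rate quoted in the interview
VISION_HZ = 30    # feature-tracking rate quoted in the interview
US_PER_S = 1_000_000


def run_multirate(duration_s: int = 1):
    """Interleave a fast control loop with a slower vision task on one clock.

    Returns (control_steps, vision_frames, max_staleness_us), where staleness
    is the age of the newest vision update whenever the controller runs.
    Integer microseconds avoid floating-point drift in the schedule.
    """
    control_period_us = US_PER_S // CONTROL_HZ
    control_steps = vision_frames = 0
    next_vision_us = 0
    last_vision_us = 0
    max_staleness_us = 0
    for tick in range(duration_s * CONTROL_HZ):
        t_us = tick * control_period_us
        if t_us >= next_vision_us:
            # A new camera frame is due: feature tracking would run here.
            vision_frames += 1
            last_vision_us = t_us
            next_vision_us = vision_frames * US_PER_S // VISION_HZ
        # The control law would run here, using the newest vision estimate.
        max_staleness_us = max(max_staleness_us, t_us - last_vision_us)
        control_steps += 1
    return control_steps, vision_frames, max_staleness_us
```

Over one simulated second this yields 500 control steps and 30 vision frames, so the controller reuses each vision estimate for 16 or 17 ticks and has to bridge the gaps with faster sensors such as the IMU.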

Can you describe what sensors Ingenuity uses for navigation?

We use a cellphone-grade IMU, a laser altimeter (from SparkFun), and a downward-pointing VGA camera for monocular feature tracking. A few dozen features are compared frame to frame to track relative position to figure out direction and speed, which is how the helicopter navigates. It's all done by estimates of position, as opposed to memorizing features or creating a map.
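A monocular camera alone sees features move in pixels, not meters, which is one reason the altimeter matters: height above the ground sets the scale. The sketch below assumes a level, downward-pointing pinhole camera over flat ground and a hypothetical focal length in pixels. It turns frame-to-frame feature shifts into ground velocity, using the median shift so that one mis-tracked feature doesn't skew the result. It is a simplified illustration; the actual flight software fuses these measurements with the IMU rather than using them directly.

```python
from statistics import median


def ground_velocity(prev_pts, curr_pts, altitude_m, focal_px, dt_s):
    """Estimate horizontal ground velocity (m/s) from tracked feature pixels.

    Assumes a level, downward-looking pinhole camera over flat ground, so a
    shift of d pixels corresponds to d * altitude_m / focal_px meters.
    """
    if len(prev_pts) != len(curr_pts) or not prev_pts:
        raise ValueError("need matching, non-empty feature lists")
    # Median pixel shift is robust to a few bad feature matches.
    dx = median(c[0] - p[0] for p, c in zip(prev_pts, curr_pts))
    dy = median(c[1] - p[1] for p, c in zip(prev_pts, curr_pts))
    meters_per_px = altitude_m / focal_px
    # Ground features appear to move opposite to the vehicle's motion.
    return (-dx * meters_per_px / dt_s, -dy * meters_per_px / dt_s)
```

For example, features that all shift 10 pixels per half second, seen from 10 m up through a camera with a 500-pixel focal length, correspond to about 0.4 m/s of ground speed.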

Photo: NASA/JPL-Caltech NASA's Ingenuity Mars helicopter viewed from below, showing its laser altimeter and navigation camera.

We also have an inclinometer that we use to establish the tilt of the ground just during takeoff, and we have a cellphone-grade 13 megapixel color camera that isn't used for navigation, but we're going to try to take some nice pictures while we're flying. It's called the RTE, because everything has to have an acronym. There was an idea of putting hazard detection in the system early on, but we didn't have the schedule to do that.
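Measuring tilt only at takeoff makes sense because a sensor at rest feels nothing but gravity, so the direction of the measured gravity vector gives the tilt directly. This sketch assumes a hypothetical three-axis reading in the body frame and that the helicopter, resting on its legs, is tilted by the same amount as the ground beneath it; the real inclinometer's output format isn't described in the interview.

```python
import math


def ground_tilt_deg(ax: float, ay: float, az: float) -> float:
    """Tilt of the body z-axis from vertical, in degrees, from a static gravity reading.

    Only valid while the vehicle is at rest, so the sensor measures gravity
    alone (about 3.71 m/s^2 on Mars) and no motion-induced acceleration.
    """
    horizontal = math.hypot(ax, ay)   # gravity component along the body's x-y plane
    return math.degrees(math.atan2(horizontal, az))
```

Once the vehicle is in flight, its own accelerations contaminate such a reading, which is consistent with using the inclinometer only before takeoff.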

In what sense is the helicopter operating autonomously?

You can almost think of the helicopter like a traditional JPL spacecraft in some ways. It has a sequencing engine on board, and we write a set of sequences, a series of commands, and we upload that file to the helicopter and it executes those commands. We plan the guidance part of the flights on the ground in simulation as a series of waypoints, and those waypoints are the sequence of commands that we send to the guidance software. When we want the helicopter to fly, we tell it to go, and the guidance software takes over and executes taking off, traversing to the different waypoints, and then landing.
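The sequencing idea can be pictured as an ordered list of commands that the vehicle executes exactly as written. The sketch below is purely illustrative: the `Command` type and the `TAKEOFF`, `GOTO`, and `LAND` opcodes are hypothetical stand-ins, not JPL's actual command dictionary. It also shows the conservative spirit of the design, since an out-of-order command is rejected rather than improvised around.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Command:
    op: str  # hypothetical opcodes: "TAKEOFF", "GOTO", or "LAND"
    target: Tuple[float, float, float] = (0.0, 0.0, 0.0)  # x, y, altitude (m)


def execute_sequence(commands: List[Command]) -> List[str]:
    """Run a pre-planned command sequence in order and return an event log."""
    log: List[str] = []
    airborne = False
    for cmd in commands:
        if cmd.op == "TAKEOFF" and not airborne:
            airborne = True
            log.append(f"takeoff to {cmd.target[2]:.1f} m")
        elif cmd.op == "GOTO" and airborne:
            log.append("waypoint ({:.1f}, {:.1f}, {:.1f})".format(*cmd.target))
        elif cmd.op == "LAND" and airborne:
            airborne = False
            log.append("land")
        else:
            # The plan was built on the ground; anything unexpected is an error.
            raise ValueError(f"invalid command {cmd.op!r} (airborne={airborne})")
    return log
```

A simple hop might be `[Command("TAKEOFF", (0.0, 0.0, 3.0)), Command("GOTO", (2.0, 0.0, 3.0)), Command("LAND")]`, planned and checked in simulation before upload.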

This means the flights are pre-planned very specifically. It's not true autonomy, in the sense that we don't give it goals and rules and it's not doing any on-board high-level reasoning. It's sort of half-way autonomy. The brute force way would be a human sitting there and flying it around with joysticks, and obviously we can't do that on Mars. But there wasn't time in the project to develop really detailed autonomy on the helicopter, so we tell it the flight plan ahead of time, and it executes a trajectory that's been pre-planned for it. As it's flying, it's autonomously trying to make sure it stays on that trajectory in the presence of wind gusts or other things that may happen in that environment. But it's really designed to follow a trajectory that we plan on the ground before it flies.
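Staying on a pre-planned trajectory despite gusts is a feedback-control job. As a hedged illustration (not Ingenuity's actual controller, whose design isn't described here), the sketch below runs a proportional-derivative controller at 500 Hz on a one-dimensional point mass following a constant-velocity path while a hypothetical gust pushes it for one second. The gains are invented and chosen to be critically damped (kp = ω², kd = 2ω with ω = 4 rad/s).

```python
def simulate_tracking(duration_s=5.0, rate_hz=500, kp=16.0, kd=8.0,
                      gust_accel=0.5, gust_start=1.0, gust_end=2.0, v_ref=1.0):
    """Follow x_ref(t) = v_ref * t with PD control under a temporary gust.

    Returns (max_abs_error_m, final_abs_error_m). Gains and gust values are
    hypothetical; the vehicle is modeled as a 1-D double integrator.
    """
    dt = 1.0 / rate_hz
    x, v = 0.0, v_ref          # start exactly on the planned trajectory
    max_err = 0.0
    for k in range(int(duration_s * rate_hz)):
        t = k * dt
        err = v_ref * t - x    # position error relative to the plan
        max_err = max(max_err, abs(err))
        u = kp * err + kd * (v_ref - v)              # commanded acceleration
        gust = gust_accel if gust_start <= t < gust_end else 0.0
        v += (u + gust) * dt   # semi-implicit Euler integration
        x += v * dt
    return max_err, abs(v_ref * duration_s - x)
```

With these gains the gust displaces the vehicle by at most about gust_accel / kp, roughly 3 cm, and the error decays once the gust ends; setting `kp=0.0, kd=0.0` shows the same gust leaving the vehicle more than a meter off course.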

This isn't necessarily an advanced autonomy proof of concept. Something like "telling it to go take a picture of that rock" would be more advanced autonomy, in my view. This, by contrast, is really a scripted flight: the primary goal is to prove that we can fly around on Mars successfully. There are future mission concepts that we're working through now that would involve a bigger helicopter with much more autonomy on board that may be able to [achieve] that kind of advanced autonomy. But if you remember Mars Pathfinder, whose Sojourner rover was the very first rover to drive on Mars, it had a very basic mission: drive in a circle around the base station and try to take some pictures and samples of some rocks. So, as a technology demo, we're trying to be modest about what we try to do the first time with the helicopter, too.

Is there any situation where something might cause the helicopter to decide to deviate from its pre-planned trajectory?

The guidance software is always making sure that all the sensors are healthy and producing good data. If a sensor goes wonky, the helicopter really has one response, which is to take the last propagated state and just try to land and then tell us what happened and wait for us to deal with it. The helicopter won't try to continue its flight if a sensor fails. All three sensors that we use during flight are necessary to complete the flight because of how their data is fused together.
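The fault response described here boils down to a small, latched decision: if any of the three flight sensors is unhealthy, stop following the plan and land. The sketch below captures only that mode logic; the sensor names and the `Mode` enum are hypothetical, and the actual landing from the last propagated state is left out.

```python
from enum import Enum, auto
from typing import Dict, Optional, Tuple


class Mode(Enum):
    FOLLOW_TRAJECTORY = auto()
    EMERGENCY_LAND = auto()


# Hypothetical names for the three sensors the interview says are all required.
REQUIRED_SENSORS = ("imu", "altimeter", "nav_camera")


def next_mode(mode: Mode, health: Dict[str, bool]) -> Tuple[Mode, Optional[str]]:
    """Return (new_mode, failed_sensor); failed_sensor is None if nothing new failed."""
    if mode is Mode.EMERGENCY_LAND:
        # Latched: the vehicle never resumes the flight on its own.
        return mode, None
    for name in REQUIRED_SENSORS:
        # A missing health report is treated the same as a failure.
        if not health.get(name, False):
            return Mode.EMERGENCY_LAND, name
    return mode, None
```

Latching the landing mode mirrors the "land, then tell us what happened and wait" behavior: recovery is a decision for the team on the ground.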

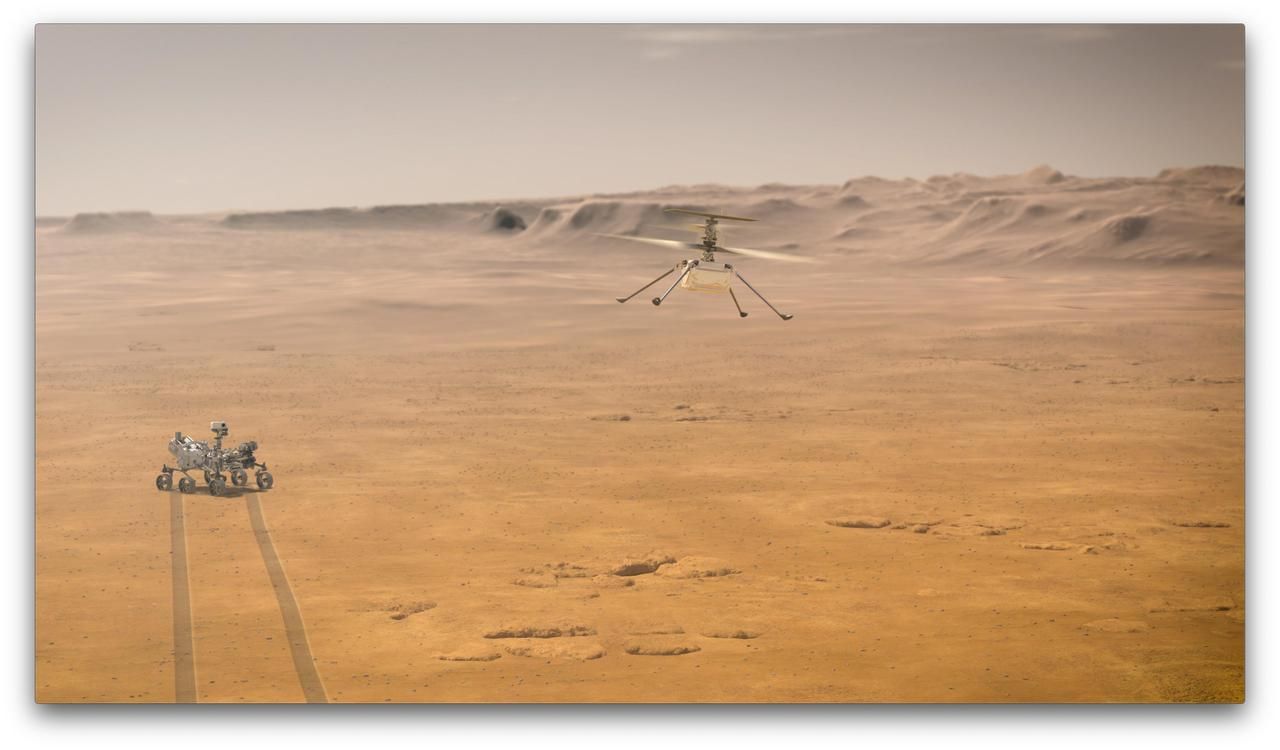

Illustration: NASA/JPL-Caltech An artist's illustration of Ingenuity flying on Mars.

How will you decide where to fly?

We'll be doing what we're calling a site selection process, and that's even starting now from orbital images of where we anticipate the rover is going to land. Orbital images are the coarse way of identifying potential sites, and then the rover will go to one of those sites and do a very extensive survey of the area. Based on the rockiness, the slope, and even how textured the area is for feature tracking, we'll select a site for the helicopter to operate in. There are some tradeoffs, because the safest surface is one that's featureless, with no rocks, but that's also the worst surface to do feature tracking on, so we have to find a balance that might include a bunch of little rocks that make good features to track but no big rocks that might make it more difficult to land.
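The tradeoff Canham describes can be framed as hard safety limits followed by a preference for trackable texture. The sketch below is a hypothetical illustration of that framing; the `Site` fields, thresholds, and scoring are invented for the example and are not the mission's actual site-selection criteria.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Site:
    name: str
    slope_deg: float        # ground slope from the rover's survey
    largest_rock_m: float   # size of the biggest rock in the area
    texture_score: float    # 0..1, how many trackable features the surface offers


# Hypothetical limits, for illustration only.
MAX_SLOPE_DEG = 10.0
MAX_ROCK_M = 0.05
MIN_TEXTURE = 0.3


def pick_site(sites: List[Site]) -> Optional[Site]:
    """Reject unsafe or featureless sites, then prefer the best-textured, flattest one."""
    candidates = [
        s for s in sites
        if s.slope_deg <= MAX_SLOPE_DEG
        and s.largest_rock_m <= MAX_ROCK_M   # no big rocks to land on
        and s.texture_score >= MIN_TEXTURE   # enough features to navigate
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda s: (s.texture_score, -s.slope_deg))
```

Treating safety as a filter rather than a weighted score means no amount of good texture can compensate for a landing-sized rock, which matches the balance described above: plenty of small rocks, no big ones.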

What kind of flights are you hoping the robot will make?

Because we're trying this out for the first time, we have three main flights planned, and all three of them have the helicopter landing in the same spot that it took off from, because we know we'll have a surveyed safe area. We have a limited 30 day window, and if we have the time, then we might try to land it in a different area that looks safe from a distance. But the first three canonical flights are all going to be takeoff, fly, and then come back and land in the same spot.

JPL has a history of building robots that are able to remain functional long after their primary mission is over. With only a 30 day mission, does that mean that barring some kind of accident, the rover will end up just driving away from a perfectly functional Mars helicopter?

Yeah, that's the plan, because the rover has to get on with its primary mission. And it does consume resources to support us. And so they gave us this 30 day window, which we're very grateful for of course, and then they're moving on, whether we're still working or not. Whatever wild and crazy stuff we want to do, we'll have to do within our 30 days. We don't actually have the final two flights planned yet. Depending on how quickly the first three go, we may have a week or so to try some more exotic things. But we're really concentrating on those first three flights.

Our ultimate success criterion is a single flight, so if we get that first flight, we're going to be doing high fives. The next two flights are going to be stretching that envelope a little bit. And then the final two flights are, hey, let's see how adventurous we can get. We might fly off a hundred meters, or do a big circle or something like that. But the whole point is understanding how it flies, and that means doing our first flight and seeing how well it performs.

Let's say everything goes great on your first four flights and you have one flight left. Would you rather try something really adventurous that might not work, or something a little safer that's more likely to work but that wouldn't teach you quite as much?

That's a good question, and we'll have to figure that out. If we have one flight left and they're going to leave us behind anyway, maybe we could try something bold. But we haven't really gotten that far yet. We're really concentrating on those first three flights, and everything after that is a bonus.

Anything else you can share with us that engineers might find particularly interesting?

This is the first time we'll be flying Linux on Mars. We're actually running on a Linux operating system. The software framework that we're using is one that we developed at JPL for cubesats and instruments, and we open-sourced it a few years ago. So, you can get the software framework that's flying on the Mars helicopter, and use it on your own project. It's kind of an open-source victory, because we're flying an open-source operating system and an open-source flight software framework and flying commercial parts that you can buy off the shelf if you wanted to do this yourself someday. This is a new thing for JPL because they tend to like what's very safe and proven, but a lot of people are very excited about it, and we're really looking forward to doing it.