Programming by Voice May Be the Next Frontier in Software Development

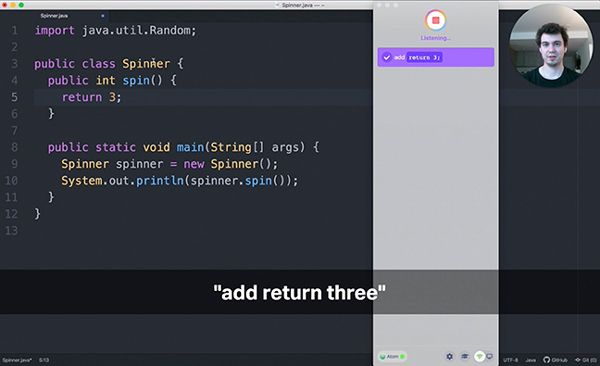

Images: Serenade. From Voice to Code: Two of the leading programming-by-speech platforms today offer different approaches to the problem of reciting code to a computer. One, Serenade, acts a little like a digital assistant, allowing you to describe the commands you're encoding without requiring you to dictate each instruction word for word. Another, Talon, provides more granular control over each line, which also demands a slightly more detail-oriented grasp of each task being programmed into the machine. A simple example, below, is a step-by-step guide, in Serenade and in Talon, to generating the Python code needed to print the word "hello" onscreen.

Increasingly, we're interacting with our gadgets by talking to them. Old friends like Alexa and Siri are now being joined by automotive assistants like Apple CarPlay and Android Auto, and even apps sensitive to voice biometrics and commands. But what if the technology itself could be built using voice?

That's the premise behind voice coding, an approach to developing software using voice instead of a keyboard and mouse to write code. Through voice-coding platforms, programmers utter commands to manipulate code and create custom commands that cater to and automate their workflows.

Voice coding isn't as simple as it seems, with layers of complex technology behind it. The voice-coding app Serenade, for instance, has a speech-to-text engine developed specifically for code, unlike Google's speech-to-text API, which is designed for conversational speech. Once a software engineer speaks the code, Serenade's engine feeds that into its natural-language processing layer, whose machine-learning models are trained to identify and translate common programming constructs to syntactically valid code.

Serenade, which raised $2.1 million in a seed funding round in 2020, was born out of necessity when cofounder Matt Wiethoff was diagnosed with a repetitive strain injury in 2019. "I left my job as a software engineer at Quora because I couldn't do the work anymore," he says. "It was either pick a different career that didn't require this much typing or figure out some sort of solution."

This was the same path Ryan Hileman embarked upon, leaving his full-time job as a software engineer in 2017 after developing severe hand pain a year earlier. It was then that Hileman started building Talon, a hands-free coding platform. "The point of Talon is to completely replace the keyboard and mouse for anyone," he says.

Talon has three main components: speech recognition, eye tracking, and noise recognition. Talon's speech-recognition engine is based on Facebook's Wav2letter automatic speech-recognition system, which Hileman extended to accommodate commands for voice coding. Meanwhile, Talon's eye-tracking and noise-recognition capabilities simulate navigating with a mouse, moving a cursor around the screen based on eye movements and making clicks based on mouth pops. "That sound is easy to make. It's low effort and takes low latency to recognize, so it's a much faster, nonverbal way of clicking the mouse that doesn't cause vocal strain," Hileman says.

Coding with Talon sounds like speaking another language, as software engineer and voice coder Emily Shea demonstrates in a conference talk she delivered in 2019. Her video is filled with voice commands like "slap" (hit return), "undo" (delete), "spring 3" (go to third line of file), and "phrase name op equals snake extract word paren mad" (which results in this line of code: name = extract_word(m)).
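To make that last command less mysterious, here is a minimal sketch of how such an utterance could be expanded word by word. It is a toy written for this article's example only, not Talon's actual grammar or API: the command table, the `transcribe` function, and the reading of "mad" as the letter m and "paren" as a pair of parentheses with the cursor left inside are all assumptions inferred from the single example above.

```python
# Toy command table, loosely modeled on the one example in the article.
# This is NOT Talon's real grammar; every name here is hypothetical.
LETTERS = {"mad": "m"}          # spoken alphabet word -> letter
OPERATORS = {"equals": " = "}   # spoken operator name -> text
KEYWORDS = {"phrase", "op", "snake", "paren"} | set(LETTERS)


def take_words(tokens, start):
    """Collect plain words from `start` until the next command keyword."""
    end = start
    while end < len(tokens) and tokens[end] not in KEYWORDS:
        end += 1
    return tokens[start:end], end


def transcribe(utterance):
    """Expand a spoken command string into one line of code."""
    tokens = utterance.split()
    left, right = "", ""  # text before and after the cursor
    i = 0
    while i < len(tokens):
        tok = tokens[i]
        if tok == "phrase":      # insert the following words as plain text
            words, i = take_words(tokens, i + 1)
            left += " ".join(words)
        elif tok == "snake":     # join the following words in snake_case
            words, i = take_words(tokens, i + 1)
            left += "_".join(words)
        elif tok == "op":        # the next word names an operator
            left += OPERATORS[tokens[i + 1]]
            i += 2
        elif tok == "paren":     # open a pair, leave the cursor inside it
            left += "("
            right = ")" + right
            i += 1
        elif tok in LETTERS:     # spell a single letter
            left += LETTERS[tok]
            i += 1
        else:
            raise ValueError(f"unrecognized command: {tok!r}")
    return left + right


print(transcribe("phrase name op equals snake extract word paren mad"))
# prints: name = extract_word(m)
```

The sketch shows why this style gives fine-grained control: each spoken word maps to one small, predictable edit, so the speaker has to spell out formatting and punctuation explicitly.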

On the other hand, coding with Serenade follows a more natural way to speak code. You can say "delete import" to delete the import instruction at the top of a file or "build" to run a custom build command. You can also say "add function factorial" to create a function that computes a factorial in JavaScript, for example, and the app takes care of the syntax, including the "function" keyword, parentheses, and curly brackets, so you don't have to explicitly state each element.
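The contrast can be sketched in a few lines of Python that turn a descriptive command into a JavaScript skeleton. This is only an illustration of the idea of filling in syntax on the speaker's behalf. Serenade itself relies on machine-learning models rather than a fixed template, and the `add_construct` function, the template, and the camelCase naming rule below are all hypothetical.

```python
import re

# Hypothetical template: spoken construct -> JavaScript skeleton.
# Doubled braces are literal braces in str.format.
TEMPLATES = {
    "function": "function {name}() {{\n}}",
}


def add_construct(command):
    """Turn a command like 'add function factorial' into a code skeleton."""
    match = re.fullmatch(r"add (\w+) (.+)", command.strip())
    if match is None or match.group(1) not in TEMPLATES:
        raise ValueError(f"unsupported command: {command!r}")
    kind, spoken_name = match.groups()
    words = spoken_name.split()
    # Assumed convention: join a multiword name in camelCase.
    name = words[0] + "".join(word.capitalize() for word in words[1:])
    return TEMPLATES[kind].format(name=name)


print(add_construct("add function factorial"))
# prints:
# function factorial() {
# }
```

The speaker names only the construct and its identifier; the keyword, parentheses, and curly brackets come from the template.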

Illustration: IEEE Spectrum

Voice coding does require a decent microphone, especially if you want to eliminate background noise, though Serenade's models are trained on audio produced by laptop microphones. You'll also need eye-tracking hardware if you want to run Talon with eye tracking. (Talon does, however, run fine without it.) Open-source voice-coding platforms such as Aenea and Caster are free, but both rely on the Dragon speech-recognition engine, which users will have to purchase themselves. That said, Caster offers support for Kaldi, an open-source speech-recognition tool kit, and Windows Speech Recognition, which comes preinstalled in Windows.

The results, says Serenade Labs cofounder Tommy MacWilliam, speak for themselves. "Being able to describe what you want to do is so much easier," he says. "It's more fluid to say 'move these three lines down' or 'duplicate this method' as opposed to typing it out or pressing keyboard shortcuts."

Voice coding also allows those with injuries or chronic pain conditions to continue their careers. "Being able to use voice and just remove my arms from the equation opened up a much less restrictive way to use my computer," Shea says.

Coding with voice could also lower barriers of entry to software development. "If they can think about the code they want to write in a logical and structured way," says MacWilliam, "then we can have machine learning take the last mile and translate those thoughts into syntactically valid code."

Voice coding is still in its infancy, and its potential to gain widespread adoption depends on how tied software engineers are to the traditional keyboard-and-mouse model of writing code. But voice coding opens up possibilities, maybe even a future where brain-computer interfaces directly transform what you're thinking into code, or into software itself.

This article appears in the April 2021 print issue as "Speaking In Code."

About the Author

Rina Diane Caballar is a journalist and former software engineer based in Wellington, New Zealand.