Deep Science: Introspective, detail-oriented and disaster-chasing AIs

Research papers come out far too frequently for anyone to read them all. That's especially true in the field of machine learning, which now affects (and produces papers in) practically every industry and company. This column aims to collect some of the most relevant recent discoveries and papers - particularly in, but not limited to, artificial intelligence - and explain why they matter.

It takes an emotionally mature AI to admit its own mistakes, and that's exactly what this project from the Technical University of Munich aims to create. Maybe not the emotion, exactly, but recognizing and learning from mistakes, specifically in self-driving cars. The researchers propose a system in which the car would look at all the times in the past when it has had to relinquish control to a human driver and thereby learn its own limitations, what they call "introspective failure prediction."

For instance, if there are a lot of cars ahead, the autonomous vehicle's brain could use its sensors and logic to work out from scratch whether any of its possible approaches will succeed. But the TUM team says that by simply comparing new situations to old ones, the car can decide much faster whether it will need to disengage. Saving six or seven seconds here could make all the difference for a safe handover.
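To make the "compare new situations to old ones" idea concrete, here is a minimal sketch. It is not the TUM team's method: it assumes each past situation has been boiled down to a small feature vector (the features `cars_ahead` and `speed_kmh` and all the data below are invented for illustration) and labeled with whether the car had to hand over control. A new situation is then judged by a majority vote among its most similar past cases.

```python
import math
from collections import Counter

# Hypothetical log of past situations: ((cars_ahead, speed_kmh), disengaged?)
# Both the features and the values are made up for this sketch.
PAST_SITUATIONS = [
    ((1, 50.0), False),
    ((2, 60.0), False),
    ((3, 45.0), False),
    ((8, 30.0), True),
    ((9, 25.0), True),
    ((10, 20.0), True),
]

def predict_disengagement(features, history=PAST_SITUATIONS, k=3):
    """Return True if most of the k most similar past situations ended in a handover."""
    # Rank past situations by Euclidean distance to the new one and keep the k closest.
    nearest = sorted(history, key=lambda item: math.dist(item[0], features))[:k]
    votes = Counter(label for _, label in nearest)
    return votes[True] > votes[False]
```

A lookup like this is cheap compared with planning from scratch, which is the kind of speedup the paragraph above describes. A real system would presumably use far richer inputs than two hand-picked numbers.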

It's important for robots and autonomous vehicles of all types to be able to make decisions without phoning home, especially in combat, where decisive and concise movements are necessary. The Army Research Lab is looking into ways in which ground and air vehicles can interact autonomously, allowing, for instance, a mobile landing pad that drones can land on without needing to coordinate, ask permission or rely on precise GPS signals.

Their solution, at least for the purposes of testing, is actually rather low tech. The ground vehicle has a landing area on top painted with an enormous QR code, which the drone can see from a fairly long way off. The drone can track the exact location of the pad totally independently. In the future, the QR code could be done away with and the drone could identify the shape of the vehicle instead, presumably using some best-guess logic to determine whether it's the one it wants.

Image Credits: Nagoya City University

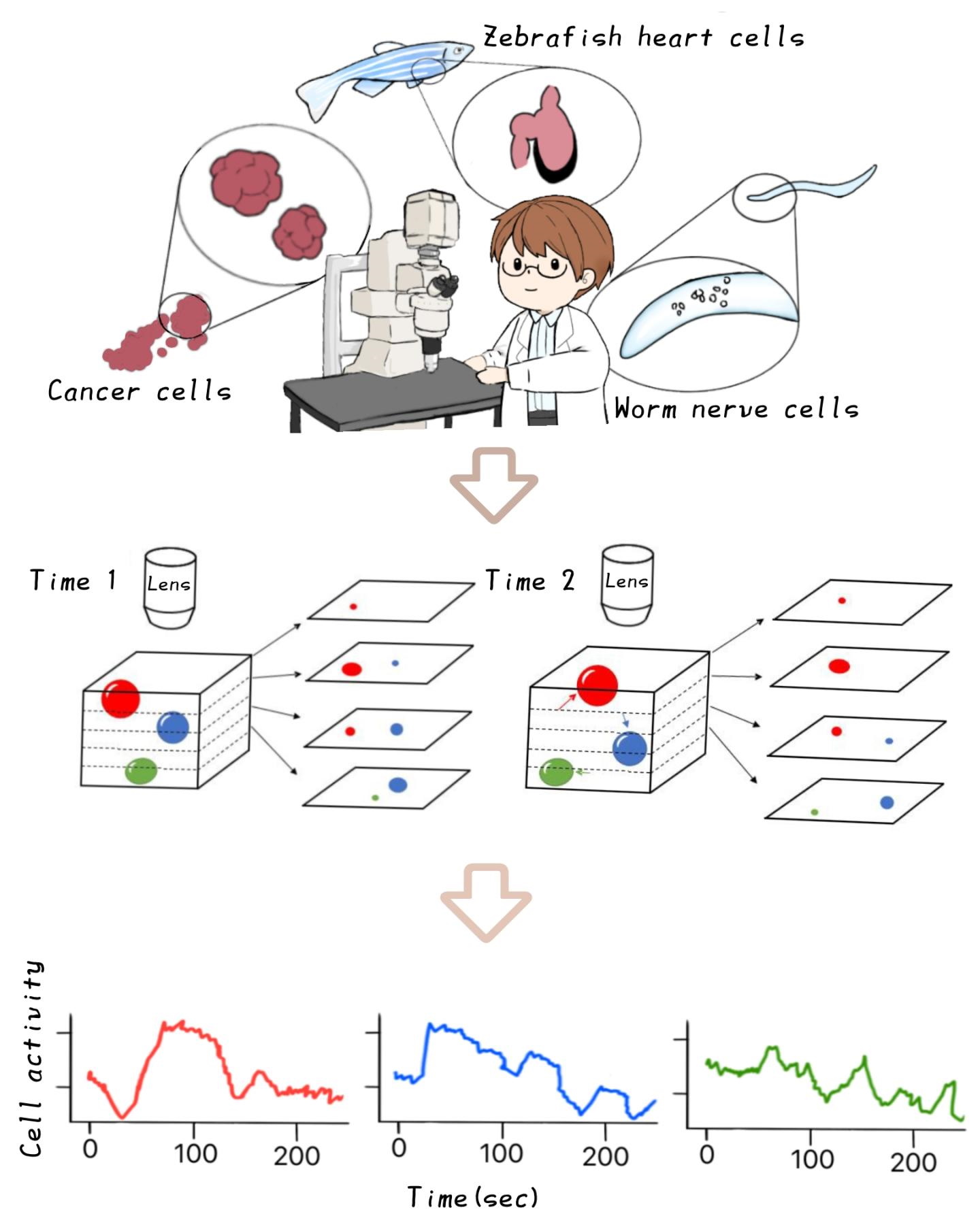

In the medical world, AI is being put to work on tasks that are not so much difficult as tedious for people to do. A good example of this is tracking the activity of individual cells in microscopy images. It's not a superhuman task to look at a few hundred frames spanning several depths of a petri dish and track the movements of cells, but that doesn't mean grad students like doing it.

This software from researchers at Nagoya City University in Japan does it automatically using image analysis and the capability (much improved in recent years) of understanding objects over a period of time rather than just in individual frames. Read the paper here, and check out the extremely cute illustration showing off the tech above. More research organizations should hire professional artists.
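As a rough illustration of what following objects over time involves, here is a minimal sketch of frame-to-frame linking. It is not the Nagoya City University software: it assumes the cells have already been detected in each frame as (x, y) centroids, and it greedily connects each track to the nearest detection in the next frame. The function name `link_cells` and the `max_jump` threshold are hypothetical.

```python
import math

def link_cells(frames, max_jump=5.0):
    """Greedily link cell centroids across frames into tracks.

    frames: list of frames, each a list of (x, y) centroids.
    Returns a list of tracks, each a list of (frame_index, (x, y)).
    """
    tracks = [[(0, c)] for c in frames[0]] if frames else []
    for t in range(1, len(frames)):
        unclaimed = list(frames[t])
        for track in tracks:
            last_t, last_pos = track[-1]
            if last_t != t - 1 or not unclaimed:
                continue  # track already ended, or nothing left to claim
            nearest = min(unclaimed, key=lambda c: math.dist(c, last_pos))
            # Only extend the track if the cell moved a plausible distance.
            if math.dist(nearest, last_pos) <= max_jump:
                track.append((t, nearest))
                unclaimed.remove(nearest)
        # Any detection not claimed by an existing track starts a new one.
        tracks.extend([(t, c)] for c in unclaimed)
    return tracks
```

Greedy nearest-neighbor linking breaks down when cells crowd together, divide or cross paths, which is part of why tracking that reasons over whole sequences is the harder and more useful problem.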

This process is similar to that of tracking moles and other skin features on people at risk for melanoma. While they might see a dermatologist every year or so to find out whether a given spot seems sketchy, the rest of the time they must track their own moles and freckles in other ways. That's hard when the spots are in places like the middle of the back.