Instagram to default young teens to private accounts, restrict ads and unwanted adult contact

As it gears up to expand access to younger users, Instagram this morning announced a series of updates designed to make its app a safer place for online teens. The company says it will now default users to private accounts at sign-up if they're under the age of 16 - or under 18 in certain locales, including in the E.U. It will also push existing users under 16 to switch their account to private, if they have not already done so. In addition, Instagram will roll out new technology aimed at reducing unwanted contact from adults - like those who have already been blocked or reported by other teens - and it will change how advertisers can reach its teenage audience.

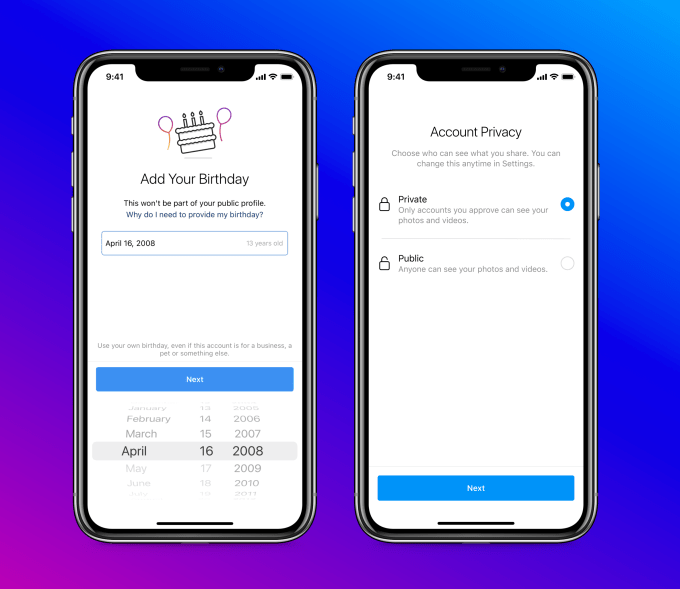

The most visible change for younger users will be the shift to private accounts.

Historically, when users signed up for a new Instagram account, they were asked to choose between a public or private account. But Instagram says that its research found that 8 out of 10 young people selected the "private" option during setup, so it will now make this the default for those under the age of 16.

Image Credits: Instagram

It won't, however, force teens to remain private. They can switch to public accounts at any time, including during signup. Those with existing public accounts will be alerted to the benefits of going private and be instructed on how to make the change through an in-app notification, but Instagram will not force them to go private, it says.

This change follows a similar move by rival platform TikTok, which this January announced it would update the privacy settings and defaults for users under the age of 18. In TikTok's case, it changed the accounts for users ages 13 to 15 to private by default but also tightened other controls related to how young teens use the app - with comments, video downloads, and other TikTok features, like Duets and Stitches.


Instagram isn't going so far as to restrict other settings beyond suggesting teens' default account type, but it is taking action to address some of the problems that result from having adults participate on the same app that minors use.

The company says it will use new technology to identify accounts that have shown "potentially suspicious behavior" - like those that have been recently blocked or reported by other young teens. This is only one of many signals Instagram uses to identify suspicious behavior, but the company says it won't publicize the others, as it doesn't want people to be able to game its system.

Once identified as "potentially suspicious," Instagram will then restrict these adults' accounts from being able to interact with young people's accounts.

For starters, Instagram will no longer show young people's accounts in Explore, Reels or in the "Accounts Suggested For You" feature to these potentially suspicious adults. If the adult instead locates a young person's account by way of a search, they won't be able to follow them. And they won't be able to see comments from young people on other people's posts or be able to leave comments of their own on young people's posts.

(Any teens planning to report and block their parents probably won't trigger the algorithm, Instagram tells us, as it uses a combination of signals to trigger its restrictions.)

These new restrictions build on the technology Instagram introduced earlier this year, which restricted the ability for adults to contact teens who didn't already follow them. This made it possible for teens to still interact with their family and family friends, while limiting unwanted contact from adults they didn't know.


Cutting off problematic adults from young teens' content like this actually goes further than what's available on other social networks, like TikTok or YouTube, where there are often disturbing comments left on videos of young people - in many cases, girls who are being sexualized and harassed by adult men. YouTube's comments section was even once home to a pedophile ring, which pushed YouTube to entirely disable comments on videos featuring minor children.

Instagram isn't blocking the comments section in full - it's more selectively seeking out the bad actors, then making content created by minors much harder for them to find in the first place.

The other major change rolling out in the next few weeks impacts advertisers looking to target ads to teens under 18 (or older in certain countries).


Previously available targeting options - like those based on teens' interests or activity on other apps or websites - will no longer be available to advertisers. Instead, advertisers will only be able to target based on age, gender and location. This will go into effect across Instagram, Facebook and Messenger.

The company says the new restrictions were influenced by recommendations from youth advocates, who said younger people may not be as well-equipped to make decisions related to opting out of interest-based advertising.

In reality, however, Facebook's billion-dollar interest-based ad network has been under attack by regulators and competitors alike, and the company has been working to diversify its revenue beyond ads to include things like e-commerce with the expectation that potential changes to its business are around the corner.

In a recent iOS update, for example, Apple restricted the ability for Facebook to collect data from third-party apps by asking users if they wanted to opt out of being tracked. Most people said "no" to tracking. Meanwhile, attacks on the personalized ad industry have included those from advocacy groups who have argued that tech companies should turn off personalized ads for those under 18 - not just the under-13 crowd, who are already protected under current children's privacy laws.

At the same time, Instagram has been toying with the idea of opening its app up to kids under the age of 13, and today's series of changes could help to demonstrate to regulators that it's moving forward with the safety of young people in mind, or so the company hopes.


On this front, Instagram says it has expanded its "Youth Advisors" group to include new experts like Jutta Croll at Stiftung Digitale Chancen, Pattie Gonsalves at Sangath and It's Okay To Talk, Vicki Shotbolt at ParentZone UK, Alfiee M. Breland-Noble at AAKOMA Project, Rachel Rodgers at Northeastern University, Janis Whitlock at Cornell University, and Amelia Vance at the Future of Privacy Forum.

The group also includes the Family Online Safety Institute, Digital Wellness Lab, MediaSmarts, Project Rockit and the Cyberbullying Research Center.

It's also working with lawmakers on age verification and parental consent standards that it expects to talk more about in the months to come. In a related announcement, Instagram said it's using A.I. technology that estimates people's ages. It can look for signals like people wishing someone a "happy birthday" or "happy quinceanera," which can help narrow down someone's age, for instance. This technology is already being used to stop some adults from interacting with young people's accounts, including the new restrictions announced today.