New Apple technology will warn parents and children about sexually explicit photos in Messages

Apple later this year will roll out new tools that will warn children and parents if the child sends or receives sexually explicit photos through the Messages app. The feature is part of a handful of new technologies Apple is introducing that aim to limit the spread of Child Sexual Abuse Material (CSAM) across Apple's platforms and services.

As part of these developments, Apple will be able to detect known CSAM images on its mobile devices, like iPhone and iPad, and in photos uploaded to iCloud, while still respecting consumer privacy.

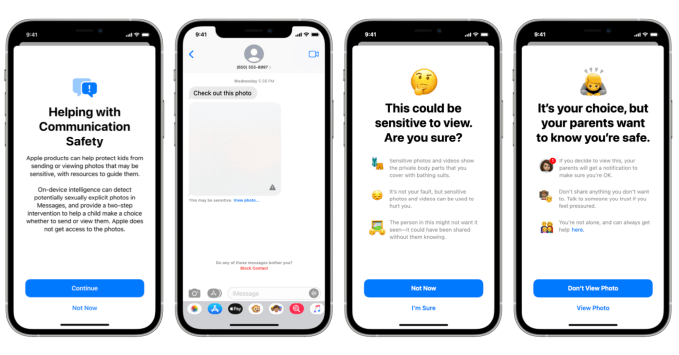

The new Messages feature, meanwhile, is meant to enable parents to play a more active and informed role when it comes to helping their children learn to navigate online communication. Through a software update rolling out later this year, Messages will be able to use on-device machine learning to analyze image attachments and determine if a photo being shared is sexually explicit. This technology does not require Apple to access or read the child's private communications, as all the processing happens on the device. Nothing is passed back to Apple's servers in the cloud.

If a sensitive photo is discovered in a message thread, the image will be blocked and a label will appear below the photo that states, "this may be sensitive" with a link to click to view the photo. If the child chooses to view the photo, another screen appears with more information. Here, a message informs the child that sensitive photos and videos "show the private body parts that you cover with bathing suits" and "it's not your fault, but sensitive photos and videos can be used to harm you."

It also suggests that the person in the photo or video may not want it to be seen and it could have been shared without their knowing.

Image Credits: Apple

These warnings aim to help guide the child to make the right decision by choosing not to view the content.

However, if the child clicks through to view the photo anyway, they'll then be shown an additional screen that informs them that if they choose to view the photo, their parents will be notified. The screen also explains that their parents want them to be safe and suggests that the child talk to someone if they feel pressured. It offers a link to more resources for getting help, as well.

There's still an option at the bottom of the screen to view the photo, but again, it's not the default choice. Instead, the screen is designed so that the option to not view the photo is highlighted.

These types of features could help protect children from sexual predators, not only by introducing technology that interrupts the communications and offers advice and resources, but also because the system will alert parents. In many cases where a child is hurt by a predator, parents didn't even realize the child had begun to talk to that person online or by phone. This is because child predators are very manipulative and will attempt to gain the child's trust, then isolate the child from their parents so they'll keep the communications a secret. In other cases, the predators have groomed the parents, too.

Apple's technology could help in both cases by intervening, identifying explicit material being shared and alerting parents to it.

However, a growing amount of CSAM is what's known as self-generated CSAM, or imagery that is taken by the child, which may then be shared consensually with the child's partner or peers. In other words, sexting or sharing "nudes." According to a 2019 survey from Thorn, a company developing technology to fight the sexual exploitation of children, this practice has become so common that 1 in 5 girls ages 13 to 17 said they have shared their own nudes, and 1 in 10 boys have done the same. But the child may not fully understand how sharing that imagery puts them at risk of sexual abuse and exploitation.

The new Messages feature will offer a similar set of protections here, too. In this case, if a child attempts to send an explicit photo, they'll be warned before the photo is sent. Parents can also receive a message if the child chooses to send the photo anyway.

Apple says the new technology will arrive as part of a software update later this year to accounts set up as families in iCloud for iOS 15, iPadOS 15, and macOS Monterey in the U.S.

The release will also include updates to Siri and Search that will offer expanded guidance and resources to help children and parents stay safe online and get help in unsafe situations. For example, users will be able to ask Siri how to report CSAM or child exploitation. Siri and Search will also intervene when users perform searches related to CSAM to explain that the topic is harmful and provide resources to get help.