12 Graphs That Explain the State of AI in 2022

Every year, the Stanford Institute for Human-Centered Artificial Intelligence (HAI) puts out its AI Index, a massive compendium of data and graphs that tries to sum up the current state of artificial intelligence. The 2022 AI Index, which came out this week, is as impressive as ever, with 190 pages covering R&D, technical performance, ethics, policy, education, and the economy. I've done you a favor by reading every page of the report and plucking out 12 charts that capture the state of play.

It's worth noting that many of the trends I reported from last year's 2021 index still hold. For example, we are still living in a golden AI summer with ever-increasing publications, the AI job market is still global, and there's still a disconcerting gap between corporate recognition of AI risks and attempts to mitigate said risks. Rather than repeat those points here, I'll refer you to last year's coverage.

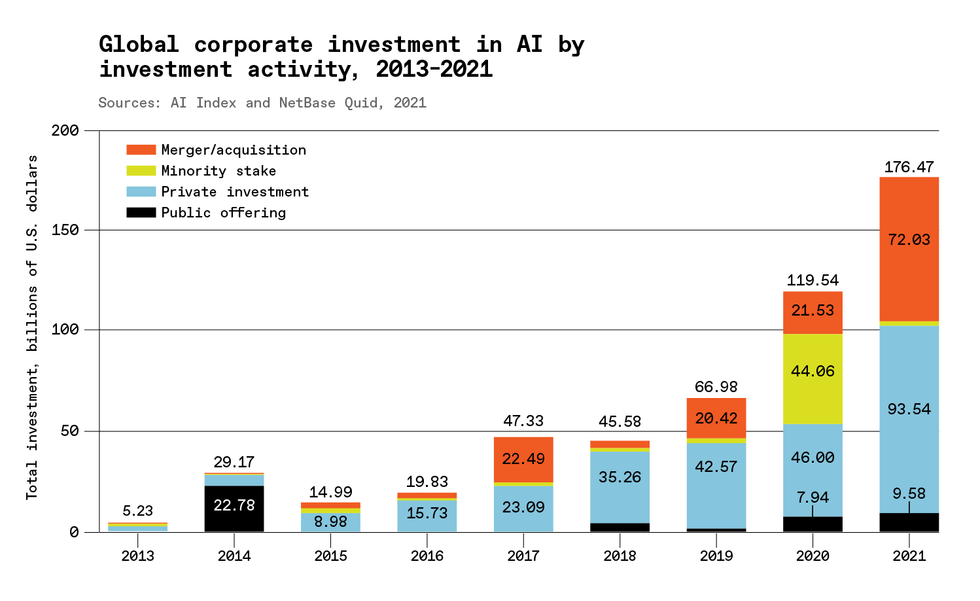

1. Investment Is Off the Hook

The amount of money pouring into AI is mind-boggling. The most noteworthy gain came in global private investment, which soared from US $46 billion in 2020 to $93.5 billion in 2021. That jump came from an increase in big funding rounds: In 2020 there were four funding rounds that topped $500 million; in 2021 there were 15. The report also notes that all that money is being funneled to fewer companies, since the number of newly funded startups has been dropping since 2018. It's a great time to join an AI startup, but maybe not to found one yourself.

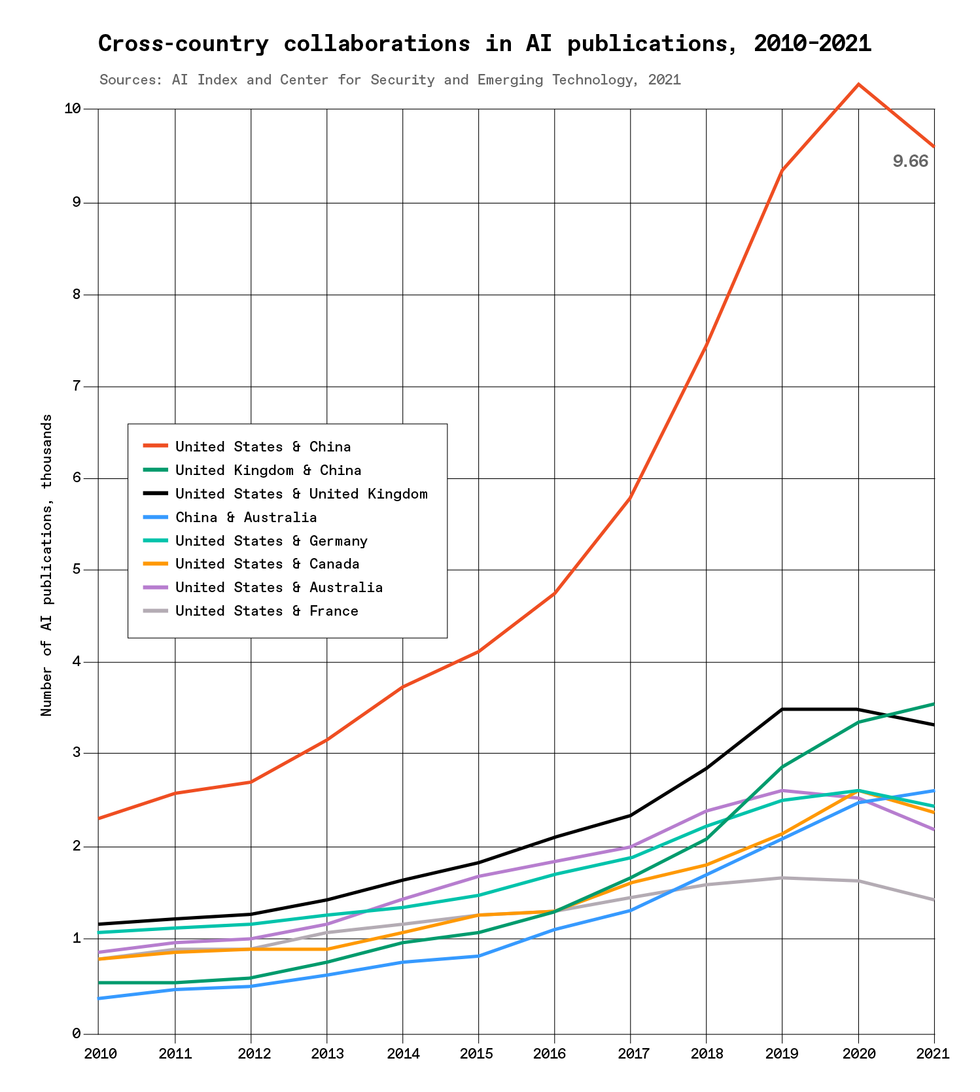

2. The U.S. vs. China Narrative Is Complicated

There's a fair bit of talk about an AI race between China and the United States these days. "When you see all the news about geopolitical tensions, you would think that the number of collaborations would decrease between those two countries," says Daniel Zhang, a policy researcher at Stanford's HAI and editor in chief of this year's AI Index. Instead, he tells IEEE Spectrum, "the past 10 years have been an upward trend." When it comes to cross-country collaborations on publications, China and the United States produce more than twice as many as the next pairing, China and the United Kingdom.

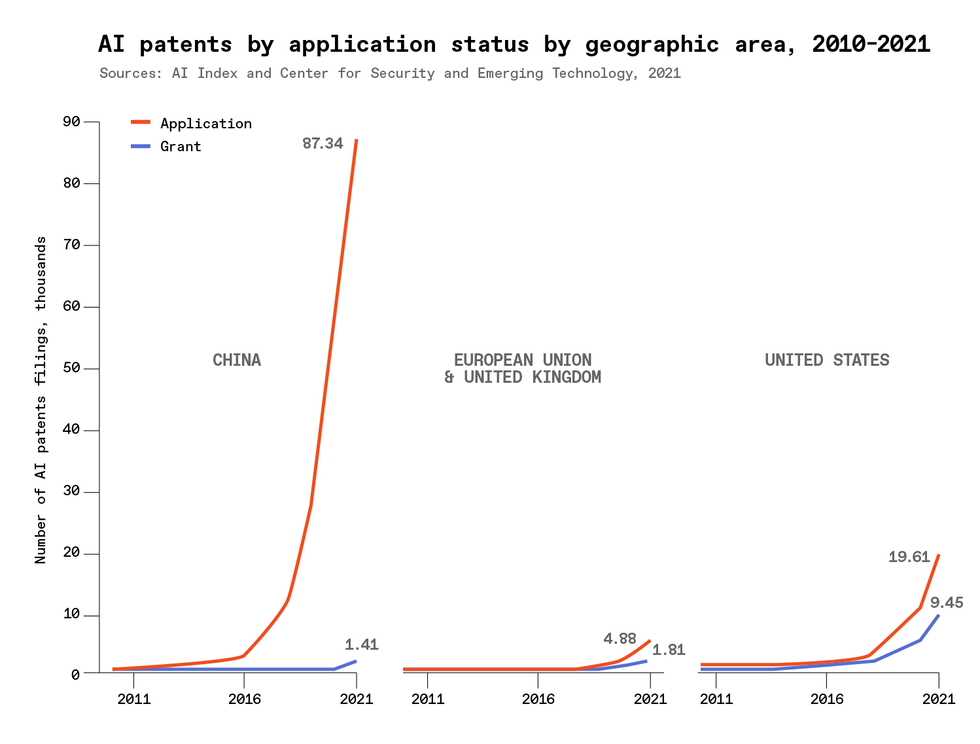

3. Applying for a Patent and Getting It Are Two Separate Things

China dominates the world on number of patents applied for; the report states that China accounted for 52 percent of global patent filings in 2021. However, many of those filings may have been somewhat aspirational. The United States dominates on number of patents granted, coming in at 40 percent of the global total. Zhang notes that having patents granted "certifies that your patents are actually credible and useful," and says the situation is somewhat analogous to what's been happening with publications and citations. While China leads on number of publications, publication citations, and conference publications, the United States still leads on citations of conference publications, showing that prestigious papers from U.S. researchers are still having an outsized impact.

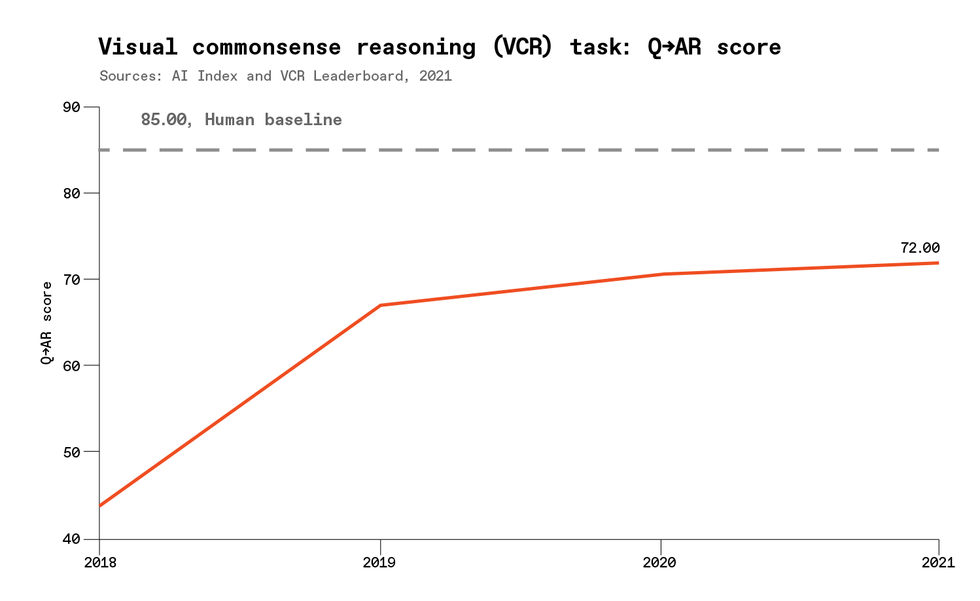

4. A Plateau in Computer Vision?

The field of computer vision has been advancing so rapidly, it's been hard to keep up with news of the latest accomplishments. The AI Index shows that computer vision systems are tremendously good at tasks involving static images such as object classification and facial recognition, and they're getting better at video tasks such as classifying activities.

But a relatively new benchmark shows the limits of what computer vision systems can do: They're great at identifying things, not so great at reasoning about what they see. The visual commonsense reasoning challenge, introduced in 2018, asks AI systems to answer questions about images and also explain their reasoning. For example, one image shows people seated at a restaurant table and a server approaching with plates; the test asks why one of the seated people is pointing to the person across the table. The report notes that "performance improvements have become increasingly marginal in recent years, suggesting that new techniques may need to be invented to significantly improve performance."

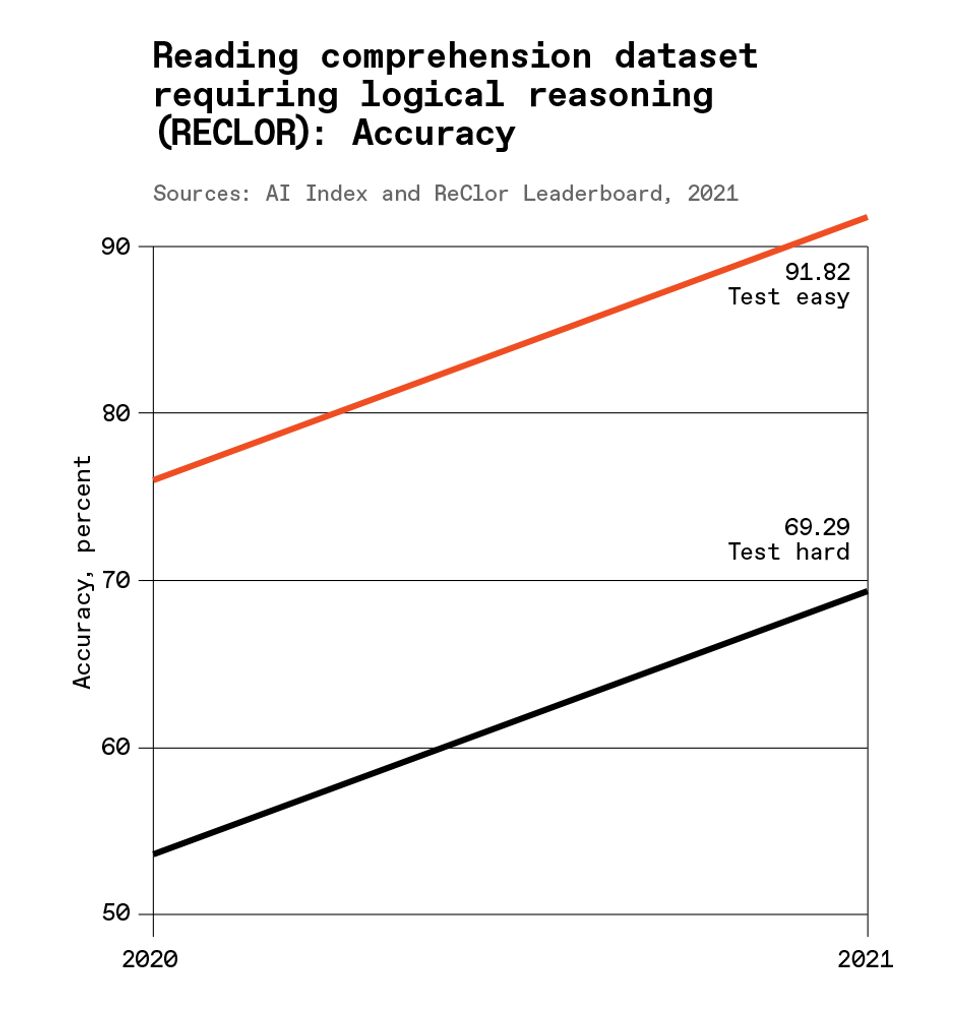

5. AI Isn't Ready for Law School

The field of natural language processing (NLP) started booming a few years later than computer vision, but it's now in much the same place as computer vision (chart 4). Benchmarks for tasks such as text summarization and basic reading comprehension show impressive results, with AI systems often exceeding human performance. But when NLP systems have to reason about what they've read, they run into trouble.

This chart shows performance on a benchmark made up of logical reasoning questions from the LSAT tests that are used as entrance exams for law school. While NLP systems did well on an easier set of questions from that benchmark, the top-performing model had an accuracy of only 69 percent on a set of harder questions. Researchers have gotten similar results from a benchmark that requires NLP systems to draw conclusions from incomplete information. Reasoning is still a frontier of AI.

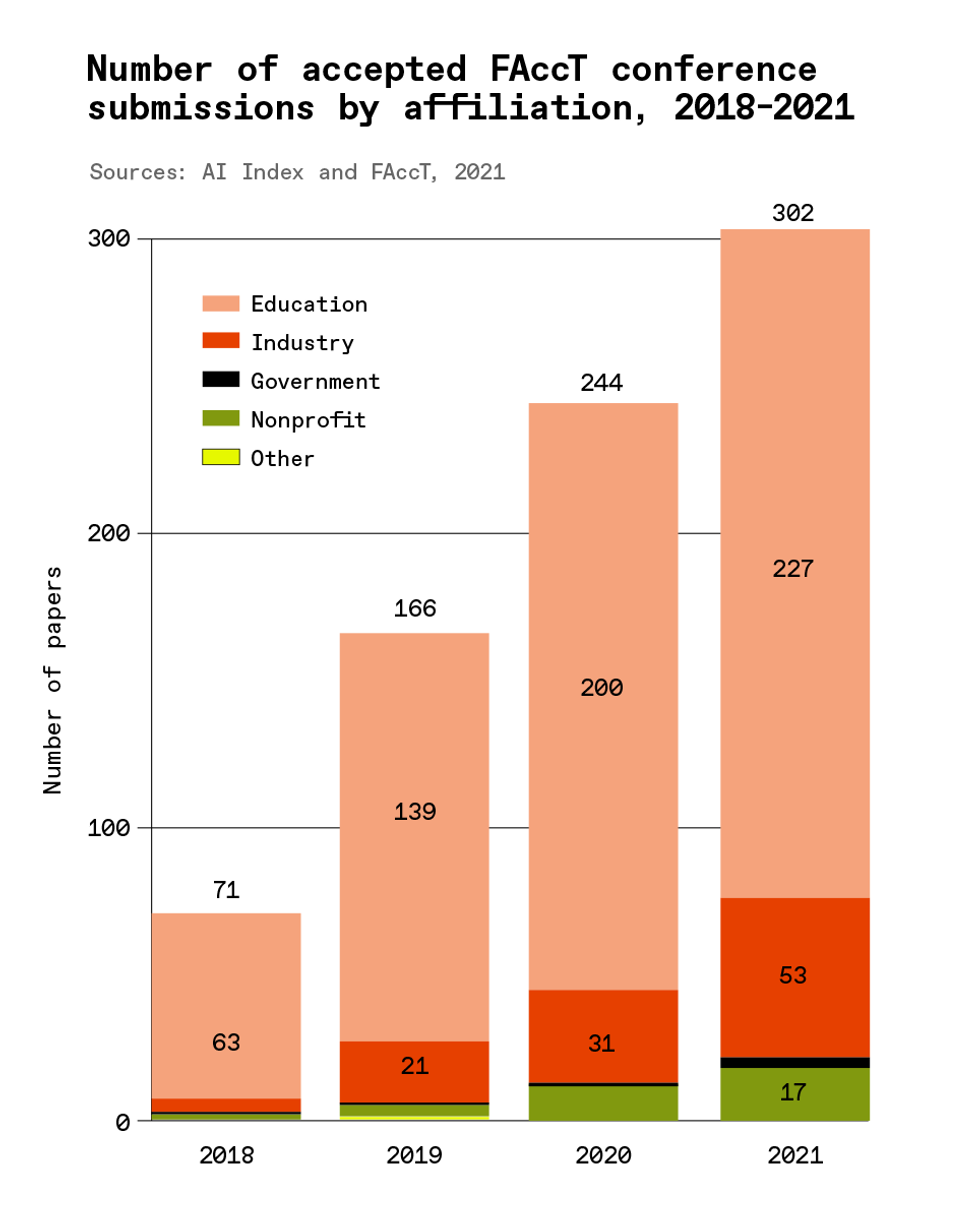

6. Ethics Everywhere

Here's a bit of good news from the report: There's a huge amount of interest in AI ethics right now, as judged by participation in meetings like the ACM conference on Fairness, Accountability, and Transparency (FAccT) and ethics-related workshops at NeurIPS. For those who haven't heard of FAccT, the report notes that it was one of the first major conferences to focus on sociotechnical analysis of algorithms. This chart shows increasing industry participation at FAccT, which Zhang sees as further good news. "This field has been dominated by academic researchers," he says, "but now we're seeing more private-sector involvement." Zhang says it's hard to guess what such participation means for how AI systems are being designed and deployed within industry, but it's a positive sign.

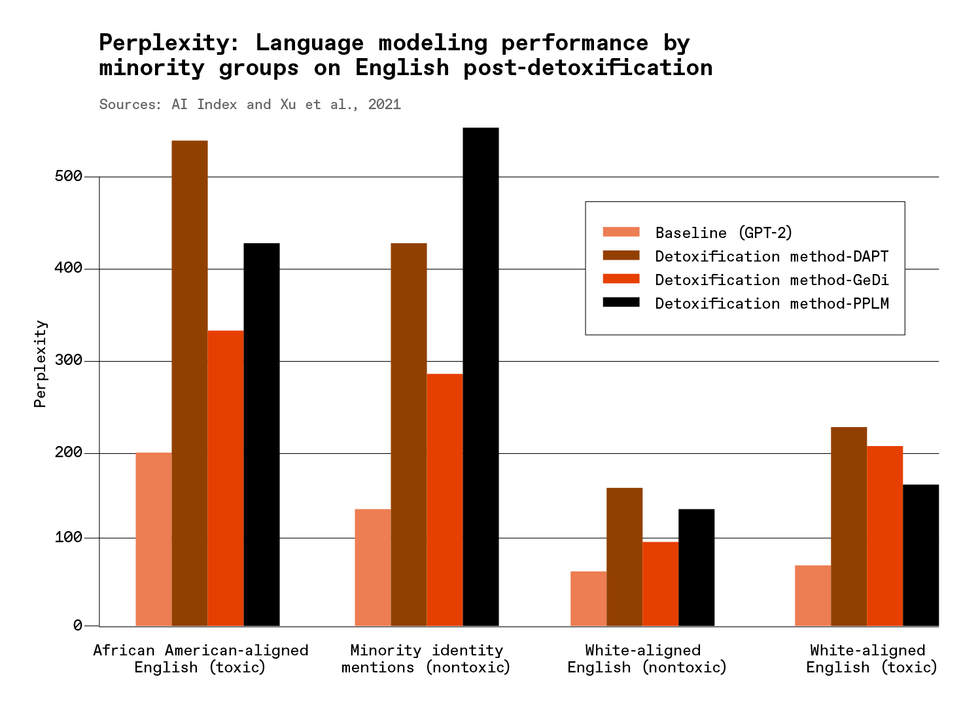

7. Detox: Damned if You Don't, Damned if You Do

One big area of ethical concern in AI involves large language models like OpenAI's GPT-3, which has a truly terrible habit of generating text that's laden with every bias and prejudice it learned from its training data: the Internet. A number of research groups (including OpenAI itself) are working on this toxic-language problem, with both new benchmarks to measure bias and detoxification programs. But the chart above shows the results of running the language model GPT-2 through three different detox methods. All three methods impaired the model's performance on a metric called perplexity (a lower score is better), with the worst performance impacts on text involving African American-aligned English and mentions of minority groups. As the experts like to say, more research is needed.
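For readers wondering what perplexity actually measures, here is a minimal sketch of the standard definition: the exponential of the average negative log-probability a model assigns to each token of a text. The function name and the toy probabilities are illustrative assumptions, not anything taken from the AI Index or from the detox studies it cites.

```python
import math

def perplexity(token_probs):
    """Perplexity of a text, given the probability the model assigned
    to each token that actually appeared (hypothetical toy input)."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    # Average negative log-likelihood per token, then exponentiate.
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)

# A model that is fairly sure of each token scores lower (better)
# than one that is mostly guessing.
confident = perplexity([0.5, 0.5, 0.5, 0.5])
unsure = perplexity([0.1, 0.1, 0.1, 0.1])
print(confident < unsure)
```

Roughly speaking, a perplexity of 10 means the model is as uncertain as if it were choosing among 10 equally likely tokens at each step, which is why a detox method that raises perplexity on a particular kind of text is making the model worse at handling that text.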

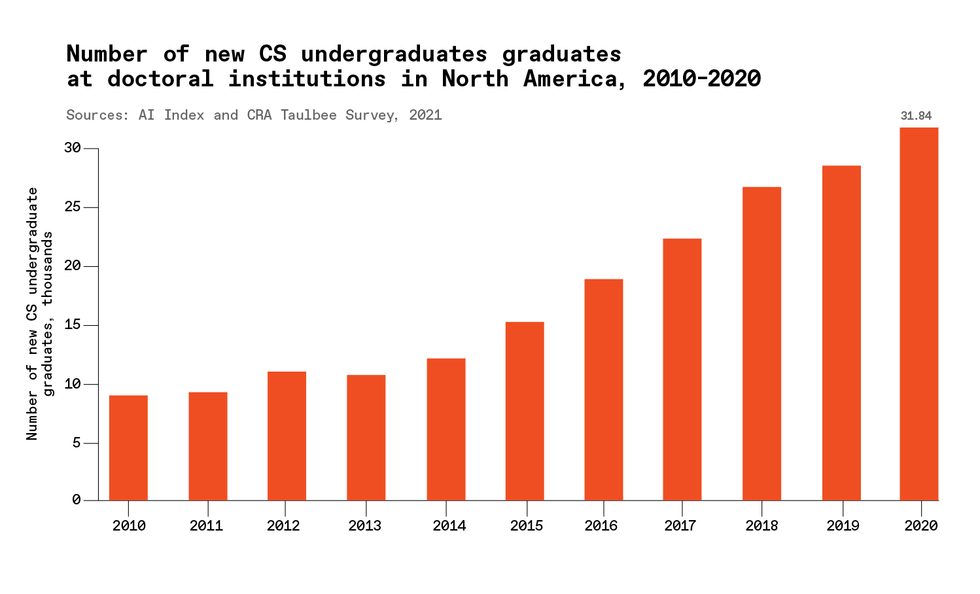

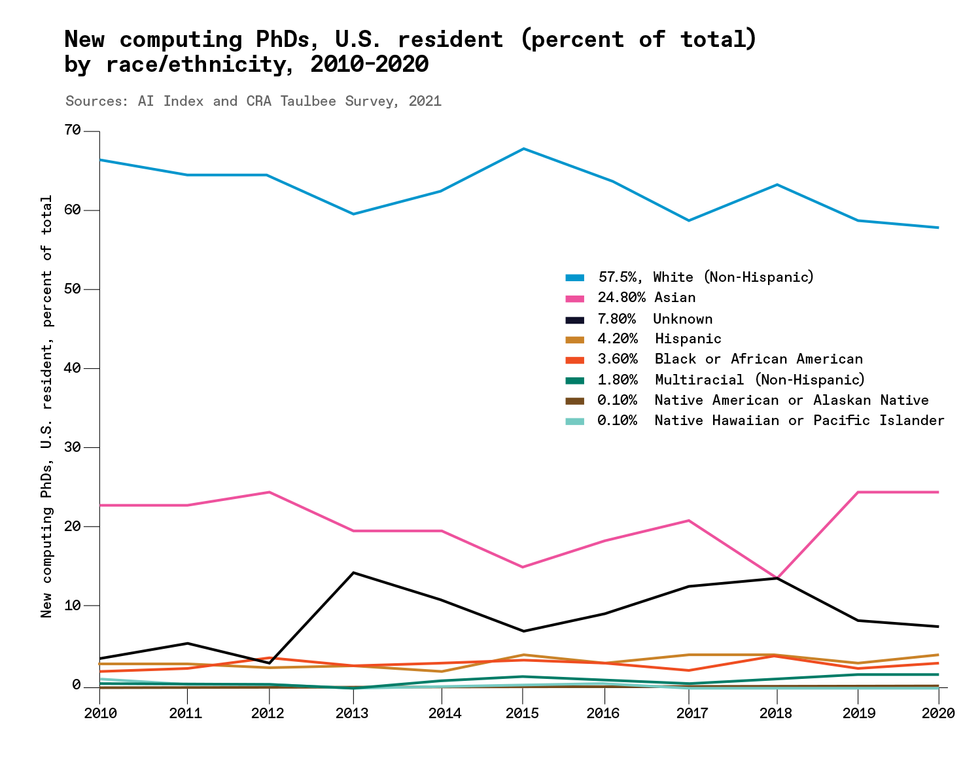

8. Universities Are Crawling With CS Students

The AI pipeline has never been fuller. An annual survey by the Computing Research Association gathers data from more than 200 universities in North America, and its most recent data show that more than 31,000 undergraduates completed computer science degrees in 2020. That's an 11.6 percent increase from the number in 2019.

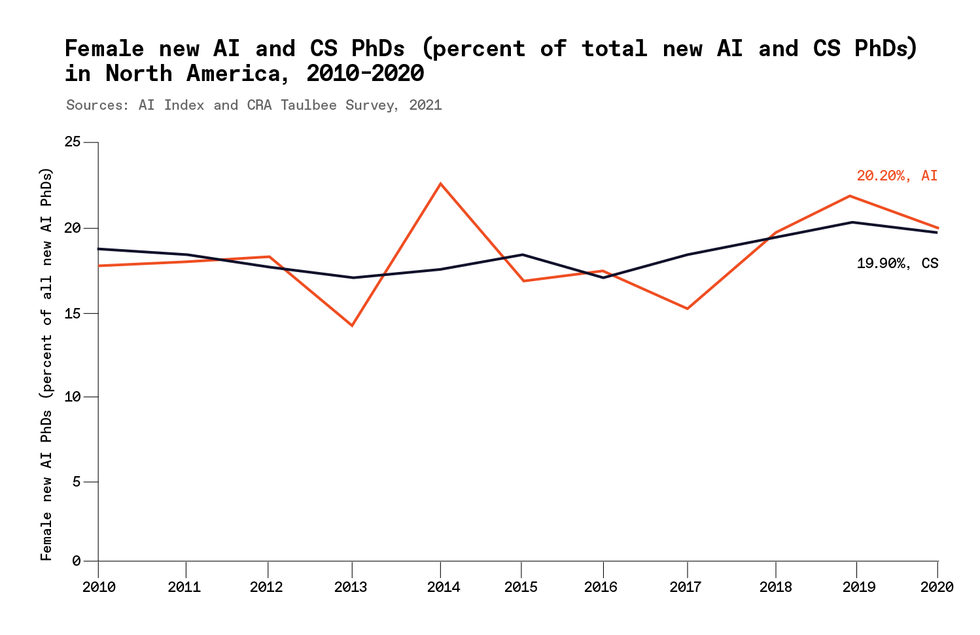

9. AI Needs Women

Ditto this point. The AI Index shows data for AI and CS Ph.D.'s on separate graphs, but they tell the same story. The field of AI needs to do better with diversity starting long before people get to Ph.D. programs.

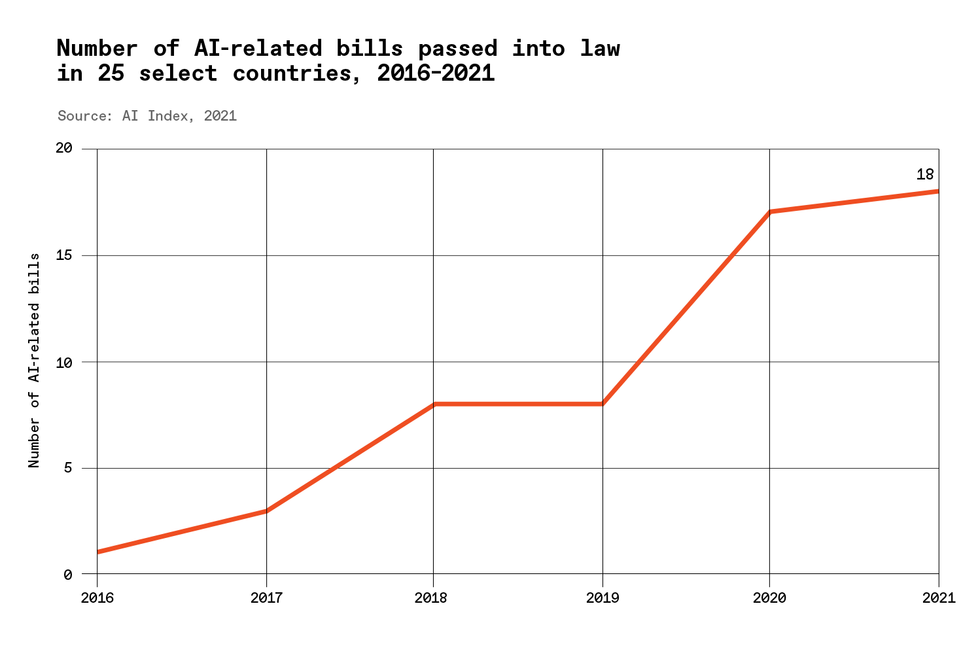

11. Lawmakers Are Paying Attention

In 2021, more bills related to AI passed into law than ever before. Of the 25 countries that the AI Index has been watching, Spain, the United Kingdom, and the United States were in the lead, each passing three bills last year. The report also notes that in the United States, those three bills that passed were among a whopping 130 bills proposed. It's not clear from the report whether most of these bills were promoting AI through public funding or enacting regulations to manage the risks that AI can bring. Zhang says it was a mix, and says HAI will publish a more detailed analysis of global legislation in the coming year.

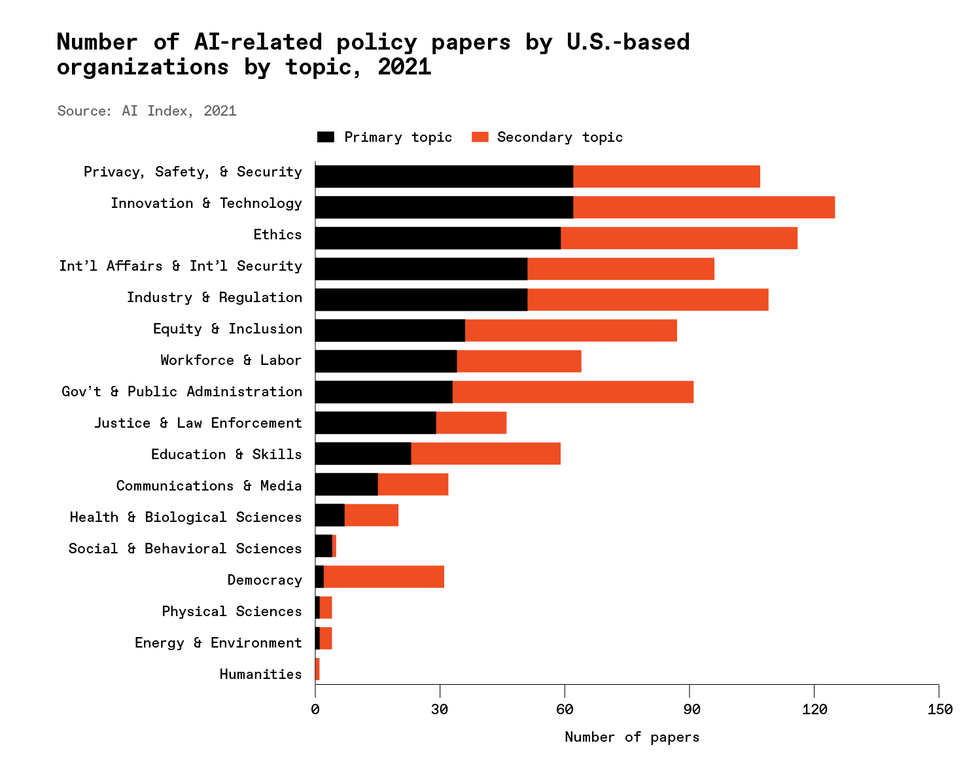

12. Ahem, Climate Change

The AI Index follows 55 public-policy groups within the United States that publish papers related to AI, and this chart shows what topics those groups focused on last year. I'm using this chart as an excuse to raise the topic of AI's increasingly large energy footprint (training big models takes a lot of compute time) and therefore its potential impact on climate change. The policy groups didn't seem to think these were important topics in 2021. I also asked Zhang if the AI Index might take up these issues in next year's report, and he said his team is talking with various organizations about how to measure and collect data on compute efficiency and climate impact. So stay tuned.