Self-Driving Cars Work Better With Smart Roads

Enormous efforts have been made in the past two decades to create a car that can use sensors and artificial intelligence to model its environment and plot a safe driving path. Yet even today the technology works well only in areas like campuses, which have limited roads to map and minimal traffic to master. It still can't manage busy, unfamiliar, or unpredictable roads. For now, at least, there is only so much sensory power and intelligence that can go into a car.

To solve this problem, we must turn it around: We must put more of the smarts into the infrastructure. We must make the road smart.

The concept of smart roads is not new. It includes efforts like traffic lights that automatically adjust their timing based on sensor data and streetlights that automatically adjust their brightness to reduce energy consumption. PerceptIn, of which coauthor Liu is founder and CEO, has demonstrated at its own test track, in Beijing, that streetlight control can make traffic 40 percent more efficient. (Liu and coauthor Gaudiot, Liu's former doctoral advisor at the University of California, Irvine, often collaborate on autonomous driving projects.)

But these are piecemeal changes. We propose a much more ambitious approach that combines intelligent roads and intelligent vehicles into an integrated, fully intelligent transportation system. The sheer amount and accuracy of the combined information will allow such a system to reach unparalleled levels of safety and efficiency.

Human drivers have a crash rate of 4.2 accidents per million miles; autonomous cars must do much better to gain acceptance. However, there are corner cases, such as blind spots, that afflict both human drivers and autonomous cars, and there is currently no way to handle them without the help of an intelligent infrastructure.

Putting a lot of the intelligence into the infrastructure will also lower the cost of autonomous vehicles. A fully self-driving vehicle is still quite expensive to build. But gradually, as the infrastructure becomes more powerful, it will be possible to transfer more of the computational workload from the vehicles to the roads. Eventually, autonomous vehicles will need to be equipped with only basic perception and control capabilities. We estimate that this transfer will reduce the cost of autonomous vehicles by more than half.

Here's how it could work: It's Beijing on a Sunday morning, and sandstorms have turned the sun blue and the sky yellow. You're driving through the city, but neither you nor any other driver on the road has a clear perspective. But each car, as it moves along, discerns a piece of the puzzle. That information, combined with data from sensors embedded in or near the road and from relays from weather services, feeds into a distributed computing system that uses artificial intelligence to construct a single model of the environment that can recognize static objects along the road as well as objects that are moving along each car's projected path.

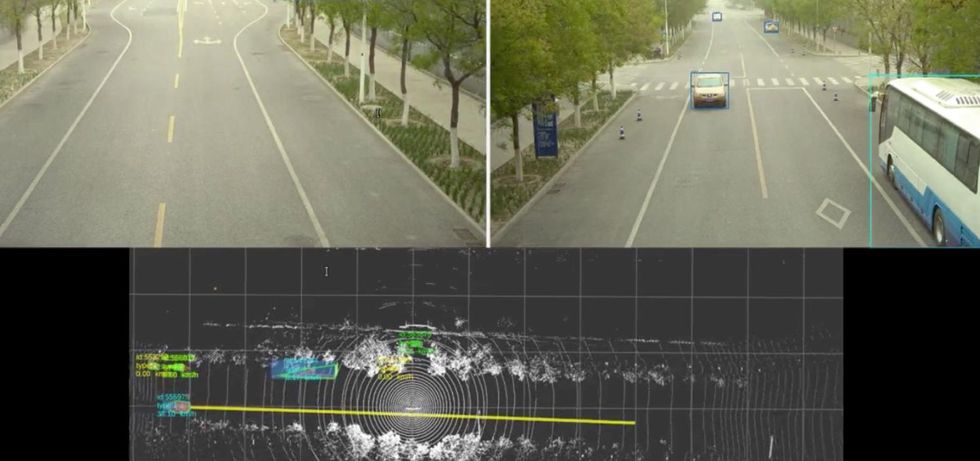

The self-driving vehicle, coordinating with the roadside system, sees right through a sandstorm swirling in Beijing to discern a static bus and a moving sedan [top]. The system even indicates its predicted trajectory for the detected sedan via a yellow line [bottom], effectively forming a semantic high-definition map. Credit: Shaoshan Liu

Properly expanded, this approach can prevent most accidents and traffic jams, problems that have plagued road transport since the introduction of the automobile. It can achieve the goals of a self-sufficient autonomous car without demanding more than any one car can provide. Even in a Beijing sandstorm, every person in every car will arrive at their destination safely and on time.

To date, we have deployed a model of this system in several cities in China as well as on our test track in Beijing. For instance, in Suzhou, a city of 11 million west of Shanghai, the deployment is on a public road with three lanes on each side, with phase one of the project covering 15 kilometers of highway. A roadside system is deployed every 150 meters on the road, and each roadside system consists of a compute unit equipped with an Intel CPU and an Nvidia GeForce GTX 1080 Ti GPU, a series of sensors (lidars, cameras, radars), and a communication component (a roadside unit, or RSU). Lidar is included because it provides more accurate perception than cameras, especially at night. The RSUs then communicate directly with the deployed vehicles so that the roadside data and the vehicle-side data can be fused on the vehicle.

Sensors and relays along the roadside form one half of the cooperative autonomous driving system, with the hardware on the vehicles themselves making up the other half. In a typical deployment, our model employs 20 vehicles. Each vehicle bears a computing system, a suite of sensors, an engine control unit (ECU), and, to connect these components, a controller area network (CAN) bus. The road infrastructure, as described above, consists of similar but more advanced equipment. The roadside computer, with its high-end Nvidia GPU, communicates wirelessly via its RSU, whose counterpart on the car is called the onboard unit (OBU). This back-and-forth communication facilitates the fusion of roadside data and car data.
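The deployment figures lend themselves to a quick sanity check. The sketch below records the two halves of the system as plain data and counts the roadside stations that phase one implies. The type and function names are hypothetical, and two details are assumptions the text does not settle: whether a pole stands at both ends of the 15-kilometer stretch, and whether one pole serves both directions of traffic.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RoadsideStation:
    """One pole-mounted roadside system (fields mirror the Suzhou description)."""
    compute: str = "Intel CPU + Nvidia GTX 1080 Ti GPU"
    sensors: tuple = ("lidar", "camera", "radar")
    radio: str = "RSU"  # pairs with the vehicle's onboard unit (OBU)


@dataclass(frozen=True)
class Vehicle:
    """Vehicle-side half: compute and sensors, with an ECU, linked by a CAN bus."""
    sensors: tuple = ("radar", "camera", "lidar", "GPS/INS")
    control: str = "ECU"
    bus: str = "CAN"
    radio: str = "OBU"  # pairs with the roadside unit (RSU)


def stations_needed(route_km: float, spacing_m: float, pole_at_both_ends: bool = True) -> int:
    """Poles needed to equip a route at a fixed spacing (assumption: one pole per site)."""
    gaps = math.ceil(route_km * 1000 / spacing_m)
    return gaps + 1 if pole_at_both_ends else gaps


print(stations_needed(15, 150))  # 101
```

If a single pole covers only one direction, the count would roughly double; the text does not say which arrangement Suzhou uses.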

This deployment, at a campus in Beijing, consists of a lidar, two radars, two cameras, a roadside communication unit, and a roadside computer. It covers blind spots at corners and tracks moving obstacles, like pedestrians and vehicles, for the benefit of the autonomous shuttle that serves the campus. Credit: Shaoshan Liu

The infrastructure collects data on the local environment and shares it immediately with cars, thereby eliminating blind spots and otherwise extending perception in obvious ways. The infrastructure also processes data from its own sensors and from sensors on the cars to extract the meaning, producing what's called semantic data. Semantic data might, for instance, identify an object as a pedestrian and locate that pedestrian on a map. The results are then sent to the cloud, where more elaborate processing fuses that semantic data with data from other sources to generate global perception and planning information. The cloud then dispatches global traffic information, navigation plans, and control commands to the cars.
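A semantic record of this kind pairs a class label with a map position. As a minimal sketch, assume a flat 2D map, a surveyed pose (position and heading) for each roadside sensor, and detections reported as range and bearing relative to that sensor. Under those assumptions, turning a detection into a semantic record is a simple rigid-body transform. The names `SemanticObject` and `to_semantic` are hypothetical, not part of the authors' system.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SemanticObject:
    label: str    # object class, e.g. "pedestrian"
    map_x: float  # meters east in a local map frame (assumed)
    map_y: float  # meters north in the same frame


def to_semantic(label: str, range_m: float, bearing_rad: float,
                pole_x: float, pole_y: float, pole_heading_rad: float) -> SemanticObject:
    """Place a detection given as (range, bearing) from a roadside sensor onto the map.

    Bearing is measured counterclockwise from the sensor's boresight; the pole heading
    is the boresight direction measured counterclockwise from map east.
    """
    angle = pole_heading_rad + bearing_rad
    return SemanticObject(label,
                          pole_x + range_m * math.cos(angle),
                          pole_y + range_m * math.sin(angle))


# A pole at map position (100, 50) looking due north sees a pedestrian 20 m straight ahead.
ped = to_semantic("pedestrian", 20.0, 0.0, 100.0, 50.0, math.pi / 2)
print(ped.label, round(ped.map_x, 3), round(ped.map_y, 3))  # pedestrian 100.0 70.0
```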

Each car at our test track begins in self-driving mode, that is, at a level of autonomy that today's best systems can manage. Each car is equipped with six millimeter-wave radars for detecting and tracking objects, eight cameras for two-dimensional perception, one lidar for three-dimensional perception, and GPS and inertial guidance to locate the vehicle on a digital map. The 2D- and 3D-perception results, as well as the radar outputs, are fused to generate a comprehensive view of the road and its immediate surroundings.

Next, these perception results are fed into a module that keeps track of each detected object (say, a car, a bicycle, or a rolling tire), drawing a trajectory that can be fed to the next module, which predicts where the target object will go. Finally, such predictions are handed off to the planning and control modules, which steer the autonomous vehicle. The car creates a model of its environment up to 70 meters out. All of this computation occurs within the car itself.
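The hand-off from tracking to prediction can be illustrated with the simplest possible predictor: constant-velocity extrapolation from a track's last two observations. This is a stand-in chosen for clarity, not the prediction model the authors use. Practical predictors also account for acceleration, road geometry, and the intent of other road users. The function name `predict_trajectory` and the 0.5-second step are hypothetical.

```python
from typing import List, Tuple

Observation = Tuple[float, float, float]  # (t seconds, x meters, y meters)


def predict_trajectory(track: List[Observation], horizon_s: float,
                       step_s: float) -> List[Tuple[float, float]]:
    """Extrapolate future (x, y) positions assuming the last observed velocity holds."""
    if len(track) < 2:
        raise ValueError("need at least two observations")
    (t0, x0, y0), (t1, x1, y1) = track[-2], track[-1]
    dt = t1 - t0
    if dt <= 0:
        raise ValueError("timestamps must increase")
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt  # velocity estimate from the last two fixes
    steps = int(round(horizon_s / step_s))
    return [(x1 + vx * step_s * k, y1 + vy * step_s * k) for k in range(1, steps + 1)]


track = [(0.0, 0.0, 0.0), (0.5, 5.0, 0.0)]  # a sedan moving 10 m/s along x
print(predict_trajectory(track, horizon_s=2.0, step_s=0.5))
# [(10.0, 0.0), (15.0, 0.0), (20.0, 0.0), (25.0, 0.0)]
```

The list of predicted points is the kind of output that could be drawn as the yellow trajectory line in the sandstorm figure, although the article does not say how that line is computed.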

In the meantime, the intelligent infrastructure is doing the same job of detection and tracking with radars, as well as 2D modeling with cameras and 3D modeling with lidar, finally fusing that data into a model of its own, to complement what each car is doing. Because the infrastructure is spread out, it can model the world as far out as 250 meters. The tracking and prediction modules on the cars will then merge the wider and the narrower models into a comprehensive view.
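The merge step can be pictured as a gated union of two object lists. The sketch below is a simplification, not the authors' tracking module. It assumes that both sides already report objects in one shared map frame, treats any roadside detection within a hypothetical 2-meter gate of an onboard detection as the same object, and keeps the car's own copy of that object. Production trackers typically associate objects using statistical gating on track uncertainty rather than a fixed radius.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Detection:
    oid: str      # track ID assigned by whichever side produced it
    x: float      # meters, in a map frame assumed common to car and roadside
    y: float
    source: str   # "car" or "roadside"


def merge_models(onboard: List[Detection], roadside: List[Detection],
                 gate_m: float = 2.0) -> List[Detection]:
    """Union of both object lists, dropping roadside objects that duplicate onboard ones."""
    merged = list(onboard)
    for r in roadside:
        is_duplicate = any(math.hypot(r.x - o.x, r.y - o.y) <= gate_m for o in onboard)
        if not is_duplicate:
            merged.append(r)  # seen only by the infrastructure, e.g. beyond 70 m
    return merged


car_view = [Detection("c1", 30.0, 0.0, "car")]              # within the car's ~70 m
road_view = [Detection("r7", 30.5, 0.3, "roadside"),        # same sedan, seen from the pole
             Detection("r9", 180.0, 3.5, "roadside")]       # beyond the car's own range
print([d.oid for d in merge_models(car_view, road_view)])   # ['c1', 'r9']
```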

The car's onboard unit communicates with its roadside counterpart to facilitate the fusion of data in the vehicle. The wireless standard, called Cellular-V2X (for "vehicle-to-X"), is not unlike that used in phones; communication can reach as far as 300 meters, and the latency (the time it takes for a message to get through) is about 25 milliseconds. With this link in place, the infrastructure's system covers many of the car's blind spots.
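To put the 25-millisecond latency in perspective, the distance a vehicle covers while a message is in flight is simply speed multiplied by latency. The speeds below were chosen for illustration, and the function name is hypothetical.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Meters a vehicle travels while one message is in flight."""
    return speed_kmh / 3.6 * latency_ms / 1000.0  # km/h -> m/s, ms -> s


for kmh in (50, 100):
    print(kmh, round(distance_during_latency(kmh, 25), 2))
# 50 0.35
# 100 0.69
```

At urban speeds, the car moves only about a third of a meter before a roadside message arrives. This suggests why a direct, low-latency link is suitable for moment-to-moment fusion.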

Two modes of communication are supported: LTE-V2X, a variant of the cellular standard reserved for vehicle-to-infrastructure exchanges, and commercial mobile networks using the LTE and 5G standards. LTE-V2X is dedicated to direct communications between the road and the cars over a range of 300 meters. Its latency is just 25 ms, but its bandwidth is low, currently about 100 kilobytes per second.

In contrast, the commercial 4G and 5G networks have virtually unlimited range and significantly higher bandwidth (100 megabits per second downlink and 50 Mb/s uplink for commercial LTE). However, they have much greater latency, and that poses a significant challenge for the moment-to-moment decision-making in autonomous driving.
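The trade-off between the two links can be expressed as a delivery-time estimate: latency plus payload size divided by bandwidth. In the sketch below, the LTE-V2X figures (25 ms, 100 kilobytes per second, 300 meters) come from the text. The commercial-network latency of 100 ms and bandwidth of 12.5 megabytes per second (about 100 megabits per second) are assumptions for illustration, since the text says only that latency is "much greater." The `Link` type and the `pick_link` policy are hypothetical and ignore jitter and retransmission.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Link:
    name: str
    latency_s: float      # one-way latency in seconds
    bandwidth_Bps: float  # bytes per second
    range_m: float        # math.inf for wide-area cellular

    def delivery_time(self, payload_bytes: int) -> float:
        """Latency plus serialization time (no jitter, no retransmissions)."""
        return self.latency_s + payload_bytes / self.bandwidth_Bps


LTE_V2X = Link("LTE-V2X", 0.025, 100e3, 300.0)                # figures from the text
CELLULAR = Link("commercial LTE/5G", 0.100, 12.5e6, math.inf)  # assumed figures


def pick_link(payload_bytes: int, distance_m: float, deadline_s: float,
              links: Sequence[Link] = (LTE_V2X, CELLULAR)) -> Optional[Link]:
    """Fastest in-range link that meets the deadline, or None if none does."""
    usable = [link for link in links
              if distance_m <= link.range_m and link.delivery_time(payload_bytes) <= deadline_s]
    return min(usable, key=lambda link: link.delivery_time(payload_bytes), default=None)


print(pick_link(300, 100.0, 0.05).name)       # LTE-V2X: small object list, tight deadline
print(pick_link(1_000_000, 100.0, 1.0).name)  # commercial LTE/5G: bulky map update
```

The split mirrors the text. Small, time-critical messages favor the direct link, and large, less urgent transfers favor the wide-area network.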

A roadside deployment at a public road in Suzhou is arranged along a green pole bearing a lidar, two cameras, a communication unit, and a computer. It greatly extends the range and coverage for the autonomous vehicles on the road. Credit: Shaoshan Liu

Note that when a vehicle travels at a speed of 50 kilometers (31 miles) per hour, the vehicle's stopping distance will be 35 meters when the road is dry and 41 meters when it is slick. Therefore, the 250-meter perception range that the infrastructure allows provides the vehicle with a large margin of safety. On our test track, the disengagement rate (the frequency with which the safety driver must override the automated driving system) is at least 90 percent lower when the infrastructure's intelligence is turned on, so that it can augment the autonomous car's onboard system.
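The quoted stopping distances are consistent with the standard textbook formula: reaction distance plus braking distance, d = v * t_r + v^2 / (2 * mu * g). The reaction time of 1.5 seconds and the friction coefficients of 0.7 (dry) and 0.5 (slick) used below are assumed values, not figures from the article. With these values, the formula gives about 35 meters and 40.5 meters at 50 km/h, close to the numbers above.

```python
G = 9.81  # gravitational acceleration, m/s^2


def stopping_distance(speed_kmh: float, reaction_s: float = 1.5, friction: float = 0.7) -> float:
    """Reaction distance plus braking distance v^2 / (2 * mu * g), in meters."""
    v = speed_kmh / 3.6  # km/h -> m/s
    return v * reaction_s + v ** 2 / (2 * friction * G)


dry = stopping_distance(50, friction=0.7)  # assumed dry-asphalt friction
wet = stopping_distance(50, friction=0.5)  # assumed slick-road friction
print(round(dry, 1), round(wet, 1), round(250 - wet, 1))  # 34.9 40.5 209.5
```

The last figure is the remaining margin, assuming the infrastructure's 250-meter range: the car could begin braking roughly 200 meters before it actually needs to.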

Experiments on our test track have taught us two things. First, because traffic conditions change throughout the day, the infrastructure's computing units are fully occupied during rush hours but largely idle in off-peak hours. This is more a feature than a bug because it frees up much of the enormous roadside computing power for other tasks, such as optimizing the system. Second, we find that we can indeed optimize the system, because our growing trove of local perception data can be used to fine-tune our deep-learning models to sharpen perception. By putting together idle compute power and the archive of sensory data, we have been able to improve performance without imposing any additional burdens on the cloud.
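One way to use this off-peak capacity is to queue fine-tuning jobs into the hours when roadside GPUs sit idle. The sketch below is hypothetical throughout: the daily utilization profile, the 30 percent idle threshold, and the function names are illustrative assumptions, not measurements from the test track.

```python
from typing import Dict, List, Optional


def idle_hours(utilization: Dict[int, float], threshold: float = 0.3) -> List[int]:
    """Hours of the day (0 to 23) whose average GPU utilization is below threshold."""
    return sorted(h for h, u in utilization.items() if u < threshold)


def schedule_finetuning(utilization: Dict[int, float], job_hours: int,
                        threshold: float = 0.3) -> Optional[List[int]]:
    """Earliest idle hours for a fine-tuning job, or None if too few are idle."""
    idle = idle_hours(utilization, threshold)
    return idle[:job_hours] if len(idle) >= job_hours else None


# Hypothetical daily profile: busy rush hours, moderate daytime, quiet nights.
profile = {h: 0.2 for h in range(24)}
profile.update({h: 0.5 for h in range(10, 17)})
profile.update({h: 0.95 for h in (7, 8, 9, 17, 18, 19)})

print(idle_hours(profile))              # [0, 1, 2, 3, 4, 5, 6, 20, 21, 22, 23]
print(schedule_finetuning(profile, 4))  # [0, 1, 2, 3]
```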

It's hard to get people to agree to construct a vast system whose promised benefits will come only after it has been completed. To solve this chicken-and-egg problem, we must proceed through three consecutive stages:

Stage 1: infrastructure-augmented autonomous driving, in which the vehicles fuse vehicle-side perception data with roadside perception data to improve the safety of autonomous driving. Vehicles will still be heavily loaded with self-driving equipment.

Stage 2: infrastructure-guided autonomous driving, in which the vehicles can offload all the perception tasks to the infrastructure to reduce per-vehicle deployment costs. For safety reasons, basic perception capabilities will remain on the autonomous vehicles in case communication with the infrastructure goes down or the infrastructure itself fails. Vehicles will need notably less sensing and processing hardware than in stage 1.

Stage 3: infrastructure-planned autonomous driving, in which the infrastructure is charged with both perception and planning, thus achieving maximum safety, traffic efficiency, and cost savings. In this stage, the vehicles are equipped with only very basic sensing and computing capabilities.
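The three stages amount to moving responsibilities step by step from the vehicle to the roadside. The sketch below encodes that shift. It is an illustrative model, not a specification, and the names are hypothetical. Two points are assumptions. First, planning stays on the vehicle in stages 1 and 2, since the text assigns planning to the infrastructure only in stage 3. Second, in every stage a vehicle falls back on its own onboard capabilities when the link is down, which the text states explicitly only for stage 2.

```python
from enum import Enum


class Stage(Enum):
    AUGMENTED = 1  # vehicle fuses its own and roadside perception
    GUIDED = 2     # perception offloaded to roadside, basic onboard fallback
    PLANNED = 3    # roadside handles perception and planning


def who_perceives(stage: Stage, link_up: bool) -> str:
    """Where perception runs for a given stage and link state."""
    if not link_up:
        return "vehicle"  # assumed fallback to onboard sensing in every stage
    if stage is Stage.AUGMENTED:
        return "vehicle+roadside"
    return "roadside"


def who_plans(stage: Stage, link_up: bool) -> str:
    """Where planning runs; only stage 3 moves it to the roadside (assumed fallback otherwise)."""
    return "roadside" if stage is Stage.PLANNED and link_up else "vehicle"


for stage in Stage:
    print(stage.name, who_perceives(stage, True), who_plans(stage, True))
```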

Technical challenges do exist. The first is network stability. At high vehicle speeds, the process of fusing vehicle-side and infrastructure-side data is extremely sensitive to network jitter. Using commercial 4G and 5G networks, we have observed jitter ranging from 3 to 100 ms, enough to effectively prevent the infrastructure from helping the car. Even more critical is security: We need to ensure that a hacker cannot attack the communication network or even the infrastructure itself to pass incorrect information to the cars, with potentially lethal consequences.
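Both challenges arise when a car decides whether to trust a roadside message. The sketch below runs two checks. The first is freshness: it rejects messages delayed beyond a hypothetical 100-millisecond budget, which corresponds to the upper end of the observed jitter. The second is integrity: it verifies an HMAC computed with a shared key, using Python's standard `hmac` module. The shared-key scheme is a deliberate simplification, because deployed V2X systems generally rely on certificate-based digital signatures such as those defined in IEEE 1609.2. The message layout and the function names are hypothetical.

```python
import hashlib
import hmac
import json

MAX_AGE_S = 0.100  # hypothetical staleness budget for fusion


def sign(payload: dict, key: bytes) -> bytes:
    """HMAC-SHA256 over a canonical JSON encoding of the payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, body, hashlib.sha256).digest()


def accept(payload: dict, tag: bytes, key: bytes, now_s: float) -> bool:
    """True only if the message is authentic and fresh enough to fuse."""
    if not hmac.compare_digest(sign(payload, key), tag):
        return False  # forged or altered in transit
    age = now_s - payload["sent_at"]
    return 0.0 <= age <= MAX_AGE_S  # drop stale or future-dated messages


key = b"demo-shared-key"  # illustration only; never hard-code real keys
msg = {"sent_at": 10.0, "objects": [{"label": "pedestrian", "x": 12.0, "y": 3.5}]}
tag = sign(msg, key)

print(accept(msg, tag, key, now_s=10.05))                    # True
print(accept(msg, tag, key, now_s=10.20))                    # False: too old
print(accept(dict(msg, objects=[]), tag, key, now_s=10.05))  # False: tampered
```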

Another problem is how to gain widespread support for autonomous driving of any kind, let alone one based on smart roads. In China, 74 percent of people surveyed favor the rapid introduction of automated driving, whereas in other countries, public support is more hesitant. Only 33 percent of Germans and 31 percent of people in the United States support the rapid expansion of autonomous vehicles. Perhaps the well-established car culture in these two countries has made people more attached to driving their own cars.

Then there is the problem of jurisdictional conflicts. In the United States, for instance, authority over roads is distributed among the Federal Highway Administration, which oversees the interstate highway system, and state and local governments, which have authority over other roads. It is not always clear which level of government is responsible for authorizing, managing, and paying for upgrading the current infrastructure to smart roads. In recent times, much of the transportation innovation that has taken place in the United States has occurred at the local level.

By contrast, China has mapped out a new set of measures to bolster the research and development of key technologies for intelligent road infrastructure. A policy document published by the Chinese Ministry of Transport aims for cooperative systems between vehicle and road infrastructure by 2025. The Chinese government intends to incorporate into new infrastructure such smart elements as sensing networks, communications systems, and cloud control systems. Cooperation among carmakers, high-tech companies, and telecommunications service providers has spawned autonomous driving startups in Beijing, Shanghai, and Changsha, a city of 8 million in Hunan province.

An infrastructure-vehicle cooperative driving approach promises to be safer, more efficient, and more economical than a strictly vehicle-only autonomous-driving approach. The technology is here, and it is being implemented in China. To do the same in the United States and elsewhere, policymakers and the public must embrace the approach and give up today's model of vehicle-only autonomous driving. In any case, we will soon see these two vastly different approaches to automated driving competing in the world transportation market.