Disentangling the Facts From the Hype of Quantum Computing

This is a guest post in recognition of IEEE Quantum Week 2022. The views expressed here are solely those of the author and do not represent positions of IEEE Spectrum or the IEEE.

Few fields invite as much unbridled hype as quantum computing. Most people's understanding of quantum physics extends only to the fact that it is unpredictable, powerful, and almost existentially strange. A few years ago, I provided IEEE Spectrum with an update on the state of quantum computing and looked at both the positive and negative claims across the industry. And just as in 2019, I remain enthusiastically optimistic today. Even though the hype is real and has outpaced the actual results, much has been accomplished over the past few years.

First, let's address the hype.

Over the past five years, there has been undeniable hype around quantum computing: hype around approaches, timelines, applications, and more. As far back as 2017, vendors were claiming the commercialization of the technology was just a couple of years away. There was even what I'd call antihype, with some questioning whether quantum computers would materialize at all. I hope they end up being wrong.

More recently, companies have shifted their timelines from a few years to a decade, but they continue to release road maps showing commercially viable systems as early as 2029. And these hype-fueled expectations are becoming institutionalized: The Department of Homeland Security even released a road map to protect against the threats of quantum computing, in an effort to help institutions transition to new security systems. This creates an "adopt or you'll fall behind" mentality for both quantum-computing applications and postquantum cryptography security.

Market research firm Gartner (of "Hype Cycle" fame) believes quantum computing may have already reached peak hype, or phase two of its five-phase growth model. This means the industry is about to enter a phase called the "trough of disillusionment." According to McKinsey & Company, "fault tolerant quantum computing is expected between 2025 and 2030 based on announced hardware roadmaps for gate-based quantum computing players." I believe this is not entirely realistic, as we still have a long journey to achieve quantum practicality, the point at which quantum computers can do something unique to change our lives.

In my opinion, quantum practicality is likely still 10 to 15 years away. However, progress toward that goal is not just steady; it's accelerating. That's the same thing we saw with Moore's Law and semiconductor evolution: The more we discover, the faster we go. Semiconductor technology has taken decades to progress to its current state, accelerating at each turn. We expect similar advancement with quantum computing.

In fact, we are discovering that what we have learned while engineering transistors at Intel is also helping to speed our quantum-computing development work today. For example, when developing silicon spin qubits, we're able to leverage existing transistor-manufacturing infrastructure to ensure quality and to speed up fabrication. We've started the mass production of qubits on a 300-millimeter silicon wafer in a high-volume fab facility, which allows us to fit an array of more than 10,000 quantum dots on a single wafer. We're also leveraging our experience with semiconductors to create a cryogenic quantum control chip, called Horse Ridge, which is helping to solve the interconnect challenges associated with quantum computing by eliminating much of the cabling that today crowds the dilution refrigerator. And our experience with testing semiconductors has led to the development of the cryoprober, which enables our team to get testing results from quantum devices in hours instead of the days or weeks it used to take.

Others are likely benefiting from their own prior research and experience, as well. For example, Quantinuum's recent research showed the entanglement of logical qubits in a fault-tolerant circuit using real-time quantum error correction. While still primitive, it's an example of the type of progress needed in this critical field. For its part, Google has a new open-source library called Cirq for programming quantum computers. Along with similar libraries from IBM, Intel, and others, Cirq is helping drive development of improved quantum algorithms. And, as a final example, IBM's 127-qubit processor, called Quantum Eagle, shows steady progress toward upping the qubit count.
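Libraries such as Cirq let a programmer describe a quantum circuit as a sequence of gates and then run it on a simulator or on hardware. To make the idea of entanglement concrete, here is a minimal, library-free Python sketch (it is not Cirq's API) that prepares and samples the two-qubit Bell state by direct state-vector simulation. The helper names `apply_h`, `apply_cnot`, and `sample` are hypothetical, and this toy works on two ideal physical qubits, not on error-corrected logical qubits like those in the Quantinuum result.

```python
import math
import random
from collections import Counter

# Convention (an assumption of this sketch): basis-state index i stores
# qubit k in bit k, so qubit 0 is the least significant bit.

def apply_h(state, target):
    """Return a new state vector with a Hadamard gate applied to `target`."""
    s = 1 / math.sqrt(2)
    new = state[:]
    for i in range(len(state)):
        if not (i >> target) & 1:  # visit each (target=0, target=1) pair once
            j = i | (1 << target)
            new[i] = s * (state[i] + state[j])
            new[j] = s * (state[i] - state[j])
    return new

def apply_cnot(state, control, target):
    """Return a new state vector with `target` flipped wherever `control` is 1."""
    new = state[:]
    for i in range(len(state)):
        if (i >> control) & 1 and not (i >> target) & 1:
            j = i | (1 << target)
            new[i], new[j] = state[j], state[i]
    return new

def sample(state, shots, rng):
    """Draw measurement outcomes (basis-state indices) with Born-rule weights."""
    probs = [abs(a) ** 2 for a in state]
    return rng.choices(range(len(state)), weights=probs, k=shots)

state = [1.0, 0.0, 0.0, 0.0]               # two qubits, both starting in |0>
state = apply_h(state, target=0)           # put qubit 0 into superposition
state = apply_cnot(state, control=0, target=1)  # entangle qubit 1 with qubit 0

outcomes = sample(state, shots=1000, rng=random.Random(0))
print(Counter(outcomes))  # only indices 0 (both qubits 0) and 3 (both 1) occur
```

Measuring either qubit fixes the other: the outcomes are perfectly correlated, which is the signature of entanglement that error-corrected experiments aim to reproduce at the logical-qubit level.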

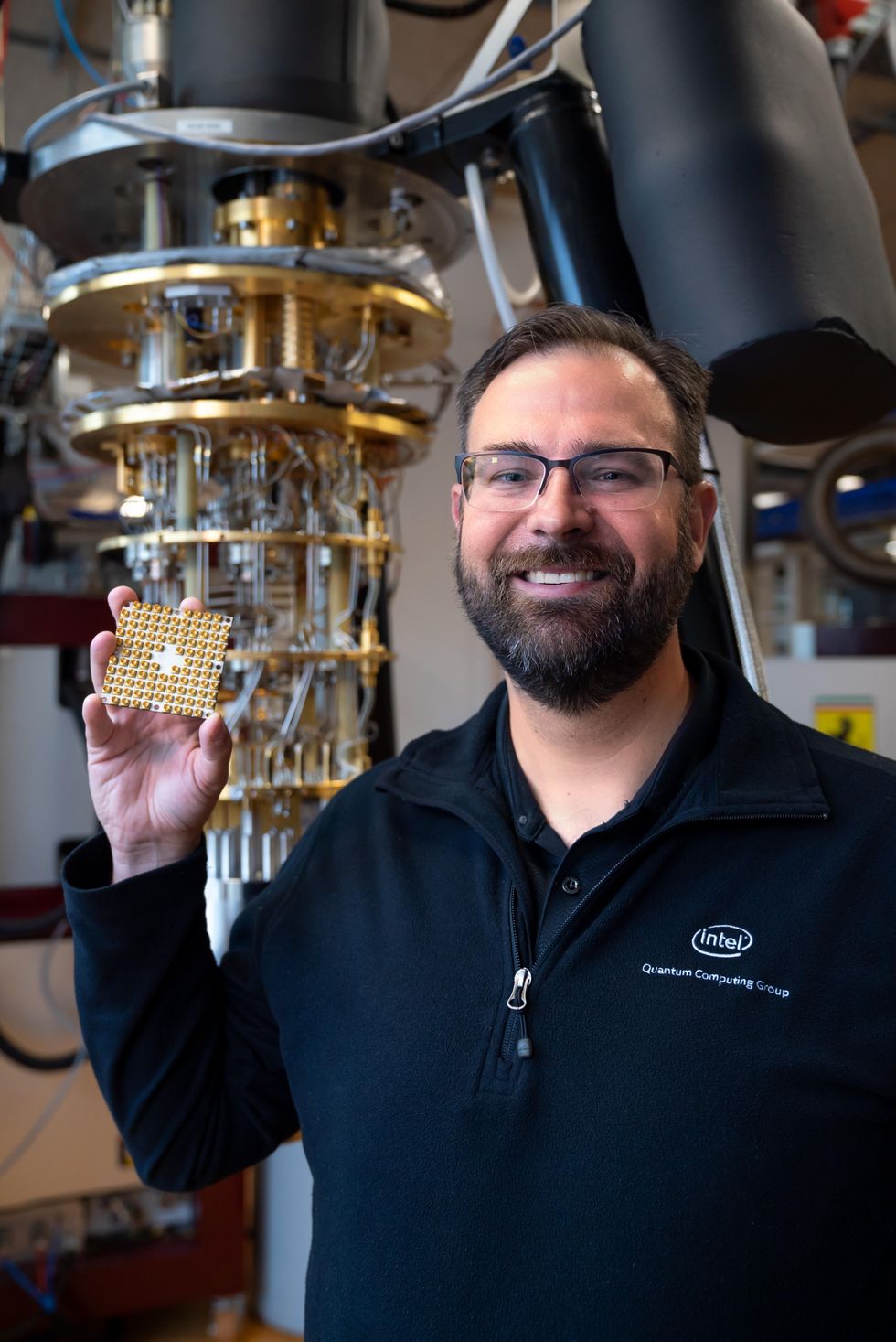

The author shows Intel quantum-computing prototypes. Credit: Intel


There are also some key challenges that remain.

- First, we still need better devices and high-quality qubits. While the very best one- and two-qubit gates meet the needed threshold for fault tolerance, the community has yet to accomplish that on a much larger system.

- Second, we've yet to see anyone propose an interconnect technology for quantum computers that is as elegant as how we wire up microprocessors today. Right now, each qubit requires multiple control wires. This approach is untenable as we strive to create a large-scale quantum computer.

- Third, we need fast qubit control and feedback loops. Horse Ridge is a precursor for this, because we would expect latency to improve by having the control chip in the fridge and therefore closer to the qubit chip.

- And finally, error correction. While there have been some recent indications of progress in error correction and mitigation, no one has yet run an error-correction algorithm on a large group of qubits.
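Why the threshold and error-correction points above matter can be seen in the simplest possible toy: a three-bit repetition code that stores one logical bit in three copies and decodes by majority vote. This is a deliberately simplified, classical sketch under an assumed model of independent bit flips with probability `p`; real quantum codes must also handle phase errors and cannot simply copy qubits. The function names are hypothetical.

```python
import random

def logical_error_rate_exact(p: float) -> float:
    """Majority vote over 3 copies fails when 2 or 3 copies are flipped."""
    return 3 * p**2 * (1 - p) + p**3

def logical_error_rate_mc(p: float, trials: int, rng: random.Random) -> float:
    """Monte Carlo estimate of the same failure probability."""
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))  # independent flips
        if flips >= 2:  # majority of copies corrupted -> wrong decoded bit
            failures += 1
    return failures / trials

if __name__ == "__main__":
    rng = random.Random(1)
    for p in (0.01, 0.1, 0.6):
        print(
            f"physical p={p}: logical exact={logical_error_rate_exact(p):.6f}, "
            f"simulated={logical_error_rate_mc(p, 100_000, rng):.6f}"
        )
```

Encoding helps only when the physical error rate is below a threshold: at `p = 0.01` the logical rate drops to about 0.0003, while at `p = 0.6` it rises to 0.648, worse than using no code at all. The thresholds for realistic quantum codes are far lower than this toy's 50 percent, which is why qubit quality must come first.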

With new research regularly showing novel approaches and advances, these are challenges we will overcome. For example, many in the industry are looking at how to integrate qubits and the controller on the same die to create quantum system-on-chips (SoCs).

But we're still quite a way off from having a fault-tolerant quantum computer. Over the next 10 years, Intel expects to be competitive with (or pull ahead of) others in terms of qubit count and performance, but as I stated before, a system large enough to deliver compelling value won't be realized for 10 to 15 years, by anyone. The industry needs to keep improving both the number and the quality of its qubits. After that, the next milestone should be the production of thousands of quality qubits (still several years away), and then scaling that to millions.

Let's remember that it took Google 53 qubits to perform a single, specialized task that it claimed would be beyond the practical reach of a classical supercomputer. If we want to explore new applications that go beyond today's supercomputers, we'll need to see system sizes that are orders of magnitude larger.
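One rough way to see why a few dozen qubits can already strain classical machines is to count the memory a naive simulation needs: storing the full state of n qubits takes 2**n complex amplitudes. The sketch below assumes double-precision complex numbers (16 bytes each) and brute-force state-vector storage; cleverer classical methods, such as tensor-network simulation, avoid holding the whole vector, so these figures illustrate scaling rather than what any particular supercomputer requires.

```python
def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Bytes needed to store all 2**n complex amplitudes of an n-qubit state.

    Assumes 16 bytes per amplitude (two 64-bit floats), as in complex128.
    """
    return (2 ** num_qubits) * bytes_per_amplitude

for n in (30, 40, 53):
    # 1 PB = 10**15 bytes; every extra qubit doubles the requirement.
    print(f"{n} qubits: {statevector_bytes(n) / 1e15:,.3f} PB")
```

Under these assumptions, 53 qubits already call for about 144 PB, and each additional qubit doubles that figure, so the "orders of magnitude larger" systems mentioned above quickly leave brute-force classical simulation behind.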

Quantum computing has come a long way in the past five years, but we still have a long way to go, and investors will need to fund it for the long term. Significant developments are happening in the lab, and they show immense promise for what could be possible in the future. For now, it's important that we don't get caught up in the hype but focus on real outcomes.

Correction 21 Sept. 2022: A previous version of this post stated incorrectly that the release of an announced 5,000-qubit quantum computer in 2020 did not happen. It did. Spectrum regrets the error.