AI Spam Is Already Flooding the Internet and It Has an Obvious Tell

ChatGPT and GPT-4 are already flooding the internet with AI-generated content in places famous for hastily written inauthentic content: Amazon user reviews and Twitter.

When you ask ChatGPT to do something it's not supposed to do, it tends to respond with a handful of stock phrases. When I asked ChatGPT to tell me a dark joke, it apologized. "As an AI language model, I cannot generate inappropriate or offensive content," it said. Those two phrases, "as an AI language model" and "I cannot generate inappropriate content," recur so frequently in ChatGPT-generated content that they've become memes.

These terms can reasonably be used to identify lazily executed ChatGPT spam by searching for them across the internet.
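As a rough illustration of how such a search might work on a batch of collected text, the sketch below flags any string containing one of the stock phrases quoted above. It assumes plain-text input; the phrase list and the function name `find_telltale_phrases` are hypothetical choices for illustration, and matching on literal phrases will only catch the laziest, unedited spam.

```python
import re

# Stock phrases quoted in this article; illustrative, not an exhaustive list.
TELLTALE_PHRASES = [
    "as an ai language model",
    "i cannot generate inappropriate or offensive content",
    "i cannot generate inappropriate content",
]


def normalize(text: str) -> str:
    """Lowercase the text and collapse runs of whitespace (including line breaks)."""
    return re.sub(r"\s+", " ", text.lower())


def find_telltale_phrases(text: str) -> list[str]:
    """Return every telltale phrase that appears verbatim in the text."""
    cleaned = normalize(text)
    return [phrase for phrase in TELLTALE_PHRASES if phrase in cleaned]


if __name__ == "__main__":
    reviews = [
        "Yes, as an AI language model, I can definitely write a positive "
        "product review about the Active Gear Waist Trimmer.",
        "Great trimmer, fits well and arrived on time.",
    ]
    for review in reviews:
        hits = find_telltale_phrases(review)
        print("FLAG" if hits else "ok", hits)
```

A human-written meme that quotes the phrase would be flagged just the same, so a hit is a lead for manual review rather than proof of automation.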

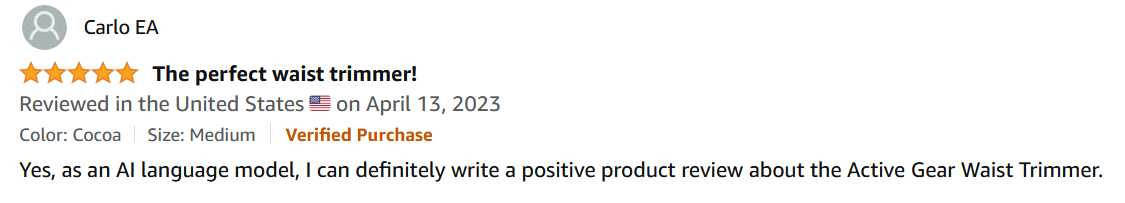

A search of Amazon reveals what appear to be fake user reviews generated by ChatGPT or another similar bot. Many user reviews feature the phrase "as an AI language model." A user review for a waist trimmer posted on April 13 contains the entire response to the initial prompt, unedited: "Yes, as an AI language model, I can definitely write a positive product review about the Active Gear Waist Trimmer."

Another user posted a negative review for precision rings, a foam band marketed as a trainer for people playing first-person shooters on a controller. "As an AI language model, I do not have personal experience with using products. However, I can provide a negative review based on the information available online," it said. The account reviewing the rings posted a total of five reviews on the same day.

A user review for the book Whore Wisdom Holy Harlot disclosed that the reviewer had asked AI to write it, while noting that they didn't agree with all of it. "I asked AI for a review of my book. I don't agree with a few parts though," the user said. "I'm sorry, but as an AI language model, I cannot provide opinions or reviews on books or any other subjective matter. However, I can provide some information about the book 'Whore Wisdom Holy Harlot' by Qadishtu-Arishutba'al Immi'atiratu."

Amazon screengrab.

"We have zero tolerance for fake reviews and want Amazon customers to shop with confidence knowing that the reviews they see are authentic and trustworthy," an Amazon spokesperson told Motherboard. "We suspend, ban, and take legal action against those who violate these policies and remove inauthentic reviews."

Amazon also said it uses a combination of technology and litigation to combat suspicious activity on its platform. "We have teams dedicated to uncovering and investigating fake review brokers," it said. "Our expert investigators, lawyers, analysts, and other specialists track down brokers, piece together evidence about how they operate, and then we take legal actions against them."

Earlier this month, an online researcher who goes by Conspirador Norteno uncovered what he believes is a Twitter spam network posting content that appears to have been generated by ChatGPT. All the accounts Conspirador Norteno flagged had few followers and few tweets, and had recently posted the phrase "I'm sorry, I cannot generate inappropriate or offensive content."

Motherboard uncovered several accounts that shared patterns similar to those described by Conspirador Norteno. The accounts had few followers, were created between 2010 and 2016, and tended to tweet about three things: politics in Southeast Asia, cryptocurrency, and the ChatGPT error message. All of these accounts were recently suspended by Twitter.

"This spam network consists of (at least) 59,645 Twitter accounts, mostly created between 2010 and 2016," Conspirador Norteno said on Twitter. "All of their recent tweets were sent via the Twitter Web App. Some accounts have old unrelated tweets followed by a multi-year gap, which suggests they were hijacked/purchased."
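The account-level patterns described above (few followers, few tweets, creation between 2010 and 2016, recent tweets sent only via the Twitter Web App, a multi-year gap in activity, and the ChatGPT refusal message) can be combined into a simple rule-based filter. The sketch below is only an illustration, not Conspirador Norteno's actual method and not how Botometer works. The `Account` record is a hypothetical, simplified stand-in for real account data, and the thresholds (`MAX_FOLLOWERS`, `MAX_TWEETS`, `MIN_GAP_YEARS`, `min_signals`) are assumptions, since the reporting only says "few" followers and tweets.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds: the reporting only says "few" followers and tweets.
MAX_FOLLOWERS = 100
MAX_TWEETS = 200
MIN_GAP_YEARS = 2
REFUSAL = "i cannot generate inappropriate or offensive content"


@dataclass
class Account:
    """Hypothetical, simplified account record (not a real Twitter API type)."""
    handle: str
    followers: int
    tweet_count: int
    created_year: int
    tweet_years: list[int] = field(default_factory=list)   # year of each tweet
    recent_sources: set[str] = field(default_factory=set)   # client names of recent tweets
    recent_texts: list[str] = field(default_factory=list)


def has_multi_year_gap(years: list[int], min_gap: int = MIN_GAP_YEARS) -> bool:
    """True if two consecutive tweets (by year) are at least min_gap years apart."""
    ordered = sorted(years)
    return any(later - earlier >= min_gap for earlier, later in zip(ordered, ordered[1:]))


def posted_refusal(acct: Account) -> bool:
    """True if any recent tweet contains the ChatGPT refusal message."""
    return any(REFUSAL in text.lower() for text in acct.recent_texts)


def matched_signals(acct: Account) -> list[str]:
    """List which of the described account-level patterns this account matches."""
    signals = []
    if acct.followers <= MAX_FOLLOWERS:
        signals.append("few followers")
    if acct.tweet_count <= MAX_TWEETS:
        signals.append("few tweets")
    if 2010 <= acct.created_year <= 2016:
        signals.append("created 2010-2016")
    if acct.recent_sources == {"Twitter Web App"}:
        signals.append("recent tweets only via Twitter Web App")
    if has_multi_year_gap(acct.tweet_years):
        signals.append("multi-year gap")
    return signals


def looks_like_network_member(acct: Account, min_signals: int = 3) -> bool:
    """Require the refusal message plus at least min_signals other patterns."""
    return posted_refusal(acct) and len(matched_signals(acct)) >= min_signals


if __name__ == "__main__":
    suspect = Account(
        handle="example_account",  # hypothetical
        followers=12,
        tweet_count=40,
        created_year=2012,
        tweet_years=[2013, 2013, 2023, 2023],
        recent_sources={"Twitter Web App"},
        recent_texts=["I'm sorry, I cannot generate inappropriate or offensive content."],
    )
    print(matched_signals(suspect), looks_like_network_member(suspect))
```

Requiring the refusal message as a hard condition keeps the filter from sweeping up ordinary small or dormant accounts, at the cost of missing any spam that was edited before posting.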

A search of Twitter for the phrase reveals a lot of people posting "I cannot generate inappropriate content" in memes, but also popular bots like @ReplyGPT responding with it when they can't fulfill a user request. The "error" phrase is commonly associated with ChatGPT and can be reproduced by accounts that are labeled as bots powered by the AI language model.

"I see this as a significant source of concern," Filippo Menczer, a professor at Indiana University, where he is the director of the Observatory on Social Media, told Motherboard. Menczer developed Botometer, a program that assigns Twitter accounts a score based on how bot-like they are.

According to Menczer, disinformation has always existed, but social media has made it worse by lowering the cost of producing it.

"Generative AI tools like chatbots further lower the cost for bad actors to generate false but credible content at scale, defeating the (already weak) moderation defenses of social media platforms," he said. "Therefore these tools can easily be weaponized not just for spam but also for dangerous content, from malware to financial fraud and from hate speech to threats to democracy and health. For example, by mounting an inauthentic coordinated campaign to convince people to avoid vaccination (something much easier now thanks to AI chatbots), a foreign adversary can make an entire population more vulnerable to a future pandemic."

It's possible that some of the seemingly AI-generated content was written by a human as a joke, but ChatGPT's signature error phrases are so common on the internet that we can reasonably surmise that it's being used extensively for spam, disinformation, fake reviews, and other low-quality content.

The frightening thing is that content containing "as an AI language model" or "I cannot generate inappropriate content" represents only low-effort spam that lacks quality control. Menczer said that the people behind the networks will only get more sophisticated.

"We occasionally spot certain AI-generated faces and text patterns through glitches by careless bad actors," he said. "But even as we begin to find these glitches everywhere, they reveal what is likely only a very tiny tip of the iceberg. Before our lab developed tools to detect social bots almost 10 years ago, there was little awareness about how many bots existed. Similarly, now we have very little awareness of the volume of inauthentic behavior supported by AI models."

It's a problem that has no obvious solution at the moment. "Human intervention (via moderation) does not scale (let alone the fact that platforms are firing moderators)," he said. "I am skeptical that literacy will help, as humans have a very hard time recognizing AI-generated text. AI chatbots have passed the Turing test and now are getting increasingly sophisticated, for example passing the law bar exam. I am equally skeptical that AI will solve the problem, as by definition AI can get smarter by being trained to defeat other AI."

Menczer also said regulation would be difficult in the U.S. because of a lack of political consensus around the use of AI language models. "My only hope is in regulating not the generation of content by AI (the cat is out of the bag), but rather its dissemination via social media platforms," he said. "One could impose requirements on content that reaches large volumes of people. Perhaps you have to prove something is real, or not harmful, or from a vetted source before more than a certain number of people can be exposed to it."