The People Building AI with 'Existential Risk' Are Really Not Getting 'Oppenheimer'

The release of Oppenheimer has created some truly terrible reactions. Christopher Nolan's new film is a mythical recounting of the creation of the nuclear bomb during World War II. It was a widespread effort involving thousands of people and many locations, but the film focuses on physicist and Los Alamos head J. Robert Oppenheimer and his horror at the world he's built and the people who will use and abuse both science and his story.

Oppenheimer has emerged into a world newly concerned with the possibility of a nuclear weapon being used again, and at a time when Silicon Valley is developing a technology that its inventors claim is just as dangerous as the atom bomb: AI. So far, their reactions to the film reveal a viewpoint that isn't nearly as critical or self-reflective as one might hope for from people developing a tool that supposedly poses an "existential risk" to humanity.

Sam Altman, CEO of ChatGPT maker OpenAI, previously said that he wanted an international body like the U.N.'s nuclear watchdog, the International Atomic Energy Agency, to regulate the development of AI. In May, Altman and others signed a letter that warned the tech he was working on could destroy civilization. "Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war," the letter said.

After watching Oppenheimer, Altman was upset that a movie about the creation of a weapon that melts flesh from bone, and that seeded several generations of humans with traumatic nightmares, wasn't inspirational enough.

"I was hoping that the oppenheimer movie would inspire a generation of kids to be physicists but it really missed the mark on that," Altman said on Twitter. "Let's get that movie made! (i think the social network managed to do this for startup founders.)"

Altman post on Twitter.com

The Social Network is, famously, not very inspirational. It's a movie about the creation of Facebook and explores the personal emptiness at the heart of the ambition of tech CEOs. It's not ambiguous. Expecting Oppenheimer (again, a movie about a weapon so devastating that it spawned separate fields of medicine) to cheerily inspire a generation of physicists is a misreading of history and film tone so grotesque that I'm not sure what to make of it.

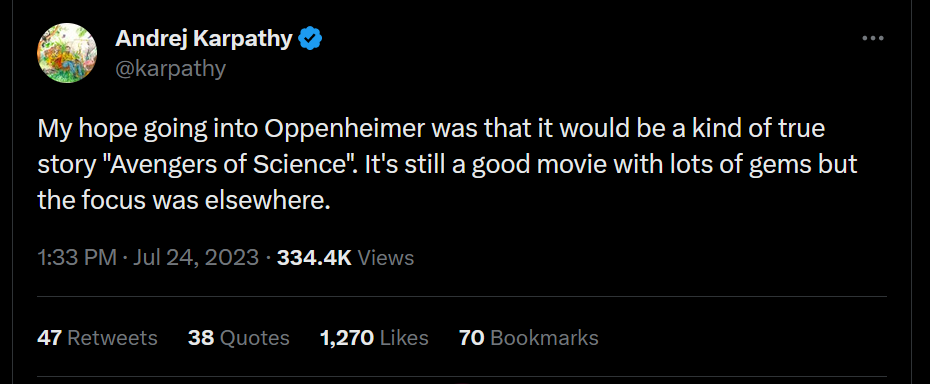

Altman is not alone. Andrej Karpathy, another OpenAI employee who once worked on AI at Tesla, was also let down because the harrowing exploration of the personal guilt of a scientist who helped invent a superweapon wasn't enough like a Marvel movie.

"My hope going into Oppenheimer was that it would be a kind of true story 'Avengers of Science,'" he said in a tweet. "It's still a good movie with lots of gems but the focus was elsewhere."

Andrej post on Twitter.com

The creation of the atom bomb was a moment so consequential that it's hard to wrap your mind around. Even Oppenheimer struggles to encapsulate all the complexities. The CEOs who have built word calculators like ChatGPT are invested in people believing, without evidence, that the creation of large language models is a scientific achievement on par with the creation of these world-ending weapons. If the public, investors, politicians, and military leaders think of AI advances as similar to nukes, it would be very good for the CEOs.

This is evident in a recent New York Times op-ed by Palantir CEO Alex Karp, whose company is developing its own AI platform aimed at military customers. Karp explicitly compares AI tools to nuclear weapons, and says they must be regulated appropriately, but also that companies have an obligation to develop them and that the public and "coastal elites" who "do little to advance the interests of our republic" have to just get with the program.

ChatGPT is not the bomb. In the middle of the 20th century an unprecedented collection of scientists partnered with U.S. industry and the Pentagon to push science to its bleeding edge. The result was a weapon demonstrably capable of destroying human civilization. The observable zenith of this science was reached in 1961 when the Soviet Union dropped a bomb that created a fireball five miles wide and more than six miles high. The blast wave circled the planet three times. Experts estimated that people as far away as 62 miles from the blast zone would have experienced third-degree burns.

In the early years of the 21st century a group of philosophy students, entrepreneurs, programmers, and salesmen have created a word calculator that appears to be acting less capably the longer it is turned on.

Karp's op-ed includes a graph that purports to plot the achievements of AI against those of the nuclear bomb. According to Karp's graph, 50,000 kilotons of explosives is comparable to "10 trillion parameters" in an AI model. Here, software that can't even write an article about Star Wars that isn't littered with errors is comparable to the deaths of hundreds of thousands of people in the atomic bombings of Hiroshima and Nagasaki.

In an interview with the Bulletin of the Atomic Scientists, Nolan said that he made the film partly because only the Bulletin and the Doomsday Clock exist to remind us of "the terrible situation" we're in with nuclear weapons. And, partly, because the real Oppenheimer was a person of such sharp contradictions. "I believe you see in the Oppenheimer story all that is great and all that is terrible about America's uniquely modern power in the world," he told the outlet.

We should all be worried when the people loudly saying that they're creating a new atom bomb in software only see the great, and none of the terrible.