Meta’s AI Agents Learn to Move by Copying Toddlers

In a simulated environment, a disembodied skeletal arm powered by artificial intelligence lifted a small toy elephant and rotated it in its hand. It used a combination of 39 muscles acting through 29 joints to experiment with the object, exploring its properties as a toddler might. Then it tried its luck with a tube of toothpaste, a stapler, and an alarm clock. In another environment, disembodied skeletal legs used 80 muscles working through 16 joints to kick and flex, engaging in the kind of "motor babbling" that toddlers do as they work toward walking.

These simulated body parts are the latest additions to the MyoSuite platform and are part of the MyoSuite 2.0 collection, released today by Meta AI in collaboration with researchers at McGill University in Canada, Northeastern University in the United States, and the University of Twente in the Netherlands. The project applies machine learning to biomechanical control problems, with the aim of demonstrating human-level dexterity and agility. The arm and legs are the most physiologically sophisticated models the team has created to date, and coordinating their large and small muscle groups is a tricky control problem. The platform includes a collection of baseline musculoskeletal models and open-source benchmark tasks for researchers to attempt.


Vikash Kumar, one of the lead researchers on the project, notes that in the human body each joint is powered by multiple muscles, and each muscle passes through multiple joints. "It's way more complicated than robots, which have one motor, one joint," he says. Adding to the difficulty, moving an arm or leg requires continuous, shifting patterns of muscle activation, not just an initial activating impulse, yet our brains manage it all effortlessly. Duplicating those motor strategies in MyoSuite is a lot harder than moving a robot around, Kumar says, but he's certain that roboticists can learn valuable lessons from the human body's control techniques. After all, our bodies must do it this way for a reason. "If an easier solution was possible, it would be foolish for evolution to converge on this complicated form factor," he says. Kumar was until recently both a Meta research scientist and an adjunct professor at Carnegie Mellon University; now he's full-time at CMU's Robotics Institute.
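To make Kumar's contrast concrete, here is a minimal Python sketch of the standard biomechanics relation in which joint torques equal a moment-arm matrix times muscle forces (tau = R f). The three joints, four muscles, and every moment-arm value are hypothetical and are not taken from MyoSuite; the sketch only illustrates that one muscle can produce torque at several joints and one joint can be driven by several muscles, whereas a conventional robot maps each motor to a single joint.

```python
# Toy moment-arm matrix R (metres): rows are joints, columns are muscles.
# HYPOTHETICAL values chosen for illustration; they are not MyoSuite parameters.
# A negative moment arm means the muscle turns that joint in the opposite direction.
JOINTS = ["shoulder", "elbow", "wrist"]
MOMENT_ARMS = [
    [0.02, -0.015, 0.0, 0.01],   # shoulder
    [0.03, 0.0, -0.025, 0.0],    # elbow
    [0.0, 0.01, 0.0, -0.02],     # wrist
]


def joint_torques(moment_arms, muscle_forces):
    """Map muscle forces (N, muscles can only pull) to joint torques (N*m): tau = R f."""
    return [sum(r * f for r, f in zip(row, muscle_forces)) for row in moment_arms]


def joints_spanned(moment_arms, muscle):
    """Indices of the joints a given muscle crosses (nonzero moment arm)."""
    return [j for j, row in enumerate(moment_arms) if row[muscle] != 0.0]


def muscles_at_joint(moment_arms, joint):
    """Indices of the muscles that act on a given joint."""
    return [m for m, r in enumerate(moment_arms[joint]) if r != 0.0]


def robot_torques(motor_torques):
    """Conventional robot: one motor per joint, so the mapping is the identity."""
    return list(motor_torques)


if __name__ == "__main__":
    forces = [100.0, 0.0, 0.0, 0.0]  # pull with the first muscle only
    for name, tau in zip(JOINTS, joint_torques(MOMENT_ARMS, forces)):
        print(f"{name}: {tau:+.2f} N*m")
```

Pulling on the first muscle alone produces torque at both the shoulder and the elbow, so a controller cannot adjust one joint without accounting for the others; the robot version has no such coupling.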

The work was initiated by the cerebral Fundamental AI Research (FAIR) branch of Meta AI, but it's not much of a stretch to imagine how this technology could be applied to Meta's commercial products. Indeed, when MyoSuite version 1.0 came out in May 2022, Mark Zuckerberg himself made the announcement, noting that "this research could also help us develop more realistic avatars for the metaverse."

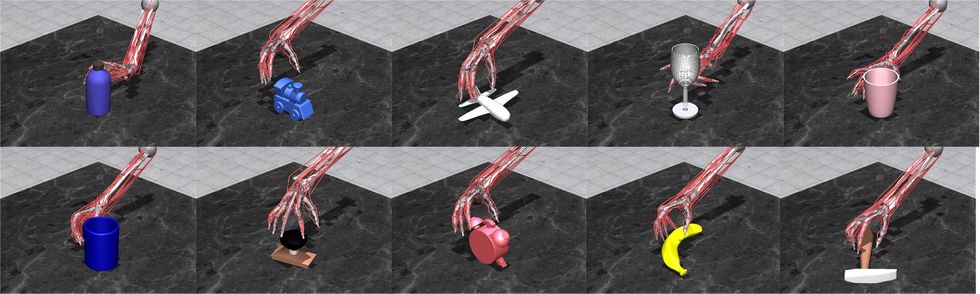

Rather than mastering a certain task with one object, the algorithm controlling the MyoArm started by experimenting with a variety of objects. (Credit: Vikash Kumar/Vittorio Caggiano)


Last year, the researchers ran a contest called MyoChallenge 2022, which culminated with an announcement of winners and a workshop at NeurIPS, a leading AI conference. In that contest, teams had to control a simulated hand to rotate a die and to manipulate two Baoding balls over the palm. Kumar says that while the 40 participating teams achieved impressive results in training their algorithms to accomplish those tasks efficiently, it became clear that the algorithms were weak at generalizing: changing the properties or location of the objects made the simple tasks very difficult for them.

With that weakness in mind, the Meta team set out to develop new AI agents that were better at generalizing from one task to another, using the MyoArm and the MyoLegs as their learning platforms. The key, Kumar and his colleagues thought, was to stop training an algorithm to find a specific solution to a particular task and instead teach it representations that would help it find solutions. "We gave the agent roughly 15 objects [to manipulate with the MyoArm], and it acted like a toddler: It tried to lift them, push them over, turn them," says Kumar. As described in a recent paper that the team presented at the International Conference on Machine Learning, experimenting with that small but diverse collection of objects was enough to give the agent a sense of how objects work in general, which then sped up its learning on specific tasks.

Similarly, allowing the MyoLegs to flail around for a while in a seemingly aimless fashion improved their performance on locomotion tasks, as the researchers described in another paper presented at the recent Robotics: Science and Systems conference. Vittorio Caggiano, a Meta researcher on the project who has a background in both AI and neuroscience, says that scientists in neuroscience and biomechanics are learning from the MyoSuite work. "This fundamental knowledge [of how motor control works] is very generalizable to other systems," he says. "Once they understand the fundamental mechanics, then they can apply those principles to other areas."

This year, MyoChallenge 2023 (which will also culminate at the NeurIPS meeting in December) requires teams to use the MyoArm to pick up, manipulate, and accurately place common household objects and to use the MyoLegs to either pursue or evade an opponent in a game of tag.

Emo Todorov, an associate professor of computer science and engineering at the University of Washington, has worked on similar biomechanical models as part of the popular MuJoCo physics simulator. (Todorov was not involved with the current Meta research but did oversee Kumar's doctoral work some years back.) He says that MyoSuite's focus on learning general representations means that control strategies "can be useful for a whole family of tasks." He notes that these generalized control strategies are analogous to the neuroscience principle of muscle synergies, in which the nervous system activates groups of muscles at once to build up larger gestures, reducing the computational burden of movement. "MyoSuite is able to construct such representations from first principles," Todorov says.
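The synergy idea Todorov describes can be sketched as a dimensionality reduction: a controller issues a few synergy commands, and a weight matrix expands them into activations for many muscles. The Python below is a minimal illustration using a hypothetical, hand-picked matrix of 6 muscles and 2 synergies; in MyoSuite the corresponding representations are learned, and nothing here reflects its actual models or API.

```python
# HYPOTHETICAL synergy matrix W: 6 muscles x 2 synergies (nonnegative weights).
# Real synergies would be learned or measured; these are fixed by hand for illustration.
SYNERGIES = [
    [0.8, 0.0],
    [0.6, 0.1],
    [0.0, 0.9],
    [0.2, 0.7],
    [0.5, 0.5],
    [0.0, 0.3],
]


def muscle_activations(synergies, commands):
    """Expand k synergy commands into per-muscle activations a = W c, clipped to [0, 1]."""
    activations = []
    for weights in synergies:
        a = sum(w * c for w, c in zip(weights, commands))
        activations.append(min(max(a, 0.0), 1.0))  # muscle activation is bounded in [0, 1]
    return activations


if __name__ == "__main__":
    # The controller chooses 2 numbers; all 6 muscles respond in a coordinated pattern.
    print(muscle_activations(SYNERGIES, [1.0, 0.0]))
    print(muscle_activations(SYNERGIES, [0.5, 1.0]))
```

The controller now chooses two numbers instead of six, which is the computational saving the synergy principle describes; the trade-off is that only activation patterns reachable by combining the synergies are available.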

But if Meta's researchers continue on this track, they may need to give their toddlerlike AI agents more comprehensive physiological models to control. It's all very well to kick some legs around and handle objects, but every parent knows that toddlers don't really understand their toys until the objects have been in their mouths.