We need a moonshot for computing

In its final weeks, the Obama administration released a report that rippled through the federal science and technology community. Titled *Ensuring Long-Term US Leadership in Semiconductors*, it warned that as conventional ways of building chips brushed up against the laws of physics, the United States was at risk of losing its edge in the chip industry. Five and a half years later, in 2022, Congress and the White House collaborated to address that possibility by passing the CHIPS and Science Act, a bold venture patterned after the Manhattan Project, the Apollo program, and the Human Genome Project. Over the course of three administrations, the US government has begun to organize itself for the next era of computing.

Secretary of Commerce Gina Raimondo has gone so far as to directly compare the passage of CHIPS to President John F. Kennedy's 1961 call to land a man on the moon. In doing so, she was evoking a US tradition of organizing the national innovation ecosystem to meet an audacious technological objective, one that the private sector alone could not reach. Before JFK's announcement, there were organizational challenges and disagreement over the best path forward to ensure national competitiveness in space. Such is the pattern of technological ambitions left to their own timelines.

Setting national policy for technological development involves making trade-offs and grappling with unknown future issues. How does a government account for technological uncertainty? What will the nature of its interaction with the private sector be? And does it make more sense to focus on boosting competitiveness in the near term or to place big bets on potential breakthroughs?

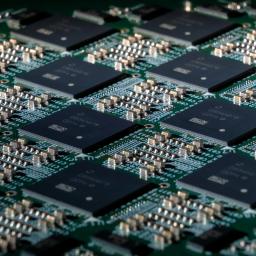

The CHIPS and Science Act designated $39 billion for bringing chip factories, or "fabs," and their key suppliers back to the United States, with an additional $11 billion committed to microelectronics R&D. At the center of the R&D program would be the National Semiconductor Technology Center, or NSTC, envisioned as a "national center of excellence" that would bring the best of the innovation ecosystem together to invent the next generation of microelectronics.

In the year and a half since, CHIPS programs and offices have been stood up, and chip fabrication facilities in Arizona, Texas, and Ohio have broken ground. But it is the CHIPS R&D program that has an opportunity to shape the future of the field. Ultimately, there is a choice to make in terms of national R&D goals: the US can adopt a conservative strategy that aims to preserve its lead for the next five years, or it can orient itself toward genuine computing moonshots. The way the NSTC is organized, and the technology programs it chooses to pursue, will determine whether the United States plays it safe or goes "all in."

Welcome to the day of reckoning

In 1965, the late Intel cofounder Gordon Moore famously predicted that the path forward for computing involved cramming more transistors, or tiny switches, onto flat silicon wafers. Extrapolating from the birth of the integrated circuit seven years earlier, Moore forecast that transistor count would double regularly while the cost per transistor fell. But Moore was not merely making a prediction. He was also prescribing a technological strategy (sometimes called "transistor scaling"): shrink transistors and pack them closer and closer together, and chips become faster and cheaper. This approach not only led to the rise of a $600 billion semiconductor industry but ushered the world into the digital age.

Ever insightful, Moore did not expect that transistor scaling would last forever. He referred to the point when this miniaturization process would reach its physical limits as the "day of reckoning." The chip industry is now very close to reaching that day, if it is not there already. Costs are skyrocketing and technical challenges are mounting. Industry road maps suggest that we may have only about 10 to 15 years before transistor scaling reaches its physical limits, and it may stop being profitable even before that.

To keep chips advancing in the near term, the semiconductor industry has adopted a two-part strategy. On the one hand, it is building "accelerator" chips tailored for specific applications (such as AI inference and training) to speed computation. On the other, firms are building hardware from smaller functional components, called "chiplets," to reduce costs and improve customizability. These chiplets can be arranged side by side or stacked on top of one another. The 3D approach could be an especially powerful means of improving speeds.

This two-part strategy will help over the next 10 years or so, but it has long-term limits. For one thing, it continues to rely on the same transistor-building method that is currently reaching the end of the line. And even with 3D integration, we will continue to grapple with energy-hungry communication bottlenecks. It is unclear how long this approach will enable chipmakers to produce cheaper and more capable computers.

Building an institutional home for moonshots

The clear path forward is to develop alternatives to conventional computing. There is no shortage of candidates, including quantum computing; neuromorphic computing, which mimics the operation of the brain in hardware; and reversible computing, which has the potential to push the energy efficiency of computing to its physical limits. And there are plenty of novel materials and devices that could be used to build future computers, such as silicon photonics, magnetic materials, and superconductor electronics. These possibilities could even be combined to form hybrid computing systems.

None of these potential technologies is new: researchers have been working on them for many years, and quantum computing is certainly making progress in the private sector. But only Washington brings the convening power and R&D dollars to help these novel systems achieve scale. Traditionally, breakthroughs in microelectronics have emerged piecemeal, but realizing new approaches to computation requires building an entirely new "computing stack," from the hardware level up to the algorithms and software. This requires an approach that can rally the entire innovation ecosystem around clear objectives to tackle multiple technical problems in tandem and provide the kind of support needed to "de-risk" otherwise risky ventures.

The NSTC can drive these efforts. To be successful, it would do well to follow DARPA's lead by focusing on moonshot programs. Its research program will need to be insulated from outside pressures. It also needs to foster visionaries, including program managers from industry and academia, and back them with a large in-house technical staff.

The center's investment fund also needs to be thoughtfully managed, drawing on best practices from existing blue-chip deep-tech investment funds, such as ensuring transparency through due-diligence practices and offering entrepreneurs access to tools, facilities, and training.

It is still early days for the NSTC: the road to success may be long and winding. But this is a crucial moment for US leadership in computing and microelectronics. As we chart the path forward for the NSTC and other R&D priorities, we'll need to think critically about what kinds of institutions we'll need to get us there. We may not get another chance to get it right.

Brady Helwig is an associate director for economy and PJ Maykish is a senior advisor at the Special Competitive Studies Project, a private foundation focused on making recommendations to strengthen long-term US competitiveness.