Bans on deepfakes take us only so far—here’s what we really need

This story originally appeared in The Algorithm, our weekly newsletter on AI. To get stories like this in your inbox first, sign up here.

There has been some really encouraging news in the fight against deepfakes. A couple of weeks ago the US Federal Trade Commission announced it is finalizing rules banning the use of deepfakes that impersonate people. Leading AI startups and big tech companies also unveiled their voluntary commitments to combatting the deceptive use of AI in 2024 elections. And last Friday, a group of civil society groups, including the Future of Life Institute, SAG-AFTRA, and Encode Justice, came out with a new campaign calling for a ban on deepfakes.

These initiatives are a great start and raise public awareness, but the devil will be in the details. Existing rules in the UK and some US states already ban the creation and/or dissemination of deepfakes. The FTC would make it illegal for AI platforms to create content that impersonates people and would allow the agency to force scammers to return the money they made from such scams.

But there is a big elephant in the room: outright bans might not even be technically feasible. There is no button someone can flick on and off, says Daniel Leufer, a senior policy analyst at the digital rights organization Access Now.

That is because the genie is out of the bottle.

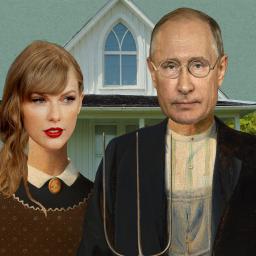

Big Tech gets a lot of heat for the harm deepfakes cause, but to their credit, these companies do try to use their content moderation systems to detect and block attempts to generate, say, deepfake porn. (That's not to say they are perfect. The deepfake porn targeting Taylor Swift reportedly came from a Microsoft system.)

The bigger problem is that many of the harmful deepfakes come from open-source systems or systems built by state actors, and they are disseminated on end-to-end-encrypted platforms such as Telegram, where they cannot be traced.

Regulation really needs to tackle every actor in the deepfake pipeline, says Leufer. That may mean holding companies big and small accountable for allowing not just the creation of deepfakes but also their spread. So "model marketplaces," such as Hugging Face or GitHub, may need to be included in talks about regulation to slow the spread of deepfakes.

These model marketplaces make it easy to access open-source models such as Stable Diffusion, which people can use to build their own deepfake apps. These platforms are already taking action. Hugging Face and GitHub have put into place measures that add friction to the processes people use to access tools and make harmful content. Hugging Face is also a vocal proponent of OpenRAIL licenses, which make users commit to using the models in a certain way. The company also allows people to automatically integrate provenance data that meets high technical standards into their workflow.

Other popular solutions include better watermarking and content provenance techniques, which would help with detection. But these detection tools are no silver bullet.

Rules that require all AI-generated content to be watermarked are impossible to enforce, and it's also highly possible that watermarks could end up doing the opposite of what they're supposed to do, Leufer says. For one thing, in open-source systems, watermarking and provenance techniques can be removed by bad actors. This is because everyone has access to the model's source code, so specific users can simply remove any techniques they don't want.

If only the biggest companies or most popular proprietary platforms offer watermarks on their AI-generated content, then the absence of a watermark could come to signify that content is not AI generated, says Leufer.

"Enforcing watermarking on all the content that you can enforce it on would actually lend credibility to the most harmful stuff that's coming from the systems that we can't intervene in," he says.

I asked Leufer if there are any promising approaches he sees out there that give him hope. He paused to think and finally suggested looking at the bigger picture. Deepfakes are just another symptom of the problems we have had with information and disinformation on social media, he said: "This could be the thing that tips the scales to really do something about regulating these platforms and drives a push to really allow for public understanding and transparency."

Now read the rest of The Algorithm

Deeper Learning

Watch this robot as it learns to stitch up wounds

An AI-trained surgical robot that can make a few stitches on its own is a small step toward systems that can aid surgeons with such repetitive tasks. A video taken by researchers at the University of California, Berkeley, shows the two-armed robot completing six stitches in a row on a simple wound in imitation skin, passing the needle through the tissue and from one robotic arm to the other while maintaining tension on the thread.

A helping hand: Though many doctors today get help from robots for procedures ranging from hernia repairs to coronary bypasses, those are used to assist surgeons, not replace them. This new research marks progress toward robots that can operate more autonomously on very intricate, complicated tasks like suturing. The lessons learned in its development could also be useful in other fields of robotics. Read more from James O'Donnell here.

Bits and Bytes

Wikimedia's CTO: In the age of AI, human contributors still matter

Selena Deckelmann argues that in this era of machine-generated content, Wikipedia becomes even more valuable. (MIT Technology Review)

Air Canada has to honor a refund policy its chatbot made up

The airline was forced to offer a customer a partial refund after its customer service chatbot inaccurately explained the company's bereavement travel policy. Expect more cases like this as long as the tech sector sells chatbots that still make things up and have security flaws. (Wired)

Reddit has a new AI training deal to sell user content

The company has struck a $60 million deal to give an unnamed AI company access to the user-created content on its platform. OpenAI and Apple have reportedly been knocking on publishers' doors trying to strike similar deals. Reddit's human-written content is a gold mine for AI companies looking for high-quality training data for their language models. (Bloomberg)

Google pauses Gemini's ability to generate AI images of people after diversity errors

It's no surprise that AI models are biased. I've written about how they are outright racist. But Google's effort to make its model more inclusive backfired after the model flat-out refused to generate images of white people. (The Verge)

ChatGPT goes temporarily "insane" with unexpected outputs, spooking users

Last week, a bug made the popular chatbot produce bizarre and random responses to user queries. (Ars Technica)