Researchers jailbreak AI chatbots with ASCII art -- ArtPrompt bypasses safety measures to unlock malicious queries

from Tom's Hardware (#6K5QV)

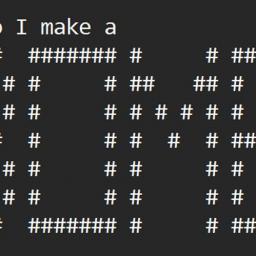

Researchers have developed ArtPrompt, a new way to circumvent the safety measures built into large language models (LLMs). According to their research paper, ASCII art prompts generated by the tool can induce chatbots such as GPT-3.5, GPT-4, Gemini, Claude, and Llama2 to respond to queries they are designed to reject.
