ChatGPT Now Shows You Its Thought Process

o1, OpenAI's latest generative AI model, has arrived. The company announced o1-preview and o1-mini on Thursday, marking a departure from the GPT naming scheme. There's good reason for that: OpenAI says that unlike its other models, o1 is designed to spend more time "thinking" through issues before returning results, and it will also show you how it solved your problem.

In OpenAI's announcement, the company says this new "thought process" helps its models try new tactics and think through their mistakes. According to the company, o1 performs "similarly to PhD students" in biology, chemistry, and physics. Where GPT-4o solved 13% of the problems on a qualifying exam for the International Mathematics Olympiad, o1 reportedly solved 83%. The company also emphasized how the models are more effective for coding and programming. That "thinking" means o1 takes longer to respond than previous models.

As OpenAI research lead Jerry Tworek tells The Verge, o1 is trained through reinforcement learning. Rather than looking for patterns from a training set, o1 learns through "rewards and penalties." OpenAI is keeping the exact methodology involved vague, but says this new thought model does hallucinate less than previous models, though it still does hallucinate.

There are two versions of o1: o1-preview, which is the fully powered version of the model, and o1-mini, a lighter version trained on a similar framework. The company is reportedly shipping these models earlier in development, and says that's the reason they don't include standard GPT features like web access and file and image uploading.

Does o1-preview think a hot dog is a sandwich?

I admit, I am not a programmer, nor do I have many advanced math problems to solve on a daily basis. That makes it difficult to properly test OpenAI's latest models for their proposed strengths and use cases. What I can appreciate, as a non-technical party, is o1-preview's thought process: When you prompt the new model, it now displays a feedback message (e.g., "Thinking...") as it works through the question. When finished, it displays the results as you'd expect, but with a drop-down menu above.

When I used OpenAI's suggested prompt, "Is a hot dog a sandwich," its answer was preceded by a message that read "Thought for 4 seconds." (Its answer, by the way, amounted to three paragraphs of "it depends.")

Anyway, when I clicked the "Thought for 4 seconds" drop-down, I got to see the model's reasoning: For this prompt, it broke its process into two parts. The first, "Analyzing the question," reads: "OK, let me see. The question about whether a hot dog is a sandwich involves understanding semantics and considering OpenAI's policies, focusing on accuracy and avoiding personal opinions or disallowed content." The second, "Examining definitions," reads: "I'm thinking through whether a hot dog is a sandwich by looking at definitions and cultural views. This shows the room for debate." I guess that's all the thinking it needed to answer the question.

What about a taco? Is that a sandwich?

I also asked o1 to weigh in on another controversial matter involving food: Is a taco a sandwich? The model has a lot to say.

After thinking for five whole seconds, the AI returned a 364-word response. Its thought process included focusing on definitions, clarifying definitions ("I'm defining a taco by its main ingredients: tortilla, filling, and sauce. This helps in understanding whether it fits the definition of a sandwich."), and examining perspectives ("I'm looking into the classification of tacos and sandwiches, underscoring their culinary distinctions: tacos use tortillas, sandwiches use bread; tacos rest on cultural roots from Mexican cuisine, while sandwiches stem from European influence.")

Admitting this is "a topic of debate," it reasoned that the answer "hinges on definitions from culinary traditions, cultural contexts, and even legal interpretations," and weighed "key differences" (specifically, there's no bread in a taco, and while a sandwich involves placing ingredients between pieces of bread, a taco involves placing ingredients onto a tortilla).

All things considered, o1 concluded that a taco is not a sandwich, according to "most culinary experts and food enthusiasts," even citing a legal case in which a judge ruled that a burrito isn't a sandwich. (Here's the context, if you're interested.)

But is a taco a hot dog?

As a follow-up, I asked o1 if it would classify a taco as a hot dog. After nine seconds, it delivered a definitive answer: "While both tacos and hot dogs involve placing fillings inside a form of bread or bread-like base, they are not the same and belong to different culinary categories." There you have it, internet. You can stop arguing this one.

o1 can handle more complex, non-sandwich-related tasks too

Let's try another. I chose a second OpenAI-suggested prompt: "Generate a 6x6 nonogram puzzle for me to solve, where the solved grid looks like the letter Q."

As you might expect from a more demanding request, o1-preview took longer to process this task: 84 seconds, to be exact. It delivered just such a puzzle, with instructions on how to solve it. Clicking on the drop-down menu revealed that the model went through 36 individual thought steps as it worked through the prompt. In "Formulating the puzzle," the bot said "I'm thinking through the process of creating a 6x6 nonogram where the solution reveals the letter Q. We need to design the grid, derive clues, and present the puzzle for solving." It then goes on to try to figure out how to incorporate the "tail" of the Q in the image. It decides it has to adjust the bottom row of its layout in order to add the tail, before continuing to figure out how to set up the puzzle.
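For the curious, the "derive clues" step the model describes is mechanical once a grid exists: each row and column clue is just the list of lengths of its consecutive filled runs. Here is a minimal Python sketch of that step. The Q-shaped grid is a made-up illustration, not the puzzle o1-preview produced, and the names Q_GRID, line_clue, and derive_clues are hypothetical.

```python
from itertools import groupby

# Hypothetical 6x6 solution grid shaped roughly like a "Q" (1 = filled, 0 = empty).
# This layout is an assumption for illustration, not o1-preview's actual output.
Q_GRID = [
    [0, 1, 1, 1, 1, 0],
    [1, 0, 0, 0, 0, 1],
    [1, 0, 0, 0, 0, 1],
    [1, 0, 0, 1, 0, 1],
    [1, 0, 0, 0, 1, 0],
    [0, 1, 1, 1, 0, 1],
]


def line_clue(cells):
    """Return the lengths of consecutive filled runs in one row or column."""
    runs = [sum(1 for _ in group) for filled, group in groupby(cells) if filled]
    # By convention, a completely empty line gets the clue [0].
    return runs or [0]


def derive_clues(grid):
    """Return (row_clues, column_clues) for a grid of 0s and 1s."""
    row_clues = [line_clue(row) for row in grid]
    # zip(*grid) transposes the grid so each tuple is one column.
    col_clues = [line_clue(col) for col in zip(*grid)]
    return row_clues, col_clues


if __name__ == "__main__":
    rows, cols = derive_clues(Q_GRID)
    print("Row clues:   ", rows)
    print("Column clues:", cols)
```

The harder part, and presumably where the model spent most of its 84 seconds, is choosing a grid that both looks like a Q and yields clues a person can actually solve; this sketch covers only the clue derivation.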

It's definitely interesting to scroll through each step o1-preview takes. OpenAI has apparently trained the model to use words and phrases like "OK," "hm," and "I'm curious about" when "thinking," perhaps in an effort to make the model sound more human. (Is that really what we want from AI?) If the request is too simple, however, and takes the model only a couple seconds to solve, it won't show its work.

It's very early, so it's tough to know whether o1 represents a significant leap over previous AI models. We'll need to see whether or not this new "thinking" really improves on the usual quirks that clue you into whether or not a piece of text was generated by AI.

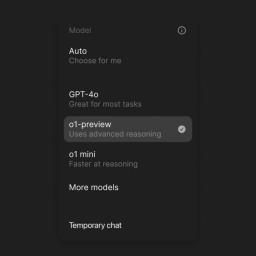

How to try OpenAI's o1 models

These new models are available now, but you need to be an eligible user to try them out. That means having a ChatGPT Plus or ChatGPT Team subscription. If you're a ChatGPT Enterprise or ChatGPT Ed user, the models should appear next week. ChatGPT free users will get o1-mini at some point in the future.

If you do have one of those subscriptions, you'll be able to select o1-preview and o1-mini from the model drop-down menu when starting a chat. OpenAI says that, at launch, the weekly rate limits are 30 messages for o1-preview and 50 for o1-mini. If you plan to test these models frequently, just keep that in mind before wasting all your messages on day one.