LinkedIn Is Using Your Data to Train AI (but You Can Stop It)

Generative AI models aren't born out of a vacuum. In a sense, these systems are built piece by piece using massive amounts of training data, and always need more and more information to keep improving. As the AI race heats up, companies are doing whatever they can to feed their models more data, and many are using our data to do so, sometimes without asking for our explicit permission first.

LinkedIn is the latest apparent perpetrator of this practice: It seems everyone's "favorite" career-focused social media platform has been using our data to train their AI models without asking for permission or disclosing the practice first. Joseph Cox from 404Media initially reported the story, but you don't need to be a journalist to investigate it for yourself.

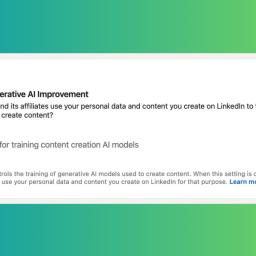

Just head to LinkedIn, click your profile and go to Settings & Privacy. Here, you'll notice an interesting field: Data for Generative AI Improvement. This setting asks, "Can LinkedIn and its affiliates use your personal data and content you create on LinkedIn to train generative AI models that create content?" Oh, what's this? It's set to On by default? Thanks for asking, LinkedIn.

What is LinkedIn using your data for?

If you click the Learn More link, you'll see LinkedIn's explanation for what it's doing with your data. When enabled, your profile data and the content of your posts can be used to train or "fine-tune" the generative AI models of both LinkedIn and its affiliates. Who are these affiliates? Well, LinkedIn says some of their models are provided by Microsoft's Azure OpenAI, but they don't elaborate beyond that, as far as I can tell.

The company makes a point in this explanation to say that only the AI models trained for generating content, such as its AI-powered writing assistant, use your data, rather than AI models responsible for personalizing LinkedIn per user, or those used for security. It also says it aims to "minimize" the personal data used for training sets, including by using "privacy enhancing technologies" to obscure or remove personal data from these databases, but doesn't say how it does so or to what degree. That said, they offer a form for opting out of the use of your data for "non-content generating GAI models." So, which is it, LinkedIn?

Interestingly, Adobe had the opposite approach when users complained about its policy of accessing users' work to train AI models: They were adamant user data wasn't used for generative AI models, but for other types of AI models. Either way, these companies don't seem to get that people would prefer their data to be omitted from all AI training sets, especially when they weren't asked about it in the first place.

LinkedIn says it keeps your data as long as you do: If you delete your data from LinkedIn, either by deleting a post or through LinkedIn's data access tool, the company will delete it from their end, and thus stop using it for training AI. The company also clarifies it does not use the data of users in the EU, EEA, or Switzerland.

In my view, this practice is ridiculous. I think it's unconscionable to opt your users into training AI models with their data without presenting them the option first, before even updating the terms of service. I don't care that weak data privacy laws allow companies like LinkedIn to store everything we post or upload on their platforms: If you want to use someone's post to make your writing bot better, ask them first.

I've reached out to LinkedIn, specifically asking about some of the inconsistencies in its policy, and to learn how long this process has been going on. The company responded to my requests with a link to a page outlining updates to their user agreement and privacy policy, but the former does not appear to have been updated at this time. LinkedIn says the supposed changes will go into effect Nov. 20, but the current user agreement is from 2022. In addition, the support articles I've referenced in this piece were updated seven days ago at the time of writing.

How to opt out of LinkedIn's AI training

To continue using LinkedIn without handing over your data for training its AI models, head back to Settings & Privacy > Data for Generative AI Improvement. Here, you can click the toggle to Off to opt out. You can also use this form to "object to or request the restriction of" processing your data for "non-content generating GAI models."

This is not retroactive: Any training that has already occurred cannot be undone, so LinkedIn won't be removing the effects of training on your data from its models.

When you opt out, your data can still be processed by the AI, but only when you interact with the AI models: LinkedIn says it can use your inputs to process your request and include any of the data in that input in the AI's output, but that's just how AI models work. If LinkedIn couldn't access this data after you opt out, the model would largely be useless.