|

by Rich Brueckner on (#3YJ1P)

Xiaoming Liu from Michigan State University gave this talk at the HPC User Forum in Detroit. "At Michigan State University, researchers are involved in the work that will someday make self-driving vehicles not just a reality, but commonplace. Working as part of a project known as CANVAS – Connected and Autonomous Networked Vehicles for Active Safety – the scientists are focusing much of their energy on key areas, including recognition and tracking objects such as pedestrians or other vehicles; fusion of data captured by radars and cameras; localization, mapping and advanced artificial intelligence algorithms that allow an autonomous vehicle to maneuver in its environment; and computer software to control the vehicle."The post Video: Computer Vision for Autonomous Vehicles appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-03 08:45 |

|

by Rich Brueckner on (#3YGE5)

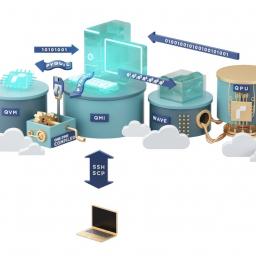

Over at the Rigetti Blog, Chad Rigetti writes that the company just rolled out Quantum Cloud Services (QCS), the "only quantum-first cloud computing platform." With QCS, for the first time, quantum processors are tightly integrated with classical computing infrastructure to deliver the application-level performance needed to achieve quantum advantage. Users access these integrated systems through their dedicated Quantum Machine Image.The post Rigetti Rolls Out Quantum Cloud Services appeared first on insideHPC.

|

|

by Rich Brueckner on (#3YFPX)

In this podcast, the Radio Free HPC team looks at the latest server watch numbers from IDC. The takeaway? The server industry is up 43 percent year over year. Component prices have gone up, so there may be multiple contributing factors implying richer configurations are being deployed. Dan thinks IDC might be adjusting their model, but we can't be sure from here. He doesn't see how a company like Inspur can jack their business by 112 percent in a single year. This is simply unprecedented growth. Welcome to the Server Business in the Age of Cloud. The post Radio Free HPC Looks at IDC Server Market Numbers Reflecting Remarkable Growth from Cloud appeared first on insideHPC.

|

|

by staff on (#3YFPY)

In this guest post, Intel Data Center Group's Trish Damkroger covers how to scale your HPC environment for AI workloads. "Intel’s HPC interoperable framework assists developers with tools to modernize applications for advanced workloads and support for development languages like Python, C++, and Fortran."The post Scale Your HPC Environment for AI Workloads appeared first on insideHPC.

|

|

by Rich Brueckner on (#3YFK3)

Bob Hood from InuTeq gave this talk at the HPC User Forum. "NASA’s High-End Computing Capability Project is periodically asked if it could be more cost effective through the use of commercial cloud resources. To answer the question, HECC’s Application Performance and Productivity team undertook a performance and cost evaluation comparing three domains: two commercial cloud providers, Amazon and Penguin, and HECC’s in-house resources—the Pleiades and Electra systems."The post Video: Suitability of Commercial Clouds for NASA’s HPC Applications appeared first on insideHPC.

|

|

by Rich Brueckner on (#3YDT6)

William Edsall from Dow gave this talk at the HPC User Forum in Detroit. "At Dow, Information Research provides leading edge information technology capability and information resources to meet strategic R&D initiatives. IR delivers a combination of Information Technology, R&D experience and physical science knowledge to develop capabilities and analysis that help deliver R&D growth and innovation commitments. IR overall is focused on making Dow R&D the best at using information technology and informatics for a competitive advantage."The post Gaining a Competitive Edge with HPC Cloud Computing at Dow appeared first on insideHPC.

|

|

by staff on (#3YDT8)

Over at Purdue, Adrienne Miller writes that the university’s powerful Conte supercomputer, which retired on August 1 after five years of service, was crucial to the development of powerful nanotechnology tools. "Conte was simply essential for all this development," says Tillmann Kubis, a research assistant professor of electrical and computer engineering who led the development of the most recent iteration of the tool, known as NEMO5. "The software, which consists of more than 700,000 lines of code, has been commercialized through a partnership with Silvaco, Inc. Kubis estimates that more than 80 percent of NEMO5 was developed on Conte." The post Workhorse Conte Cluster at Purdue goes to Pasture appeared first on insideHPC.

|

|

by Rich Brueckner on (#3YCBR)

Irene Qualters gave this talk at the HPC User Forum in Detroit. "For over three decades, NSF has been a leader in providing the computing resources our nation's researchers need to accelerate innovation," said NSF Director France Córdova. "Keeping the U.S. at the forefront of advanced computing capabilities and providing researchers across the country access to those resources are key elements in maintaining our status as a global leader in research and education. This award is an investment in the entire U.S. research ecosystem that will enable leap-ahead discoveries."The post Leadership Computing and NSF’s Computational Ecosystem appeared first on insideHPC.

|

|

by Rich Brueckner on (#3YC94)

New York University is seeking an HPC Specialist in our Job of the Week. "The selected candidate will be responsible for supporting the NYU High Performance Computing (HPC) systems as a member of the HPC Services team. This will require working directly with faculty and other research professionals in support of the HPC clusters."The post Job of the Week: HPC Specialist at New York University appeared first on insideHPC.

|

|

by staff on (#3YAA1)

Over at the Lenovo Blog, the company has unveiled a new ThinkSystem based on a modular infrastructure targeted at AI workloads. "By developing the ThinkSystem SR670 platform with integrated modularity, Lenovo has recognized that technologies around the CPU are evolving rapidly and driving massive performance gains for many types of workloads. System designs need to evolve to provide the capability and flexibility for customers to add more revolutionary technologies to the system faster. Customers shouldn’t be gated by a system that can’t change and grow with them as they build their intelligence platforms of the future." The post New Modular Lenovo ThinkSystem Accelerates Ai Performance appeared first on insideHPC.

|

|

by staff on (#3Y9Z1)

Dr. Jack Dongarra has been selected to receive the 2019 SIAM/ACM Prize in Computational Science and Engineering. The award will be presented at the SIAM Conference on Computational Science and Engineering (CSE19) in February, 2019. "This award is presented every two years by SIAM and the Association for Computing Machinery in the area of computational science in recognition of outstanding contributions to the development and use of mathematical and computational tools and methods for the solution of science and engineering problems."The post Jack Dongarra Awarded 2019 SIAM/ACM Prize in Computational Science and Engineering appeared first on insideHPC.

|

|

by Sarah Rubenoff on (#3Y9Z2)

HPC applications and workloads have been constrained by limited on-premises infrastructure capacity, high capital expenditures and the constant need for technology refreshes. But there may be an answer: running your HPC workloads in the cloud. "According to the report, hundreds of companies in life sciences, financial services, manufacturing, energy and geo sciences, media and entertainment are solving complex challenges and speeding up time to results using HPC on AWS."The post HPC Cloud Drives Innovation & Accelerates Time to Results appeared first on insideHPC.

|

|

by Rich Brueckner on (#3Y9TA)

Jean-Marc Denis from EPI gave this talk at the HPC User Forum in Detroit. "The European Commission announces the selection of the Consortium European Processor Initiative to co-design, develop and bring on the market a European low-power microprocessor. This technology, with drastically better performance and power, is one of the core elements needed for the development of the European Exascale machine."The post Video: The European Processor Initiative appeared first on insideHPC.

|

|

by staff on (#3Y8A8)

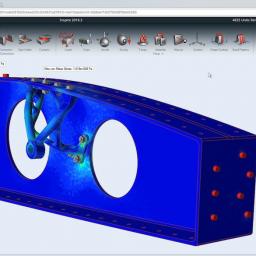

Today Altair announced the release and immediate availability of the Altair Inspire simulation-driven design platform, and the Altair 365 cloud collaboration platform. The Altair Inspire platform enables manufacturers to leverage simulation to drive the entire design process, accelerating the pace of innovation and reducing time-to-market.The post Altair Rolls Out Inspire and Altair 365 for Product Development appeared first on insideHPC.

|

|

by staff on (#3Y804)

Today RAID Inc. announced additions to the JBOD series of its enterprise storage solutions with the availability of the 4U 106-Bay JBOD with 12Gb/s SAS drives that holds up to an unprecedented 1.2PB of storage capacity. "HPC challenges are increasingly demanding and RAID Inc. is able to meet these demands by offering the highest-performing and most efficient data storage solutions that solve key challenges," said Marc DiZoglio, president of RAID Inc. "Building on 25+ years of storage design experience, RAID Inc. is committed to delivering true big data storage solutions with best-in-class performance, features, and unprecedented customer service." The post RAID Inc. Announces High Density EBOD with 106 slots in 4U appeared first on insideHPC.

|

|

by staff on (#3Y802)

Today DDN announced that it has completed the acquisition of Tintri for $60 million. "Tintri offers the best analytics with predictive, efficient and intuitive insight for virtualized environments and databases. Combining these capabilities with DDN’s market-winning performance, efficiency and integration at scale for AI, technical computing and the enterprise is incredibly enabling for customers." The post DDN Acquires Tintri for High-Speed Virtualization appeared first on insideHPC.

|

|

by Rich Brueckner on (#3Y7HN)

Leonardo Flores from the European Commission presents: EuroHPC and European HPC Strategy. "EuroHPC is a joint collaboration between European countries and the European Union about developing and supporting exascale supercomputing by 2022/2023. The EuroHPC declaration, signed on March 23, 2017 by 7 countries, marked the beginning of EuroHPC. Meanwhile there are 21 countries that have joined EuroHPC." The post Video: EuroHPC and European HPC Strategy appeared first on insideHPC.

|

|

by Rich Brueckner on (#3Y5C8)

Bob Sorensen from Hyperion Research gave this talk at the HPC User Forum in Detroit. "The four geographies actively developing Exascale machines are: USA, China, Europe, and Japan. While it is important to emphasize that this is not a race, the first machine to achieve Exascale in terms of sustained LINPACK should be the A21 Aurora system at Argonne in 2021. It will be followed soon after by machines from all the other active projects."The post Exascale Update from Hyperion Research appeared first on insideHPC.

|

|

by Rich Brueckner on (#3Y5GK)

In this podcast, the Radio Free HPC team looks at one of the most massive hacks ever, the NotPetya cyber attack on shipping company Maersk and their partners. "This story, featured in Wired magazine, should send chills down the spines of anyone out there who isn’t religiously updating their machines." The post Radio Free HPC Looks at the Frightening NotPetya Cyber Attack appeared first on insideHPC.

|

|

by Rich Brueckner on (#3Y577)

Today DDN announced a new data management solution at the University of Michigan that will help support research projects spanning machine learning, transportation, precision health, chemistry, computational flow dynamics, life sciences, physics and public policy. "DDN storage is performance-optimized and able to handle large volumes of data," said ARC-TS director Brock Palen. "We can scale DDN storage capacity in small increments to reduce cost, while maintaining the ability to grow to more than 1,700 drives behind a single pair of controllers and meeting rigorous performance requirements – that’s pretty unusual in the industry." The post DDN Powers Breakthroughs in Autonomous Cars at the University of Michigan appeared first on insideHPC.

|

|

by Rich Brueckner on (#3Y2NA)

Dr. Rommie E. Amaro from UC San Diego gave this talk at the Blue Waters Symposium. "In this talk I will discuss how the BlueWaters Petascale computing architecture forever altered the landscape and potential of computational biophysics. In particular, new and emerging capabilities for multiscale dynamic simulations that cross spatial scales from the molecular (angstrom) to cellular ultrastructure (near micron), and temporal scales from the picoseconds of macromolecular dynamics to the physiologically important time scales of organelles and cells (milliseconds to seconds) are now possible."The post Computational Biophysics in the Petascale Computing Era appeared first on insideHPC.

|

|

by Rich Brueckner on (#3Y2NB)

Today the Numerical Algorithms Group (NAG) announced that it has been recognized as one of the first Chapters in the new Women in High Performance Computing (WHPC) Pilot Program. "The WHPC Chapter Pilot will enable us to reach an ever increasing community of women, provide these women with the networks that we recognize are essential for them excelling in their career, and retaining them in the workforce," says Dr. Sharon Broude Geva, WHPC’s Director of Chapters and Director of Advanced Research Computing (ARC) at the University of Michigan (U-M). "At the same time we envisage that the new Chapters will be able to tailor their activities to the needs of their local community as we know that there is no ‘one size fits all’ solution to diversity." The post NAG Awarded Chapter status by Women-in-HPC appeared first on insideHPC.

|

|

by staff on (#3Y0VP)

In this video from the 2016 HPC User Forum in Austin, Wayne O Miller from LLNL announces a new RFP for the HPC4Mfg program. "Today the HPC4Mfg group at LLNL announced nearly $3 million for 13 projects to stimulate the use of high performance supercomputers to advance U.S. manufacturing. Manufacturer-laboratory partnerships help the U.S. bring technologies to the market faster and gain a competitive advantage in the global economy." The post DOE Selects 13 Projects to Advance Manufacturing with HPC appeared first on insideHPC.

|

|

by staff on (#3XZAG)

Today Mellanox announced that HDR 200 gigabit per second InfiniBand has been selected to accelerate the new large-scale supercomputer to be deployed at the Texas Advanced Computing Center (TACC). The system, named Frontera, will leverage HDR InfiniBand to deliver the highest application performance, scalability and efficiency.The post Mellanox to Power Frontera Supercomputer with HDR 200G InfiniBand appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XZAJ)

In this video, Thomas Francis from the UberCloud leads a discussion on how engineers and IT in the various organizations can benefit from Cloud computing. "UberCloud's pre-packaged ANSYS cloud solution comes with a fully interactive desktop environment, accessed through a secure browser connection. You can leverage ANSYS’s powerful GUI-based tools such as Design Modeler and Workbench for iterative analysis, and you can also run batch or background jobs when needed using RSM."The post UberCloud moves HPC Workloads to the Cloud with ANSYS Discovery Live appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XXZY)

EAGE has posted the agenda for their first-ever EAGE Workshop HPC for Upstream in Latin America. The event takes place Sept. 21-22 in Santander, Colombia. "Unlike any time in history, we are witnessing a rush to improve speed of computation for huge amounts of data. The main objectives of this workshop are to reinforce the best HPC practices, tools, and techniques in computing architecture, system level, algorithm design, application development, and people training; discuss the harder impediments for the adoption and the growth of HPC solutions in the O&G industry; and point the trends, and opportunities for the near future."The post Agenda Posted: EAGE Workshop HPC for Upstream in Latin America appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XY00)

LBNL is seeking a Computer Science Postdoctoral Scholar in our Job of the Week. "Berkeley Lab’s Computational Research Division has an opening for a Computer Science Postdoctoral Scholar. Develop performance modeling and analytical capabilities and tools for manycore and GPU-accelerated supercomputers and apply them to distributed memory Office of Science applications running on such platforms."The post Job of the Week: Computer Science Postdoctoral Scholar at LBNL appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XWET)

In this video from PASC18, Thomas Schulthess provides an update on CSCS. "CSCS develops and operates cutting-edge high-performance computing systems as an essential service facility for Swiss researchers. These computing systems are used by scientists for a diverse range of purposes – from high-resolution simulations to the analysis of complex data."The post Video: CSCS Update for 2018 appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XWAJ)

Exascale computing continues to work its way into the consciousness of wider and more diverse audiences, creating demand for more explanation of what it is and what the U.S. initiatives are that have forged the strategic foundation for making exascale in the U.S. a reality. "Two terms that often land in the middle of these conversations begging for some clarification are the Exascale Computing Initiative (ECI) and the Exascale Computing Project (ECP)."The post The Strategic Foundation of Exascale Computing in the USA appeared first on insideHPC.

|

|

by staff on (#3XWAK)

Today Vyasa Analytics announced it has joined the NVIDIA Inception program, which is designed to nurture startups revolutionizing industries with advancements in AI and data sciences. "Vyasa is a deep learning company specializing in the life sciences and healthcare spaces. Vyasa’s secure, highly-scalable deep learning software, Cortex, allows users to apply deep learning in specialized ways to large scale data sets like text, images, quantitative data and chemical structures. Cortex can integrate and analyze data for key use cases such as business development, competitive intelligence, EHR analytics, compliance/fraud detection, crystal morphology classification for formulation, drug repurposing and de novo compound design." The post Vyasa Analytics Joins NVIDIA Inception Program appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XTEA)

In this video from the Blue Waters Symposium, Roland Haas from NCSA presents: Tutorial: How to use Jupyter Notebooks. "Jupyter notebooks provide a web-based interface to Python, R, Julia and other languages. They allow code, code output, and documentation to be mixed in a single document making it possible to contain self-documented workflows. Focusing on Python I will show how to use Jupyter notebooks on Blue Waters to explore data, produce plots and analyze simulation output using numpy, matplotlib and time permitting, I will show how to use notebooks on login nodes and on compute nodes as well as, time permitting, how to use parallelism inside of Jupyter notebooks." The post Tutorial: "How to use Jupyter Notebooks" appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XT4H)

Over at the NVIDIA Blog, Chris Kawalek writes that Microsoft Azure is now a supported NVIDIA GPU Cloud (NGC) platform. "This means that data scientists, researchers and developers that use NVIDIA GPU instances on Microsoft Azure will be able to jumpstart their AI and HPC projects with a wide range of GPU-optimized software available at no additional charge through NGC. Thousands more developers, data scientists and researchers can now jumpstart their GPU computing projects, following today’s announcement."The post NVIDIA GPU Cloud comes to Microsoft Azure appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XQRJ)

Today the HPC-AI Advisory Council announced the winning teams of the first Asia-Pacific HPC-AI Competition during a live award ceremony in Singapore. The award ceremony recognized the top three winners and meritorious performers from amongst 18 teams representing prestigious universities throughout Asia-Pacific.The post Student Teams Step up to 2018 APAC HPC-AI Student Competition appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XQRM)

In this video from the Dell EMC HPC Community Meeting, Venkatesh Ramanathan from PayPal describes how HPC innovation is helping the company invent new ways to make money safe and accessible for all. "Venkatesh is a senior data scientist at PayPal where he is working on building state-of-the-art tools for payment fraud detection." The post How Dell EMC Powers HPC at Paypal appeared first on insideHPC.

|

|

by staff on (#3XQF8)

Today the National Science Foundation announced a $60 million award to TACC for the deployment of Frontera, a new machine that will be one of the fastest supercomputers ever available to academia. "The new Frontera system represents the next phase in the long-term relationship between TACC and Dell EMC, focused on applying the latest technical innovation to truly enable human potential," said Thierry Pellegrino, vice president of Dell EMC High Performance Computing. "The substantial power and scale of this new system will help researchers from Austin and across the U.S. harness the power of technology to spawn new discoveries and advances in science and technology for years to come." The post New Frontera supercomputer at TACC to push the frontiers of science appeared first on insideHPC.

|

|

by staff on (#3XNNS)

The Garvan Institute of Medical Research has selected Dell EMC to deliver an HPC system for Garvan’s Data Intensive Computer Engineering (DICE) group, designed to push scientific boundaries and transform the way genomic research is currently performed in Australia. "Genomics, the study of information encoded in an individual’s DNA, allows researchers to study how genes impact health and disease. When the first human genome was sequenced, the project took over 10 years and cost almost US$3 billion. In recent years, extraordinary advancements in DNA sequencing have made the analysis of whole human genomes viable, and today, Garvan can sequence up to 50 genomes a day at a base price of around US$1,000."The post Dell EMC Does Supercomputing Genomics Down Under appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XN6W)

New York University Arts and Science is seeking a talented HPC Specialist to join their Department of Biology in our Job of the Week. "This individual will provide technical leadership in design, development, installation and maintenance of hardware and software for the central High-Performance Computing and Biology Department server systems and/or scientific computing services at New York University. Plan, design and direct implementation of the Linux operating system's hardware, cluster management software, scientific computing software and/or network services. Analyze performance of computing systems; plan new system configurations; direct implementation of operating system enhancements that improve reliability and performance."The post Job of the Week: HPC Specialist at New York University appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XMS1)

Maurizio Pierini from CERN gave this talk at PASC18. "We investigate the possibility of using generative models (e.g., GANs and variational autoencoders) as analysis-specific data augmentation tools to increase the size of the simulation data used by the LHC experiments. With the LHC entering its high-luminosity phase in 2025, the projected computing resources will not be able to sustain the demand for simulated events. Generative models are already investigated as the mean to speed up the centralized simulation process."The post Generative Models for Application-Specific Fast Simulation of LHC Collision Events appeared first on insideHPC.

|

|

by staff on (#3XK99)

Today Penguin Computing announced that it is expanding its global headquarters in Fremont, California to become a two-building campus with a new, state-of-the-art production facility, along with creation of both a benchmarking and innovation lab and a customer briefing center, all expected to be open by the end of the year. "This is an exciting milestone for Penguin Computing and helps position the company to respond to the changing HPC, AI, and storage landscape and the increasing demand for our services and technologies." The post Under New Management, Penguin Computing is Coming Back to HPC in a Big Way appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XK58)

Researchers are using Argonne supercomputers to better understand how charged particles emanating from Jupiter’s magnetosphere power northern and southern lights, a celestial version of the Aurora Borealis, on Ganymede, Jupiter’s largest moon. "This work confirms and furthers our understanding of electron physics in magnetospheric dynamics. It sheds light on how the Earth’s magnetic field prevents the solar wind from burning the Earth’s atmosphere to a crisp and the future of predictive technologies for space weather." The post Supercomputing "Northern Lights" on Other Planets appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XK0G)

"Machine learning enables systems to learn automatically, based on patterns in data, and make better searches, decisions, or predictions. Machine learning has become increasingly important to scientific discovery. Indeed, the U.S. Department of Energy has stated that 'machine learning has the potential to transform Office of Science research best practices in an age where extreme complexity and data overwhelm human cognitive and perception ability by enabling system autonomy to self-manage, heal and find patterns and provide tools for the discovery of new scientific insights.'" The post Video: Overview of Machine Learning Methods appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XHCM)

In this podcast, the Radio Free HPC team looks at the latest developments in processor technology coming out of the recent Hot Chips conference. "The HOT CHIPS conference typically attracts more than 500 attendees from all over the world. It provides an opportunity for chip designers, computer architects, system engineers, press and analysts, as well as attendees from national laboratories and academia to mix, mingle and see presentations on the latest technologies and products."The post Radio Free HPC Looks at Hot Chips for 2018 appeared first on insideHPC.

|

|

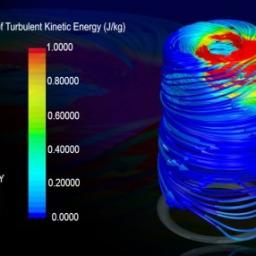

by Rich Brueckner on (#3XHAP)

Over at The UberCloud, Wolfgang Gentzsch writes that researchers are using HPC in the Cloud in a revolutionary new way for the design of bioreactors. "It’s amazing to think of all the products created in bioreactors. The medications we take, the beer we drink and the yogurt we eat are all made in bioreactors optimized for the manufacturing of these products. Unfortunately, optimizations take a lot of work and data. In a new HPC Cloud project, engineers from ANSYS with support from UberCloud performed an extensive Design of Experiments in Microsoft’s Azure Cloud."The post UberCloud Works with ANSYS and Azure to Optimize Bioreactors appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XG1K)

Valentin Clement from the Center for Climate System Modeling in Switzerland gave this talk at PASC18. "In order to profit from emerging high-performance computing systems, weather and climate models need to be adapted to run efficiently on different hardware architectures such as accelerators. This is a major challenge for existing community models that represent very large code bases written in Fortran. We introduce the CLAW domain-specific language (CLAW DSL) and the CLAW Compiler that allows the retention of a single code written in Fortran and achieve a high degree of performance portability." The post The CLAW DSL: Abstractions for Performance Portable Weather and Climate Models appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XFYT)

Berkeley Lab is seeking a Computer Science Postdoctoral Scholar in our Job of the Week. "Berkeley Lab’s Computational Research Division has an opening for a Computer Science Postdoctoral Scholar. Develop performance modeling and analytical capabilities and tools for manycore and GPU-accelerated supercomputers and apply them to distributed memory Office of Science applications running on such platforms."The post Job of the Week: Computer Science Postdoctoral Scholar at Berkeley Lab appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XEBY)

"We invite researchers and professionals to take part in this workshop to discuss the challenges of Machine Learning, AI and HPC, and share their insights, use cases, tools and best practices. HPML is held in conjunction with the 30th edition of SBAC-PAD. SBAC-PAD is an international conference on High Performance Computing started in 1987 in which scientists and researchers present recent findings in the fields of parallel processing, distributed computing and computer architecture."The post Agenda Published: High Performance Machine Learning Workshop in France appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XE7J)

Emma Haruka gave this talk at Google Next. "Google Cloud Platform (GCP) is ready for High Performance Computing (HPC) applications. Google Compute Engine (GCE) offers flexible network and virtual machine (VM) configuration options to run HPC workloads. This session explores why GCP is a good place to run HPC applications and save money. Topics will include CPU, GPU, and memory offerings, as well as underlying network architecture, preemptible VMs, and storage options. You will also learn how Google designs their cloud infrastructure and why it works for HPC workloads."The post Video: Introduction to HPC on the Google Cloud Platform appeared first on insideHPC.

|

|

by staff on (#3XE7M)

SYCL is an open standard developed by the Khronos Group that enables developers to write code for heterogeneous systems using standard C++. Developers are looking at how they can accelerate their applications without having to write optimized processor specific code. SYCL is the industry standard for C++ acceleration, giving developers a platform to write high-performance code in standard C++, unlocking the performance of accelerators and specialized processors from companies such as AMD, Intel, Renesas, and Arm.The post Codeplay Releases First Fully-Conformant SYCL 1.2.1 Solution for C++ appeared first on insideHPC.

|

|

by Rich Brueckner on (#3XE2Y)

Etienne Lyard from the University of Geneva, Switzerland presents: Handling and Processing Data from the Cherenkov Telescope Array.

|

|

by staff on (#3XC28)

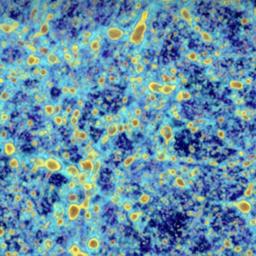

Dan Rosplock from Virginia Tech writes that large-scale simulations could shed light on the 'dark' elements that make up most of our cosmos. By reverse engineering the evolution of these elements, they could provide unique insights into more than 14 billion years of cosmic history. The post Supercomputing Dark Matter appeared first on insideHPC.

|