Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Field | Value |
|---|---|
| Link | https://insidehpc.com/ |
| Feed | http://insidehpc.com/feed/ |
| Updated | 2026-03-03 07:00 |

by staff on (#42E94)

Researchers and staff from 15 National Labs will showcase DOE’s latest computing and networking innovations and accomplishments at SC18 in Dallas next week. "Several of the talks and demos will highlight achievements by DOE's Exascale Computing Program (ECP), a multi-lab, seven-year collaborative effort focused on accelerating the delivery of a capable exascale computing ecosystem by 2021." The post DOE to Showcase World-Class Computational Science at SC18 appeared first on insideHPC.

by staff on (#42E59)

In this video, Larry Meadows from Intel describes why modern processors require modern coding techniques. With vectorization and threading for code modernization, you can unlock the full potential of Intel Xeon Scalable processors. "In many ways, code modernization is inevitable. Even EDGE devices nowadays have multiple physical cores. And even a single-core machine will have hyperthreads. And keeping those cores busy and fed with data with Intel programming tools is the best way to speed up your applications." The post Video: The Separation of Concerns in Code Modernization appeared first on insideHPC.

by staff on (#42CRM)

Today AMD unveiled its upcoming 7nm compute and graphics product portfolio designed to extend the capabilities of the modern datacenter. During the event, AMD shared new specifics on its upcoming “Zen 2” processor core architecture, detailed its revolutionary chiplet-based x86 CPU design, launched the 7nm AMD Radeon Instinct MI60 graphics accelerator and provided the first public demonstration of its next-generation 7nm EPYC™ server processor codenamed “Rome.” The post AMD Goes Full 7nm with EPYC Rome Processor appeared first on insideHPC.

by staff on (#42C6K)

In this special guest feature, Dr. Rosemary Francis from Ellexus offers up her predictions for SC18 in Dallas. "It’s almost time for SC18 and this year it’s a biggie. Here is what we expect to hear about at SC18 as the Ellexus team treads the show floor." The post Predictions for SC18: A change in climate for HPC? appeared first on insideHPC.

by staff on (#42C1Q)

Today One Stop Systems announced that the company has acquired Bressner Technology GmbH, a leading specialized high-performance computing supplier in Europe. "Based in Gröbenzell, Germany, near Munich, Bressner provides standard and customized servers, panel PCs, and PCIe expansion systems. Their primary headquarters provides manufacturing, test, sales and marketing services for customers throughout Europe and the Middle East. The company has over 700 customers throughout Europe. Its major OEM customers include Novartis, Ipsotek, Intel, Rohde & Schwarz, BMW, and others. Bressner generates revenue of approximately €14 million per year." The post One Stop Systems to Acquire Bressner Technology in Europe appeared first on insideHPC.

by staff on (#42C1R)

Today DDN announced it will showcase a number of new innovations optimized for HPC, AI and hybrid cloud at SC18 in Dallas. Designed, optimized and right-sized for commercial HPC, AI, deep learning (DL), and exascale computing, DDN’s new products and solutions are fully integrated for any data-at-scale need. “DDN continues to advance powerful new storage solutions, and we’re excited to see its latest offerings at SC18 next week.” The post DDN to showcase World’s Fastest Storage and more at SC18 appeared first on insideHPC.

by staff on (#42BX0)

Today TYAN introduced new Intel Xeon E-2100 processor-based server motherboards and systems. The new lineup is designed to deliver enhanced performance, advanced security, and reliability in cost-effective entry-level server solutions. “Small and medium-size businesses need to maintain their competitiveness by deploying a powerful server platform for performance, enhanced memory capabilities, and hardware-enhanced security,” said Danny Hsu, Vice President of MiTAC Computing Technology Corporation's TYAN Business Unit. “TYAN’s Intel Xeon E-2100 processor-based platforms are optimized for cloud computing, storage and embedded environment applications. By utilizing Intel’s features of performance improvement, expanded I/O and enhanced Intel Software Guard Extensions (Intel SGX), TYAN enables our customers to enjoy superior performance in file sharing, storage and virtualization.” The post TYAN steps up with New Intel Xeon E-2100 Processors appeared first on insideHPC.

by Rich Brueckner on (#42BX2)

In this podcast, the Radio Free HPC team sits down with Mark Fernandez from HPE to discuss the Spaceborne Supercomputer that is currently orbiting the planet aboard the International Space Station. "Last week, HPE announced it is opening high-performance computing capabilities to astronauts on the International Space Station (ISS) as part of its continued experiments on the Spaceborne Computer project." The post Radio Free HPC Gets an Update on the Spaceborne Supercomputer appeared first on insideHPC.

by staff on (#429PK)

vScaler has incorporated NVIDIA’s new RAPIDS open source software into its cloud platform for on-premise, hybrid, and multi-cloud environments. Deployable via its own Docker container in the vScaler Cloud management portal, the RAPIDS suite of software libraries gives users the freedom to execute end-to-end data science and analytics pipelines entirely on GPUs. "The new RAPIDS library offers Python interfaces which will leverage the NVIDIA CUDA platform for acceleration across one or multiple GPUs. RAPIDS also focuses on common data preparation tasks for analytics and data science. This includes a familiar DataFrame API that integrates with a variety of machine learning algorithms for end-to-end pipeline accelerations without paying typical serialization costs. RAPIDS also includes support for multi-node, multi-GPU deployments, enabling vastly accelerated processing and training on much larger dataset sizes." The post vScaler Cloud Adopts RAPIDS Open Source Software for Accelerated Data Science appeared first on insideHPC.

by staff on (#429PN)

Today AMD announced the availability of the Mentor Graphics Sourcery CodeBench Lite Edition development environment for AMD EPYC and Ryzen CPUs and AMD Radeon Instinct GPUs. “AMD’s innovative hardware technologies combined with Mentor’s leading Sourcery CodeBench Lite environment will help the company’s growing customer base in developing high performance computing and embedded products,” stated Dr. Randy Allen, director of Advanced Research, Mentor Embedded Platform Solutions. “This integrated solution will allow AMD customers to build, debug and analyze embedded software for multicore, heterogeneous applications with high productivity and optimized performance.” The post AMD Powers Mentor Graphics HPC Application Development Environment appeared first on insideHPC.

by staff on (#429HT)

Today HPC startup Appentra Solutions announced the company's plans to showcase its auto-parallelization technologies at the Emerging Technologies Showcase at SC18. "SC18 is the premier international conference for High Performance Computing, networking, storage, and analysis. Every year, the Emerging Technologies program at the SC conference, showcases innovative solutions, from industry, government laboratories and academia, that may significantly improve and extend the world of HPC in the next five to fifteen years." The post Appentra Auto-parallelization coming to Emerging Technologies Showcase at SC18 appeared first on insideHPC.

by Rich Brueckner on (#429D4)

Today HPC cloud startup XTREME-D announced the official release of XTREME-Stargate, a gateway appliance that delivers a new way to access and manage high-performance cloud for the next AI generation. "XTREME-Stargate is an innovative next-generation HPC cloud platform from a start-up focused on HPC cloud offerings. It provides high performance computing and graphics processing and is cost effective for both simulation and data analysis." The post XTREME-Stargate Launches “New era of Cloud Platform for AI and HPC” appeared first on insideHPC.

by staff on (#429D5)

Mellanox Technologies' Gilad Shainer explores one of the biggest tech transitions over the past 20 years: the transition from CPU-centric data centers to data-centric data centers, and the role of in-network computing in this shift. "The latest technology transition is the result of a co-design approach, a collaborative effort to reach Exascale performance by taking a holistic system-level approach to fundamental performance improvements. As the CPU-centric approach has reached the limits of performance and scalability, the data center architecture focus has shifted to the data, and how to bring compute to the data instead of moving data to the compute." The post In-Network Computing Technology to Enable Data-Centric HPC and AI Platforms appeared first on insideHPC.

by staff on (#427S0)

Today the University of Houston announced a new collaboration with HPE, including a $10 million gift from HPE to the University. The gift will benefit the University’s Data Science Institute and include funding for a scholarship endowment, as well as both funding and equipment to enhance data science research activities. The post UH Data Science Institute Receives $10 Million Boost from HPE appeared first on insideHPC.

by Rich Brueckner on (#427PT)

Jeff Larkin from NVIDIA gave this talk at the Summit Application Readiness Workshop. "The event had the primary objective of providing the detailed technical information and hands-on help required for select application teams to meet the scalability and performance metrics required for Early Science proposals. Technical representatives from the IBM/NVIDIA Center of Excellence will be delivering a few plenary presentations, but most of the time will be set aside for the extended application teams to carry out hands-on technical work on Summit." The post Video: Unified Memory on Summit (Power9 + V100) appeared first on insideHPC.

by staff on (#4262D)

Chevron is seeking an HPC Emerging Technology Researcher in our Job of the Week. "This position will be accountable for strategic research, technology development and business engagement to deliver High Performance Computing solutions that differentiate Chevron’s performance. The successful candidate is expected to manage projects and small programs and personally apply and grow technical skills in the Advanced Computing space." The post Job of the Week: HPC Emerging Technology Researcher at Chevron appeared first on insideHPC.

by Rich Brueckner on (#4260J)

On Tuesday, Nov. 13, the Women in HPC organization will host a Networking and Careers Reception at SC18 in Dallas. "Join the Women In High Performance Computing members as we celebrate a growing community of women and their supporters in the supercomputing community! We invite you to join us for a special networking evening with appetizers and drinks." The post Women in HPC to host Networking and Careers Event at SC18 appeared first on insideHPC.

by staff on (#424T6)

Today Hewlett Packard Enterprise (HPE) announced it is opening high-performance computing capabilities to astronauts on the International Space Station (ISS) as part of its continued experiments on the Spaceborne Computer project. “HPE’s Spaceborne Computer is a commercial system owned and funded by HPE that is advancing state-of-the-art computing in space and providing supercomputing commercial services on a spacecraft for the first time, all while demonstrating capabilities similar to what NASA may need to pursue for exploration.” The post HPE Delivers First Above-the-cloud Supercomputing Services for Astronauts appeared first on insideHPC.

by staff on (#4247D)

Over at the SC18 Blog, SC Insider writes that the upcoming Conference Plenary session will examine the potential for advanced computing to help mitigate human suffering and elevate our capacity to protect the most vulnerable. "In this SC18 plenary session, you will hear from innovators who are redefining how we predict and prevent humanitarian crises by leveraging advanced computing. The session is the conference kick-off event, and will be followed by the Exhibitor Opening Gala on Monday night, Nov. 12." The post SC18 Plenary to focus on HPC & Ai on Nov. 12 appeared first on insideHPC.

by Rich Brueckner on (#423YE)

ETP4HPC has published its annual European HPC Handbook. "This publication describes the HPC Technology, Co-design and Applications Projects within the European HPC ecosystem, including EPI. The Handbook will be distributed at a SC18 Birds-of-a-Feather Session in Dallas (Nov. 14 at 5.15pm). Please attend to get your printed copy." The post Download the New European HPC Handbook appeared first on insideHPC.

by staff on (#421XQ)

Today the Ohio Supercomputer Center announced plans to deploy the center’s newest, most efficient supercomputer system, the liquid-cooled, Dell EMC-built Pitzer Cluster. “Ohio continues to make significant investments in the Ohio Supercomputer Center to benefit higher education institutions and industry throughout the state by making additional high performance computing (HPC) services available,” said John Carey, chancellor of the Ohio Department of Higher Education. “This newest supercomputer system gives researchers yet another powerful tool to accelerate innovation.” The post OSC to Deploy Pitzer Cluster built by Dell EMC appeared first on insideHPC.

by staff on (#421MW)

Over at the Exascale Computing Project, Scott Gibson has compiled an impressive list of sessions and meetups involving the ECP and all the goings on you can expect at SC18 in Dallas. "The Exascale Computing Project is accelerating delivery of a capable exascale computing ecosystem for breakthroughs in scientific discovery, energy assurance, economic competitiveness, and national security." The post Exascale Computing Project to go Front and Center at SC18 appeared first on insideHPC.

by staff on (#421MX)

Intel has big plans at SC18 later this month, many of which are focused on HPC and AI convergence and the intersections between these two sectors. "HPC is expanding beyond its traditional role of modeling and simulation to encompass visualization, analytics, and machine learning. Intel scientists and engineers will be available to discuss how to implement AI capabilities into your current HPC environments and demo how new, more powerful HPC platforms can be applied to meet your computational needs now and in the future." The post HPC and AI Convergence Take Center Stage for Intel at SC18 appeared first on insideHPC.

by staff on (#41ZBY)

Today IEEE named Sarita Adve of the University of Illinois at Urbana-Champaign as the recipient of the 2018 ACM-IEEE CS Ken Kennedy Award. Adve was cited for her research contributions and leadership in the development of memory consistency models for C++ and Java; for service to numerous computer science organizations; and for exceptional mentoring. The award will be presented at SC18 in Dallas. The post Sarita Adve Named Recipient of the ACM-IEEE CS Ken Kennedy Award appeared first on insideHPC.

by staff on (#41Z6S)

AMD has announced the availability of the first AMD EPYC processor-based instance on Oracle Cloud Infrastructure. "The AMD EPYC processor ‘E’ series will lead with the bare metal, Standard ‘E2’, available immediately as the first instance type within the Series. At $0.03/Core hour, the AMD EPYC instance is up to 66 per cent less on average per core than general purpose instances offered by the competition." The post AMD EPYC Processors come to Oracle Cloud Infrastructure for HPC appeared first on insideHPC.

by Rich Brueckner on (#41Z6T)

Nominations are open for a new prize honoring a longtime advocate for science, education and research. "The James Corones Award in Leadership, Community Building and Communication will recognize mid-career scientists and engineers who are making an impact in their fields and on research in general. The recipient will be someone who encourages and mentors young people to engage with the science community, to communicate their work effectively and to make a difference in their scientific discipline. It’s a fitting tribute to Corones, who led a distinguished career as a researcher, administrator and, perhaps most importantly, founder of the Krell Institute, the award’s sponsor." The post Seeking Nominations for the James Corones Award Honoring Longtime Advocates for Science appeared first on insideHPC.

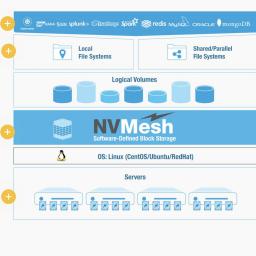

by staff on (#41Z28)

Today Excelero announced NVMesh 2, its software-only storage solution built for modern applications that have an insatiable need to scale flexibly and efficiently. “NVMesh 2 is the only software-defined storage that provides local NVMe Flash performance across the network and more importantly, also delivers the rest of what customers tell us they need for today’s modern applications,” said Lior Gal, CEO and co-founder of Excelero. “We believe with NVMesh 2 we have raised the bar significantly on the agility, efficiency and management features that IT managers should expect from storage going forward.” The post NVMesh 2 Provides Local NVMe Flash Performance Across the Network appeared first on insideHPC.

by Rich Brueckner on (#41XDX)

Today NERSC announced plans for Perlmutter, a pre-exascale system to be installed in 2020. With thousands of NVIDIA Tesla GPUs, the system is expected to deliver three times the computational power currently available on the Cori supercomputer at NERSC. "Optimized for science, the supercomputer will support NERSC’s community of more than 7,000 researchers. These scientists rely on high performance computing to build AI models, run complex simulations and perform data analytics. GPUs can speed up all three of these tasks." The post GPU-Powered Perlmutter Supercomputer coming to NERSC in 2020 appeared first on insideHPC.

by Rich Brueckner on (#41WYE)

Today Cray unveiled its new Shasta supercomputing architecture for Exascale. "Shasta eliminates the distinction between clusters and supercomputers with a single new breakthrough supercomputing system architecture, enabling customers to choose the computational infrastructure that best fits their mission, without tradeoffs. With Shasta you can mix and match processor architectures (x86, Arm, GPUs) in the same system as well as system interconnects from Cray (Slingshot), Intel (Omni-Path) or Mellanox (InfiniBand)." The post Video: Cray Rolls Out Shasta Platform for Exascale Computing appeared first on insideHPC.

by Rich Brueckner on (#41WM7)

In this AI Podcast, Bill Dally from NVIDIA describes how the company is accelerating Ai with GPUs. "NVIDIA researchers are gearing up to present 19 accepted papers and posters, seven of them during speaking sessions, at the annual Computer Vision and Pattern Recognition conference next week in Salt Lake City, Utah. Joining us to discuss some of what's being presented at CVPR, and to share his perspective on the world of deep learning and AI in general is one of the pillars of the computer science world, Bill Dally, chief scientist at NVIDIA." The post Podcast: Bill Dally on How NVIDIA is Accelerating Ai appeared first on insideHPC.

by Rich Brueckner on (#41WFR)

The meeting agenda has now been posted for the Dell EMC HPC Community Meeting at SC18. The event takes place Nov. 12 in Dallas. "The Dell EMC HPC Community is a worldwide technical forum that fosters the exchange of ideas among researchers, computer scientists, technologists, and engineers and promotes the advancement of innovative, powerful HPC solutions. Dell EMC customers, staff, and partners are invited to become members and to attend this exclusive workshop featuring insightful keynote presentations by HPC experts, as well as valuable technical sessions and discussions." The post Agenda Posted for Dell EMC HPC Community Meeting at SC18 in Dallas appeared first on insideHPC.

by staff on (#41TY2)

Today IEEE announced that Dr. David E. Shaw, chief scientist of D. E. Shaw Research and a senior research fellow at the Center for Computational Biology and Bioinformatics at Columbia University, has been named recipient of the 2018 IEEE Computer Society Seymour Cray Computer Engineering Award. Dr. Shaw is being recognized “for the design of special-purpose supercomputers for biomolecular simulations.” The post Dr. David E. Shaw to Receive 2018 Seymour Cray Computer Engineering Award appeared first on insideHPC.

by Rich Brueckner on (#41TMF)

In this podcast, Radio Free HPC previews the SC18 Student Cluster Competition. "The Competition was developed in 2007 to provide an immersive high performance computing experience to undergraduate and high school students. With sponsorship from hardware and software vendor partners, student teams design and build small clusters, learn designated scientific applications, apply optimization techniques for their chosen architectures, and compete in a non-stop, 48-hour challenge at the SC conference to complete a real-world scientific workload, showing off their HPC knowledge for conference attendees and judges." The post Radio Free HPC Previews the SC18 Student Cluster Competition appeared first on insideHPC.

by staff on (#41TAR)

In this video, GE showcases its initial design of the first-ever supersonic engine purpose-built for business jets. The "Affinity" engine is optimized with proven GE technology for supersonic flight and timed to meet the Aerion AS2 launch. "GE Aviation has more than 60 years of experience in designing and building engines adapted for supersonic aircraft. Its first engine to push us into the supersonic era was the J79, which was introduced with Lockheed on their F-104 Starfighter in the mid-1950s." The post Video: The Incredible New Supersonic Affinity Jet Engine from GE appeared first on insideHPC.

by staff on (#41T68)

"Clients tell us there is a wide range of users beyond data scientists that want to get in on the AI action as well, so we recently updated LiCO with new “Lenovo Accelerated AI” training and inference templates. These templates allow users to simply bring their dataset into LiCO and request cluster resources to train models and run inference without coding." The post How to Control the AI Tsunami appeared first on insideHPC.

by staff on (#41RW5)

Today IBM announced that the company plans to acquire all outstanding common shares of Red Hat for $190.00 per share in cash, representing a total enterprise value of approximately $34 billion. "One of the biggest enterprise technology companies on the planet has agreed to partner with us to scale and accelerate our efforts, bringing open source innovation to an even greater swath of the enterprise." The post IBM To Acquire Red Hat appeared first on insideHPC.

by Rich Brueckner on (#41RJG)

The 2019 Mass Storage MSST conference has issued its Call for Papers. "MSST 2019 will focus on distributed storage system technologies, including persistent memory, new memory technologies, long-term data retention (tape, optical disks...), solid state storage (flash, MRAM, RRAM...), software-defined storage, OS- and file-system technologies, cloud storage, big data, and data centers (private and public). The conference will also put the spotlight on current challenges and future trends in storage technologies." The post Call for Papers: MSST Storage Conference in Santa Clara appeared first on insideHPC.

by staff on (#41RJH)

A pair of emerging technology leaders from the African continent are spending five weeks in the USA as part of the TechWomen program. "In addition to the tours, Mugehu said she's been surprised by how open people are about their work and generous in sharing their knowledge. Back home, researchers carefully guard their work, fearing someone may try to take it as their own, she said." The post TechWomen from Africa Experience Supercomputing Up Close appeared first on insideHPC.

by Rich Brueckner on (#41PRR)

Today the Texas WHPC announced a Women in HPC Celebration in Austin. The event takes place Friday, Nov. 9 from 8:30 AM to 12:00 PM at the Intel Corporate Offices in Austin. The event will feature talks by Texas leaders in HPC, a panel of women in industry and academia, and networking opportunities. “As one of the largest states in the country, it is pivotal that Texas takes charge in properly including ‘the other half’ of our society in HPC and high tech as a whole,” says Data Vortex President Carolyn Devany. The post Women in HPC Event Coming to Austin in November appeared first on insideHPC.

by Rich Brueckner on (#41PRS)

Decisive Analytics Corporation in Virginia is seeking a Machine Learning Software Engineer in our Job of the Week. "We are seeking applicants with strong software development skills, and a keen interest in machine learning to join our team. In this role you will have the opportunity to develop state of the art machine learning algorithms and deploy them in the big data stack of Hadoop, MapReduce, Spark, Storm, Accumulo, and MongoDB." The post Job of the Week: Machine Learning Software Engineer at Decisive Analytics appeared first on insideHPC.

by Rich Brueckner on (#41NET)

Today LLNL unveiled Sierra, one of the world’s fastest supercomputers, at a dedication ceremony to celebrate the system’s completion. “The next frontier of supercomputing lies in artificial intelligence,” said John Kelly, senior vice president, Cognitive Solutions and IBM Research. “IBM's decades-long partnership with LLNL has allowed us to build Sierra from the ground up with the unique design and architecture needed for applying AI to massive data sets. The tremendous insights researchers are seeing will only accelerate high performance computing for research and business.” The post LLNL Unveils NNSA’s Sierra, World’s Third Fastest Supercomputer appeared first on insideHPC.

by staff on (#41MRA)

In this video, Tony Paikeday from NVIDIA describes how the DGX-1 for Red Hat Enterprise Linux is transforming enterprise computing with the power of machine learning. "Red Hat and NVIDIA have a solution with the certification of the NVIDIA DGX-1 for Red Hat Enterprise Linux. The combination enables enterprises to easily plug in and power up the AI supercomputer that has become the essential tool for AI innovators and researchers everywhere. And they can do so with a familiar operating system. In fact, 90 percent of Fortune 500 companies use Red Hat Enterprise Linux in their operations." The post Video: NVIDIA and Red Hat Simplify AI Development and Manageability appeared first on insideHPC.

by staff on (#41MRC)

Professor Linda Petzold from UC Santa Barbara has been selected to receive the 2018 IEEE Computer Society Sidney Fernbach Award. "Best known for her pioneering work on the numerical solution of differential-algebraic equations (DAEs), Petzold’s research focuses on modeling, simulation and analysis of multiscale systems in materials, biology and medicine. Many physical systems are naturally described as systems of DAEs." The post Professor Linda Petzold to Receive Sidney Fernbach Award at SC18 appeared first on insideHPC.

by Rich Brueckner on (#41MM4)

Today NEC Corporation announced that it is supporting the Stanford DAWN project, an initiative to simplify the building of AI-powered applications, by providing a cluster of new "SX-Aurora TSUBASA" vector computers for research in the area of Artificial Intelligence. "We are very pleased to support the Stanford DAWN project. We hope to help demonstrate the value of vector computing to the advancement of AI domains," said Yuichi Nakamura, Vice President, NEC Central Research Laboratories. The post Video: NEC Accelerates Stanford DAWN Project with Vector Computing appeared first on insideHPC.

by staff on (#41K6K)

ECP’s ExaWind project aims to advance the fundamental understanding of whole wind plant performance by examining wake formation, the impacts of complex terrain, and the effects of turbine-turbine wake interactions. When validated by targeted experiments, the predictive physics-based high-fidelity computational models at the center of the ExaWind project, and the new knowledge derived from their solutions, provide an effective path to optimizing wind plants. The post Supercomputing Turbine Energy with the ExaWind Project appeared first on insideHPC.

by Rich Brueckner on (#41JEY)

In this Big Brains podcast, David Awschalom describes how he’s helping to train a new generation of quantum engineers. “The behavior of these tiny pieces is unlike anything we see in our world,” Awschalom said. “If I pull a wagon, you know how it’s going to move. But at the atomic world, things don’t work that way. Wagons can go through walls; wagons can be entangled and share information that is hard to separate.” The post Podcast: Quantum Network to Test Unhackable Communications appeared first on insideHPC.

by staff on (#41JAB)

The DOE High Performance Computing for Energy Innovation (HPC4EI) Initiative plans to issue its first joint solicitation in November for manufacturing (HPC4Mfg) and materials (HPC4Mtls) research. "The programs are intended to spur the use of national lab supercomputing resources and expertise to advance innovation in energy-efficient manufacturing and in new materials that enable advanced energy technologies. Selected industry partners will be granted access to HPC facilities and experts at the national laboratories. Projects will be awarded up to $300,000 to support compute cycles and work performed by the national lab partners." The post DOE Call for Proposals: HPC for Manufacturing and Materials appeared first on insideHPC.

by staff on (#41JAD)

Naoki Shibata from XTREME-D writes that choosing the right type of cloud computing is key to increasing efficiency. "One challenge that HPC, DA, and DL end users face is to keep focused on their science and engineering and not get bogged down with system administration and platform details when ensuring that they have the clusters they need for their work. It has often been said that if scalable cluster computing can become more turnkey and user-friendly (and less costly), then the market will expand to many new areas." The post Choosing the Right Type of Cloud for HPC Lets Scientists Focus on Science appeared first on insideHPC.

by Rich Brueckner on (#41GQ7)

In this slidecast, Gilad Shainer from Mellanox describes how the company's interconnect solutions power HPC and Ai workloads. "Mellanox is leading industry innovation by providing the highest throughput and lowest latency HDR 200Gb/s InfiniBand and Ethernet solutions available today, with a clear roadmap for tomorrow. Please visit Mellanox Technologies in booth #3207 to see the latest in our industry-leading HDR 200Gb/s InfiniBand and Spectrum Ethernet solutions, and see how Mellanox is already paving the way to Exascale." The post Interconnect Your Future: Paving the Road to Exascale appeared first on insideHPC.
