|

by Rich Brueckner on (#3CTKR)

The PEARC18 Conference has announced its lineup of Keynote Speakers. The event takes place July 22-27 in Pittsburgh. "PEARC18 is for everyone who works to realize the promise of advanced computing as the enabler of seamless creativity. Scientists and engineers, scholars and planners, artists and makers, students and teachers all depend on the efficiency, security, reliability and sustainability of increasingly complex and powerful digital infrastructure systems. If your work addresses these challenges in any way, PEARC18 is the forum to share, learn and inspire progress." The post PEARC18 Conference Announces lineup of Keynote Speakers appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-03 17:30 |

|

by staff on (#3CTGS)

Today NOAA announced plans for a major upgrade to its supercomputing capabilities. The upgrade adds 2.8 petaflops of computational power, enabling NOAA’s National Weather Service to implement the next generation Global Forecast System, known as the “American Model,” next year. “Having more computing speed and capacity positions us to collect and process even more data from our newest satellites (GOES-East, NOAA-20 and GOES-S) to meet the growing information and decision-support needs of our emergency management partners, the weather industry and the public.” The post NOAA Looks to Dell for Massive Supercomputing Upgrade appeared first on insideHPC.

|

|

by staff on (#3CTCN)

RAID Incorporated has joined OpenSFS (Open Scalable File Systems, Inc.). OpenSFS is a non-profit organization that supports the vendor-neutral development of Lustre. Since 2010, OpenSFS has utilized working groups, events, and funding initiatives to encourage the growth and stability of the Lustre community, as well as facilitate collaborative benefits of its members, to ensure Lustre remains open, free, and flourishing. "RAID Incorporated undoubtedly brings great value to OpenSFS and will aid in boosting the continued innovation and advancements of the Lustre file system," said OpenSFS President Sarp Oral. The post RAID Inc. Joins OpenSFS to Support the Lustre File System appeared first on insideHPC.

|

|

by Rich Brueckner on (#3CT9F)

Steve Oberlin from NVIDIA gave this talk at SC17 in Denver. "HPC is a fundamental pillar of modern science. From predicting weather to discovering drugs to finding new energy sources, researchers use large computing systems to simulate and predict our world. AI extends traditional HPC by letting researchers analyze massive amounts of data faster and more effectively. It’s a transformational new tool for gaining insights where simulation alone cannot fully predict the real world." The post Steve Oberlin from NVIDIA Presents: HPC Exascale & AI appeared first on insideHPC.

|

|

by staff on (#3CR09)

Today NVIDIA and Baidu announced that they are creating a production-ready AI autonomous vehicle platform designed for China, the world’s largest automotive market. "NVIDIA and Baidu have pioneered significant advances in deep learning and AI together over the last several years," said Huang. "Now, together with ZF, we have created the first AI autonomous vehicle computing platform for China." The post NVIDIA and Baidu Announce Autonomous Car project with 30 Deep Learning TOPS (trillions of operations per second) appeared first on insideHPC.

|

|

by Rich Brueckner on (#3CQAE)

In this video from SC17, Jon Masters from Red Hat describes the company's Multi-Architecture HPC capabilities, including the new ARM-powered Apollo 70 server from HPE. "At SC17, you will also have an opportunity to see the power and flexibility of Red Hat Enterprise Linux across multiple architectures, including Arm v8-A, x86_64 and IBM POWER Little Endian." The post Video: Red Hat Showcases ARM Support for HPC at SC17 appeared first on insideHPC.

|

|

by Rich Brueckner on (#3CPZ4)

Today Micron and Intel announced an update to their successful NAND memory joint development partnership that has helped the companies develop and deliver industry-leading NAND technologies to market. "The companies have agreed to complete development of their third-generation of 3D NAND technology, which will be delivered toward the end of this year and extending into early 2019. Beyond that technology node, both companies will develop 3D NAND independently in order to better optimize the technology and products for their individual business needs." The post Micron and Intel to continue joint development of 3D NAND Memory through 2019 appeared first on insideHPC.

|

|

by staff on (#3CPRZ)

Can you afford to lose a third of your compute real estate? If not, you need to pre-empt the impact of Meltdown and Spectre. "Meltdown and Spectre are quickly becoming household names and not just in the HPC space. The severe design flaws in Intel microprocessors that could allow sensitive data to be stolen and the fixes are likely to be bad news for any I/O intensive applications such as those often used in HPC. Ellexus Ltd, the I/O profiling company, has released a white paper: How the Meltdown and Spectre bugs work and what you can do to prevent a performance plummet." The post New Whitepaper on Meltdown and Spectre fixes for HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#3CPS0)

In this podcast, the Radio Free HPC team looks at the performance ramifications of the Spectre and Meltdown exploits that affect processors from Intel, AMD, and many others. While patches are on the way, the performance hit from these patches could be as high as twenty or thirty percent in some cases. The post Radio Free HPC Looks at Spectre and Meltdown Exploits appeared first on insideHPC.

|

|

by staff on (#3CM9H)

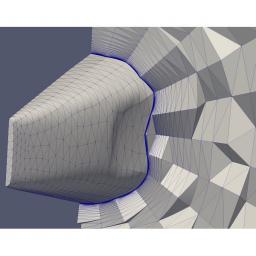

The Fort Worth Chamber of Commerce has named Pointwise a finalist for the 2018 Small Business of the Year award. Pointwise, Inc. is solving the top problem facing computational fluid dynamics (CFD) today – reliably generating high-fidelity meshes. The company's Pointwise software generates structured, unstructured, overset and hybrid meshes; interfaces with CFD solvers such as ANSYS FLUENT, STAR-CCM+, OpenFOAM, and SU2 as well as many neutral formats, such as CGNS; runs on Windows, Linux, and Mac; and has a scripting language, Glyph, that can automate CFD meshing. The post Pointwise ISV is Finalist for Small Business of the Year appeared first on insideHPC.

|

|

by Rich Brueckner on (#3CM5G)

Travis Johnston from ORNL gave this talk at SC17. "Multi-node evolutionary neural networks for deep learning (MENNDL) is an evolutionary approach to performing this search. MENNDL is capable of evolving not only the numeric hyper-parameters, but is also capable of evolving the arrangement of layers within the network. The second approach is implemented using Apache Spark at scale on Titan. The technique we present is an improvement over hyper-parameter sweeps because we don't require assumptions about independence of parameters and is more computationally feasible than grid-search." The post Adapting Deep Learning to New Data Using ORNL’s Titan Supercomputer appeared first on insideHPC.

|

|

by Rich Brueckner on (#3CJ12)

Gadi Singer gave this talk at the Intel HPC Developer Conference in Denver. "Technology visionaries architecting the future of high-performance computing and artificial intelligence (AI) will share the key challenges as well as Intel’s direction. The talk will cover the adaptation of AI into HPC workflows, along their perspective architectural developments, upcoming transitions and range of solutions, technology opportunities, and the driving forces behind them." The post The AI Future is Closer than it Seems appeared first on insideHPC.

|

|

by Rich Brueckner on (#3CJ13)

Baidu USA in Silicon Valley is seeking a Computer Systems Researcher in our Job of the Week. "Baidu's research mission is to develop hard AI technologies that will reach and impact hundreds of millions of users. Our Silicon Valley Artificial Intelligence Lab (SVAIL) develops state-of-the-art AI technologies that require bleeding-edge systems to improve accuracy, scale, and performance. Our systems researchers address the many and new systems challenges that arise as we extend the AI state-of-the-art." The post Job of the Week: Computer Systems Researcher at Baidu USA appeared first on insideHPC.

|

|

by staff on (#3CFKS)

Today Mellanox announced the first shipments of its BlueField system-on-chip (SoC) platforms and SmartNIC adapters to major data center, hyperscale and OEM customers. Mellanox BlueField dual-port 100Gb/s SoC is ideal for cloud, Web 2.0, Big Data, storage, enterprise, high-performance computing, and Network Functions Virtualization (NFV) applications. "We are excited to ship BlueField systems and SmartNIC adapters to our major customers and partners, enabling them to build the next generation of storage, cloud, security and other platforms and to gain a competitive advantage," said Yael Shenhav, vice president of products at Mellanox Technologies. "BlueField products achieve new performance records, delivering industry-leading NVMe over Fabrics and networking throughput. We are proud of our world-class team for delivering these innovative products, designed to meet the ever growing needs of current and future data centers." The post Mellanox Ships BlueField SoCs and SmartNICs appeared first on insideHPC.

|

|

by Rich Brueckner on (#3CFEH)

Peter Messmer from NVIDIA gave this talk at SC17. "This talk is a summary about the ongoing HPC visualization activities, as well as a description of the technologies behind the developer-zone shown in the booth." Messmer is a principal software engineer in NVIDIA's Developer Technology organization, working with clients to accelerate their scientific discovery process with GPUs. The post Visualization on GPU Accelerated Supercomputers appeared first on insideHPC.

|

|

by Rich Brueckner on (#3CFBB)

In this podcast, the Radio Free HPC team reviews their list of things Not Invited Back in 2018. Along the way, they share some of their New Year Resolutions. The post Radio Free HPC does their List of Things Not Invited Back in 2018 appeared first on insideHPC.

|

|

by Rich Brueckner on (#3CF9F)

Prabhat from NERSC and Michael F. Wehner from LBNL gave this talk at the Intel HPC Developer Conference in Denver. "Deep Learning has revolutionized the fields of computer vision, speech recognition and control systems. Can Deep Learning (DL) work for scientific problems? This talk will explore a variety of Lawrence Berkeley National Laboratory’s applications that are currently benefiting from DL." The post Video: Deep Learning for Science appeared first on insideHPC.

|

|

by staff on (#3CDKH)

Intel reports that the company has developed and is rapidly issuing updates for all types of Intel-based computer systems, including personal computers and servers, that render those systems immune from both exploits (referred to as “Spectre” and “Meltdown”) reported by Google Project Zero. "By the end of next week, Intel expects to have issued updates for more than 90 percent of processor products introduced within the past five years." The post Intel Deploying Updates for Spectre and Meltdown Exploits appeared first on insideHPC.

|

|

by Rich Brueckner on (#3CCTF)

In this video from SC17 in Denver, Pascale Bruner from Atos describes the company's innovative ARM-HPC technologies developed as part of the Mont-Blanc Project. "Atos will showcase our Dibona prototype for ARM-based HPC. Named after the Dibona peak in the French Alps, the new prototype is part of the Phase 3 of Mont-Blanc and is based on 64 bit ThunderX2 processors from Cavium, relying on the ARM v8 instruction set." The post Video: A Closer Look at the Atos Dibona Prototype for ARM-based HPC appeared first on insideHPC.

|

|

by staff on (#3CCQQ)

ESI Group has announced the release of SimulationX 3.9, a software platform for multiphysics system simulation. Designed for many industries – from automotive, energy, and mining to industrial machinery, railways, aerospace and aeronautics – SimulationX has proven to be one of the most reliable and customer-friendly solutions on the market. The post ESI Releases SimulationX 3.9 for Manufacturing appeared first on insideHPC.

|

|

by Rich Brueckner on (#3CCQS)

The UK OpenMP User Conference has issued its Call for Sessions. The event takes place May 21-22 at St Catherine's College in Oxford. "This inaugural event is intended to become the annual meeting of the growing UK-based community of developers who use OpenMP." The post Call for Sessions: UK OpenMP User Conference appeared first on insideHPC.

|

|

by Rich Brueckner on (#3CCMJ)

Satoshi Matsuoka from the Tokyo Institute of Technology gave this talk at the NVIDIA booth at SC17. "TSUBAME3 embodies various BYTES-oriented features to allow for HPC to BD/AI convergence at scale, including significant scalable horizontal bandwidth as well as support for deep memory hierarchy and capacity, along with high flops in low precision arithmetic for deep learning." The post Converging HPC, Big Data, and AI at the Tokyo Institute of Technology appeared first on insideHPC.

|

|

by Rich Brueckner on (#3CA43)

In this slidecast, Joe Yaworski from Intel describes the Intel Omni-Path architecture and how it scales performance for a wide range of HPC applications. He also shows why recently published benchmarks have not reflected the real performance story. The post Intel Omni-Path Architecture: The Real Numbers appeared first on insideHPC.

|

|

by Rich Brueckner on (#3CA11)

The HPC User Forum has posted their Preliminary Agenda for their upcoming meeting in France. Free to attend, the event takes place March 6-7 near Paris at the Teratec campus in Bruyères-le-Châtel. "We have developed an exciting agenda with prominent speakers who will discuss the HPC strategies for Europe, Japan and the USA," said Earl Joseph, CEO of Hyperion Research. "We will also have talks on new HPC technologies, applications and markets; and the proliferation of HPC in industrial and commercial environments." The post Preliminary Agenda Posted for March HPC User Forum in France appeared first on insideHPC.

|

|

by staff on (#3C9TT)

Over at the Microsoft Blog, Jason Zander writes that the company is acquiring Avere Systems. "By bringing together Avere’s storage expertise with the power of Microsoft’s cloud, customers will benefit from industry-leading innovations that enable the largest, most complex high-performance workloads to run in Microsoft Azure. We are excited to welcome Avere to Microsoft, and look forward to the impact their technology and the team will have on Azure and the customer experience." The post Microsoft to acquire Avere Systems appeared first on insideHPC.

|

|

by Sarah Rubenoff on (#3C9RC)

Many of the top 10 2017 HPC white papers deal with the next steps in the HPC journey, including moving to the cloud, and discovering the potential of machine learning and AI. The most downloaded reports of the year were written with industry partners such as Red Hat, Dell EMC, Intel, HPE and more. The post Top 10 HPC White Papers for 2017: Machine Learning, AI, the Cloud & More appeared first on insideHPC.

|

|

by staff on (#3C79P)

Intel recently announced the availability of the Intel Stratix 10 MX FPGA, the industry’s first field programmable gate array (FPGA) with integrated HBM2. By integrating the FPGA and the HBM2, Intel Stratix 10 MX FPGAs offer up to 10 times the memory bandwidth when compared to standard DDR 2400 DIMM. The post Intel tackles FPGA HPC Memory Bottleneck appeared first on insideHPC.

|

|

by Rich Brueckner on (#3C775)

In this video from SC17, Dan McGuan and Jon Masters from Red Hat describe the company's Multi-Architecture HPC capabilities. "At SC17, you will have an opportunity to see the power and flexibility of Red Hat Enterprise Linux across multiple architectures, including Arm v8-A, x86_64 and IBM POWER Little Endian." The post Red Hat steps up with Multi-Architecture Solutions for HPC appeared first on insideHPC.

|

|

by staff on (#3C74K)

The 2018 ASC Student Supercomputer Challenge (ASC18) will begin on January 16, 2018. First launched in 2012, the competition will feature a whopping 230 student teams looking to build the fastest possible cluster with a power cap of 3 kilowatts. "The USA Student Cluster Competition is like a marathon, testing participants’ hard work and perseverance," said OrionX partner Dan Olds, who has covered all three major supercomputing challenges. "Germany’s ISC is a sprint, testing innovation and adaptability. China’s ASC is a combination of both." The post ASC18 Student Cluster Competition to include 230 Teams appeared first on insideHPC.

|

|

by Rich Brueckner on (#3C6Z0)

"Deep Learning was recently scaled to obtain 15PF performance on the Cori supercomputer at NERSC. Cori Phase II features over 9600 KNL processors. It can significantly impact how we do computing and what computing can do for us. In this podcast, I will discuss some of the application-level opportunities and system-level challenges that lie at the heart of this intersection of traditional high performance computing with emerging data-intensive computing." The post Dr. Pradeep Dubey on AI & The Virtuous Cycle of Compute appeared first on insideHPC.

|

|

by Rich Brueckner on (#3C4W7)

Jay Boisseau from Dell EMC gave this talk at SC17 in Denver. "Across every industry, organizations are moving aggressively to adopt AI | ML | DL tools and frameworks to help them become more effective in leveraging data and analytics to power their key business and operational use cases. To help our clients exploit the business and operational benefits of AI | ML | DL, Dell EMC has created “Ready Bundles” that are designed to simplify the configuration, deployment and management of AI | ML | DL solutions." The post Video: Dell EMC AI Vision & Strategy appeared first on insideHPC.

|

|

by staff on (#3C4R5)

Over at the Rescale Blog, Joris Poort writes that the company has been named one of the fastest growing enterprise software companies of 2017. "It’s been a busy year as we’ve landed over 100 new enterprise customers, fueling our rapid growth in multiple key industry verticals including aerospace, automotive, life sciences, universities and with broad geographic coverage throughout the Americas, Europe, and Asia." The post Why Rescale is one of the Fastest Growing Enterprise Software Companies of 2017 appeared first on insideHPC.

|

|

by Rich Brueckner on (#3C2JV)

Judy Qiu from Indiana University gave this Invited Talk at SC17. "Our research has concentrated on runtime and data management to support HPC-ABDS. This is illustrated by our open source software Harp, a plug-in for native Apache Hadoop, which has a convenient science interface, high performance communication, and can invoke Intel’s Data Analytics Acceleration Library (DAAL). We are building a scalable parallel Machine Learning library that includes routines in Apache Mahout, MLlib, and others built in an NSF funded collaboration." The post Harp-DAAL: A Next Generation Platform for High Performance Machine Learning on HPC-Cloud appeared first on insideHPC.

|

|

by staff on (#3C2H6)

Today Pointwise announced Project Geode, the company's response to the NASA CFD Vision 2030 Study's identification of the lack of CFD software access to geometry. As part of the announcement, Pointwise has opened beta testing of a geometry modeling kernel for computer-aided engineering (CAE) simulation software. "The kernel's core geometry evaluation functions can provide all the functionality a CFD solver would need for tasks like mesh adaption and elevation of elements to higher-order. We are now seeking additional beta testers to validate this idea." The post Pointwise Announces Geode Geometry Kernel appeared first on insideHPC.

|

|

by Rich Brueckner on (#3C0KB)

Narayanan Sundaram gave this talk at the Intel HPC Developer Conference. "We present the first 15-PetaFLOP Deep Learning system for solving supervised and semi-supervised scientific pattern classification problems, optimized for Intel Xeon Phi. We use a hybrid of synchronous and asynchronous training to scale to ~9600 nodes of Cori on CNN and autoencoder networks." The post Video: Deep Learning at 15 Petaflops appeared first on insideHPC.

|

|

by Rich Brueckner on (#3C0KD)

NVIDIA in Silicon Valley is seeking a Senior Memory Systems Architect in our Job of the Week. "NVIDIA is building the world's fastest highly-parallel processing systems, period. Our high-bandwidth multi-client memory subsystems are blazing new territory with every generation. As we increase levels of parallelism, bandwidth and capacity, we are presented with design challenges exacerbated by clients with varying but simultaneous needs such as real-time, low latency, and high-bandwidth. In addition, we are adding improved virtualization and programming model capabilities." The post Job of the Week: Senior Memory Systems Architect at NVIDIA appeared first on insideHPC.

|

|

by Rich Brueckner on (#3BY54)

Jack Dongarra from the University of Tennessee gave this talk at SC17. "In this talk we will look at the current state of high performance computing and look to the future toward exascale. In addition, we will examine some issues that can help in reducing the power consumption for linear algebra computations." The post Jack Dongarra Presents: Overview of HPC and Energy Savings on NVIDIA’s V100 appeared first on insideHPC.

|

|

by staff on (#3BXWR)

The Mont-Blanc Project will once again sponsor a team at the upcoming ISC 2018 Student Cluster Competition. The sponsored team from the Universitat Politècnica de Catalunya / Barcelona Tech (UPC) will compete for the fourth time at ISC 2018, which takes place June 24-28 in Frankfurt, Germany. "I am always impressed by the technical prowess exhibited by these young teams: the way they are turning our lessons into applied knowledge, through live benchmarking and on the fly troubleshooting is a great process and it triggers a positive loop in all of us." The post Mont-Blanc to Sponsor UPC Team at ISC 2018 Student Cluster Competition appeared first on insideHPC.

|

|

by Rich Brueckner on (#3BV9D)

In this Invited Talk from SC17, Michael Wolfe from NVIDIA presents: Why Iteration Space Tiling? The talk is based on his noted paper, which won the SC17 Test of Time Award. "Tiling is well-known and has been included in many compilers and code transformation systems. The talk will explore the basic contribution of the SC1989 paper to the current state of iteration space tiling." The post Michael Wolfe Presents: Why Iteration Space Tiling? appeared first on insideHPC.

|

|

by Richard Friedman on (#3BV9F)

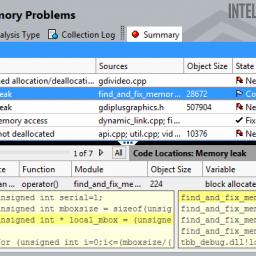

Intel Inspector is an integrated debugger that can easily diagnose latent and intermittent errors and guide users to locate the root cause. It does this by instrumenting the binaries, including dynamically generated or linked libraries, even when the source code is not available. This includes C, C++, and legacy Fortran codes. The post Use Intel® Inspector to Diagnose Hidden Memory and Threading Errors in Parallel Code appeared first on insideHPC.

|

|

by Rich Brueckner on (#3BRRA)

In this podcast, the Radio Free HPC team reviews their 2017 technology predictions from last year. After that, we do our 2018 Predictions including a bombshell on who Rich thinks will acquire Cray by the end of the year. The post Radio Free HPC does their 2018 Technology Predictions appeared first on insideHPC.

|

|

by Rich Brueckner on (#3BRKS)

In this time-lapse video, engineers install the first racks of the Summit supercomputer at Oak Ridge National Lab. "Summit is the next leap in leadership-class computing systems for open science. With Summit we will be able to address, with greater complexity and higher fidelity, questions concerning who we are, our place on earth, and in our universe." The post Time-Lapse Video of Summit Supercomputer Installation appeared first on insideHPC.

|

|

by staff on (#3BPRB)

In this podcast, Zaira Martín-Moldes and Davoud Ebrahimi describe their computational research into how bones form. "The authors used XSEDE supercomputers to model the protein folding of integrin, an essential step in the intracellular pathways that lead to osteogenesis. This research will help larger efforts to cure bone disorders such as osteoporosis or calcific aortic valve disease." The post Podcast: Supercomputing Osteogenesis appeared first on insideHPC.

|

|

by Rich Brueckner on (#3BP92)

Inquisitive minds want to know what causes the universe to expand, how M-theory binds the smallest of the small particles or how social dynamics can lead to revolutions. "The way that statisticians answer these questions is with Approximate Bayesian Computation (ABC), which we learn on the first day of the summer school and which we combine with High Performance Computing. The second day focuses on a popular machine learning approach 'Deep-learning' which mimics the deep neural network structure in our brain, in order to predict complex phenomena of nature." The post Deep Learning and Automatic Differentiation from Theano to PyTorch appeared first on insideHPC.

|

|

by staff on (#3BMJ1)

The Trinity Supercomputer at Los Alamos National Laboratory was recently named as a top 10 supercomputer on two lists: it made number three on the High Performance Conjugate Gradients (HPCG) Benchmark project, and is number seven on the TOP500 list. "Trinity has already made unique contributions to important national security challenges, and we look forward to Trinity having a long tenure as one of the most powerful supercomputers in the world," said John Sarrao, associate director for Theory, Simulation and Computation at Los Alamos. The post Trinity Supercomputer lands at #7 on TOP500 appeared first on insideHPC.

|

|

by Rich Brueckner on (#3BMD8)

In this video from SC17, Naoki Shibata from Xtreme Design demonstrates the company's innovative solutions for deploying and managing HPC clouds. "Customers can use our easy-to-deploy turnkey HPC cluster system on public cloud, including setup of HPC middleware (OpenHPC-based packages), configuration of SLURM, OpenMPI, and OSS HPC applications such as OpenFOAM. The user can start the HPC cluster (submitting jobs) within 10 minutes on the public cloud. Our team is a technical startup for focusing HPC cloud technology." The post Xtreme Design HPC Cloud Management Demo at SC17 appeared first on insideHPC.

|

|

by Rich Brueckner on (#3BJRP)

In this video, Dan Olds from Radio Free HPC offers up his rant-filled rendition of the 12 Days of Christmas. The Radio Free HPC podcast is a joint production by industry pundits focused on High Performance Computing. "To all of our listeners, we wish you a very happy Holiday Season!" The post 12 Days of Christmas from Radio Free HPC & Dan Olds appeared first on insideHPC.

|

|

by Rich Brueckner on (#3BJNZ)

In this video, Mellanox CTO Michael Kagan offers his view of technology trends for 2018. "Mellanox is looking forward to continued Technology and Product Leadership in 2018. As the leader in End-to-End InfiniBand and Ethernet Technologies, Mellanox will introduce new products (Switch Systems/Silicon, Acq. EZchip Technology) to accelerate future growth. The company is also positioned to benefit from market transition from 10Gb to 25/50/100Gb." The post 2018 Technology Trends from Mellanox CTO Michael Kagan appeared first on insideHPC.

|

|

by Rich Brueckner on (#3BGN1)

Today Ace Computers announced four leading-edge OpenStack applications for clusters, servers and workstations. Although the company has been working with top open source application providers for decades, this is the first announcement of an offering portfolio that includes Red Hat, SUSE, Bright Computing and CentOS. "We have the advantage over most of our competitors of decades-long partnerships with many platform developers," said Ace Computers CEO John Samborski. "These strong relationships give us access to leading-edge innovations and expertise that allow us to build the best possible solutions for our clients." The post Ace Computers Offers OpenStack Solutions appeared first on insideHPC.

|

|

by Rich Brueckner on (#3BGBY)

Theresa Windus from Iowa State University gave this Invited Talk at SC17. "This talk will focus on the challenges that computational chemistry faces in taking the equations that model the very small (molecules and the reactions they undergo) to efficient and scalable implementations on the very large computers of today and tomorrow. In particular, how do we take advantage of the newest architectures while preparing for the next generation of computers?" The post Video: Taking the Nanoscale to the Exascale appeared first on insideHPC.

|