|

by staff on (#3ADJZ)

Today D-Wave Systems announced its involvement in a grant-funded UK project to improve logistics and planning operations using quantum computing algorithms. "Advancing AI planning techniques could significantly improve operational efficiency across major industries, from law enforcement to transportation and beyond," said Robert "Bo" Ewald, president of D-Wave International. "Advancing real-world applications for quantum computing takes dedicated collaboration from scientists and experts in a wide variety of fields. This project is an example of that work and will hopefully lead to faster, better solutions for critical problems." The post Innovate UK Award to Confirm Business Case for Quantum-enhanced Optimization Algorithms appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-03 17:30 |

|

by Rich Brueckner on (#3ADCY)

In this video from SC17 in Denver, Rick Stevens from Argonne leads a discussion about the Comanche Advanced Technology Collaboration. "By initiating the Comanche collaboration, HPE brought together industry partners and leadership sites like Argonne National Laboratory to work in a joint development effort," said HPE's Chief Strategist for HPC and Technical Lead for the Advanced Development Team Nic Dubé. "This program represents one of the largest customer-driven prototyping efforts focused on the enablement of the HPC software stack for ARM. We look forward to further collaboration on the path to an open hardware and software ecosystem." The post Video: Comanche Collaboration Moves ARM HPC forward at National Labs appeared first on insideHPC.

|

|

by Rich Brueckner on (#3AD6V)

In this podcast, Radio Free HPC looks at the new POWER9, Titan V, and Snapdragon 845 devices for AI and HPC. "Built specifically for compute-intensive AI workloads, the new POWER9 systems are capable of improving the training times of deep learning frameworks by nearly 4x allowing enterprises to build more accurate AI applications, faster." The post Radio Free HPC Looks at the New Power9, Titan V, and Snapdragon 845 appeared first on insideHPC.

|

|

by staff on (#3AD3R)

FPGAs can improve performance per watt, bandwidth and latency. In this guest post, Intel explores how Field Programmable Gate Arrays (FPGAs) can be used to accelerate high performance computing. "Tightly coupled programmable multi-function accelerator platforms, such as FPGAs from Intel, offer a single hardware platform that enables servers to address many different workload needs, from HPC needs for the highest capacity and performance through data center requirements for load balancing capabilities to address different workload profiles." The post Accelerating HPC with Intel FPGAs appeared first on insideHPC.

|

|

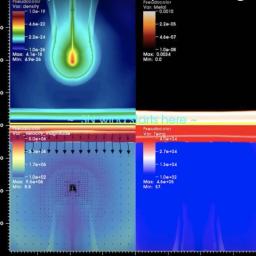

by staff on (#3AAVG)

Over at LBNL, Kathy Kincade writes that cosmologists are using supercomputers to study how heavy metals expelled from exploding supernovae helped the first stars in the universe regulate subsequent star formation. "In the early universe, the stars were massive and the radiation they emitted was very strong," Chen explained. "So if you have this radiation before that star explodes and becomes a supernova, the radiation has already caused significant damage to the gas surrounding the star's halo." The post Supercomputing How First Supernovae Altered Early Star Formation appeared first on insideHPC.

|

|

by Rich Brueckner on (#3AASG)

In this video from SC17, Gabor Samu describes how IBM Spectrum LSF helps users orchestrate HPC workloads. "This week we celebrate the release of our second agile update to IBM Spectrum LSF 10. And it's our silver anniversary... 25 years of IBM Spectrum LSF! The IBM Spectrum LSF Suites portfolio redefines cluster virtualization and workload management by providing a tightly integrated solution for demanding, mission-critical HPC environments that can increase both user productivity and hardware utilization while decreasing system management costs." The post IBM Spectrum LSF Powers HPC at SC17 appeared first on insideHPC.

|

|

by staff on (#3A8JY)

Today Dell EMC announced a joint solution with Alces Flight and AWS to provide HPC for the University of Liverpool. Dell EMC will provide a fully managed on-premises HPC cluster while a cloud-based HPC account for students and researchers will enable cloud bursting computational capacity. "We are pleased to be working with Dell EMC and Alces Flight on this new venture," said Cliff Addison, Head of Advanced Research Computing at the University of Liverpool. "The University of Liverpool has always maintained cutting-edge technology and by architecting flexible access to computational resources on AWS we're setting the bar even higher for what can be achieved in HPC." The post Dell EMC Powers HPC at University of Liverpool with Alces Flight appeared first on insideHPC.

|

|

by Rich Brueckner on (#3A8K0)

In this video from the Intel HPC Developer Conference, Andres Rodriguez describes his presentation on Enabling the Future of Artificial Intelligence. "Intel has the industry's most comprehensive suite of hardware and software technologies that deliver broad capabilities and support diverse approaches for AI, including today's AI applications and more complex AI tasks in the future." The post Video: Enabling the Future of Artificial Intelligence appeared first on insideHPC.

|

|

by Rich Brueckner on (#3A60C)

Today NVIDIA introduced its new high-end TITAN V GPU for desktop PCs. Powered by the Volta architecture, TITAN V excels at computational processing for scientific simulation. Its 21.1 billion transistors deliver 110 teraflops of raw horsepower, 9x that of its predecessor, and extreme energy efficiency. "With TITAN V, we are putting Volta into the hands of researchers and scientists all over the world. I can't wait to see their breakthrough discoveries." The post NVIDIA TITAN V GPU Brings Volta to the Desktop for AI Development appeared first on insideHPC.

|

|

by Rich Brueckner on (#3A5SN)

James Coomer gave this talk at the DDN User Group at SC17. "Our technological and market leadership comes from our long-term investments in leading-edge research and development, our relentless focus on solving our customers' end-to-end data and information management challenges, and the excellence of our employees around the globe, all relentlessly focused on delivering the highest levels of satisfaction to our customers. To meet these ever-increasing requirements, users are rapidly adopting DDN's best-of-breed high-performance storage solutions for end-to-end data management from data creation and persistent storage to active archives and the Cloud." The post Video: DDN Applied Technologies, Performance and Use Cases appeared first on insideHPC.

|

|

by staff on (#3A5SQ)

Data Vortex Technologies has formalized a partnership with Providentia Worldwide, LLC. Providentia is a technologies and solutions consulting venture which bridges the gap between traditional HPC and enterprise computing. The company works with Data Vortex and potential partners to develop novel solutions for Data Vortex technologies and to assist with systems integration into new markets. This partnership will leverage the deep experience in enterprise and hyperscale environments of Providentia Worldwide founders, Ryan Quick and Arno Kolster, and merge the unique performance characteristics of the Data Vortex with traditional systems. The post Data Vortex Technologies Teams with Providentia Worldwide for HPC appeared first on insideHPC.

|

|

by staff on (#3A5PM)

In this video from SC17, Thomas Krueger describes how Intel supports open source high performance computing software like OpenHPC and Lustre. "As the Linux initiative demonstrates, a community-based, vendor-catalyzed model like this has major advantages for enabling software to keep pace with requirements for HPC computing and storage hardware systems. In this model, stack development is driven primarily by the open source community and vendors offer supported distributions with additional capabilities for customers that require and are willing to pay for them." The post Intel Supports open source software for HPC appeared first on insideHPC.

|

|

by staff on (#3A31V)

Today System Fabric Works announced its support and integration of the BeeGFS file system with the latest NetApp E-Series All Flash and HDD storage systems. This makes BeeGFS available on the family of NetApp E-Series Hyperscale Storage products as part of System Fabric Works' (SFW) Converged Infrastructure solutions for high-performance Enterprise Computing, Data Analytics and Machine Learning. "We are pleased to announce our Gold Partner relationship with ThinkParQ," said Kevin Moran, President and CEO, System Fabric Works. "Together, SFW and ThinkParQ can deliver, worldwide, a highly converged, scalable computing solution based on BeeGFS, engineered with NetApp E-Series, a choice of InfiniBand, Omni-Path, RDMA over Ethernet and NVMe over Fabrics for targeted performance and 99.9999 reliability utilizing customer-chosen clustered servers and clients and SFW's services for architecture, integration, acceptance and on-going support services." The post System Fabric Works adds support for BeeGFS Parallel File System appeared first on insideHPC.

|

|

by staff on (#3A2Q5)

Today DDN announced the results of its annual HPC Trends survey, which reflects the continued adoption of flash-based storage as essential to respondents' overall data center strategy. While flash is deemed essential, respondents anticipate needing additional technology innovations to unlock the full performance of their HPC applications. Managing complex I/O workload performance remains far and away the largest challenge to survey respondents, with 60 percent of end-users citing this as their number one challenge. The post DDN's HPC Trends Survey: Complex I/O Workloads are the #1 Challenge appeared first on insideHPC.

|

|

by Rich Brueckner on (#3A2KQ)

Xtreme Design is seeking a Senior HPC Cloud Architect in our Job of the Week. "XTREME Design is seeking an HPC-focused Cloud Architect to join our team and continue development of the XTREME family of products and solutions, and specifically the virtual supercomputing-on-demand service, XTREME DNA. You will have the opportunity to help shape and execute product designs that will build mindshare and promote broad use of XTREME products and solutions." The post Job of the Week: Senior HPC Architect at Xtreme Design appeared first on insideHPC.

|

|

by Rich Brueckner on (#3A2H9)

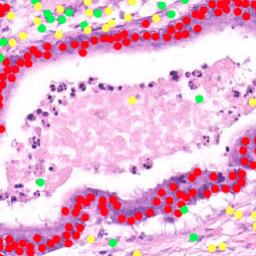

BIGstack 2.0 incorporates our latest Intel Xeon Scalable processors, Intel 3D NAND SSD, and Intel FPGAs while also leveraging the latest genomic tools from the Broad Institute in GATK 3.8 and GATK 4.0. This new stack provides a 3.34x speedup in whole genome analysis and a 2.2x daily throughput increase. It is able to deliver these performance improvements with a cost of just $5.68 per whole genome analyzed. The result: researchers will be able to analyze more genomes, more quickly and at lower cost, enabling new discoveries, new treatment options, and faster diagnosis of disease. The post Intel Select Solutions: BigStack 2.0 for Genomics appeared first on insideHPC.

|

|

by MichaelS on (#3A2EM)

With the introduction of Intel Parallel Studio XE, instructions for utilizing the vector extensions have been enhanced and new instructions have been added. Applications in diverse domains such as data compression and decompression, scientific simulations and cryptography can take advantage of these new and enhanced instructions. "Although microkernels can demonstrate the effectiveness of the new SIMD instructions, understanding why the new instructions benefit the code can then lead to even greater performance." The post Intel Parallel Studio XE AVX-512: Tuning for Success with the Latest SIMD Extensions and Intel® Advanced Vector Extensions 512 appeared first on insideHPC.

|

|

by staff on (#3A0HP)

Today Cray announced the company has joined the Big Data Center at NERSC. The collaboration between the two organizations is representative of Cray's commitment to leverage its supercomputing expertise, technologies, and best practices to advance the adoption of Artificial Intelligence, deep learning, and data-intensive computing. "We are really excited to have Cray join the Big Data Center," said Prabhat, Director of the Big Data Center, and Group Lead for Data and Analytics Services at NERSC. "Cray's deep expertise in systems, software, and scaling is critical in working towards the BDC mission of enabling capability applications for data-intensive science on Cori. Cray and NERSC, working together with Intel and our IPCC academic partners, are well positioned to tackle performance and scaling challenges of Deep Learning." The post Cray Joins Big Data Center at NERSC for AI Development appeared first on insideHPC.

|

|

by Rich Brueckner on (#3A09G)

In this video from the DDN User Group at SC17, Ron Hawkins from the San Diego Supercomputer Center presents: BioBurst: Leveraging Burst Buffer Technology for Campus Research Computing. Under an NSF award, SDSC will implement a separately scheduled partition of TSCC with technology designed to address key areas of bioinformatics computing including genomics, transcriptomics, [...] The post BioBurst: Leveraging Burst Buffer Technology for Campus Research Computing appeared first on insideHPC.

|

|

by staff on (#3A09J)

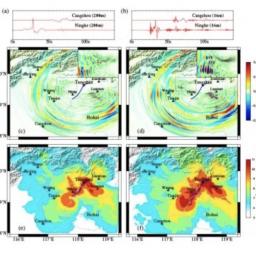

A Chinese team of researchers awarded this year's prestigious Gordon Bell prize for simulating the devastating 1976 earthquake in Tangshan, China, used an open-source code developed by researchers at the San Diego Supercomputer Center (SDSC) at UC San Diego and San Diego State University (SDSU) with support from the Southern California Earthquake Center (SCEC). "We congratulate the researchers for their impressive innovations porting our earthquake software code, and in turn for advancing the overall state of seismic research that will have far-reaching benefits around the world," said Yifeng Cui, director of SDSC's High Performance Geocomputing Laboratory, who along with SDSU Geological Sciences Professor Kim Olsen, Professor Emeritus Steven Day and researcher Daniel Roten developed the AWP-ODC code. The post SDSC Earthquake Codes Used in 2017 Gordon Bell Prize Research appeared first on insideHPC.

|

|

by Rich Brueckner on (#39ZFN)

Today AMD announced the first public cloud instances powered by the AMD EPYC processor. Microsoft Azure has deployed AMD EPYC processors in its datacenters in advance of preview for its latest L-Series of Virtual Machines (VM) for storage optimized workloads. The Lv2 VM family will take advantage of the high-core count and connectivity support of [...] The post Microsoft Azure Becomes First Global Cloud Provider to Deploy AMD EPYC appeared first on insideHPC.

|

|

by Rich Brueckner on (#39ZFP)

In this video from SC17, Martin Yip and Josh Simons from VMware describe how the company is moving Virtualized HPC forward. "In recent years, virtualization has started making major inroads into the realm of High Performance Computing, an area that was previously considered off-limits. In application areas such as life sciences, electronic design automation, financial services, Big Data, and digital media, people are discovering that there are benefits to running a virtualized infrastructure that are similar to those experienced by enterprise applications, but also unique to HPC." The post VMware moves Virtualized HPC Forward at SC17 appeared first on insideHPC.

|

|

by staff on (#39ZCA)

Today Cavium announced it is collaborating with IBM for next generation platforms by joining OpenCAPI, an initiative founded by IBM, Google, AMD and others. OpenCAPI provides a high-bandwidth, low-latency interface optimized to connect accelerators, IO devices and memory to CPUs. With this announcement Cavium plans to bring its leadership in server IO and security offloads to next generation platforms that support the OpenCAPI interface. "We are excited to be a part of the OpenCAPI consortium. As our partnership with IBM continues to grow, we see more synergies in high speed communication and Artificial Intelligence applications," said Syed Ali, founder and CEO of Cavium. "We look forward to working with IBM to enable exponential performance gains for these applications." The post Cavium Joins OpenCAPI for Next-gen Platforms appeared first on insideHPC.

|

|

by Rich Brueckner on (#39Z9C)

In this video from SC17, Dr. Kwang Jin Oh, Director of Supercomputing Service Center at KISTI, describes the new Intel-powered Cray supercomputer coming to South Korea. "Our cluster supercomputers are specifically designed to give customers like KISTI the computing resources they need for achieving scientific breakthroughs throughout a wide array of increasingly-complex, data-intensive challenges across modeling, simulation, analytics, and artificial intelligence. We look forward to working closely with KISTI now and into the future." The post Interview: Cray to Deploy Largest Supercomputer in South Korea at KISTI appeared first on insideHPC.

|

|

by staff on (#39Z6T)

Use cases show that AI technology, such as that used in an optimized Diffusion Compartment Imaging (DCI) technique, is expanding the potential of HPC. AI is thought to be a solution to many of DCI's challenges. "A complete Diffusion Compartment Imaging study can now be completed in 16 minutes on a workstation, which means Diffusion Compartment Imaging can now be used in emergency situations, in a clinical setting, and to evaluate the efficacy of treatment. Even better, higher resolution images can be produced because the optimized code scales." The post AI Technology: The Answer to Diffusion Compartment Imaging Challenges appeared first on insideHPC.

|

|

by staff on (#39WRR)

The OFA Workshop 2018 Call for Sessions encourages industry experts and thought leaders to help shape this year's discussions by presenting or leading discussions on critical high performance networking issues. Sessions are designed to educate attendees on current development opportunities, troubleshooting techniques, and disruptive technologies affecting the deployment of high performance computing environments. The OFA Workshop places a high value on collaboration and exchanges among participants. In keeping with the theme of collaboration, proposals for Birds of a Feather sessions and panels are particularly encouraged. The post Call for Sessions: OpenFabrics Alliance Workshop in Boulder appeared first on insideHPC.

|

|

by Rich Brueckner on (#39WJJ)

In this video from SC17, Adel El Hallak from IBM unveils the POWER9 servers that will form the basis of the world's fastest "Coral" supercomputers coming to ORNL and LLNL. "In addition to arming the world's most powerful supercomputers, IBM POWER9 Systems is designed to enable enterprises around the world to scale unprecedented insights, driving scientific discovery enabling transformational business outcomes across every industry." The post Video: IBM Launches POWER9 Nodes for the World's Fastest Supercomputers appeared first on insideHPC.

|

|

by staff on (#39W7Z)

Today Verne Global in Iceland announced hpcDIRECT, a powerful, agile and efficient HPC-as-a-service (HPCaaS) platform. hpcDIRECT provides a fully scalable, bare metal service with the ability to rapidly provision the full performance of HPC servers uncontended and in a secure manner. "With hpcDIRECT, we take the complexity and capital costs out of scaling HPC and bring greater accessibility and more agility in terms of how IT architects plan and schedule their workloads." The post Verne Global Launches hpcDIRECT, an HPC as a Service Platform appeared first on insideHPC.

|

|

by Rich Brueckner on (#39W81)

In this video, David Warberg gives us a quick tour of the Intel booth at SC17. "Intel unveiled new HPC advancements for optimized workloads, including the addition of a new family of HPC solutions to the Intel Select Solutions program. Built on the latest Intel Xeon Scalable platforms, Intel Select Solutions are verified configurations designed to speed and simplify the evaluation and deployment of data center infrastructure while meeting a high performance threshold." The post Intel SC17 Booth Tour: Driving Innovation in HPC appeared first on insideHPC.

|

|

by staff on (#39W4X)

Today Mellanox announced, in collaboration with NEC Corporation, support for the newly announced SX-Aurora TSUBASA systems with Mellanox ConnectX InfiniBand adapters. "We appreciate the performance, efficiency and scalability advantages that Mellanox interconnect solutions bring to our platform," said Shigeyuki Aino, assistant general manager system platform business unit, IT platform division, NEC Corporation. "The in-network computing and PeerDirect capabilities of InfiniBand are the perfect complement to the unique vector processing engine architecture we have designed for our SX-Aurora TSUBASA platform." The post Mellanox and NEC Partner to Deliver High-Performance Artificial Intelligence Platforms appeared first on insideHPC.

|

|

by Rich Brueckner on (#39W1P)

In this video from SC17 in Denver, James Coomer from DDN describes how the company is driving high performance storage for HPC. For more than 15 years, DDN has designed, developed, deployed, and optimized systems, software, and solutions that enable enterprises, service providers, universities, and government agencies to generate more value and accelerate time to insight from their data and information, on premise and in the cloud. The post DDN Simplifies High Performance Storage at SC17 appeared first on insideHPC.

|

|

by staff on (#39W1R)

New benchmarks from Computer Simulation Technology on their recently optimized 3D electromagnetic field simulation tools compare the performance of the new Intel Xeon Scalable processors with previous generation Intel Xeon processors. "Our team works with the customers in terms of testing of models and configuration settings to make good recommendations for customers so they get a well performing system and the best performance when running the models." The post Benchmarking Optimized 3D Electromagnetic Simulation Tools appeared first on insideHPC.

|

|

by Rich Brueckner on (#39S4V)

In this video from SC17 in Denver, Rich Kanadjian from Kingston describes the company's wide array of server memory and NVMe PCIe Flash solutions for HPC. "Today's supercomputing installations are capable of doing billions of calculations per second and managing data in enormous volume and velocity. Kingston continues to provide top data solutions with reliability and predictable performance for the world's most powerful HPC and enterprise big data applications, while also laying the groundwork for future innovation in data center efficiency." The post Kingston NVMe Technologies Speed Up HPC at SC17 appeared first on insideHPC.

|

|

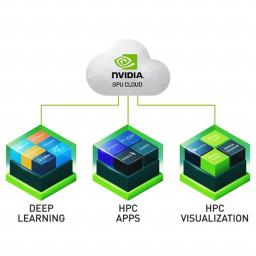

by staff on (#39S1K)

Today NVIDIA announced that hundreds of thousands of AI researchers using desktop GPUs can now tap into the power of NVIDIA GPU Cloud. "With GPU-optimized software now available to hundreds of thousands of researchers using NVIDIA desktop GPUs, NGC will be a catalyst for AI breakthroughs and a go-to resource for developers worldwide." The post Consumer GPUs come to NVIDIA GPU Cloud for AI Research appeared first on insideHPC.

|

|

by staff on (#39RW9)

In this video, Florina Ciorba from University of Basel describes the theme of the upcoming PASC18 conference. With a focus on the convergence of Big Data and Computation, the conference takes place from July 2-4, 2018 in Basel, Switzerland. "PASC18 is the fifth edition of the PASC Conference series, an international platform for the exchange of competences in scientific computing and computational science, with a strong focus on methods, tools, algorithms, application challenges, and novel techniques and usage of high performance computing." The post Video: PASC18 to Focus on Big Data & Computation appeared first on insideHPC.

|

|

by Rich Brueckner on (#39RS3)

"On the November 2017 TOP500 list, Intel-powered supercomputers accounted for six of the top 10 systems and a record high of 471 out of 500 systems. Intel Omni-Path Architecture (Intel OPA) gained momentum, delivering a majority of the petaFLOPS of systems using 100Gb fabric delivering over 80 petaFLOPS, an almost 20 percent increase compared with the June 2017 Top500 list. In addition, Intel OPA now connects almost 60 percent of nodes using 100Gb fabrics on the Top500 list. Also, Intel powered all 137 new systems added to the November list." The post Intel Omni Path Gains Momentum at SC17 appeared first on insideHPC.

|

|

by Rich Brueckner on (#39RS5)

In this podcast, the Radio Free HPC team looks at a controversy stirred up by the recent Irish Supercomputing List. The 9th Irish Supercomputer List was released this week. For the first time, Ireland has four computers ranked on the Top500. "Since the publication of the List, a third party called the Irish Centre for High-End Computing (ICHEC) has expressed concerns that the press release issued by the Irish Supercomputing List is misleading. You can read their opinion here." The post Radio Free HPC Looks at a Controversy in the Irish Supercomputing List appeared first on insideHPC.

|

|

by Rich Brueckner on (#39PAG)

In this RCE Podcast, Brock Palen and Jeff Squyres discuss PMIx with Ralph Castain from Intel. "The Process Management Interface (PMI) has been used for quite some time as a means of exchanging wireup information needed for interprocess communication. While PMI-2 demonstrates better scaling properties than its PMI-1 predecessor, attaining rapid launch and wireup of the roughly 1M processes executing across 100k nodes expected for exascale operations remains challenging." The post RCE Podcast Looks at PMIx Process Management Interface for Exascale appeared first on insideHPC.

|

|

by Rich Brueckner on (#39P8J)

Tim Pugh from the Australian Bureau of Meteorology gave this talk at the DDN User Group in Denver. "The Bureau of Meteorology, Australia's national weather, climate and water agency, relies on DDN's GRIDScaler Enterprise NAS storage appliance to handle its massive volumes of research data to deliver reliable forecasts, warnings, monitoring and advice spanning the Australian region and Antarctic territory." The post Video: Australian Bureau of Meteorology moves to a new Data Production Service appeared first on insideHPC.

|

|

by Rich Brueckner on (#39M8T)

In this video from AWS Reinvent, Anthony Liguori from Amazon presents: Nitro Hypervisor - the Evolution of Amazon EC2 Virtualization. "The new Nitro hypervisor for Amazon EC2, introduced with the launch of C5 instances, is a component that primarily provides CPU and memory isolation for C5 instances. VPC networking and EBS storage resources are implemented by dedicated hardware components that are part of all current generation EC2 instance families. It is built on core Linux Kernel-based Virtual Machine (KVM) technology, but does not include general purpose operating system components." The post Video: Introducing the Nitro Hypervisor - the Evolution of Amazon EC2 Virtualization appeared first on insideHPC.

|

|

by staff on (#39M4Z)

Computational scientists now have the opportunity to apply for the upcoming Argonne Training Program on Extreme-Scale Computing (ATPESC). The event takes place from July 29-August 10, 2018 in greater Chicago. "With the challenges posed by the architecture and software environments of today's most powerful supercomputers, and even greater complexity on the horizon from next-generation and exascale systems, there is a critical need for specialized, in-depth training for the computational scientists poised to facilitate breakthrough science and engineering using these amazing resources." The post Apply now for Argonne Training Program on Extreme-Scale Computing 2018 appeared first on insideHPC.

|

|

by Rich Brueckner on (#39HJZ)

In this video from SC17, Sunita Chandrasekaran from OpenACC.org and Stan Posey from NVIDIA describe how OpenACC eases GPU programming for HPC. "At SC17, OpenACC.org announced milestones highlighting OpenACC's broad adoption in weather and climate models that simulate the Earth's atmosphere, including one of this year's Gordon Bell finalists. Additionally, the organization announced their hackathon momentum and the new OpenACC 2.6 specification." The post Video: OpenACC Eases GPU Programming for HPC at SC17 appeared first on insideHPC.

|

|

by ralphwells on (#39HG3)

Hyperion Research recently announced the newest recipients of the HPC Innovation Excellence Awards. "High performance computing contributes enormously to scientific progress, economic competitiveness, national security and the quality of human life," said Bob Sorensen, Hyperion Research vice president of research and technology. "The winners of these awards have been judged to be among the world's best at exploiting HPC to achieve important real-world innovations." The post Hyperion Research Announces HPC Innovation Excellence Award Winners appeared first on insideHPC.

|

|

by Rich Brueckner on (#39HG4)

In this video, Akira Sano provides a tour of the Supermicro booth at SC17 in Denver. "Supermicro offers the best selection of leading HPC optimized servers in the industry as evidenced by the recent selection of our twin architecture by the NASA Center for Climate Simulation (NCCS)." The post Supermicro Booth Tour Showcases HPC Innovation at SC17 appeared first on insideHPC.

|

|

by Rich Brueckner on (#39H9Y)

New York University is seeking an HPC Specialist in our Job of the Week. "The HPC Specialist will provide technical leadership in design, development, installation and maintenance of hardware and software for the central High-Performance Computing systems and/or research computing services at New York University." The post Job of the Week: HPC Specialist at New York University appeared first on insideHPC.

|

|

by Rich Brueckner on (#39H6K)

In this video from the Intel HPC Developer Conference in Denver, Michael Strickland describes how Intel FPGAs are powering new levels of performance and datacenter efficiency. "Altera and Intel offer a broad range of FPGA devices - from the high-performing Stratix series to the flexible MAX 10 - so you can find a device that best meets your business needs." The post Intel Steps up to HPC & the Enterprise with FPGAs appeared first on insideHPC.

|

|

by Rich Brueckner on (#39E7H)

Dr. Stephan Schenk from BASF gave this talk at the DDN User Group at SC17. "With 1.75 petaflops, our supercomputer QURIOSITY offers around 10 times the computing power that BASF currently has dedicated to scientific computing. In the ranking of the 500 largest computing systems in the world, the BASF supercomputer is currently number 71." The post Video: A Petascale HPC System at BASF appeared first on insideHPC.

|

|

by staff on (#39E4G)

The European HPC Summit Week 2018 conference has released its Call for Workshops. The event takes place May 28 - June 1 in Ljubljana, Slovenia. "This call for workshops is addressed to all possible participants interested in including a session or workshop in the EHPCSW18. The procedure is to send an expression of interest to have a session/workshop and agree on a joint programme for this week. If you are an HPC project, initiative or company and you want to include a workshop or session in the European HPC Summit Week, please send the document attached before December 18, 2017." The post Call for Workshops: European HPC Summit Week 2018 appeared first on insideHPC.

|

|

by Rich Brueckner on (#39DX1)

In this video from SC17 in Denver, Michael Klemm from the OpenMP ARB describes how the OpenMP programming community is moving forward to new levels of scalable performance. The OpenMP Architecture Review Board (ARB) is seeking feedback on the newly released Technical Report 6. The post OpenMP ARB Releases New Technical Report and Asks for Feedback appeared first on insideHPC.

|

|

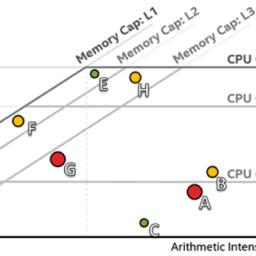

by Richard Friedman on (#39DT1)

A valuable feature of Intel Advisor is its Roofline Analysis Chart, which provides an intuitive and powerful visualization of actual performance measured against hardware-imposed performance ceilings. Intel Advisor's vector parallelism optimization analysis and memory-versus-compute roofline analysis, working together, offer a powerful tool for visualizing an application's complete current and potential performance profile on a given platform. The post A New Way to Visualize Performance Optimization Tradeoffs appeared first on insideHPC.
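
For readers unfamiliar with the idea behind a roofline chart, the sketch below illustrates the general roofline model, not Intel Advisor's implementation. It assumes a single compute ceiling and a single memory-bandwidth ceiling; the function names and the hardware numbers are hypothetical, chosen only for illustration.

```python
def attainable_gflops(peak_gflops, mem_bw_gbs, arithmetic_intensity):
    """Roofline ceiling: the lower of the compute roof and the memory roof.

    arithmetic_intensity is in FLOPs per byte moved to or from memory.
    """
    return min(peak_gflops, mem_bw_gbs * arithmetic_intensity)


def ridge_point(peak_gflops, mem_bw_gbs):
    """Arithmetic intensity at which a kernel stops being memory-bound."""
    return peak_gflops / mem_bw_gbs


# Hypothetical platform: 1000 GFLOP/s peak compute, 100 GB/s memory bandwidth.
PEAK = 1000.0
BANDWIDTH = 100.0

low_intensity = attainable_gflops(PEAK, BANDWIDTH, 0.25)   # memory-bound kernel
high_intensity = attainable_gflops(PEAK, BANDWIDTH, 20.0)  # compute-bound kernel
ridge = ridge_point(PEAK, BANDWIDTH)
```

A kernel whose measured performance sits well below the ceiling for its arithmetic intensity has headroom on that platform; one that sits at the memory roof needs better data reuse before further vectorization can help.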

|