|

by Rich Brueckner on (#179KT)

Dr. Rosa Badia from BSC/CNS presented this Invited Talk at SC15. "StarSs (Star superscalar) is a task-based family of programming models that is based on the idea of writing sequential code which is executed in parallel at run-time taking into account the data dependencies between tasks. The talk will describe the evolution of this programming model and the different challenges that have been addressed in order to consider different underlying platforms from heterogeneous platforms used in HPC to distributed environments, such as federated clouds and mobile systems." The post Video: Superscalar Programming Models – Making Applications Platform Agnostic appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence


| Field | Value |
| --- | --- |
| Link | https://insidehpc.com/ |
| Feed | http://insidehpc.com/feed/ |
| Updated | 2026-03-04 15:45 |

|

by Rich Brueckner on (#179FM)

Manufacturing is enjoying an economic and technological resurgence with the help of high performance computing. In this insideHPC webinar, you’ll learn how the power of CAE and simulation is transforming the industry with faster time to solution, better quality, and reduced costs. "Altair Engineering has partnered with SGI on Hyperworks Unlimited Physical Appliance, a turnkey solution that simplifies high performance computing for CAE. With this easy-to-deploy, unified platform, users can tackle a broad spectrum of CAE applications and workloads." The post Computer Aided Engineering Leaps Forward with HPC for Manufacturing appeared first on insideHPC.

|

|

by staff on (#176FM)

Today FlyElephant announced a number of upgrades that allow users to work with private repositories, along with improved system security and task functionality. "FlyElephant is a platform for scientists, providing a computing infrastructure for calculations, helping to find partners for the collaboration on projects, and managing all data from one place. FlyElephant automates routine tasks and helps to focus on core research issues." The post FlyElephant Platform Adds Private Repositories appeared first on insideHPC.

|

|

by staff on (#176DP)

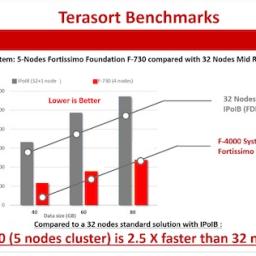

Today Italy's A3Cube announced the F-730 Family of EXA-Converged parallel systems built on Dell servers and achieving sub-microsecond latency through bare metal data access. “A3Cube’s EXA-Converged infrastructure represents the next step in the evolution of converged systems,” said Emilio Billi, A3Cube’s CTO, “while keeping and improving on the scalability and resilience of Hyper-Converged infrastructure. It is engineered to converge all system resources and provide parallel data access and inter node communication at the bare metal level, eliminating the need for, and the limits of, traditional Hyper-converged systems. The system can efficiently use all the fastest storage devices currently on the market or planned to come to market, and puts all existing solutions in the rear view mirror.” The post A3Cube Announces Exa-Converged Parallel Systems appeared first on insideHPC.

|

|

by staff on (#175S8)

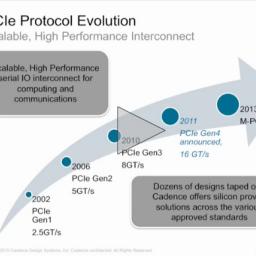

Today Cadence announced a collaboration with Mellanox Technologies to demonstrate multi-lane interoperability between Mellanox's physical interface (PHY) IP for PCIe 4.0 technology and Cadence's 16Gbps multi-link and multi-protocol PHY IP implemented in TSMC's 16nm FinFET Plus (16FF+) process. Customers seeking to develop and deploy next-generation green data centers can now use a silicon-proven IP solution from Cadence for immediate integration and fastest market deployment. Cadence and Mellanox are scheduled to demonstrate electrical interoperability for PCIe 4.0 architecture between their respective PHY solutions at the 2016 TSMC Symposium on March 15, 2016 in Santa Clara, California. The post Mellanox & Cadence Demonstrate PCI Express 4.0 Multi-Lane PHY IP Interoperability appeared first on insideHPC.

|

|

by staff on (#175QG)

Today Bright Computing announced that it has teamed up with the Germany-based ProfitBricks to provide a cutting-edge elastic HPC solution to a Swiss university. “This is a unique example of how Bright Computing can help a company move their HPC requirement to the cloud,” said Lee Carter, VP EMEA at Bright Computing. “Bright enables the university to dynamically expand and contract the infrastructure needed to support their research projects, all at the click of a button. This ensures the university only pays for the computational resources it needs, when they need them, saving time and expense.” The post Elastic Computing Comes to Swiss University from ProfitBricks and Bright appeared first on insideHPC.

|

|

by Rich Brueckner on (#174ZR)

Submissions opened today for ACM SIGHPC/Intel Computational & Data Science Fellowships. Designed to increase the diversity of students pursuing graduate degrees in data science and computational science, the program will support students pursuing degrees at institutions anywhere in the world. The post Students: Apply for ACM SIGHPC/Intel Computational & Data Science Fellowships appeared first on insideHPC.

|

|

by Rich Brueckner on (#173BY)

"This meeting is open to all Dell HPC customers and partners. During the event, we will establish the Dell HPC Community as an independent, worldwide technical forum designed to facilitate the exchange of ideas among HPC professionals, researchers, computer scientists and engineers. Our core objective is to provide an environment in which members can candidly discuss industry trends and challenges, gather direct feedback and input from HPC professionals and influence the strategic direction and development of Dell HPC Systems and ecosystems." The post Inaugural Dell HPC Community Meeting Coming to Austin April 18-21 appeared first on insideHPC.

|

|

by Rich Brueckner on (#172G1)

"U.S. President Obama signed an Executive Order creating the National Strategic Computing Initiative (NSCI) on July 31, 2015. In the order, he directed agencies to establish and execute a coordinated Federal strategy in high-performance computing (HPC) research, development, and deployment. The NSCI is a whole-of-government effort to be executed in collaboration with industry and academia, to maximize the benefits of HPC for the United States. The Federal Government is moving forward aggressively to realize that vision. This presentation will describe the NSCI, its current status, and some of its implications for HPC in the U.S. for the coming decade." The post Video: The National Strategic Computing Initiative appeared first on insideHPC.

|

|

by Rich Brueckner on (#1721P)

Today Penguin Computing announced the availability of Cyber Dyne’s KIMEME software on the POD public HPC cloud service. “It’s now possible to submit and manage large DOEs and optimization simulations flawlessly in the cloud,” said Ernesto Mininno, CEO, Cyber Dyne. “These tasks are much easier and faster thanks to the computational power of Penguin Computing’s POD HPC services.” The post Cyber Dyne’s KIMEME Software Comes to Penguin On Demand appeared first on insideHPC.

|

|

by staff on (#1721R)

Today Nvidia announced that Brookhaven National Laboratory has been named a 2016 GPU Research Center. "The center will enable Brookhaven Lab to collaborate with Nvidia on the development of widely deployed codes that will benefit from more effective GPU use, and in the delivery of on-site GPU training to increase staff and guest researchers' proficiency," said Kerstin Kleese van Dam, director of CSI and chair of the Lab's Center for Data-Driven Discovery. The post Brookhaven Lab is the latest Nvidia GPU Research Center appeared first on insideHPC.

|

|

by staff on (#171Y9)

The CSIRO national science agency in Australia has teamed up with Dell to deliver a new HPC cluster called "Pearcey." The Pearcey cluster supports CSIRO research activities in a broad range of areas such as Bioinformatics, Fluid Dynamics and Materials Science. One CSIRO researcher benefiting from using Pearcey is Dr. Dayalan Gunasegaram, a CSIRO computational modeler who is using Pearcey for the modeling work behind the development of an improved nylon mesh for use in pelvic organ prolapse (POP) surgery, which has the potential to benefit the one in five Australian women who have surgery for the condition at some point in their lives. The post Dell Powers New Pearcey Cluster at CSIRO in Australia appeared first on insideHPC.

|

|

by Rich Brueckner on (#1713H)

In this podcast, the Radio Free HPC team looks at blockchain technology with Chris Skinner, author of ValueWeb – How FinTech firms are using mobile and blockchain technologies to create the Internet of Value. "The Internet of Value, or ValueWeb for short, allows machines to trade with machines and people with people, anywhere on this planet in real-time and for free. Using a combination of technologies from mobile devices and the bitcoin blockchain, fintech firms are building the ValueWeb. The question then is what this means for financial institutions, governments and citizens?" The post Radio Free HPC Looks at How Blockchain Will Create the Internet of Value appeared first on insideHPC.

|

|

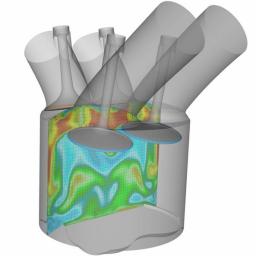

by Rich Brueckner on (#16Z1Q)

Engineers at Ilmor are using novel CFD software from Convergent Science to dramatically reduce prototype build costs for Indycar engines. "Ilmor is the company behind the design of Chevrolet’s championship-winning Indycar engine that powered Scott Dixon to the 2015 title. In 2016, the engineering firm had the opportunity to find refinements in the cylinder head. The company chose CONVERGE, a novel CFD program specifically created to assist engine designers to optimize engine design, performance, and efficiency." The post Convergent Science Speeds Indycar Engine Development appeared first on insideHPC.

|

|

by staff on (#16YZA)

Originally developed by IBM in the 1950s for scientific and engineering applications, Fortran came to dominate this area of programming early on and has been in continuous use for over half a century in computationally intensive areas such as numerical weather prediction, finite element analysis, computational fluid dynamics, computational physics and computational chemistry. It is a popular language for high-performance computing and is used for programs that benchmark and rank the world's fastest supercomputers. The post Video: What’s New with Fortran? appeared first on insideHPC.

|

|

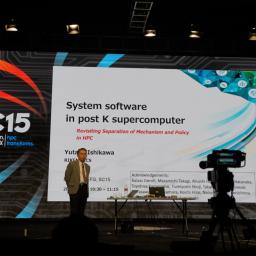

by Rich Brueckner on (#16WDT)

"The next flagship supercomputer in Japan, replacement of K supercomputer, is being designed toward general operation in 2020. Compute nodes, based on a manycore architecture, connected by a 6-D mesh/torus network is considered. A three level hierarchical storage system is taken into account. A heterogeneous operating system, Linux and a light-weight kernel, is designed to build suitable environments for applications. It can not be possible without codesign of applications that the system software is designed to make maximum utilization of compute and storage resources." The post Video: System Software in Post K Supercomputer appeared first on insideHPC.

|

|

by Rich Brueckner on (#16WCJ)

Intel in Albuquerque is seeking a Senior Cluster Architect in our Job of the Week. "The Senior Cluster Architect will be responsible for design, support, and maintenance of HPC (High Performance Computing) cluster hardware and software for high availability, consistency, and optimized performance. This role is responsible for managing Linux systems." The post Job of the Week: Senior Cluster Architect at Intel in Albuquerque appeared first on insideHPC.

|

|

by Rich Brueckner on (#16SE2)

In this TACC Podcast, Jorge Salazar reports that scientists and engineers at the Texas Advanced Computing Center have created Wrangler, a new kind of supercomputer to handle Big Data. The post Podcast: Speeding Through Big Data with the Wrangler Supercomputer appeared first on insideHPC.

|

|

by Rich Brueckner on (#16SCM)

Today SimScale in Munich announced an online F1 workshop series focused on aerodynamics. The live online sessions will take place on Thursdays at 4:00 p.m. Central European Time, on March 17, 24 and 31. "Participants will get an overview of all the functionalities offered by the SimScale platform, while learning directly from top simulation experts through an interactive workshop and practical application of the simulation technology." The post SimScale Offers Online F1 Aerodynamics Workshop appeared first on insideHPC.

|

|

by Rich Brueckner on (#16S1E)

"This talk will address one of the main challenges in high performance computing which is the increased cost of communication with respect to computation, where communication refers to data transferred either between processors or between different levels of memory hierarchy, including possibly NVMs. I will overview novel communication avoiding numerical methods and algorithms that reduce the communication to a minimum for operations that are at the heart of many calculations, in particular numerical linear algebra algorithms. Those algorithms range from iterative methods as used in numerical simulations to low rank matrix approximations as used in data analytics. I will also discuss the algorithm/architecture matching of those algorithms and their integration in several applications." The post Video: Fast and Robust Communications Avoiding Algorithms appeared first on insideHPC.

|

|

by Rich Brueckner on (#16RYJ)

“As a research area, quantum computing is highly competitive, but if you want to buy a quantum computer then D-Wave Systems, founded in 1999, is the only game in town. Quantum computing is as promising as it is unproven. Quantum computing goes beyond Moore’s law since every quantum bit (qubit) doubles the computational power, similar to the famous wheat and chessboard problem. So the payoff is huge, even though it is expensive, unproven, and difficult to program.” The post Podcast: Through the Looking Glass at Quantum Computing appeared first on insideHPC.

|

|

by staff on (#16PJB)

Today Hewlett Packard Enterprise announced HPE Haven OnDemand, an innovative cloud platform that provides advanced machine learning APIs and services that enable developers, startups and enterprises to build data-rich mobile and enterprise applications. Delivered as a service on Microsoft Azure, HPE Haven OnDemand provides more than 60 APIs and services that deliver deep learning analytics on a wide range of data, including text, audio, image, social, web and video. The post Hewlett Packard Enterprise Announces Haven OnDemand Machine Learning-as-a-Service appeared first on insideHPC.

|

|

by Rich Brueckner on (#16NBK)

"Buffered read performance under Lustre has been inexplicably slow when compared to writes or even direct IO reads. A balanced FDR-based Object Storage Server can easily saturate the network or backend disk storage using o_direct based IO. However, buffered IO reads remain at 80% of write bandwidth. In this presentation we will characterize the problem, discuss how it was debugged and proposed resolution. The format will be a presentation followed by Q&A." The post Debugging Slow Buffered Reads on the Lustre File System appeared first on insideHPC.

|

|

by staff on (#16N9F)

The discovery of gravitational waves, announced by an international team of scientists, including Cardiff University’s Gravitational Physics Group, was verified using simulations of black-hole collisions produced on a Bull supercomputer. The discovery is being considered one of the biggest breakthroughs in physics of the last 100 years, as these tiny ripples in space-time offer new insights into theoretical physics and provide scientists with new avenues to explore the universe. The post Bull Supercomputer Aids Discovery of Gravitational Waves appeared first on insideHPC.

|

|

by Rich Brueckner on (#16N7K)

Today Nvidia announced that Rob High, IBM Fellow, VP and chief technology officer for Watson, will deliver a keynote at its GPU Technology Conference on April 6. High will describe the key role GPUs will play in creating systems that understand data in human-like ways. "Late last year, IBM announced that its Watson cognitive computing platform has added NVIDIA Tesla K80 GPU accelerators. As part of the platform, GPUs enhance Watson’s natural language processing capabilities and other key applications." The post IBM Watson CTO Rob High to Keynote GPU Technology Conference appeared first on insideHPC.

|

|

by MichaelS on (#16N47)

"Vector instruction sets have progressed over time, and it is important to use the most appropriate vector instruction set when running on specific hardware. The OpenMP SIMD directive allows the developer to explicitly tell the compiler to vectorize a loop. In this case, human intervention will override the compiler's sense of dependencies, but that is OK if the developer knows their application well." The post OpenMP and SIMD Instructions on Intel Xeon Phi appeared first on insideHPC.

|

|

by veronicahpc1 on (#16D8C)

In this week’s Sponsored Post, Katie Garrison of One Stop Systems explains how Flash storage arrays are becoming more accessible as the economics of Flash becomes more attractive. "Comprised of a unique combination of a Haswell-based engine and 200TB Flash arrays, the FSA-SAN can be increased to a petabyte of storage with additional Flash arrays. Each 200TB array delivers 16 million IOPS, making it the ideal platform for high-speed data recording and processing with lightning fast data response time, high-availability and flexibility in the cloud." The post Flash Storage Arrays in HPC Applications appeared first on insideHPC.

|

|

by Rich Brueckner on (#16HVV)

In this slidecast, Jeff Squyres from Cisco Systems presents: How to make MPI Awesome - MPI Sessions. As a proposal for future versions of the MPI Standard, MPI Sessions could become a powerful tool to improve system resiliency as we move towards exascale. "Now that we have brought these ideas to a larger audience, my hope is that we (the Forum) start refining these ideas to fit them into a future release of the MPI standard. Meaning: please don’t assume that exactly what is proposed in these slides are going to make it into the MPI standard." The post Slidecast: How to Make MPI Awesome – MPI Sessions appeared first on insideHPC.

|

|

by john kirkley on (#16HVX)

In this special guest feature, John Kirkley writes that Argonne is already building code for their future Theta and Aurora supercomputers based on Intel Knights Landing. "One of the ALCF’s primary tasks is to help prepare key applications for two advanced supercomputers. One is the 8.5-petaflops Theta system based on the upcoming Intel® Xeon Phi™ processor, code-named Knights Landing (KNL) and due for deployment this year. The other is a larger 180-petaflops Aurora supercomputer scheduled for 2018 using Intel Xeon Phi processors, code-named Knights Hill. A key goal is to solidify libraries and other essential elements, such as compilers and debuggers that support the systems’ current and future production applications." The post Paving the Way for Theta and Aurora appeared first on insideHPC.

|

|

by Rich Brueckner on (#16H7Z)

Rich Graham presented this talk at the Stanford HPC Conference. "Exascale levels of computing pose many system- and application- level computational challenges. Mellanox Technologies, Inc. as a provider of end-to-end communication services is progressing the foundation of the InfiniBand architecture to meet the exascale challenges. This presentation will focus on recent technology improvements which significantly improve InfiniBand’s scalability, performance, and ease of use." The post Rich Graham Presents: The Exascale Architecture appeared first on insideHPC.

|

|

by Rich Brueckner on (#16H65)

"Lenovo is on a mission to become the market leader in datacenter solutions," said Gerry Smith, Lenovo executive vice president and chief operating officer, PC and Enterprise Business Group. "We will continue to invest in the development and delivery of disruptive IT solutions to shape next-generation data centers. Our partnership with Juniper Networks provides Lenovo access to an industry leading portfolio of products that include Software Defined Networking (SDN) solutions – essential for state-of-the-art data center offerings." The post Lenovo and Juniper Networks Partner for Next-gen Datacenter Infrastructure appeared first on insideHPC.

|

|

by MichaelS on (#16GH7)

Cloud computing has become a strong alternative to in-house data centers for a large percentage of all enterprise needs. Most enterprises are adopting some form of cloud computing, with some estimates suggesting that as many as 90% are putting workloads into a public cloud infrastructure. The whitepaper, Empowering Cloud Utilization with Cloud Bursting, is an excellent summary of various options for enterprises that are planning to use a public cloud infrastructure. The post Empowering Cloud Utilization with Cloud Bursting appeared first on insideHPC.

|

|

by staff on (#16EC7)

Today IBM announced that it is opening a new Cloud Data Center in Johannesburg, South Africa. The new cloud center is the result of a close collaboration with Gijima and Vodacom and is designed to support cloud adoption and customer demand across the continent. IBM will provide clients with a complete portfolio of cloud services for running enterprise and as-a-service workloads. The post IBM Opens First Cloud Datacenter in South Africa appeared first on insideHPC.

|

|

by Rich Brueckner on (#16DFQ)

"UberCloud specializes in running HPC workloads on a broad spectrum of infrastructures, anywhere from national centers to public Cloud services. This session will be review of the learnings of UberCloud Experiments performed by industry end users. The live demonstration will cover how to achieve peak simulation performance and usability in the Cloud and national centers, using fast interconnects, new generation CPU's, SSD drives and UberCloud technology based on Linux containers." The post Video: Ubercloud Workloads & Marketplace appeared first on insideHPC.

|

|

by Rich Brueckner on (#16DC3)

Today Seagate unveiled a production-ready unit of the fastest single solid-state drive (SSD) demonstrated to date, with throughput performance of 10 gigabytes per second. The early unit meets Open Compute Project (OCP) specifications, making it ideal for hyperscale data centers looking to adopt the fastest flash technology with the latest and most sustainable standards. "The 10GB/s unit, which is expected to be released this summer, is more than 4GB/s faster than the previous fastest-industry SSD on the market. It also meets the OCP storage specifications being driven by Facebook, which will help reduce the power and cost burdens traditionally associated with operating at this level of performance." The post Seagate Demonstrates 10 GB/sec SSD Flash Drive for OCP appeared first on insideHPC.

|

|

by Rich Brueckner on (#16DAC)

Registration is now open for ISC High Performance, the largest high performance computing forum in Europe. By registering between now and May 11, attendees can save over 45 percent off the onsite registration rates. Now in its 31st year, the ISC High Performance conference and exhibition will be held from June 19 - 23 in Frankfurt. The post Registration Opens for ISC 2016 appeared first on insideHPC.

|

|

by Rich Brueckner on (#16CFF)

The 2016 OpenFabrics Workshop has posted their speaker agenda with session abstracts. The event takes place April 4-8, 2016 in Monterey, California. "The Workshop is the premier event for collaboration between OpenFabrics Software (OFS) producers and those whose systems and applications depend on the technology. Every year, the workshop generates lively exchanges among Alliance members, developers and users who all share a vested interest in high performance networks." The post Agenda Posted for OpenFabrics Workshop in Monterey appeared first on insideHPC.

|

|

by staff on (#169FX)

Today Advanced Clustering Technologies announced it has partnered with CD-adapco to offer the company’s industry-leading engineering simulation software solution, STAR-CCM+, to customers using Advanced Clustering’s on demand HPC cluster in the cloud, ACTnowHPC. “We’re pleased to announce that our HPC cloud now makes STAR-CCM+ immediately accessible to engineers who purchase the license from CD-adapco,” said Kyle Sheumaker, President of Advanced Clustering Technologies. “With STAR-CCM+, we’re making it easier than ever for our customers to enhance workflow productivity in order to discover better designs faster.” The post STAR-CCM+ Moves to the Cloud with ACTnowHPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#169E3)

"Rescale provides a unified HPC simulation platform for the Enterprise IT environment. Rescale’s platform integrates with existing job schedulers to burst workloads to cloud computing resources. We provide high performance computing options such as InfiniBand-connected and GPU-accelerated nodes that can be provisioned on-demand. We will demo an example workload on such an on-demand cluster. Finally, we will cover the Rescale administration panel for managing your cloud/on-premise connectivity for software licenses and single sign-on authentication." The post Video: Boosting HPC with Cloud appeared first on insideHPC.

|

|

by staff on (#169BW)

At the Open Compute Project Summit this week, ASRock Rack will showcase its OCP3-1L and OCP3-6S servers for cloud-based datacenter and High Performance Computing. The event is the annual gathering for industry’s top leaders to discuss the new developments of OCP technology - an open design for datacenter products initiated by Facebook. The post ASRock Rack Joins Open Compute Project U.S. Summit 2016 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1698P)

Today Mellanox unveiled its next-generation Open Composable Networks (OCN) platform at the Open Compute Project (OCP) Summit. OCN delivers ground-breaking open network platforms for enterprises and service providers to unlock performance and unleash innovation with predictable application performance and the efficiency of Web-Scale IT. The post Mellanox Introduces Open Composable Networks for OCP Platforms appeared first on insideHPC.

|

|

by staff on (#1696R)

Today E4 Computer Engineering announced that it has recently sealed an agreement with BOXX Technologies to become their exclusive manufacturing and distribution partner for Italy and Switzerland. As one of the world’s leading performance computing brands, BOXX Technologies manufactures bespoke high performance workstations for a range of industries and boasts a blue chip client list including Boeing, Disney, MIT, NASA, and Nike. The post E4 Computer Engineering to Distribute BOXX Technologies in Italy and Switzerland appeared first on insideHPC.

|

|

by Rich Brueckner on (#166BF)

"Today’s server systems provide many knobs which influence energy efficiency and performance. Some of these knobs control the behavior of the operating systems, whereas others control the behavior of the hardware itself. Choosing the optimal configuration of the knobs is critical for energy efficiency. In this talk recent research results will be presented, including examples of big data applications that consume less energy when dynamic tuning is employed." The post Best Practices – Dynamic Tuning for Energy Efficiency appeared first on insideHPC.

|

|

by staff on (#1668Y)

Today SGI announced that ŠKODA AUTO has deployed an SGI UV and two SGI ICE high performance computing systems to further enhance its computer-aided engineering capabilities. "Customer satisfaction and the highest standard of production are at the very core of our brand and is the driving force behind our innovation processes," said Petr Rešl, head of IT Services, ŠKODA AUTO. "This latest installation enables us to conduct complex product performance and safety analysis that will in turn help us to further our commitment to our customer's welfare and ownership experience. It helps us develop more innovative vehicles at an excellent value-to-price ratio." The post Trio of SGI Systems to Drive Innovation at SKODA AUTO appeared first on insideHPC.

|

|

by Rich Brueckner on (#163NT)

In this video from the 2016 Stanford HPC Conference, Sumit Sanyal from Minds.ai presents: Deep Learning: Convergence of HPC & Hyperscale. "Minds.ai is an early stage startup building software and hardware infrastructure to deploy, manage and accelerate Deep Learning Networks. minds.ai (maɪndz-aɪ) is developing a deep neural network training platform with disruptive acceleration performance. minds.ai’s platform makes the power of High Performance Computing available to the deep learning community and equips a new generation of developers with the tools needed to quickly refine and deploy neural networks into their businesses." The post Accelerating Deep Learning with HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#163NW)

Stony Brook University is seeking a Senior HPC Engineer in our Job of the Week. "The Senior HPC Engineer will be responsible for day-to-day oversight, integration, administration & maintenance of the HPC Clusters. The selected candidate will participate in hardware decisions, prepare training materials and assisting advanced users." The post Job of the Week: Senior HPC Engineer at Stony Brook University appeared first on insideHPC.

|

|

by Rich Brueckner on (#160P5)

In this podcast, the Radio Free HPC team looks at Dell's acquisition of EMC, which is expected to close soon pending regulatory approval. "The transaction combines two of the world’s greatest technology franchises—with leadership positions in Servers, Storage, Virtualization and PCs—and brings together strong capabilities in the fastest growing areas of our industry, including Digital Transformation, Software Defined Data Center, Hybrid Cloud, Converged Infrastructure, Mobile and Security." The post Radio Free HPC Looks at Dell’s Imminent Acquisition of EMC appeared first on insideHPC.

|

|

by Rich Brueckner on (#160J3)

"Co-Design is a collaborative effort among industry thought leaders, academia, and manufacturers to reach Exascale performance by taking a holistic system-level approach to fundamental performance improvements. Co-Design architecture enables all active system devices to become acceleration devices by orchestrating a more effective mapping of communication between devices in the system. This produces a well-balanced architecture across the various compute elements, networking, and data storage infrastructures that exploits system efficiency and even reduces power consumption." The post Interview: Why Co-design is the Path Forward for Exascale Computing appeared first on insideHPC.

|

|

by staff on (#160CR)

Today AMD announced that CGG, a pioneering global geophysical services and equipment company, has deployed AMD FirePro S9150 server GPUs to accelerate its geoscience oil and gas research efforts, harnessing more than 1 PetaFLOPS of GPU processing power. Employing AMD’s HPC GPU Computing software tools available on GPUOpen.com, CGG rapidly converted its in-house Nvidia CUDA code to OpenCL for seismic data processing running on an AMD FirePro S9150 GPU production cluster, enabling fast, cost-effective GPU-powered research. The post With GPUOpen, CGG Fuels Petroleum Exploration using AMD FirePro GPUs appeared first on insideHPC.

|

|

by Rich Brueckner on (#16098)

In this video from the 2016 Stanford HPC Conference, Gilad Shainer from the HPC Advisory Council moderates a panel discussion on Exascale Computing. "Exascale computing will uniquely provide knowledge leading to transformative advances for our economy, security and society in general. A failure to proceed with appropriate speed risks losing competitiveness in information technology, in our industrial base writ large, and in leading-edge science." The post Video: Panel Discussion on Exascale Computing appeared first on insideHPC.

|