|

by Rich Brueckner on (#1B2JZ)

Today Spectra Logic announced the Spectra TFinity ExaScale Edition, the world’s largest and most richly-featured tape storage system. "Since 2008, Spectra Logic has worked with engineers in the NASA Advanced Supercomputing (NAS) Division at NASA’s Ames Research Center, in California’s Silicon Valley, first deploying a Spectra tape library with 22 petabytes of capacity. According to NASA, the Spectra tape library’s capacity has grown to approximately one half an Exabyte of archival storage today. After extensive testing over the past year, NASA recently deployed a Spectra TFinity ExaScale Edition in their 24x7 production HPC environment." The post Spectra Logic Rolls Out World’s Largest Capacity Tape Library appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-04 14:00 |

|

by staff on (#1B228)

Today Intel announced the inception of the Intel Data Analytics Acceleration Library (Intel DAAL) open source project. "Intel DAAL helps to speed up big data analysis by providing highly optimized algorithmic building blocks for all stages of data analytics (preprocessing, transformation, analysis, modeling, validation, and decision making) in batch, online, and distributed processing modes of computation. The open source project is licensed under Apache License 2.0." The post Intel DAAL Data Analytics Acceleration Library Moves to Open Source appeared first on insideHPC.

|

|

by staff on (#1B20B)

Today Altair announced that eleven international customers participated in the company's recent HPC Cloud Challenge. The contest was set up to demonstrate the benefits of leveraging the cloud for large-scale design exploration in the area of computer-aided engineering. Organizations of all sizes from manufacturing and academic fields participated in the Challenge, utilizing Altair technologies in structural, CFD and design studies, and expressed great satisfaction with the program overall. The post Altair HPC Cloud Challenge Shows Customers a New Way Forward appeared first on insideHPC.

|

|

by Rich Brueckner on (#1B1W5)

The Extreme Science and Engineering Discovery Environment (XSEDE), a five-year project supported by the US National Science Foundation, has awarded 324 million CPU hours, valued at $16.2 million, to 150 research projects throughout the US. The post XSEDE Awards 324 Million CPU hours to NSF Research Projects appeared first on insideHPC.
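
As a quick sanity check on the figures above, the stated totals imply a value per CPU hour and an average allocation per project. The sketch below only divides the numbers quoted in the summary; the variable names are illustrative, and actual awards vary widely by project.

```python
# Figures quoted in the XSEDE announcement summary.
cpu_hours_awarded = 324_000_000      # total CPU hours awarded
award_value_usd = 16_200_000         # stated dollar value of the award
research_projects = 150              # number of projects receiving hours

# Implied value of a single CPU hour, in dollars.
value_per_cpu_hour = award_value_usd / cpu_hours_awarded

# Average allocation per project (a simple mean, not actual award sizes).
mean_hours_per_project = cpu_hours_awarded / research_projects
mean_value_per_project = award_value_usd / research_projects

print(f"Implied value per CPU hour: ${value_per_cpu_hour:.2f}")
print(f"Mean CPU hours per project: {mean_hours_per_project:,.0f}")
print(f"Mean value per project: ${mean_value_per_project:,.0f}")
```

The stated valuation works out to five cents per CPU hour, with a mean of 2.16 million hours per project.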

|

|

by Rich Brueckner on (#1B1TK)

Michael Resch from HLRS gave this rousing talk at the HPC User Forum. "HLRS supports national and European researchers from science and industry by providing high-performance computing platforms and technologies, services and support. Supercomputer Hazel Hen, a Cray XC40-system, is at the heart of the HPC system infrastructure of the HLRS. With a peak performance of 7.42 Petaflops (quadrillion floating point operations per second), Hazel Hen is one of the most powerful HPC systems in the world (position 8 of TOP500, 11/2015) and is the fastest supercomputer in the European Union. The HLRS supercomputer, which was taken into operation in October 2015, is based on the Intel Haswell Processor and the Cray Aries network and is designed for sustained application performance and high scalability." The post Video: Europe’s Fastest Supercomputer and the World Around It appeared first on insideHPC.

|

|

by Rich Brueckner on (#1B0ZV)

In this slidecast, Gilad Shainer from Mellanox describes the advantages of InfiniBand and the company's off-loading network architecture for HPC. “The path to Exascale computing is clearly paved with Co-Design architecture. By using a Co-Design approach, the network infrastructure becomes more intelligent, which reduces the overhead on the CPU and streamlines the process of passing data throughout the network. A smart network is the only way that HPC data centers can deal with the massive demands to scale, to deliver constant performance improvements, and to handle exponential data growth.” The post Slidecast: Advantages of Offloading Architectures for HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#1AYP3)

Rangan Sukumar from ORNL presented this talk at the HPC User Forum in Tucson. "ORiGAMI is a tool for discovering and evaluating potentially interesting associations and creating novel hypotheses in medicine. ORiGAMI will help you “connect the dots” across 70 million knowledge nuggets published in 23 million papers in the medical literature. The tool works on a ‘Knowledge Graph’ derived from SEMANTIC MEDLINE published by the National Library of Medicine integrated with scalable software that enables term-based, path-based, meta-pattern and analogy-based reasoning principles." The post ORiGAMI – Oak Ridge Graph Analytics for Medical Innovation appeared first on insideHPC.

|

|

by Rich Brueckner on (#1AYEQ)

Today Intersect360 Research published a new research report on the Hyperscale market. "This report provides definitions, segmentations, and dynamics of the hyperscale market and describes its scope, the end-user applications it touches, and the market drivers and dampers for future growth. It is the foundational report for the Intersect360 Research hyperscale market advisory service." The post Intersect360 Publishes New Report on the Hyperscale Market appeared first on insideHPC.

|

|

by Rich Brueckner on (#1AXYE)

Matt Vaughan from TACC presented this talk at the HPC User Forum. "Jetstream is the first user-friendly, scalable cloud environment for XSEDE. The system enables researchers working at the 'long tail of science' and the creation of truly customized virtual machines and computing architectures. It has a web-based user interface integrated with XSEDE via Globus Auth. The architecture is derived from the team's collective experience with CyVerse Atmosphere, Chameleon and Quarry. The system also fosters reproducible, sharable computing with geographically isolated clouds located at Indiana University and TACC." The post Jetstream – Adding Cloud-based Computing to the National Cyberinfrastructure appeared first on insideHPC.

|

|

by Rich Brueckner on (#1AXSH)

The NVIDIA DGX-1 features up to 170 teraflops of half precision (FP16) peak performance, 8 Tesla P100 GPU accelerators with 16GB of memory per GPU, 7TB SSD DL Cache, and an NVLink Hybrid Cube Mesh. Packaged with fully integrated hardware and easily deployed software, it is the world’s first system built specifically for deep learning, with NVIDIA's Pascal-powered Tesla P100 accelerators interconnected by NVIDIA's NVLink. NVIDIA designed the DGX-1 to meet the never-ending computing demands of artificial intelligence and claims it can provide the throughput of 250 CPU-based servers in a single box. The post Exxact to Distribute NVIDIA DGX-1 Deep Learning System appeared first on insideHPC.
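
The system-level figures quoted above can be broken down per GPU with simple arithmetic. The sketch below uses only the numbers in the summary (170 teraflops FP16 peak, 8 GPUs, 16GB per GPU); the variable names are illustrative, and the per-GPU result is a derived peak figure, not a measured one.

```python
# System-level figures quoted for the NVIDIA DGX-1.
system_fp16_peak_tflops = 170    # "up to" half-precision peak for the whole box
gpu_count = 8                    # Tesla P100 accelerators
memory_per_gpu_gb = 16           # GPU memory per accelerator

# Implied FP16 peak per GPU and total GPU memory in the system.
fp16_peak_per_gpu_tflops = system_fp16_peak_tflops / gpu_count
total_gpu_memory_gb = gpu_count * memory_per_gpu_gb

print(f"Implied FP16 peak per GPU: {fp16_peak_per_gpu_tflops} TFLOPS")
print(f"Total GPU memory: {total_gpu_memory_gb} GB")
```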

|

|

by Douglas Eadline on (#1AXEP)

In today's highly competitive world, High Performance Computing (HPC) is a game changer. Though not as splashy as many other computing trends, the HPC market has continued to show steady growth and success over the last several decades. Market forecaster IDC expects the overall HPC market to hit $31 billion by 2019 while riding an 8.3% CAGR. The HPC market cuts across many sectors including academic, government, and industry. Learn which industries are using HPC and why. The post Who Is Using HPC (and Why)? appeared first on insideHPC.

|

|

by Rich Brueckner on (#1ATTE)

In this video, Siraj Raval from Twilio presents a quick tutorial on How to Build a Neural Net in 4 Minutes. Siraj describes himself as the Bill Nye of Computer Science. The post Video: How to Build a Neural Net in 4 Minutes appeared first on insideHPC.

|

|

by Rich Brueckner on (#1ATSC)

The hpc-ch Forum on Intra- and Inter-Site Networking has posted its Call for Participation. Hosted by the University of Zurich, the event will take place Thursday, May 19, 2016. The post Call for Participation: hpc-ch Forum on Intra- and Inter-Site Networking appeared first on insideHPC.

|

|

by Rich Brueckner on (#1AR4C)

"Over the last years the OFA community has shown the potential of using high performance networks (InfiniBand) to boost the performance of virtualized cloud environments; however, the network reconfiguration challenges still continue to exist. In this session we present the work we have been doing on InfiniBand subnet management and routing, in the context of dynamic cloud environments. This work includes, but is not limited to, techniques to provide better management scalability when virtual machines are live migrating, tenant network isolation in multi-tenant environments, and fast performance-driven network reconfiguration." The post Using High Performance Interconnects in Dynamic Environments appeared first on insideHPC.

|

by Rich Brueckner on (#1AR2D)

DDN in Santa Clara is seeking a Senior Director of HPC Sales in our Job of the Week. "We are currently seeking an Account Executive to drive the development of new Cloud Content & Media accounts in the Bay Area. The role requires both hunting for new accounts and growing our installed base. The Account Executive must have strong direct sales experience as well as the ability to sell with our channel partners. The successful candidate must possess a strong storage background and have experience driving million dollar plus deals. Experience selling into cloud and content providers a plus." The post Job of the Week: Senior Director of HPC Sales at DDN appeared first on insideHPC.

|

by Rich Brueckner on (#1AMM6)

"This talk will present RDMA-based designs using OpenFabrics Verbs and heterogeneous storage architectures to accelerate multiple components of Hadoop (HDFS, MapReduce, RPC, and HBase), Spark and Memcached. It will also give an overview of the associated RDMA-enabled software libraries being designed and publicly distributed as a part of the HiBD project." The post Video: Exploiting HPC Technologies to Accelerate Big Data Processing appeared first on insideHPC.

|

|

by Rich Brueckner on (#1AMGH)

Hadoop and Spark clusters have a reputation for being extremely difficult to configure, install, and tune, but help is on the way. The good folks at Cluster Monkey are hosting a crash course entitled Apache Hadoop with Spark in One Day. "After completing the workshop attendees will be able to use and navigate a production Hadoop cluster and develop their own projects by building on the workshop examples." The post Learn Apache Hadoop with Spark in One Day appeared first on insideHPC.

|

|

by Rich Brueckner on (#1AMEY)

Today Silicon Mechanics announced that the company was recently named one of Intel Corporation’s 2015 ISS Partners of the Year, specifically for Intel’s Channel Cares program. The ISS Partner of the Year award is given out each year at the company’s Intel Solutions Summit to 16 different partners across 16 different categories ranging from retail to security to healthcare, cloud solutions and more. This is the first time that Silicon Mechanics has appeared on the prestigious list. The post Silicon Mechanics is Intel’s ‘Channel Cares’ Partner of the Year appeared first on insideHPC.

|

|

by Rich Brueckner on (#1AMCR)

Today ISC 2016 announced that five renowned experts in computational science will participate in their new Distinguished Speaker series. Topics will include exascale computing efforts in the US, the next supercomputers in development in Japan and China, cognitive computing advancements at IBM, and quantum computing research at NASA. The post Distinguished Speaker Series Coming to ISC 2016 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1AM8N)

"At Verne Global we combine low power costs, free cooling for 365 days of the year and optimized infrastructure to reduce the total costs of ownership for your data center. Our customers have achieved savings of more than 80% on power alone. The only place in the world where your data center can operate with 100% sustainable green power is at Verne Global's Icelandic campus. Powered by geothermal and hydro-electric sources, your IT power costs will be stable and predictable for up to 20 years." The post How Verne Global is Moving HPC Workloads to Iceland appeared first on insideHPC.

|

|

by MichaelS on (#1AGH3)

"NWPerf is software that can measure and collect a wide range of performance data about an application or set of applications that run on a cluster. With minimal impact on performance, NWPerf can gather historical information that then can be used in a visualization package. The data collected includes the power consumption using the Intelligent Platform Management Interface (IPMI) for the Intel Xeon processor and the libmicmgmt API for the Intel Xeon Phi coprocessor. Once the data is collected, and using some data extraction mechanisms, it is possible to examine the power used across the cluster, while the application is running." The post Monitoring Power Consumption with the Intelligent Platform Management Interface appeared first on insideHPC.

|

|

by Rich Brueckner on (#1AG9S)

In this video, Steve Hebert from Nimbix presents: The Internet of Machines. "The good folks at Nimbix have also posted a full set of video presentations from their recent Developer Summit. The event brought together the best and brightest minds building the next generation of cloud computing applications. The invigorating discussions span topics from rendering and simulation to big data and machine learning, and everything in between." The post Video: The Internet of Machines appeared first on insideHPC.

|

|

by staff on (#1AG80)

Astronomers are using the Blue Waters supercomputer and the ALMA telescope in Chile to investigate the location of a dwarf dark galaxy. Subtle distortions hidden in ALMA’s stunning image of the gravitational lens SDP.81 are telltale signs that a dwarf dark galaxy is lurking in the halo of a much larger galaxy nearly 4 billion light-years away. This discovery paves the way for ALMA to find many more such objects and could help astronomers address important questions on the nature of dark matter. The post Supercomputing the Dwarf Dark Galaxy Hidden in ALMA Gravitational Lens Image appeared first on insideHPC.

|

|

by Rich Brueckner on (#1AG5V)

In this podcast, the Radio Free HPC team recaps the GPU Technology Conference, which wrapped up last week in San Jose.

|

|

by Rich Brueckner on (#1AG3V)

A research team at the Ohio Supercomputer Center (OSC) is beginning the task of modernizing a computer software package that leverages large-scale, 3-D modeling to research fatigue and fracture analyses, primarily in metals. "The research is a result of OSC being selected as an Intel Parallel Computing Center. The Intel PCC program provides funding to universities, institutions and research labs to modernize key community codes used across a wide range of disciplines to run on current state-of-the-art parallel architectures. The primary focus is to modernize applications to increase parallelism and scalability through optimizations that leverage cores, caches, threads and vector capabilities of microprocessors and coprocessors." The post Modernizing Materials Code at OSC’s Intel Parallel Computing Center appeared first on insideHPC.

|

|

by Rich Brueckner on (#1AG1A)

In this video from the HPC User Forum in Tucson, Saul Gonzalez Martirena from NSF provides an update on the NSCI initiative. “As a coordinated research, development, and deployment strategy, NSCI will draw on the strengths of departments and agencies to move the Federal government into a position that sharpens, develops, and streamlines a wide range of new 21st century applications. It is designed to advance core technologies to solve difficult computational problems and foster increased use of the new capabilities in the public and private sectors.” The post NSCI Update from the HPC User Forum appeared first on insideHPC.

|

|

by Rich Brueckner on (#1ACS4)

"Group Deutsche Boerse is a global financial service organization covering the entire value chain from trading, market data, clearing, settlement to custody. While reliability has been a fundamental requirement for exchanges since the introduction of electronic trading systems in the 1990s, for about 10 years low and predictable latency of the entire system has also been a major design objective. Both issues were important architecture considerations when Deutsche Boerse started to develop an entirely new derivatives trading system T7 for its options market in the US (ISE) in 2008. As the best fit at the time, a combination of InfiniBand with IBM WebSphere MQ Low Latency Messaging (WLLM) was chosen as the messaging solution. Since then the same system has been adopted for EUREX, one of the largest derivatives exchanges in the world, and is now also extended to cover cash markets. The session will present the design of the application and its interdependence with the combination of InfiniBand and WLLM. Practical experiences with InfiniBand over the last couple of years will also be reflected upon." The post Video: InfiniBand as Core Network in an Exchange Application appeared first on insideHPC.

|

|

by Rich Brueckner on (#1ACMR)

Today One Stop Systems announced the results of High-Tech Online Magazine Tom's IT PRO's review of the 200TB Flash Storage Array SAN (FSA-SAN). OSS' FSA-SAN provides 200TB of shared Flash RAIDed memory with a choice of the fastest SAN connectivity options including Fibre Channel, InfiniBand, and iSCSI. The post One Stop Systems 200TB Flash Storage Array SAN gets Rave Review appeared first on insideHPC.

|

|

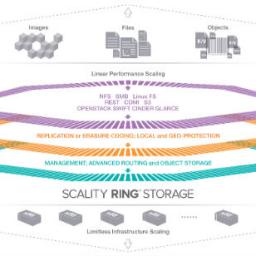

by Rich Brueckner on (#1AC6D)

Today Scality announced the production deployment of the Scality RING to power Los Alamos National Laboratory’s Trinity supercomputer, projected to be one of the world’s fastest. Trinity, part of the NNSA Advanced Simulation and Computing Program, is expected to be the first platform large and fast enough to begin to accommodate finely resolved 3D calculations for mission-critical simulations. As part of the deployment, Scality is also working together with Los Alamos on MarFS, an open source software project that brings the power of object storage to all large-scale research computing environments, including the U.S. Department of Energy. The post LANL Looks to Scality RING Storage for Trinity Supercomputer appeared first on insideHPC.

|

|

by Rich Brueckner on (#1AC52)

"Cavium ThunderX has significant differentiation in the 64-bit ARM market as Cavium is the first ARMv8 vendor to deliver dual socket support with full ARMv8.1 implementation and significant advantage in CPU cores with 48 cores per socket. In addition, ThunderX supports large memory capacity (512GB per socket, 1TB in a 2S system) with excellent memory bandwidth and low memory latency. In addition, ThunderX includes multiple 10 GbE / 40GbE network interfaces delivering excellent IO throughput. These features enable ThunderX to deliver the core performance & scale out capability that the HPC market requires." The post Interview: Cavium to Move ARM Forward for HPC at ISC 2016 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1ABAD)

In this video from the HPC User Forum in Tucson, Earl Joseph from IDC presents: 2016 IDC HPC Market Update. "The HPC User Forum was established in 1999 to promote the health of the global HPC industry and address issues of common concern to users. The organization has since grown to 150 members." The post HPC Market Update and IDC’s Top Growth Areas for 2016 and Beyond appeared first on insideHPC.

|

|

by Rich Brueckner on (#1A8SZ)

Today ISC 2016 announced the award-winning research papers for the PRACE ISC Award and the Gauss Award. The post ISC 2016 Announces Award-Winning Research Papers appeared first on insideHPC.

|

|

by Rich Brueckner on (#1A8DC)

"Atos is one out of three or four worldwide players having the expertise and know-how to build supercomputers today – and the only one in Europe. It is a source of pride for our company and provides a unique competitive advantage for our clients. With Atos’ Bull sequana astounding compute performance, businesses can now more efficiently maximize the value of data on a daily basis. By 2020, Bull sequana will reach exaflops level and will be able to process a billion billion operations per second," says Atos Chairman and CEO Thierry Breton. The post Atos Rolls Out Bull sequana “The World’s Most Efficient Supercomputer” appeared first on insideHPC.

|

|

by staff on (#1A80Z)

If you are in the Northwest and you happen to like surf and turf, have I got a deal for you! Dell is hosting a series of Big Data lunch events in Seattle and Portland at the end of April. On April 26, Dell brings the event to Blueacre Seafood in Seattle. In Portland, lunch is on April 27 at the mighty Fogo de Chao, a Brazilian steak house for the Where's the Beef? crowd. They're also coming to Flemings in Salt Lake City on April 28. The post Get Some Big Lunch at the Big Data & HPC Events coming to Portland & Seattle appeared first on insideHPC.

|

|

by Rich Brueckner on (#1A7VS)

In this video from the 2016 GPU Technology Conference, Jason Pai from Supermicro describes the new 1028GQ-TRT SuperServer. With support for up to four Nvidia Tesla K80 GPUs, the SuperServer offers extreme compute density in 1U of rack space. "From HPC to Deep Learning and Big Data Analytics, denser, more powerful GPU solutions have become a necessity in order to service the next generation of GPU-accelerated applications. At GTC, Supermicro demonstrated how these applications have progressed, and how its GPU solutions are influencing this evolution." The post Supermicro Showcases New GPU SuperServer at GTC 2016 appeared first on insideHPC.

|

|

by staff on (#1A7N8)

According to the latest Intersect360 Research site census data, of the 50 most popular application packages mentioned by HPC users, 34 offer GPU support, including 9 of the top 10. As is evident from the number of GPU-accelerated applications available in areas such as chemical research, physics, structural analysis, and visualization, the use of this accelerator technology has become well established in the HPC user community. The post Discover why GPUs are driving the future of HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#1A50F)

Today the SME HPC Adoption Program in Europe announced the winners from its recent Call for Proposals. SHAPE is a pan-European program supported by PRACE which aims to raise awareness and provide European SMEs with the expertise necessary to take advantage of the innovation possibilities created by HPC, thus increasing their competitiveness. The post SHAPE Selects Eight Innovative HPC Projects for Access to PRACE Supercomputers appeared first on insideHPC.

|

|

by Rich Brueckner on (#1A4YR)

In this video from the 2016 GPU Technology Conference, Jaan Mannik from One Stop Systems describes the GPUltima system. Delivering up to 1 Petaflop in a rack, the GPUltima is a single 19" rack comprised of 8 OSS High Density Compute Accelerators (HDCA) each with 16 NVIDIA Dual GPUs (128 total), 16 dual-socket servers, an InfiniBand Switch and an Ethernet Switch. The post Video: GPUltima System Delivers a Petaflop in a Rack appeared first on insideHPC.

|

|

by staff on (#1A3R5)

In this special guest feature, Ken Strandberg offers this live report from Day 3 of the Lustre User Group meeting in Portland. "Rick Wagner from San Diego Supercomputing Center presented progress on his team’s replication tool that allows copying large blocks of storage from object storage to their disaster recovery durable storage system. Because rsync is not a tool for moving massive amounts of data, SDSC created recursive worker services running in parallel to have each worker handle a directory or group of files. The tool uses available Lustre clients, a RabbitMQ server, Celery scripts, and bash scripts." The post Live Report from LUG 2016 Day 3 appeared first on insideHPC.
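
The one-worker-per-directory idea described above can be illustrated with a minimal sketch. This is not SDSC's tool: it swaps the RabbitMQ and Celery task queue for a thread pool from the Python standard library, runs on a single node against ordinary directories, and the function names `copy_directory_files` and `replicate_tree` are hypothetical.

```python
import os
import shutil
from concurrent.futures import ThreadPoolExecutor


def copy_directory_files(src_dir, src_root, dst_root):
    """Copy only the regular files directly inside src_dir (one unit of work)."""
    relative = os.path.relpath(src_dir, src_root)
    dst_dir = os.path.normpath(os.path.join(dst_root, relative))
    os.makedirs(dst_dir, exist_ok=True)
    copied = 0
    for entry in os.scandir(src_dir):
        if entry.is_file(follow_symlinks=False):
            shutil.copy2(entry.path, os.path.join(dst_dir, entry.name))
            copied += 1
    return copied


def replicate_tree(src_root, dst_root, workers=4):
    """Hand each directory in the tree to a worker and return the file count."""
    directories = [dirpath for dirpath, _, _ in os.walk(src_root)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        counts = pool.map(
            lambda d: copy_directory_files(d, src_root, dst_root), directories
        )
        return sum(counts)
```

Splitting the work by directory lets many copies proceed at once, in contrast to a single rsync process walking the whole tree serially; a production tool would also need retries, verification, and distribution across multiple client nodes.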

|

|

by Rich Brueckner on (#1A0WB)

"A design for virtual Ethernet networks over IB is described. The virtual Ethernet networks are implemented as overlays on the IB network. They are managed in a flexible manner using software. Virtual networks can be created, removed, and assigned to servers dynamically using this software. A virtual network can exist entirely on the IB fabric, or it can have an uplink connecting it to physical Ethernet using a gateway. The virtual networks are represented on the servers by virtual network interfaces which can be used with para-virtualized I/O, SR-IOV, and non-virtualized I/O. This technology has many uses: communication between applications which are not IB-aware, communication between IB-connected servers and Ethernet-connected servers, and multi-tenancy for cloud environments. It can be used in conjunction with OpenStack, such as for tenant networks." The post Video: Software-Defined Networking on InfiniBand Fabrics appeared first on insideHPC.

|

|

by staff on (#1A0VD)

This week, Molex announced the acquisition of Interconnect Systems, Inc. (ISI) which specializes in the design and manufacture of high density silicon packaging with advanced interconnect technologies. "We are thrilled to join forces with Molex. By combining respective strengths and leveraging their global manufacturing footprint, we can more efficiently and effectively provide customers with advanced technology platforms and top-notch support services, while scaling up to higher volume production," said Bill Miller, president, ISI. The post Molex Acquires Interconnect Systems, Inc. appeared first on insideHPC.

|

|

by Rich Brueckner on (#19Y05)

NVMe over Fabrics is an enhancement / expansion of the NVMe specification to support remote access to NVMe storage resources via RDMA. This presentation will review the major components of the NVMe over Fabrics specification, describe the host and target stack, discuss the plan for Linux open source drivers, and preview some performance characteristics. The discussion will also touch on possible deployment models. The post Video: NVMe Over Fabrics appeared first on insideHPC.

|

by Rich Brueckner on (#19Y07)

Argonne National Laboratory is seeking a Postdoctoral Appointee on FPGAs for Supercomputing in our Job of the Week. "This is an exciting opportunity for you to contribute to a new way of thinking in high-performance computing (HPC) by marrying state-of-the-art reconfigurable hardware with modern performance-portable programming models. This research will combine advances in high-level synthesis for field-programmable gate arrays (FPGAs) with the emerging OpenMP 4 programming model, thus enabling existing HPC codes to take advantage of the advanced floating-point support available in modern FPGA designs." The post Job of the Week: Postdoctoral Appointee on FPGAs for Supercomputing appeared first on insideHPC.

|

by Rich Brueckner on (#19V0A)

Over at the SC16 Blog, Dan Stanzione from TACC writes that the conference is starting a multi-year emphasis designed to advance the state of the practice in the HPC community by providing a track for the professionals driving innovation and development in designing, building, and operating the world’s largest supercomputers, along with all of the system and application software that make them run effectively. The post SC16 Adds State-of-the-Practice Track appeared first on insideHPC.

|

|

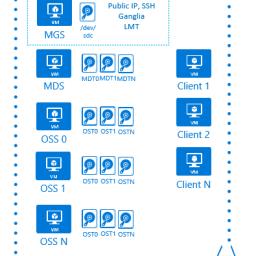

by Rich Brueckner on (#19TV1)

The Intel Cloud Edition for Lustre* Software is now available on Microsoft’s Azure platform. Intel Cloud Edition for Lustre Software on Azure is a scalable, parallel file system designed as the working file system for HPC or other IO intensive workloads. Built for use with the virtualized compute instances available from Microsoft Azure scalable cloud infrastructure, it is designed for dynamic, pay-as-you-go applications. The post Now on Azure: Intel Cloud Edition for Lustre* Software appeared first on insideHPC.

|

|

by Rich Brueckner on (#19TPP)

Researchers at Purdue University are using HPC to help fight the Zika virus. "Purdue’s award-winning Community Cluster Program played a significant role in enabling the research team to create the first detailed, 3-D structural map of the Zika virus, a key step toward developing treatments. The technique the team used to map the virus structure combined cryo-electron microscopy and high-performance computing. With the microscope, the Purdue researchers capture images of many Zika virus particles, or individual instances of the virus." The post Supercomputing the Zika Virus at Purdue appeared first on insideHPC.

|

|

by Rich Brueckner on (#19TN0)

"Research computational workflows consist of several pieces of third party software and, because of their experimental nature, frequent changes and updates are commonly necessary thus raising serious deployment and reproducibility issues. Docker containers are emerging as a possible solution for many of these problems, as they allow the packaging of pipelines in an isolated and self-contained manner. This presentation will introduce our experience deploying genomic pipelines with Docker containers at the Center for Genomic Regulation (CRG). I will discuss how we implemented it, the main issues we faced, the pros and cons of using Docker in an HPC environment including a benchmark of the impact of containers technology on the performance of the executed applications." The post Manage Reproducibility of Computational Workflows with Docker Containers and Nextflow appeared first on insideHPC.

|

|

by staff on (#19QS5)

In this special guest feature, Ken Strandberg offers this live report from Day 2 of the Lustre User Group meeting in Portland. "Scott Yockel from Harvard University shared how they are deploying Lustre across their three massive data centers up to 90 miles apart with 25 PB of storage, about half of which is Lustre. They’re using Docker containers and employing a backup strategy across the miles that covers every NFS system, parses the entire MDT, and includes 10k directories of small files." The post Live Report from LUG 2016 Day 2 appeared first on insideHPC.

|

|

by Rich Brueckner on (#19QG8)

Today TYAN showcased the GT75-BP012, a new POWER8-based 1U server platform at the OpenPOWER Summit 2016 in San Jose. The 1U TYAN GT75-BP012 platform is a POWER8-based server solution that reveals the spirit of the OpenPOWER Foundation – resources and innovation are not limited to the community but are open to the world. "By building the ppc64 architecture in the 1U single-socket system, the TYAN GT75-BP012 provides a huge memory footprint as well as outstanding performance for HPC and server virtualization applications," said Albert Mu, Vice President of MITAC Computing Technology Corporation's TYAN Business Unit. The post TYAN Rolls Out POWER8-Based 1U Server at OpenPOWER Summit appeared first on insideHPC.

|

|

by Rich Brueckner on (#19PPD)

"The first release of OpenFabrics Interfaces (OFI) software, libfabric, occurred in January of 2015. Since then, the number of fabrics and applications supported by OFI has increased, with considerable industry momentum building behind it. This talk discusses the current state of OFI, then speaks to the application requirements driving it forward. It will detail the fabrics supported by libfabric, identify applications which have ported to it, and outline future enhancements to the libfabric interfaces and architecture." The post Video: Past, Present, and Future of OpenFabrics Interfaces appeared first on insideHPC.

|