|

by Rich Brueckner on (#X4PZ)

Today Bright Computing announced that the latest version of its Bright Cluster Manager software, version 7.1, is now integrated with Dell’s 13th generation PowerEdge server portfolio. The integration enables systems administrators to easily deploy and configure Dell infrastructure using Bright Cluster Manager. The post Bright Cluster Manager Integrates with Dell PowerEdge Servers for HPC Environments appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-04 19:15 |

|

by Rich Brueckner on (#X20T)

In this video from the Intel HPC Developer Conference at SC15, Jim Jeffers from Intel presents an SDVis Overview. After that, Bruce Cherniak from Intel presents: OpenSWR: Fast SW Rendering within MESA. "This session has two talks for the price of one: (1) Software Defined Visualization: Modernizing Vis. A ground swell is underway to modernize HPC codes to take full advantage of the growing parallelism in today’s and tomorrow’s CPUs. Visualization workflows are no exception and this talk will discuss the recent Software Defined Visualization efforts by Intel and Vis community partners to improve flexibility, performance and workflows for visual data analysis and rendering to maximize scientific understanding. (2) OpenGL rasterized rendering is a so-called 'embarrassingly' parallel workload. As such, multicore and manycore CPUs can provide strong, flexible and large memory footprint solutions, especially for large data rendering. OpenSWR is a MESA3D based parallel OpenGL software renderer from Intel that enables strong interactive performance for HPC visualization applications on workstations through supercomputing clusters without the I/O and memory limitations of GPUs. We will discuss the current feature support, performance and implementation of this open source OpenGL solution." The post Video: SDVis Overview & OpenSWR – A Scalable High Performance Software Rasterizer for SCIVIS appeared first on insideHPC.

|

|

by staff on (#X1ZK)

A partnership of seven leading bioinformatics research and academic institutions called eMedLab is using a new private cloud, HPC environment and big data system to support the efforts of hundreds of researchers studying cancers, cardiovascular and rare diseases. Their research focuses on understanding the causes of these diseases and how a person’s genetics may influence their predisposition to the disease and potential treatment responses. The post Virtual HPC Clusters Power Cancer Research at eMedLab appeared first on insideHPC.

|

|

by Rich Brueckner on (#X1TV)

Today the Gauss Centre for Supercomputing in Germany announced awards from the 14th Call for Large-Scale Projects. GCS says it achieved new all-time highs in various categories, with 1358 million core hours of compute time awarded. The post GCS Awards 1358 Million Computing Core Hours to Research Projects appeared first on insideHPC.

|

|

by Rich Brueckner on (#X1QT)

"At SC15, DDN announced the new DDN SFA14K and SFA14KE high performance hybrid storage platforms. As the new flagship product in DDN’s most established product line, the DDN SFA14K provides customers with a simple route to take maximum advantage of a suite of new technologies including the latest processor technologies, NVMe SSD, PCIe 3, EDR InfiniBand and Omni-Path.The post DDN Steps Up with All-New HPC Storage Systems at SC15 appeared first on insideHPC.

|

|

by Rich Brueckner on (#X12D)

In this podcast, the Radio Free HPC team looks at why it pays to upgrade your home networking gear. The post Radio Free HPC Looks at Why it Pays to Upgrade Your Home Network appeared first on insideHPC.

|

|

by Rich Brueckner on (#WYCP)

In this video from SC15, Patrick Wolfe from the Alan Turing Institute and Karl Solchenbach from Intel describe a strategic partnership to deliver a research program focussed on HPC and data analytics. Created to promote the development and use of advanced mathematics, computer science, algorithms and big data for human benefit, the Alan Turing Institute is a joint venture between the universities of Warwick, Cambridge, Edinburgh and Oxford, UCL, and EPSRC. The post Video: Intel Forms Strategic Partnership with The Alan Turing Institute appeared first on insideHPC.

|

|

by Rich Brueckner on (#WYAH)

As the reach of high performance computing continues to expand, so does the worldwide HPC community. In such a fast-growing ecosystem, how do you find the right HPC resources to match your needs? Enter DiscoverHPC.com, a new directory that takes on the daunting task of trying to put all-things-HPC in one place. To learn more, we caught up with the site curator, Ron Denny. The post Interview: New DiscoverHPC Directory Puts All-Things-HPC in One Place appeared first on insideHPC.

|

|

by Rich Brueckner on (#WY39)

“CTS-1 shows how the Open Compute and Open Rack design elements can be applied to high-performance computing and deliver similar benefits as its original development for Internet companies,” said Philip Pokorny, Chief Technology Officer, Penguin Computing. “We continue to improve Tundra for both the public and private sectors with exciting new compute and storage models coming in the near future.” The post Penguin Computing Showcases OCP Platforms for HPC at SC15 appeared first on insideHPC.

|

|

by MichaelS on (#WXZT)

Because of the complexity involved, the length of the simulation period, and the amounts of data generated, weather prediction and climate modeling on a global basis require some of the most powerful computers in the world. The models incorporate topography, winds, temperatures, radiation, gas emission, cloud forming, land and sea ice, vegetation, and more. However, although weather prediction and climate modeling make use of common numerical methods, the items they compute differ. The post HPC Helps Drive Climate Change Modeling appeared first on insideHPC.

|

|

by Rich Brueckner on (#WXZW)

The University of Toronto is the official winner of Nvidia’s Compute the Cure initiative for 2015. Compute the Cure is a strategic philanthropic initiative of the Nvidia Foundation that aims to advance the fight against cancer. Through grants and employee fundraising efforts, Nvidia has donated more than $2,000,000 to cancer causes since 2011. Researchers from the […] The post University of Toronto Wins Research Grant to Compute the Cure appeared first on insideHPC.

|

|

by Rich Brueckner on (#WXW6)

"What we're previewing here today is a capability to have an overarching software, resource scheduler and workflow manager that takes all of these disparate sources and unifies them into a single view, making hundreds or thousands of computers look like one, and allowing you to run multiple instances of Spark. We have a very strong Spark multitenancy capability, so you can run multiple instances of Spark simultaneously, and you can run different versions of Spark, so you don't obligate your organization to upgrade in lockstep."The post IBM Ramps Up Apache Spark at SC15 appeared first on insideHPC.

|

|

by Rich Brueckner on (#WV2F)

In this video from the Intel HPC Developer Conference at SC15, Prof. Dieter Kranzlmüller from LRZ presents: Scientific Insights and Discoveries through Scalable High Performance Computing at LRZ. "Science and research today relies heavily on IT-services for discoveries and breakthroughs. The Leibniz Supercomputing Centre (LRZ) is a leading provider of scalable high performance computing and other services for researchers in Munich, Bavaria, Germany, Europe and beyond. This talk describes the LRZ and its services for the scientific community, providing an overview of applications and the respective technologies and services provided by LRZ. At the core of its services is SuperMUC, a highly scalable supercomputer using hot water cooling, which is one of the world’s most energy-efficient systems." The post Video: Scientific Insights and Discoveries through Scalable HPC at LRZ appeared first on insideHPC.

|

|

by Rich Brueckner on (#WV16)

While there has been a lot of disagreement about the slowing of Moore's Law as of late, it is clear that the industry is looking at new ways to speed up HPC by focusing on the data side of the equation. With the advent of burst buffers, co-design architectures, and new memory hierarchies, the one connecting theme we're seeing is that Moving Data is a Sin. In terms of storage, which technologies will take hold in the coming year? DDN offers us these 2016 Industry Trends & Predictions. The post 2016 Industry Trends and Predictions from DDN appeared first on insideHPC.

|

|

by Rich Brueckner on (#WRKH)

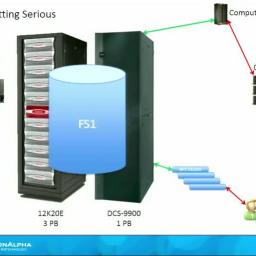

In this video from the DDN User Group at SC15, Kevin Behn from the HudsonAlpha Institute for Biotechnology presents: End to End Infrastructure Design for Large Scale Genomics. "HudsonAlpha has generated major discoveries that impact disease diagnosis and treatment, created intellectual property, fostered biotechnology companies and expanded the number of biosciences-literate people, many of whom will take their place among the future life sciences workforce. Additionally, HudsonAlpha has created one of the world’s first end-to-end genomic medicine programs to diagnose rare disease. Genomic research, educational outreach, clinical genomics and economic development: each of these mission areas advances the quality of life." The post Video: End to End Infrastructure Design for Large Scale Genomics appeared first on insideHPC.

|

|

by Rich Brueckner on (#WRHN)

"Mellanox Technologies is looking for experienced computational researchers to work on developing and optimizing the next generation of scalable HPC applications. A background in developing HPC application programs in areas such as Computational Chemistry, Physics, Meteorology, Climate Simulation, Engineering, or other closely related fields, or in methodologies for automatic code transformations is required. Experience using co-processor technologies is highly desirable."The post Job of the Week: HPC Application Engineer at Mellanox in Austin appeared first on insideHPC.

|

|

by staff on (#WQWQ)

"At SC15, Numascale announced the availability of NumaConnect-2, a scalable cache coherent memory technology that connects servers in a single high performance shared memory image. The NumaConnect-2 advances the successful Numascale technology with a new parallel microarchitecture that results in a significantly higher interconnect bandwidth, outperforming its predecessor by a factor of up to 5x."The post Numascale Expands Performance and Features with NumaConnect-2 appeared first on insideHPC.

|

|

by Rich Brueckner on (#WNXS)

"EnSight is a software program for visualizing, analyzing, and communicating data from computer simulations and/or experiments. The purpose of OpenSWR is to provide a high performance, highly scalable OpenGL compatible software rasterizer that allows use of unmodified visualization software. This allows working with datasets where GPU hardware isn't available or is limiting. OpenSWR is completely CPU-based, and runs on anything from laptops, to workstations, to compute nodes in HPC systems. OpenSWR internally builds on top of LLVM, and fully utilizes modern instruction sets like Intel®Streaming SIMD Extensions (SSE), and Intel® Advanced Vector Extensions (AVX and AVX2) to achieve high rendering performance."The post Video: Rendering in Ensight with OpenSWR appeared first on insideHPC.

|

|

by Rich Brueckner on (#WNGC)

"Modern systems will continue to grow in scale, and applications must evolve to fully exploit the performance of these systems. While today’s HPC developers are aware of code modernization, many are not yet taking full advantage of the environment and hardware capabilities available to them. Intel is committed to helping the HPC community develop modern code that can fully leverage today’s hardware and carry forward to the future. This requires a multi-year effort complete with all the necessary training, tools and support. The customer training we provide and the initiatives and programs we have launched and will continue to create all support that effort.â€The post Code Modernization: Two Perspectives, One Goal appeared first on insideHPC.

|

|

by Rich Brueckner on (#WNCM)

Today the UberCloud announced a collaboration with EGI based on a shared vision to embrace distributed computing, storage and data related technologies. As a federation of more than 350 resource centers across 50 countries, EGI has a mission to enable innovation and new solutions for many scientific domains to conduct world-class research and achieve faster results. The post UberCloud Partners with EGI to Bridge Research and Innovation appeared first on insideHPC.

|

|

by Rich Brueckner on (#WNAK)

Matt Starr from Spectra Logic describes the company's new ArcticBlue nearline disk storage system. "If you think about a tape subsystem, it's probably the best cost footprint, density footprint that you can get. ArcticBlue brings in kind of the benefits of tape from a cost perspective, but the speed and performance of a disk subsystem. Ten cents a gig versus seven cents a gig, three cents difference. You may want to deploy a little bit of ArcticBlue in your archive when you're putting it behind BlackPearl as opposed to just an all tape archive." The post Video: Spectra Logic Changes Storage Economics with ArcticBlue at SC15 appeared first on insideHPC.

|

|

by staff on (#WN76)

"With Azure, Microsoft makes it easy for Univa customers to run true performance applications on demand in a cloud environment," said Bill Bryce, Univa Vice President of Products. "With the power of Microsoft Azure, Univa Grid Engine makes work management automatic and efficient and accelerates the deployment of cloud services. In addition, Univa solutions can run in Azure and schedule work in a Linux cluster built with Azure virtual machines."The post Univa Supports Dynamic Clusters on Microsoft Azure appeared first on insideHPC.

|

|

by Rich Brueckner on (#WN56)

"Ngenea’s blazingly-fast on-premises storage stores frequently accessed active data on the industry’s leading high performance file system, IBM Spectrum Scale (GPFS). Less frequently accessed data, including backup, archival data and data targeted to be shared globally, is directed to cloud storage based on predefined policies such as age, time of last access, frequency of access, project, subject, study or data source. Ngenea can direct data to specific cloud storage regions around the world to facilitate remote low latency data access and empower global collaboration."The post Video: General Atomics Delivers Data-Aware Cloud Storage Gateway with ArcaStream appeared first on insideHPC.

|

|

by Rich Brueckner on (#WHW9)

"Modern Cosmology and Plasma Physics codes are capable of simulating trillions of particles on petascale systems. Each time step generated from such simulations is on the order of 10s of TBs. Summarizing and analyzing raw particle data is challenging, and scientists often focus on density structures for follow-up analysis. We develop a highly scalable version of the clustering algorithm DBSCAN and apply it to the largest particle simulation datasets. Our system, called BD-CATDS, is the first one to perform end-to-end clustering analysis of trillion particle simulation output. We demonstrate clustering analysis of a 1.4 Trillion particle dataset from a plasma physics simulation, and a 10,240^3 particle cosmology simulation utilizing ~100,000 cores in 30 minutes. BD-CATS has enabled scientists to ask novel questions about acceleration mechanisms in particle physics, and has demonstrated qualitatively superior results in cosmology. Clustering is an example of one scientific data analytics problem. This talk will conclude with a broad overview of other leading data analytics challenges across scientific domains, and joint efforts between NERSC and Intel Research to tackle some of these challenges."The post Video: High Performance Clustering for Trillion Particle Simulations appeared first on insideHPC.

|

|

by Rich Brueckner on (#WHRT)

"Avere Systems is now offering you ultimate cloud flexibility. We've now announced full support across three different cloud service providers: Google, Amazon, and Azure. And now we offer customers to be able to move their compute workloads across all these different cloud providers and store data across different cloud providers to achieve ultimate flexibility."The post Avere Enables Cloudbursting Across Different Providers at SC15 appeared first on insideHPC.

|

|

by MichaelS on (#WHNJ)

"Many optimizations can be performed on an application that is QCD based and can take advantage of the Intel Xeon Phi coprocessor as well. With pre-fetching, SMT threading and other optimizations as well as using the Intel Xeon Phi coprocessor, the performance gains were quite significant. An initial test, using single precision on the base Sandy Bridge systems, the test case was showing about 128 Gflops. However, using the Intel Xeon Phi coprocessor, the performance jumped to over 320 Gflops."The post QCD Optimization on Intel Xeon Phi appeared first on insideHPC.

|

|

by staff on (#WHNM)

At SC15, 1degreenorth announced plans to build an on-demand High Performance Computing Big Data Analytics (“HPC-BDA”) infrastructure at the National Supercomputing Center (NSCC) Singapore. The prototype will be used for experimentation and proof-of-concept projects by the big data and data science community in Singapore. The post 1degreenorth to Prototype HPDA Infrastructure in Singapore appeared first on insideHPC.

|

|

by Rich Brueckner on (#WHHT)

"HPC is no longer a tool only for the most sophisticated researchers. We’re taking what we’ve learned from working with some of the most advanced, sophisticated universities and research institutions and customizing that for delivery to mainstream enterprises,†said Jim Ganthier, vice president and general manager, Engineered Solutions and Cloud, Dell. “As the leading provider of systems in this space, Dell continues to break down barriers and democratize HPC. We’re seeing customers in even more industry verticals embrace its power.â€ovations, by partnering on ecosystems, by partnering in terms of labs, we're going to be able to take all of that wonderful opportunity and make it readily available to enterprises of all classes."The post Intel® Scalable System Framework and Dell’s Strategic Focus on HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#WDVZ)

"We've tailored our story for the HPC developers here, who are really worried about applications and performance of applications. What's really happened traditionally is that the single-threaded applications had not really been able to take advantage of the multi-core processor-based server platforms. So they've not really been getting the optimized platform and they've been leaving money on the table, so to speak. Because when you can optimize your applications for parallelism, you can take advantage of these multi-processor server platform. And you can get sometimes up to 10x performance boost, maybe sometime 100x, we've seen some financial services applications, or 3x for chemistry types of simulations as an example."The post Hewlett Packard Enterprise Showcases Benefits of Code Modernization appeared first on insideHPC.

|

|

by staff on (#W9TV)

Altair has announced that it will provide an open source licensing option of PBS Professional® (PBS Pro). PBS Pro will become available under two different licensing options: one for commercial installations and one Open Source Initiative compliant version. Altair will work closely with Intel and the Linux Foundation’s OpenHPC Collaborative Project to integrate the open source version of PBS Pro. The post Altair to Open Source PBS Professional HPC Technology in 2016 appeared first on insideHPC.

|

|

by Rich Brueckner on (#W6J8)

In this video from SC15, Patrick McGinn from CoolIT Systems describes the company's latest advancements in industry leading liquid cooling solutions for HPC data center systems. “The adoption from vendors and end users for liquid cooling is growing rapidly with the rising demands in rack density and efficiency requirements,” said Geoff Lyon, CEO/CTO of CoolIT Systems who also chairs The Green Grid’s Liquid Cooling Work Group. “CoolIT Systems is responding to these demands with our world leading enterprise level liquid cooling solutions.” The post CoolIT Systems Takes Liquid Cooling for HPC Data Centers to the Next Level appeared first on insideHPC.

|

|

by staff on (#WE87)

"The challenges facing informatics systems in the pharmaceutical industry’s R&D laboratories are changing. The number of large-scale computational problems in the life sciences is growing and they will need more high-performance solutions than their predecessors. But the response has to be nuanced: HPC is not a cure-all for the computational problems of pharma R&D. Some applications are better suited to the use of HPC than others and its deployment needs to be considered right at the beginning of experimental design."The post How Can HPC Best Help Pharma R&D? appeared first on insideHPC.

|

|

by Rich Brueckner on (#WE65)

In this video from SC15, Rich Brueckner from insideHPC moderates a panel discussion on the NSCI initiative. "As a coordinated research, development, and deployment strategy, NSCI will draw on the strengths of departments and agencies to move the Federal government into a position that sharpens, develops, and streamlines a wide range of new 21st century applications. It is designed to advance core technologies to solve difficult computational problems and foster increased use of the new capabilities in the public and private sectors." The post Video: Dell Panel Discussion on the NSCI initiative from SC15 appeared first on insideHPC.

|

|

by staff on (#WE2F)

Registration is now open for the 2016 OpenPOWER Summit, which will take place April 5-7 in San Jose, California in conjunction with the GPU Technology Conference. With a conference theme of "Revolutionizing the Datacenter," the event has issued its Call for Speakers and Exhibits. The post OpenPOWER Summit Returns to GTC in San Jose in April, 2016 appeared first on insideHPC.

|

|

by Rich Brueckner on (#WE0H)

In this video from SC15, Rich Brueckner from insideHPC talks to contestants in the Student Cluster Competition. Using hardware loaners from various vendors and Allinea performance tools, nine teams went head-to-head to build the fastest HPC cluster. The post Student Cluster Teams Learn the Tools of Performance Tuning at SC15 appeared first on insideHPC.

|

|

by Rich Brueckner on (#WDYX)

Today Green Revolution Cooling announced that their immersion cooling technology is used by the most efficient supercomputers in the world as ranked in the latest Green500 list. For the third consecutive year, the Green Revolution Cooling-powered Tsubame-KFC supercomputer at Tokyo Institute of Technology achieved top honors, ranking as the most efficient commercially available setup, and second overall. The post Green Revolution Cooling Helps Tsubame-KFC Supercomputer Top the Green500 appeared first on insideHPC.

|

|

by staff on (#WANZ)

Today the ISC 2016 conference issued their Call for Proposals for their new PhD Forum, which will allow PhD students to present their research results in a setting that sparks the exchange of scientific knowledge and lively discussions. The call is now open and interested students are encouraged to submit their proposals by February 15, 2016. The post PhD Forum Coming to ISC 2016 appeared first on insideHPC.

|

|

by staff on (#WAGZ)

Today the HPC Advisory Council announced that 12 university teams from around the world will compete in the HPCAC-ISC 2016 Student Cluster Competition at the ISC 2016 conference next June in Frankfurt. The post 12 International Student Teams to Face Off at HPCAC-ISC 2016 Student Cluster Competition appeared first on insideHPC.

|

|

by Rich Brueckner on (#WABC)

"What we're showcasing this year is - what we're jokingly calling - face-melting performance. What we're trying to do is make extreme performance available at a very aggressive price point, and at a very aggressive space point, for end users. So, what we've been doing and what we've been working on for the past couple of months has been, basically, building an NVMe-type unit. This NVMe unit connects flash devices through a PCIe interface to the processor complex."The post “Face-Melting Performance†with the Forte Hyperconverged NVMe Appliance from Scalable Informatics appeared first on insideHPC.

|

|

by Rich Brueckner on (#WA86)

Today Seagate and Newisys announced a new flash storage architecture capable of 1 Terabyte/sec performance. Designed for HPC applications, the "industry’s fastest flash storage design" comprises 21 Newisys NSS-2601 with dual NSS-HWxEA Storage Server Modules deployed with Seagate’s newest SAS 1200.2 SSD drives. These devices can be combined in a single 42U rack to achieve block I/O performance of 1TB/s with 5PB of storage. Each Newisys 2U server with 60 Seagate SSDs is capable of achieving bandwidth of 49GB/s. The post Seagate and Newisys Demonstrate 1 TB/s Flash Architecture appeared first on insideHPC.
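
As a rough sanity check on the figures quoted above, the rack-level bandwidth can be reproduced from the per-server number. The sketch below assumes perfectly linear scaling across servers and decimal units (1 TB = 1000 GB); neither assumption is stated in the announcement, and the variable names are illustrative only.

```python
# Figures quoted in the announcement above.
servers_per_rack = 21          # Newisys NSS-2601 units in the design
rack_units_per_server = 2      # each unit is described as a 2U server
bandwidth_per_server_gbs = 49  # GB/s per server with 60 SSDs

# Assumption: bandwidth adds up linearly with no interconnect overhead.
rack_units = servers_per_rack * rack_units_per_server
aggregate_gbs = servers_per_rack * bandwidth_per_server_gbs

# Assumption: decimal units, 1 TB = 1000 GB.
aggregate_tbs = aggregate_gbs / 1000

print(f"{rack_units}U rack, {aggregate_gbs} GB/s = {aggregate_tbs:.3f} TB/s")
```

Under those assumptions, 21 servers at 49GB/s each give 1029GB/s, or roughly 1TB/s, and 21 servers at 2U each fill the 42U rack.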

|

|

by Rich Brueckner on (#WA66)

In this video from SC15, Bill Mannel from HPE, Charlie Wuischpard from Intel, and Nick Nystrom from the Pittsburgh Supercomputing Center discuss their collaboration for High Performance Computing. Early next year, Hewlett Packard Enterprise will deploy the Bridges supercomputer based on Intel technology for breakthrough data centric computing at PSC. "Welcome to Bridges, a new concept in HPC - a system designed to support familiar, convenient software and environments for both traditional and non-traditional HPC users. It is a richly connected set of interacting systems offering a flexible mix of gateways (web portals), Hadoop and Spark ecosystems, batch processing and interactivity." The post Hewlett Packard Enterprise, Intel and PSC: Driving Innovation in HPC appeared first on insideHPC.

|

|

by MichaelS on (#W9G2)

Genome sequencing is a technology that can take advantage of the growing capability of today’s modern HPC systems. Dell is leading the charge in the area of personalized medicine by providing highly tuned systems to perform genomic sequencing and data management. The whitepaper, The InsideHPC Guide to Genomics, is an overview of how Dell is providing state-of-the-art solutions to the life science industry. The post Genomics and HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#W7HA)

"We're now providing LSF in the Cloud as a service to our customers because their workloads are getting larger over time, they're converging-- HPC is not converging with Analytics and even tough they provision for their average load, they can never provision for the spikes or for new projects. So we're helping our clients out by providing the services in the Cloud, where they can get LSF or Platform Symphony, or Spectrum Scale."The post Video: IBM Platform LSF in the Cloud at SC15 appeared first on insideHPC.

|

|

by Rich Brueckner on (#W73N)

Dan Stanzione from TACC presented this talk at the DDN User Group at SC15. "TACC is an advanced computing research center that provides comprehensive advanced computing resources and support services to researchers in Texas and across the USA. The mission of TACC is to enable discoveries that advance science and society through the application of advanced computing technologies. Specializing in high performance computing, scientific visualization, data analysis & storage systems, software, research & development and portal interfaces, TACC deploys and operates advanced computational infrastructure to enable computational research activities of faculty, staff, and students of UT Austin." The post Towards the Convergence of HPC and Big Data — Data-Centric Architecture at TACC appeared first on insideHPC.

|

|

by Rich Brueckner on (#W6WE)

The HPC Advisory Council Stanford Conference 2016 has issued its Call for Participation. The event will take place Feb 24-25, 2016 on the Stanford University campus at the new Jen-Hsun Huang Engineering Center. "The HPC Advisory Council Stanford Conference 2016 will focus on High-Performance Computing usage models and benefits, the future of supercomputing, latest technology developments, best practices and advanced HPC topics. In addition, there will be a strong focus on new topics such as Machine Learning and Big Data. The conference is open to the public free of charge and will bring together system managers, researchers, developers, computational scientists and industry affiliates." The post Call for Participation: HPC Advisory Council Stanford Conference appeared first on insideHPC.

|

|

by Rich Brueckner on (#W6TV)

"We have enabled virtualization for HPC but it's important to bring the benefits of virtualization to end researchers in a way they can use it, right? So what we have done is we have created the solution plus VMware High-Performance Analytics, which allows researchers to author their own workloads, they can collaborate it, they can clone it, then they can share it with other researchers. And they can modify their workload - they can fine tune it."The post Video: VMware HPC Virtualization Enables Research as Service appeared first on insideHPC.

|

|

by Rich Brueckner on (#W6NF)

Today NEC Corporation announced that SX-ACE vector supercomputers delivered to the University of Kiel, Alfred Wegener Institute, and the High Performance Computing Center Stuttgart have begun operating and contributing to research. The post Three German Institutes Deploy NEC’s SX-ACE Vector Supercomputers appeared first on insideHPC.

|

|

by MichaelS on (#W6BR)

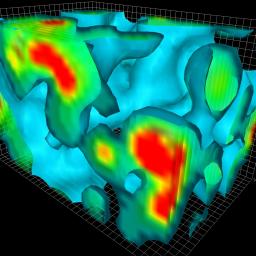

The computational requirements for weather forecasting are driven by the need for higher resolution models for more accurate and extended forecasts. In addition, more physics and chemistry processes are included in the models so we can observe the very fine features of weather behavior. These models operate on 3D grids that encompass the globe. The closer the points on the grid are to each other, the more accurate the results. The post HPC Helps Drive Weather Forecasting appeared first on insideHPC.

|

|

by staff on (#W3K7)

Last week at SC15, Rambus announced that it has partnered with Los Alamos National Laboratory (LANL) for evaluating elements of its Smart Data Acceleration (SDA) Research Program. The SDA platform has been deployed at LANL to improve the performance of in-memory databases, graph analytics and other Big Data applications. The post Rambus Advances Smart Data Acceleration Research Program with LANL appeared first on insideHPC.

|

|

by staff on (#W3J5)

Baidu's Chief Scientist Andrew Ng has started a Social Media campaign for inspiring people to study Machine Learning. "Regardless of where you learned Machine Learning, if it has had an impact on you or your work, please share your story on Facebook or Twitter in a short written or video post. I will invite the people who shared the 5 most inspirational stories to join me in a conversation on Google Hangout about the future of machine learning." The post Share Your Machine Learning Story to Inspire Others appeared first on insideHPC.

|