|

by Rich Brueckner on (#W17A)

In this video from SC15, Intel's Diane Bryant discusses how next-generation supercomputers are transforming HPC and presenting exciting opportunities to advance scientific research and discovery, with far-reaching impacts on society. A frequent speaker on the future of technology, Bryant draws on her experience running Intel’s Data Center Group, which includes the HPC business segment and products ranging from high-end coprocessors for supercomputers to big data analytics solutions to high-density systems for the cloud. The post Video: SC15 HPC Matters Plenary Session with Intel’s Diane Bryant appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence


| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-04 19:15 |

|

by Rich Brueckner on (#W0KE)

Intel in Oregon is seeking an HPC Software Intern in our Job of the Week. "If you are interested in being on the team that builds the world's fastest supercomputer, read on. Our team is designing how we integrate new HW and SW, validate extreme scale systems, and debug challenges that arise. The team consists of engineers who love to learn, love a good challenge, and aren't afraid of a changing environment. We need someone who can help us with creating and executing codes that will be used to validate and debug our system from first Si bring-up through at-scale deployment. The successful candidate will have experience in the Linux environment creating code: C or Python. If you have the right skills, you will help build systems utilized by the best minds on the planet to solve grand challenge science problems such as climate research, bio-medical research, genome analysis, renewable energy, and other areas that require the world's fastest supercomputers to tackle. Be part of the first to get to Exascale!" The post Job of the Week: HPC Software Intern at Intel appeared first on insideHPC.

|

|

by Rich Brueckner on (#VYG2)

Last week at SC15, Numascale announced the successful installation of a large shared memory Numascale/Supermicro/AMD system at a customer datacenter facility in North America. The system is the first part of a large cloud computing facility for analytics and simulation of sensor data combined with historical data. "The Numascale system, installed over the last two weeks, consists of 108 Supermicro 1U servers connected in a 3D torus with NumaConnect, using three cabinets with 36 servers apiece in a 6x6x3 topology. Each server has 48 cores in three AMD Opteron 6386 CPUs and 192 GBytes memory, providing a single system image and 20.7 TBytes to all 5184 cores. The system was designed to meet user demand for “very large memory” hardware solutions running a standard single image Linux OS on commodity x86 based servers." The post Numascale Teams with Supermicro & AMD for Large Shared Memory System appeared first on insideHPC.
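The core and memory totals above follow directly from the per-server figures. A quick arithmetic check, assuming 16 cores per Opteron 6386 (48 cores across three sockets, as stated) and decimal units (1 TB = 1,000 GB):

```python
# Sanity-check the Numascale system totals from the per-server figures.
cabinets = 3
servers_per_cabinet = 36
servers = cabinets * servers_per_cabinet      # 108 servers
assert servers == 6 * 6 * 3                   # matches the 6x6x3 torus

sockets_per_server = 3
cores_per_socket = 16                         # assumption: 48 cores / 3 CPUs
cores = servers * sockets_per_server * cores_per_socket

mem_per_server_gb = 192
total_mem_tb = servers * mem_per_server_gb / 1000  # decimal terabytes

print(f"{servers} servers, {cores} cores, {total_mem_tb:.1f} TB")
```

This prints `108 servers, 5184 cores, 20.7 TB`, matching the announced figures.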

|

|

by Rich Brueckner on (#VYCW)

In this podcast, Jorge Salazar from TACC interviews two winners of the 2015 ACM Gordon Bell Prize, Omar Ghattas and Johann Rudi of the Institute for Computational Engineering and Sciences, UT Austin. As part of the discussion, Ghattas describes how parallelism and exascale computing will propel science forward. The post Podcast: Supercomputing the Deep Earth with the Gordon Bell Prize Winners appeared first on insideHPC.

|

|

by Rich Brueckner on (#VY9B)

In this video from SC15, Rich Brueckner from insideHPC moderates a panel discussion with Hewlett Packard Enterprise HPC customers. "Government labs, as well as public and private universities worldwide, are using HPE Compute solutions to conduct research across scientific disciplines, develop new drugs, discover renewable energy sources and bring supercomputing to nontraditional users and research communities." The post Video: Hewlett Packard Enterprise HPC Customer Panel at SC15 appeared first on insideHPC.

|

|

by Rich Brueckner on (#VY7W)

PRACEdays16 has extended the deadline for its Call for Participation to Dec. 13, 2015. Taking place May 10-12, 2016 in Prague, the conference will bring together experts from academia and industry who will present their advancements in HPC-supported science and engineering. The post PRACEdays16 Extends Deadline for Submissions to Dec. 13, 2015 appeared first on insideHPC.

|

|

by Rich Brueckner on (#VY6M)

In this video, Torsten Hoefler from ETH Zurich and John West from TACC preview the upcoming PASC16 and SC16 conferences. With a focus on Exascale computing and user applications, the events will set the stage for the next decade in High Performance Computing. The post Video: A Preview of the PASC16 and SC16 Conferences appeared first on insideHPC.

|

|

by Rich Brueckner on (#VV7K)

Today Russia's RSC Group announced that Team TUMuch Phun from the Technical University of Munich (TUM) won the Highest Linpack Award in the SC15 Student Cluster Competition. The enthusiastic students achieved 7.1 Teraflops on the Linpack benchmark using an RSC PetaStream cluster with computing nodes based on Intel Xeon Phi. The TUM team, the only European representative in the challenge, also took third place overall among the nine teams competing in the SC15 Student Cluster Competition (SCC). The post TUM Germany Wins Highest Linpack Award on RSC system at SC15 Student Cluster Competition appeared first on insideHPC.

|

|

by MichaelS on (#VV2E)

Basic optimization techniques, including an understanding of math functions and how to simplify them, can go a long way toward better performance. "When optimizing for a parallel SIMD system such as the Intel Xeon Phi coprocessor, it is also important to make sure that the results match the scalar system. Using vector data may cause parts of the computer program to be re-written, so that the compiler can generate vector code." The post Parallel Methods in Financial Services for Intel Xeon Phi appeared first on insideHPC.
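One common reason vectorized results drift from scalar ones is that a SIMD reduction keeps several partial sums (one per lane) and combines them at the end, which reorders floating-point additions. The Python sketch below only emulates that reordering; it is not Xeon Phi code, and the lane count and input values are illustrative assumptions:

```python
import math

def scalar_sum(xs):
    """Left-to-right accumulation, as a simple scalar loop would do."""
    total = 0.0
    for x in xs:
        total += x
    return total

def lane_sum(xs, lanes=8):
    """Emulate a SIMD-style reduction: one partial sum per lane, combined at the end."""
    partial = [0.0] * lanes
    for i, x in enumerate(xs):
        partial[i % lanes] += x
    total = 0.0
    for p in partial:
        total += p
    return total

data = [1e16, 1.0, -1e16, 1.0]
print(scalar_sum(data))         # 1.0: the first 1.0 is rounded away when added to 1e16
print(lane_sum(data, lanes=2))  # 2.0: each lane sums values of similar magnitude
print(math.fsum(data))          # 2.0: correctly rounded reference
```

Neither order is a bug; the two simply round differently. When a compiler is allowed to reassociate a reduction, validating vector output against scalar output with a tolerance (or against a reference such as `math.fsum`) is usually more practical than expecting bit-for-bit agreement.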

|

|

by Rich Brueckner on (#VQHT)

Software for data analysis, system management, and debugging other software was among the innovations on display at SC15 last week. In addition to the software, novel and improved hardware was also on display, together with an impressive array of European research and development initiatives leading up to Exascale computing. The post Speeding Up Applications at SC15 appeared first on insideHPC.

|

|

by Rich Brueckner on (#VQEJ)

In this video from SC15, Sam Mahalingam from Altair discusses the HyperWorks Unlimited Virtual Appliance and the new open source version of PBS Pro. “Our goal is for the open source community to actively participate in shaping the future of PBS Professional driving both innovation and agility. The community’s contributions combined with Altair’s continued research and development, and collaboration with Intel and our HPC technology partners will accelerate the advancement of PBS Pro to aggressively pursue exascale computing initiatives in broad classes and domains.” The post Video: PBS Pro Workload Manager Goes Open Source appeared first on insideHPC.

|

|

by staff on (#VQEM)

Last week at SC15, Fujifilm announced that its next-generation LTO Ultrium 7 data cartridge has been qualified by the LTO technology provider companies for commercial production and is available immediately. FUJIFILM LTO Ultrium 7 has a compressed storage capacity of 15.0TB and a transfer rate of 750MB/sec, assuming a 2.5:1 compression ratio. This capacity represents a 2.4X increase over the current LTO-6 generation. The post FujiFilm Qualifies Next-Gen LTO-7 Cartridge appeared first on insideHPC.
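The native (uncompressed) figures can be recovered by dividing the compressed ones by the stated 2.5:1 ratio. The 2.4X comparison in this sketch assumes LTO-6's standard native capacity of 2.5TB, a figure not given in the announcement:

```python
# Back out native LTO-7 figures from the compressed values quoted above.
compression_ratio = 2.5
lto7_compressed_tb = 15.0
lto7_compressed_mb_s = 750.0

lto7_native_tb = lto7_compressed_tb / compression_ratio      # 6.0 TB
lto7_native_mb_s = lto7_compressed_mb_s / compression_ratio  # 300.0 MB/s

# Assumption: LTO-6 native capacity of 2.5 TB (not stated in the source).
lto6_native_tb = 2.5
growth = lto7_native_tb / lto6_native_tb                     # 2.4x

print(f"native: {lto7_native_tb} TB at {lto7_native_mb_s} MB/s; "
      f"{growth:.1f}x LTO-6")
```

Because both generations are quoted at the same 2.5:1 ratio, the 2.4X ratio is the same whether native or compressed capacities are compared.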

|

|

by staff on (#VQCK)

NCSA is now accepting applications for the Blue Waters Graduate Program. This unique program lets graduate students from across the country immerse themselves in a year of focused high-performance computing and data-intensive research using the Blue Waters supercomputer to accelerate their research. The post Apply Now for Blue Waters Graduate Fellowships appeared first on insideHPC.

|

|

by Rich Brueckner on (#VQB1)

In this video, Jason Souloglou and Eric Van Hensbergen from ARM describe how Pathscale EKOPath compilers are enabling a new HPC ecosystem based on low-power processors. "As an enabling technology, EKOPath gives our customers the ability to compile for native ARMv8 CPU or accelerated architectures that return the fastest time to solution." The post Video: Pathscale Compilers Power ARM for HPC at SC15 appeared first on insideHPC.

|

|

by Rich Brueckner on (#VM5A)

Asetek showcased its full range of RackCDU hot water liquid cooling systems for HPC data centers at SC15 in Austin. On display were systems from early-adopting OEMs such as CIARA, Cray, Fujitsu, Format and Penguin. HPC installations from around the world incorporating Asetek RackCDU D2C (Direct-to-Chip) technology were also featured. In addition, liquid cooling solutions for both current and future high-wattage CPUs and GPUs from Intel, Nvidia and OpenPOWER were on display. The post Video: Asetek Showcases Growing Adoption of OEM Solutions at SC15 appeared first on insideHPC.

|

|

by Rich Brueckner on (#VM1Z)

"General Relativity is celebrating this year a hundred years since its first publication in 1915, when Einstein introduced his theory of General Relativity, which has revolutionized in many ways the way we view our universe. For instance, the idea of a static Euclidean space, which had been assumed for centuries and the concept that gravity was viewed as a force changed. They were replaced with a very dynamical concept of now having a curved space-time in which space and time are related together in an intertwined way described by these very complex, but very beautiful equations."The post Podcast: Supercomputing Black Hole Mergers appeared first on insideHPC.

|

|

by Rich Brueckner on (#VKY9)

Last week at SC15, NEC Corporation announced that the Flemish Supercomputer Center (VSC) has selected an LX-series supercomputer. With a peak performance of 623 Teraflops, the new system will be the fastest in Belgium, ranking among the 150 fastest supercomputers in the world. Financed by the Flemish minister for Science and Innovation in Belgium, the infrastructure will cost 5.5 million euros. The post NEC to Build Fastest Supercomputer in Belgium for VSC appeared first on insideHPC.

|

|

by staff on (#VKTB)

Does your research generate, analyze, and/or visualize data using advanced digital resources? In its recent Call for Participation, the CADENS project is looking for scientific data to visualize or existing data visualizations to weave into larger documentary narratives in a series of fulldome digital films and TV programs aimed at broad public audiences. Visualizations of your work could reach millions of people, amplifying its greater societal impacts! The post CADENS Project Seeking Data and Visualizations appeared first on insideHPC.

|

|

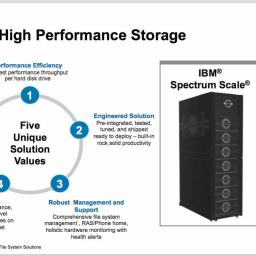

by Rich Brueckner on (#VKQ1)

"We've had a great time here in Austin talking about data centric computing-- the ability to use IBM Spectrum Scale and Platform LSF to do Cognitive Computing. Customers, partners, and the world have been talking about how we can really bring together file, object, and even business analytics workloads together in amazing ways. It's been fun."The post Video: Data Centric Computing for File and Object Storage from IBM appeared first on insideHPC.

|

|

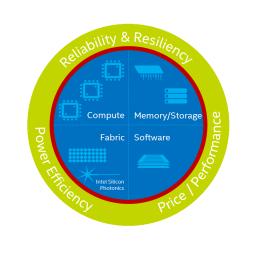

by staff on (#VKBG)

At SC15, Intel talked about several transformational high-performance computing technologies and an architecture, the Intel® Scalable System Framework (Intel® SSF). Intel describes Intel SSF as “an advanced architectural approach for simplifying the procurement, deployment, and management of HPC systems, while broadening the accessibility of HPC to more industries and workloads.” Intel SSF is designed to eliminate the traditional bottlenecks, the so-called power, memory, storage, and I/O walls, that system builders and operators have run into over the years. The post Setting a Path for the Next-Generation of High-Performance Computing Architecture appeared first on insideHPC.

|

|

by Rich Brueckner on (#VGHR)

SC15 has announced the winners of the Student Cluster Competition, which took place last week in Austin. Team Diablo, a team of undergraduate students from Tsinghua University in China, was named the overall winner. "The competition is a real-time, non-stop, 48-hour challenge in which teams of six undergraduates assemble a small cluster at SC15 and race to complete a real-world workload across a series of scientific applications, demonstrate knowledge of system architecture and application performance, and impress HPC industry judges." The post China’s Team Diablo Wins SC15 Student Cluster Competition appeared first on insideHPC.

|

|

by staff on (#VGEY)

In this special guest feature from Scientific Computing World, Robert Roe writes that software scalability and portability may be more important even than energy efficiency to the future of HPC. "As the HPC market searches for the optimal strategy to reach exascale, it is clear that the major roadblock to improving the performance of applications will be the scalability of software, rather than the hardware configuration – or even the energy costs associated with running the system." The post Who Will Write Next-generation Software? appeared first on insideHPC.

|

|

by Rich Brueckner on (#VGB4)

In this video from SC15, Larry Jones from Seagate provides an overview of the company's revamped HPC storage product line, including a new 10,000 RPM ClusterStor hard disk drive tailor-made for the HPC market. “ClusterStor integrates the latest in Big Data technologies to deliver class-leading ingest speeds, massively scalable capacities to more than 100PB and the ability to handle a variety of mixed workloads.” The post Slidecast: Seagate Beefs Up ClusterStor at SC15 appeared first on insideHPC.

|

|

by Rich Brueckner on (#VG2M)

The HPC Advisory Council Switzerland Conference 2016 has announced its Call for Participation. Hosted by the Swiss National Supercomputing Centre, the event will take place in Lugano, Switzerland, March 21-23, 2016. The post Call for Participation: HPC Advisory Council Switzerland Conference 2016 appeared first on insideHPC.

|

|

by Rich Brueckner on (#VFXW)

In this video from SC15, Dr. Eng Lim Goh from SGI describes how the company is embracing new HPC technology trends such as new memory hierarchies. With the convergence of HPC and Big Data as a growing trend, SGI envisions a "Zero Copy Architecture" that would bring together a traditional supercomputer with a Big Data analytics machine in a way that would not require users to move their data between systems. The post Video: SGI Looks to Zero Copy Architecture for HPC and Big Data appeared first on insideHPC.

|

|

by ralphwells on (#VCY1)

This week at SC15, Eurotech announced that their HiVe HPC system is now available with x86 and ARM-64 based processors. The HiVe supercomputer leverages a new, original high-performance computing system architecture that combines extreme density with best-in-class energy efficiency. The post Eurotech HiVe HPC System Adds ARM CPUs appeared first on insideHPC.

|

by ralphwells on (#VCJ6)

This week at SC15, E4 Computer Engineering from Italy announced its active participation in the OpenPOWER Foundation, an open technical community based on the POWER architecture, enabling collaborative development and opportunity for member differentiation and industry growth. Visit the OpenPOWER Foundation homepage for more information. E4 Computer Engineering has been developing innovative platforms for a number of years, specifically focused on solutions applied to HPC environments. E4 Computer Engineering’s participation in OpenPOWER is a natural next step in the company’s commitment to next generation technology. POWER architecture-based products enable customers to boost performance of their infrastructure while increasing efficiency and scalability. The post E4 Computer Engineering Joins OpenPOWER Foundation appeared first on insideHPC.

|

by ralphwells on (#VCFB)

This week, the University of Warsaw in Poland announced plans to install a Cray XC40 supercomputer at the Interdisciplinary Centre for Mathematical and Computational Modeling. The six-cabinet Cray XC40 system will be located in ICM’s OCEAN research data center, and will enable interdisciplinary teams of scientists to address the most computationally complex challenges in areas such as life sciences, physics, cosmology, chemistry, environmental science, engineering, and the humanities. ICM is Poland’s leading research center for computational and data driven sciences, and is one of the premier centers for large-scale high performance computing simulations and big data analytics in Central and Eastern Europe. The post University of Warsaw Selects Cray XC40 Supercomputer appeared first on insideHPC.

|

|

by staff on (#VAJS)

This week at SC15, Altair announced that it will provide an open source licensing option for its PBS Professional HPC workload manager. Scheduled for release to the open source community in mid-2016, PBS Pro will become available under two licensing options: one for commercial installations and an Open Source Initiative (OSI) compliant version. The decision includes working closely with Intel and the Linux Foundation’s OpenHPC Collaborative Project to integrate the open source version of PBS Pro. The post Altair Collaborates with Intel to Integrate PBS Pro with OpenHPC Software Stack appeared first on insideHPC.

|

|

by ralphwells on (#VAD8)

This week at SC15, One Stop Systems featured the first PCIe 3.0 expansion appliance to support up to sixteen Nallatech 510T accelerator cards. The preconfigured appliance is targeted at data centers running HPC applications, providing the user with a complete appliance that solves many integration issues, provides enhanced performance, and allows for scalable flexibility. The user simply attaches the High Density Compute Accelerator (HDCA) to up to four servers and has thousands of additional compute cores readily available. Each host connection is PCIe 3.0 x16, with speeds of up to 128Gb/s. The post One Stop Systems HDCA Supports 16 Nallatech 510T Accelerator Cards appeared first on insideHPC.
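The quoted 128Gb/s matches the raw signaling rate of a PCIe 3.0 x16 link. A rough calculation, assuming PCIe 3.0's 8 GT/s per lane and 128b/130b line encoding, and ignoring packet and protocol overhead:

```python
# Approximate per-direction bandwidth of one PCIe 3.0 x16 host connection.
lanes = 16
gt_per_s_per_lane = 8.0          # PCIe 3.0 transfer rate per lane
encoding_efficiency = 128 / 130  # 128b/130b line encoding

raw_gbit_s = lanes * gt_per_s_per_lane             # 128.0 Gb/s
payload_gbit_s = raw_gbit_s * encoding_efficiency  # ~126.0 Gb/s
payload_gbyte_s = payload_gbit_s / 8               # ~15.75 GB/s

print(f"raw {raw_gbit_s:.0f} Gb/s, after encoding ~{payload_gbit_s:.1f} Gb/s "
      f"(~{payload_gbyte_s:.2f} GB/s) per direction")
```

Delivered throughput will be somewhat lower once transaction-layer headers and flow control are counted, so 128Gb/s is best read as an upper bound per host link.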

|

|

by Rich Brueckner on (#VAC7)

Los Alamos National Lab is seeking an HPC Modeling and Optimization Postdoc in our Job of the Week. The post Job of the Week: HPC Modeling and Optimization Postdoc at LANL appeared first on insideHPC.

|

|

by ralphwells on (#VAC9)

This week at SC15, Penguin Computing announced availability of its OCP-compliant Tundra platform on the company’s Penguin Computing on Demand (POD) public HPC cloud service. “POD customers will realize an immediate benefit of more capacity, as Tundra allows us to scale POD faster and more cost effectively,” said Tom Coull, President and CEO, Penguin Computing. “The rapid, modular scaling enabled by Tundra will result in increased capacity and greater performance.” The post Tundra Servers come to Penguin on Demand appeared first on insideHPC.

|

|

by Rich Brueckner on (#V7CT)

The OpenACC Standards Group released the 2.5 version of the OpenACC API specification. The post OpenACC 2.5 Includes Support for ARM and x86 Processors appeared first on insideHPC.

|

|

by Rich Brueckner on (#V7B7)

Scientists at the University of Texas at Austin, IBM Research, New York University and the California Institute of Technology have been awarded the 2015 Gordon Bell Prize for realistically simulating the forces inside the Earth that drive plate tectonics. The team’s work could herald a major step toward better understanding of earthquakes and volcanic activity. The post Earth Core Simulation Awarded Gordon Bell Prize appeared first on insideHPC.

|

|

by staff on (#V79Q)

"Recognition of status and career advancement in academia relies on publications. If your skills as a software developer lead you to focus on code to the detriment of your publication history, then your career will come to a grinding halt – despite the fact that your work may have significantly advanced research. This situation is simply not acceptable."The post Creating Careers for Research Software Engineers appeared first on insideHPC.

|

|

by Rich Brueckner on (#V782)

The High Performance Conjugate Gradients (HPCG) Benchmark list was announced this week at SC15. This is the fourth list produced for the emerging benchmark designed to complement the traditional High Performance LINPACK (HPL) benchmark used as the official metric for ranking the TOP500 systems. The first HPCG list, announced at ISC’14 a year and a half ago, contained only 15 entries, and the SC’14 list had 25. The current list contains more than 60 entries as HPCG continues to gain traction in the HPC community. The post New HPCG Benchmark List Goes Beyond LINPACK to Compare Supercomputers appeared first on insideHPC.
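HPCG takes its name from the conjugate gradient (CG) method for solving symmetric positive-definite linear systems, whose matrix-vector products and dot products stress memory bandwidth and communication rather than the dense floating-point throughput that LINPACK rewards. The sketch below is plain, unpreconditioned CG on a tiny dense matrix, meant only to show the iteration; HPCG itself runs a preconditioned variant on a large sparse 3D problem, and the matrix and tolerance here are illustrative assumptions:

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matvec(A, x):
    return [dot(row, x) for row in A]

def conjugate_gradient(A, b, tol=1e-10, max_iter=None):
    """Solve A x = b for symmetric positive-definite A, starting from x = 0."""
    n = len(b)
    max_iter = max_iter if max_iter is not None else 10 * n
    x = [0.0] * n
    r = list(b)            # residual b - A x, with x = 0
    p = list(r)            # first search direction is the residual
    rs_old = dot(r, r)
    for _ in range(max_iter):
        if rs_old ** 0.5 < tol:                     # residual norm small enough
            break
        Ap = matvec(A, p)
        alpha = rs_old / dot(p, Ap)                 # step length along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        beta = rs_new / rs_old                      # keeps directions A-conjugate
        p = [ri + beta * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x

A = [[4.0, 1.0], [1.0, 3.0]]
b = [1.0, 2.0]
print(conjugate_gradient(A, b))  # close to [1/11, 7/11]
```

In exact arithmetic CG converges in at most n iterations for an n-by-n system; the `max_iter` cap only guards against rounding keeping the residual just above the tolerance.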

|

|

by Rich Brueckner on (#V766)

In this video from SC15, Karl Schulz from Intel and Michael Miller from SUSE describe the all-new OpenHPC Community. “The use of open source software is central to HPC, but lack of a unified community across key stakeholders – academic institutions, workload management companies, software vendors, computing leaders – has caused duplication of effort and has increased the barrier to entry,” said Jim Zemlin, executive director, The Linux Foundation. “OpenHPC will provide a neutral forum to develop one open source framework that satisfies a diverse set of cluster environment use-cases.” The post Video: OpenHPC Community Launches at SC15 appeared first on insideHPC.

|

|

by staff on (#TVYK)

Of the varied approaches to liquid cooling, most remain technical curiosities and have yet to see real-world adoption to any significant degree. In contrast, both Asetek RackCDU D2C™ (Direct-to-Chip) and Internal Loop Liquid Cooling are seeing accelerating adoption by both OEMs and end users. The post Rise of Direct-to-Chip Liquid Cooling Highlighted at SC15 appeared first on insideHPC.

|

|

by ralphwells on (#V38X)

Today Penguin Computing announced that Emerson Network Power is supplying the uniquely-engineered DC power system for Penguin Computing’s Tundra Extreme Scale (ES) series. Emerson Network Power is the world’s leading provider of critical infrastructure for information and communications technology systems. The Tundra ES series delivers the advantages of Open Computing in a single, cost-optimized, high-performance architecture. Organizations can integrate a wide variety of compute, accelerator, storage, network, software and cooling architectures in a vanity-free rack and sled solution. The post Penguin Implements Emerson Network Power’s DC Power System appeared first on insideHPC.

|

|

by ralphwells on (#V34K)

Today Dell unveiled sweeping advancements to its industry-leading high performance computing portfolio. These advances include innovative new systems designed to simplify mainstream adoption of HPC and data analytics in research, manufacturing and genomics. Dell also unveiled expansions to its HPC Innovation Lab and showcased next-generation technologies including the Intel Omni-Path Fabric. HPC is becoming increasingly critical to how organizations of all sizes innovate and compete. Many organizations lack the in-house expertise to configure, build and deploy an HPC system without losing focus on their core science, engineering and analytic missions. As an example, according to the National Center for Manufacturing Sciences, 98 percent of all products will be designed digitally by 2020, yet 95 percent of the center’s 300,000 manufacturing companies have little or no HPC expertise. The post Dell Shows Strategic Focus on HPC at SC15 appeared first on insideHPC.

|

|

by ralphwells on (#V332)

Today Inspur Group announced the launch of the Inspur I9000 Blade System at SC15. Described by Inspur as the world's leading modular product in converged architecture, the I9000 can combine multiple computing solutions in a single chassis, including high-density servers, 4-socket and 8-socket mission-critical servers, software-defined storage and heterogeneous computing, to meet the hardware demands of a variety of applications. The post Inspur Unveils I9000 Blade System at SC15 appeared first on insideHPC.

|

|

by ralphwells on (#V334)

The Society of HPC Professionals will focus its 2015 Annual Technical Meeting on the applications of HPC technology to protect against cyberthreats. The one-day ‘HPC in Cybersecurity’ meeting will be held Wednesday, December 2, 2015, at a Schlumberger location in Houston. This event is free and open to all, but advance registration is required. The post Society of HPC Professionals to Focus on Cybersecurity at December Conference appeared first on insideHPC.

|

|

by MichaelS on (#V31X)

"With high frequency trading becoming so important, the overall system performance, starting with the acquisition of the data from various markets through to the buy or sell decision relies on low latency between various parts of the system. The feed handlers, which accept the data in various formats, can be multithreaded and take advantage of coprocessors such as the Intel Xeon Phi. The NIC on a system waits for the packets to arrive and can then the information to a specified core on the Intel Xeon Phi coprocessor system for processing."The post Financial Services and Low Latency with Intel Xeon Phi appeared first on insideHPC.

|

|

by Rich Brueckner on (#TZW6)

In this podcast, the Radio Free HPC team shares their thoughts from SC15 in Austin. Henry is impressed by the increasing presence of FPGAs on the show floor. Dan is really impressed with the Allinea Performance Reports profiling tool and how easy it is to use. And Rich sees SC15 as the crossroads that we’ll remember where Intel squared off with the official launch of their Omni-Path Interconnect and Scalable System Framework against the co-design alliance of OpenPOWER with IBM, Mellanox, and Nvidia. The post Radio Free HPC Reports Live from SC15 appeared first on insideHPC.

|

|

by Rich Brueckner on (#TZFA)

In this video from SC15, Barry Davis from Intel describes the ecosystem for the newly launched Intel Omni-Path fabric and Scalable System Framework. "Intel OPA delivers the performance for tomorrow’s high performance computing (HPC) workloads and the ability to scale to tens of thousands of nodes, and eventually more, at a price competitive with today’s fabrics. The Intel OPA 100 Series product line is an end-to-end solution of PCIe adapters, silicon, switches, cables, and management software. As the successor to Intel True Scale Fabric, this optimized HPC fabric is built upon a combination of enhanced IP and Intel technology." The post Video: Intel Debuts Omni-Path at SC15 appeared first on insideHPC.

|

|

by ralphwells on (#TZFC)

Today One Stop Systems showcased its High Density Compute Accelerator (HDCA) with AMD FirePro S9170 GPUs at SC15. The HDCA, with sixteen AMD FirePro S9170 GPUs, provides up to 84 Tflops of peak single-precision performance and 512GB of GPU memory. The HDCA is a complete appliance, cabled to one to four host servers through PCIe x16 Gen3 connections, and suited to adding compute power to any HPC application. The post One Stop Systems Showcases HDCA with 16 AMD FirePro S9170 GPUs at SC15 appeared first on insideHPC.
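Dividing the appliance totals by sixteen GPUs gives approximate per-card figures; since the 84 Tflops total is itself rounded, the per-GPU throughput is approximate too. The per-host split below is a hypothetical even allocation across the maximum of four hosts; the announcement does not say how GPUs are assigned to hosts:

```python
# Break the HDCA totals down per GPU and, hypothetically, per host server.
total_sp_tflops = 84.0   # peak single precision, from the announcement (rounded)
total_gpu_mem_gb = 512
gpus = 16
max_hosts = 4

per_gpu_tflops = total_sp_tflops / gpus     # 5.25
per_gpu_mem_gb = total_gpu_mem_gb // gpus   # 32

# Hypothetical: GPUs divided evenly across the maximum number of hosts.
gpus_per_host = gpus // max_hosts                  # 4
per_host_tflops = per_gpu_tflops * gpus_per_host   # 21.0
per_host_mem_gb = per_gpu_mem_gb * gpus_per_host   # 128

print(per_gpu_tflops, per_gpu_mem_gb, gpus_per_host, per_host_tflops, per_host_mem_gb)
```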

|

|

by ralphwells on (#TZDZ)

Today at SC15, Hewlett Packard Enterprise announced that more than a third of the HPC market is leveraging HPE Compute platforms. Among the organizations using HPE solutions are The Pittsburgh Supercomputing Center, Texas Advanced Computing Center at the University of Texas at Austin, the National Renewable Energy Laboratory, Ghent University and the Academic Computer Centre Cyfronet. They use HPE solutions based on the HPE Apollo server line to solve some of the world’s most challenging problems in science and engineering. HPE also announced the first solution deployments resulting from the HPC alliance with Intel. The post HPE Shows Momentum in HPC appeared first on insideHPC.

|

by ralphwells on (#TZC9)

Today Italy's E4 Computer Engineering announced public availability and deployment of Cavium ThunderX-based ARKA servers. Customers can place orders today for one-socket and two-socket ARKA platforms in multiple chassis configurations based on Cavium ThunderX ARMv8 workload-optimized CPUs. The post E4 Ships ARM HPC Servers with Cavium ThunderX appeared first on insideHPC.

|

by ralphwells on (#TZCA)

Today Pica8 announced that the California Institute of Technology, Northwestern University and SURFnet will be demonstrating Pica8-powered Inventec 100G Ethernet solutions at the SC15 conference in Austin, Texas. The post PICA8 Drives High-Speed SDN AT SC15 appeared first on insideHPC.

|

|

by ralphwells on (#TZCC)

Today Penguin Computing announced first customer shipments of its Tundra Extreme Scale (ES) server based on Cavium’s 48 core ARMv8 based ThunderX workload optimized processors. Tundra ES Valkre servers are now available for public order, and a standard 19-inch rack-mount version will ship in early 2016. The post Penguin Computing Looks to Cavium ThunderX for ARM HPC Servers appeared first on insideHPC.

|