|

by Daniel on (#52RVC)

This white paper by Advantech, "Rugged COTS Platform Takes On Fast-Changing Needs of Self-Driving Trucks," discusses how the fast-changing needs of autonomous vehicles are forcing compute platforms to evolve. Advantech and Crystal Group are teaming up to power that evolution based on AV trends, compute requirements, and a rugged COTS philosophy converging for breakthrough innovation in self-driving truck designs. The post Rugged COTS Platform Takes On Fast-Changing Needs of Self-Driving Trucks appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-02 10:00 |

|

by staff on (#52Q9W)

Data, the gold that today’s organizations spend significant resources to acquire, is ever-growing and underpins significant innovation in technologies for storing and accessing it. In this technology guide, insideHPC Special Research Report: Modernizing and Future-Proofing Your Storage Infrastructure, we’ll see how in this environment, different applications and workflows will always have data storage and access requirements, making it critical for planning to understand that a heterogeneous storage infrastructure is needed for a fully functioning organization. The post Special Report: Modernizing and Future-Proofing Your Storage Infrastructure – Part 3 appeared first on insideHPC.

|

|

by staff on (#52QHD)

In this Chip Chat podcast, Trish Damkroger from Intel describes HPC’s relevance in today’s society, particularly in relation to the COVID-19 pandemic. "The podcast covers how Intel’s HPC solutions offer computing resources to researchers and scientists to better understand the underlying characteristics of COVID-19, to help limit the spread of the virus, and to advance the development of COVID-19 vaccines. Today’s episode also discusses various HPC workloads that are being used and Trish’s observations on the HPC community’s positive responses and contributions." The post Podcast: Trish Damkroger on HPC’s Role in the fight against COVID-19 appeared first on insideHPC.

|

|

by Rich Brueckner on (#52QHF)

Today, the Khronos Group consortium released the OpenCL 3.0 Provisional Specifications. OpenCL 3.0 realigns the OpenCL roadmap to enable developer-requested functionality to be broadly deployed by hardware vendors, and it significantly increases deployment flexibility by empowering conformant OpenCL implementations to focus on functionality relevant to their target markets. “Many of our customers want a GPU programming language that runs on all devices, and with growing deployment in edge computing and mobile, this need is increasing,” said Vincent Hindriksen, founder and CEO of Stream HPC. “OpenCL is the only solution for accessing diverse silicon acceleration and many key software stacks use OpenCL/SPIR-V as a backend. We are very happy that OpenCL 3.0 will drive even wider industry adoption, as it reassures our customers that their past and future investments in OpenCL are justified.” The post Khronos Group Releases OpenCL 3.0 appeared first on insideHPC.

|

|

by Rich Brueckner on (#52QHH)

Today Hyperion Research released its updated analysis of the potential impact areas of the COVID-19 pandemic on the HPC market based on recent discussions with HPC system vendors and buyers. There is a large variation in the impacts of the virus for different segments of the HPC market. Hyperion Research is developing a detailed analysis of these impacts in order to create updated forecasts for HPC servers, AI, ML, DL, and public cloud spending for running HPC workloads. The post Hyperion Research Forecasts Widespread Covid-19 Disruption to HPC Market appeared first on insideHPC.

|

|

by staff on (#52QHJ)

NVIDIA today announced the completion of its acquisition of Mellanox for a transaction value of $7 billion. “With Mellanox, the new NVIDIA has end-to-end technologies from AI computing to networking, full-stack offerings from processors to software, and significant scale to advance next-generation data centers. Our combined expertise, supported by a rich ecosystem of partners, will meet the challenge of surging global demand for consumer internet services, and the application of AI and accelerated data science from cloud to edge to robotics.” The post NVIDIA Completes Acquisition of Mellanox appeared first on insideHPC.

|

|

by Rich Brueckner on (#52PCV)

Thomas Francis from the UberCloud and Mohan Potheri from VMware gave this talk at the Stanford HPC Conference. "In this solution we combine the capabilities of the VMware platform, with the solution provided by UberCloud which leverages the automation capabilities of Terraform with the unique packaging and distributing capabilities of docker-based HPC containers to dynamically deploy HPC applications on the vSphere platform." The post Agile HPC with VMware Cloud Foundation & UberCloud appeared first on insideHPC.

|

|

by staff on (#52P89)

Arizona State University has added to its international reputation for innovation, being named a Dell Technologies HPC and AI Center of Excellence. This recognition provides ASU with access to a worldwide program that facilitates the exchange of ideas among researchers, computer scientists, technologists and engineers for the advancement of high-performance computing and artificial intelligence solutions. The post Arizona State University becomes Dell Technologies HPC & AI Center of Excellence appeared first on insideHPC.

|

|

by staff on (#52NEE)

In this podcast, the Radio Free HPC team looks at Honeywell’s Trapped Ion Quantum computing initiative. "Shahin gives us a good overview of digital vs. analog and classical vs. quantum science. The Honeywell system is in the ‘quantum-gate’ quadrant of Shahin’s model, suspending ions in space through magnetics and then hitting them with lasers to produce entanglement. The Honeywell system is interesting because it is scoring well on the emerging Quantum Volume metric – showing very high fidelity for its qubit count. This system is the culmination of over 10 years of R&D and should be on the market later on this year." The post Podcast: Honeywell Traps, Zaps Ions for Science appeared first on insideHPC.

|

|

by Rich Brueckner on (#52N6E)

"We are seeking an HPC Tools Software Engineer for our Engineering Support team on the HPC contract. Lockheed Martin provides High Performance Computing services throughout the HPC lifecycle for computational requirements, architecture, acquisition, and operations to federal government customers. The program provides key supercomputing capabilities for solving important problems in science and technology. The program is involved in efforts to develop scientific software and libraries for HPC platforms. This work involves working on cutting edge HPC technologies to ensure that scientists and engineers will be able to fully utilize modern HPC systems." The post Job of the Week: Mid-Career Software Engineer at Lockheed Martin appeared first on insideHPC.

|

|

by staff on (#52J4T)

In this Sponsored Post, our friends over at Altair explain that to keep the world’s weather sites running smoothly, Altair and the Cylc open source community have packaged an industry-leading workload manager, Altair PBS Professional™, together with the Cylc workflow engine plus other helpful plug-ins to create the Altair Weather Solution. The post Altair and Cylc Take Weather Prediction by Storm appeared first on insideHPC.

|

|

by staff on (#52M37)

In this special guest feature, Ian Finder from Microsoft Azure writes that GPU VMs with Riskfuel's derivatives models based on artificial intelligence are much faster than previous methods. "Using conventional algorithms, real-time pricing, and risk management is out of reach. But as the influence of machine learning extends into production workloads, a compelling pattern is emerging across scenarios and industries reliant on traditional simulation. Once computed, the output of traditional simulation can be used to train DNN models that can then be evaluated in near real-time with the introduction of GPU acceleration." The post Azure Looks to Real-Time Derivatives Pricing using Riskfuel AI Technology on GPUs appeared first on insideHPC.

|

|

by staff on (#52M39)

NVIDIA will post its GTC 2020 keynote address by CEO Jensen Huang on YouTube on May 14, at 6 a.m. Pacific time. "Huang will highlight the company’s latest innovations in AI, high performance computing, data science, autonomous machines, healthcare and graphics during the recorded keynote. Participants will be able to view the keynote on demand at www.youtube.com/nvidia. Get amped for the latest platform breakthroughs in AI, deep learning, autonomous vehicles, robotics and professional graphics." The post GTC 2020 Keynote with CEO Jensen Huang Set for May 14 appeared first on insideHPC.

|

|

by Rich Brueckner on (#52M3B)

Researchers are using TACC supercomputers to power the Galaxy Bioinformatics Platform for COVID-19 analysis. More than 30,000 biomedical researchers run approximately 500,000 computing jobs a month on the platform. "Since 2013, TACC has powered the data analyses for a large percentage of Galaxy users, allowing researchers to quickly and seamlessly solve tough problems in cases where their personal computer or campus cluster is not sufficient." The post TACC Powers Galaxy Bioinformatics Platform for COVID-19 Analysis appeared first on insideHPC.

|

|

by staff on (#52M3D)

This presentation describes storage capabilities that HPE has added to its High Performance Compute portfolio through the Cray acquisition. It looks at how these capabilities address the needs of the exascale era, which demands unprecedented storage capacity accessed at unprecedented speeds. "Data growth far exceeds price/performance improvements in existing media storage technologies. As a result, sticking with your current storage infrastructure will leave you unable to keep up." The post Video: The Impact of the Cray Acquisition on HPC Storage appeared first on insideHPC.

|

|

by Rich Brueckner on (#52KGP)

Today Astera Labs announced that it has closed its Series B funding with renowned technology investors including Sutter Hill Ventures, Intel Capital, Avigdor Willenz, and Ron Jankov. This investment round, along with a strategic collaboration with TSMC for manufacturing, positions Astera Labs to rapidly scale production of its Aries Smart Retimer, the world’s first Smart Retimer Portfolio for PCI Express (PCIe) 4.0 and 5.0 solutions, and to accelerate development of additional product lines for Compute Express Link (CXL) solutions. “We are very proud of the significant industry traction for our Aries Smart Retimer Portfolio which has been extensively tested with all major CPU, GPU and PCIe 4.0 endpoints,” said Jitendra Mohan, CEO, Astera Labs. “We look forward to accelerating this momentum by partnering with such a distinguished group of technology and manufacturing heavyweights to develop purpose-built connectivity solutions for data-centric systems.” The post Astera Labs lands funding for purpose-built connectivity solutions appeared first on insideHPC.

|

|

by Rich Brueckner on (#52JDT)

Addison Snell from Intersect360 Research gave this talk at the Stanford HPC Conference. "As the global shutdown continues, inquiring minds want to know what the effects will be on the HPC and AI market. Intersect360 Research has released a new report guiding its clients that the market for HPC products and services will fall significantly short of its previous 2020 forecast, due to the global COVID-19 pandemic. The newly-revised forecast predicts the overall worldwide HPC market will be flat to down 12% in 2020." The post Video: HPC and AI Market Update from Intersect360 Research appeared first on insideHPC.

|

|

by staff on (#52JDV)

Today Altair updated Altair Inspire, the company’s fully-integrated generative design and simulation solution that accelerates the creation, optimization, and study of innovative, structurally efficient parts and assemblies. The latest release offers an even more powerful and accessible working environment, enabling a simulation-driven design approach that will cut time-to-market, reduce development costs, and optimize product performance. "Inspire enables both simulation analysts and designers to perform ‘what-if’ studies faster, easier, and earlier, encouraging collaboration and reducing product time to market." The post Altair Inspire update accelerates simulation-driven design appeared first on insideHPC.

|

|

by Rich Brueckner on (#52J4S)

In this special guest feature, Rod Burns from Codeplay writes that the company has made significant contributions to enabling an open standard, cross-architecture interface for developers as part of the oneAPI industry initiative. Software developers are looking more than ever at how they can accelerate their applications without having to write optimized processor specific code. […] The post Codeplay implements MKL-BLAS for NVIDIA GPUs using SYCL and DPC++ appeared first on insideHPC.

|

|

by staff on (#52HSX)

Today LeapMind announced Efficiera, an ultra-low power AI inference accelerator IP for companies that design ASIC and FPGA circuits, and other related products. Efficiera will enable customers to develop cost-effective, low power edge devices and accelerate go-to-market of custom devices featuring AI capabilities. "This product enables the inclusion of deep learning capabilities in various edge devices that are technologically limited by power consumption and cost, such as consumer appliances (household electrical goods), industrial machinery (construction equipment), surveillance cameras, and broadcasting equipment as well as miniature machinery and robots with limited heat dissipation capabilities." The post LeapMind Unveils Efficiera Ultra Low-Power AI Inference Accelerator IP appeared first on insideHPC.

|

|

by staff on (#52GP4)

Today the InfiniBand Trade Association (IBTA) announced the public availability of the InfiniBand Architecture Specification Volume 1 Release 1.4 and Volume 2 Release 1.4. With these updates in place, the InfiniBand ecosystem will continue to grow and address the next generation of HPC, AI, cloud and enterprise data center compute, and storage connectivity needs. The post IBTA Updates InfiniBand Architecture Specifications for Next-Gen HPC appeared first on insideHPC.

|

|

by staff on (#52GP6)

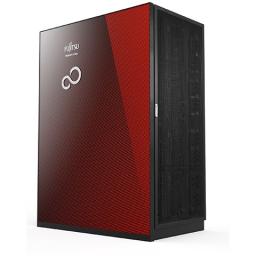

Today Fujitsu announced that it has received an order for a supercomputer system from the Japan Aerospace Exploration Agency (JAXA). Powered by the Arm-based A64FX processor, the system will contribute to improving the international competitiveness of aerospace research, as it will be widely used as the basis for JAXA's high performance computing. "Scheduled to start operation in October 2020, the new computing system for large-scale numerical simulation, composed of Fujitsu Supercomputer PRIMEHPC FX1000, is expected to have a theoretical computational performance of 19.4 petaflops, which is approximately 5.5 times that of the current system." The post Arm-Powered Fujitsu Supercomputer to fuel Aerospace Research at JAXA in Japan appeared first on insideHPC.

|

|

by staff on (#52GP8)

In this video from Forbes, Horst Simon from LBNL describes how supercomputers are being used for coronavirus research. "Computing is stepping up to the fight in other ways too. Some researchers are crowdsourcing computing power to try to better understand the dynamics of the protein and a dataset of 29,000 research papers has been made available to researchers leveraging artificial intelligence and other approaches to help tackle the virus. IBM has launched a global coding challenge that includes a focus on COVID-19 and Amazon has said it will invest $20 million to help speed up coronavirus testing." The post Video: Why Supercomputers Are A Vital Tool In The Fight Against COVID-19 appeared first on insideHPC.

|

|

by Rich Brueckner on (#52GPA)

In this video from the Stanford HPC Conference, Surya Sanjay and Tanay Bhangale from Troy High School in Michigan describe how their proposal on combatting prion-based disease resulted in their first experiences with HPC at the Stanford High Performance Computing Center. "Using a model of the infectious murine prion and many mutagenized variants of the benign murine prion as PrPX, created with Rosetta’s ab initio software, we plan to perform molecular dynamics (MD) simulations with GROMACS 2020 to determine the success of models based on the described criteria." The post High Schoolers from Michigan Step Up to High Performance Computing at Stanford appeared first on insideHPC.

|

|

by staff on (#52G22)

Today Zapata Computing announced an Early Access Program to Orquestra, its end-to-end platform for quantum-enabled workflows. Providing the most software- and hardware-interoperable, enterprise quantum toolset, Orquestra enables advanced technology, R&D and academic teams to accelerate quantum solutions for complex computational problems in optimization, machine learning and simulation across a variety of industries. "Orquestra is the only system for managing quantum workflows. By providing frictionless collaboration and rapid iteration, it helps Zapata and our entire community accelerate the discovery and development of near-term quantum algorithms and applications." The post Zapata Computing opens early access to Orquestra for quantum-enabled workflow appeared first on insideHPC.

|

|

by Rich Brueckner on (#52EY4)

Today SiPearl, the company that is designing the high-performance, low-power microprocessor for the European exascale supercomputer, signed a major technological licensing agreement with Arm, the global semiconductor IP provider. The agreement will enable SiPearl to benefit from the high-performance, secure, and scalable next-generation Arm Neoverse platform, codenamed “Zeus”, as well as leverage the robust software and hardware Arm ecosystem. The post European SiPearl Startup Licenses Arm for First-Generation Microprocessor appeared first on insideHPC.

|

|

by Rich Brueckner on (#52EY5)

Today BittWare introduced its new TeraBox 200DE edge server. The TeraBox series of certified server platforms features the latest FPGA accelerators, enabling customers to develop and deploy more quickly with reduced risk and cost. “Working with Dell OEM for many years, we learned about the PowerEdge XE2420 and jumped at the chance to qualify our latest generation of FPGA accelerators. The combination has resulted in the TeraBox 200DE, the first enterprise-class FPGA edge server purpose-built for harsh environments and complex, compute-intensive workloads.” The post BittWare Introduces New TeraBox FPGA Accelerated Edge Server appeared first on insideHPC.

|

|

by staff on (#52EY7)

Today Lawrence Livermore National Laboratory, Penguin Computing, and AMD announced an agreement to upgrade the Lab’s unclassified Corona HPC cluster with AMD Instinct accelerators, expected to nearly double the peak performance of the machine. The system will be used by the COVID-19 HPC Consortium, a nationwide public-private partnership that is providing free computing time and resources to scientists around the country engaged in the fight against the coronavirus. The post AMD and Penguin Computing Upgrade Corona Supercomputer to fight COVID-19 appeared first on insideHPC.

|

|

by staff on (#52EY9)

Today WekaIO introduced Weka AI, a transformative storage solution framework underpinned by the Weka File System (WekaFS) that enables accelerated edge-to-core-to-cloud data pipelines. Weka AI is a framework of customizable reference architectures (RAs) and software development kits (SDKs) with leading technology alliances like NVIDIA, Mellanox, and others in the Weka Innovation Network (WIN). "GPUDirect Storage eliminates IO bottlenecks and dramatically reduces latency, delivering full bandwidth to data-hungry applications," said Liran Zvibel, CEO and Co-Founder, WekaIO. The post New Weka AI framework to accelerate Edge to Core to Cloud Data Pipelines appeared first on insideHPC.

|

|

by Rich Brueckner on (#52EMF)

In this video, Philip Harris from MIT presents: Heterogeneous Computing at the Large Hadron Collider. "Only a small fraction of the 40 million collisions per second at the Large Hadron Collider are stored and analyzed due to the huge volumes of data and the compute power required to process it. This project proposes a redesign of the algorithms using modern machine learning techniques that can be incorporated into heterogeneous computing systems, allowing more data to be processed and thus larger physics output and potentially foundational discoveries in the field." The post Video: Heterogeneous Computing at the Large Hadron Collider appeared first on insideHPC.

|

|

by Rich Brueckner on (#52D5S)

Setting the stage for the Stanford HPC Conference this week, Gilad Shainer describes how the HPC AI Advisory Council fosters innovation in the high performance computing community. "The HPC-AI Advisory Council’s mission is to bridge the gap between high-performance computing and Artificial Intelligence use and its potential, bring the beneficial capabilities of HPC and AI to new users for better research, education, innovation and product manufacturing, bring users the expertise needed to operate HPC and AI systems, provide application designers with the tools needed to enable parallel computing, and to strengthen the qualification and integration of HPC and AI system products." The post Update on the HPC AI Advisory Council appeared first on insideHPC.

|

|

by staff on (#52D5T)

The DOE Exascale Computing Project (ECP) has named Dan Martin, a computational scientist and group lead for the Applied Numerical Algorithms Group in Berkeley Lab’s Computational Research Division, as team lead for its Earth and Space Science portfolio within the ECP Application Development focus area. Martin replaces Anshu Dubey, a computer scientist at Argonne National Laboratory. “I am confident that Dan’s technical expertise and deep experience with the codes that are relevant to the ECP portfolio make him a good fit to replace me at ECP. Not only is he highly respected for his work, but his management style will mesh very well with the leadership team,” said Dubey of Martin’s appointment. The post Dan Martin from Berkeley Lab takes on new role at Exascale Computing Project appeared first on insideHPC.

|

|

by Rich Brueckner on (#52CWV)

In this time lapse video, technicians build the new Attaway supercomputer from Penguin Computing at Sandia National Labs. In November 2019, the Attaway was #94 on the TOP500 Supercomputers list. "On February 28, 2019, Sandians lost a long-time colleague and friend, Steve Attaway. Steve spent over thirty years at Sandia, and during that time he helped bring big, seemingly impossible ideas into realization. The Attaway supercomputer is named after him." The post Time Lapse Video: Building the Attaway Supercomputer at Sandia appeared first on insideHPC.

|

|

by Daniel on (#52CWX)

Data, the gold that today’s organizations spend significant resources to acquire, is ever-growing and underpins significant innovation in technologies for storing and accessing it. In this technology guide, insideHPC Special Research Report: Modernizing and Future-Proofing Your Storage Infrastructure, we’ll see how in this environment, different applications and workflows will always have data storage and access requirements, making it critical for planning to understand that a heterogeneous storage infrastructure is needed for a fully functioning organization. The post Special Report: Modernizing and Future-Proofing Your Storage Infrastructure – Part 2 appeared first on insideHPC.

|

|

by staff on (#52C0Y)

AMD has announced a COVID-19 HPC fund to provide research institutions with computing resources to accelerate medical research on COVID-19 and other diseases. The fund will include an initial donation of $15 million of high-performance systems powered by AMD EPYC CPUs and AMD Radeon Instinct GPUs to key research institutions. "I’m also proud that AMD last week joined the COVID-19 High Performance Computing Consortium," writes AMD CEO Lisa Su. The post AMD Donates HPC Systems to fight COVID-19 appeared first on insideHPC.

|

|

by staff on (#52C10)

In this Let's Talk Exascale podcast, researchers from LBNL discuss how the WarpX project is developing an exascale application for plasma accelerator research. "The new breeds of virtual experiments that the WarpX team is developing are not possible with current technologies and will bring huge savings in research costs, according to the project’s summary information available on ECP’s website. The summary also states that more affordable research will lead to the design of a plasma-based collider, and even bigger savings by enabling the characterization of the accelerator before it is built." The post Podcast: WarpX exascale application to accelerate plasma accelerator research appeared first on insideHPC.

|

|

by staff on (#52AXD)

The most powerful supercomputer in the world for academic research has established its mission for the coming year. "The NSF has approved allocations of supercomputing time on Frontera to 49 science projects for 2020-2021. Time on the TACC supercomputer is awarded based on a project's need for very large scale computing to make science and engineering discoveries, and the ability to efficiently use a supercomputer on the scale of Frontera." The post NSF awards compute time on Frontera Supercomputer for 49 projects appeared first on insideHPC.

|

|

by staff on (#527MY)

In this special guest feature, Tim Miller, VP of Product Marketing at One Stop Systems (OSS), writes that his company is addressing the common requirements for video analytic applications with its AI on the Fly® building blocks. AI on the Fly is defined as moving datacenter levels of HPC and AI compute capabilities to the edge. The post Intelligent Video Analytics Pushes Demand for High Performance Computing at the Edge appeared first on insideHPC.

|

|

by Rich Brueckner on (#52ASN)

The Center for Gravitation, Cosmology and Astrophysics (CGCA) at the University of Wisconsin - Milwaukee is seeking a Scientific Data Application Developer in our Job of the Week. "Successful candidates will be part of a large and diverse team in the CGCA that works on a wide variety of computing infrastructure for astronomical and astrophysics problems. Team members interact daily with the physicists, astrophysicists and astronomers in the CGCA and at partner institutions. The CGCA at UW-Milwaukee also offers an exciting and friendly environment in which to work and play. With almost forty faculty, staff, postdocs, and students, the Center is a fun and vibrant place to work." The post Job of the Week: Scientific Data Application Developer at CGSA in Milwaukee appeared first on insideHPC.

|

|

by staff on (#529S1)

Today quantum startup Q-CTRL announced a strategic investment by In-Q-Tel (IQT), the not-for-profit strategic investor that identifies innovative technology solutions to support the national security communities of the U.S. and its allies. "The company’s practice in quantum computing solves the Achilles heel of this new technology – hardware error and instability – by delivering a set of techniques that allow quantum computations to be executed with greater success." The post Q-CTRL to Accelerate Quantum Technology Solutions for National Security Applications appeared first on insideHPC.

|

|

by staff on (#529G0)

Today NVIDIA announced that it has received approval from all necessary authorities to proceed with its planned acquisition of Mellanox, as announced in March 2019. “This exciting transaction would unite two HPC industry leaders and strengthen the combined company’s ability to create data-centric system architectures for the convergence of the HPC and hyperscale markets around AI and other HPDA tasks,” said Steve Conway from Hyperion Research. The post NVIDIA Receives Approval to Proceed with Mellanox Acquisition appeared first on insideHPC.

|

|

by staff on (#529G1)

With the U.S. and many other countries working ‘round the clock to mitigate the devastating effects of the COVID-19 disease, SDSC is providing priority access to its high-performance computer systems and other resources to researchers working to develop an effective vaccine in as short a time as possible. “For us, it absolutely crystalizes SDSC’s mission, which is to deliver lasting impact across the greater scientific community by creating innovative end-to-end computational and data solutions to meet the biggest research challenges of our time. That time is here.” The post SDSC makes Comet Supercomputer available for COVID-19 research appeared first on insideHPC.

|

|

by staff on (#529G2)

Today Supermicro announced that its line of servers optimized for 2nd Gen AMD EPYC Processors has achieved 27 world record performance benchmarks and counting. In addition to the industry's first blade platform, Supermicro's entire portfolio of new H12 A+ Servers fully supports the newly announced high-frequency AMD EPYC 7Fx2 Series processors. "By leveraging the significant performance boost of our new high-frequency AMD EPYC 7Fx2 processors, Supermicro can help drive better results in critical enterprise workloads for their broad customer base." The post Supermicro Sets World Record Performance with AMD EPYC Processors appeared first on insideHPC.

|

|

by Rich Brueckner on (#529G3)

In this video from the HPE Conference, Bastian Koller from HLRS discusses Competence Centres in HPC and their role in European innovation. "The Centres of Excellence (CoE) develop leading edge technologies to promote the adoption of advanced HPC in industry and public administration, and increase competitiveness for European companies and SMEs through access to CoE expertise and services." The post Video: Competence Centres in HPC – their Role in European Innovation appeared first on insideHPC.

|

|

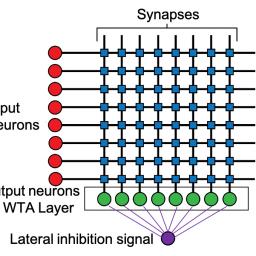

by staff on (#529G5)

Researchers at the Cockrell School of Engineering at The University of Texas at Austin have found a way to make the new generation of smart computers more energy efficient. "Traditionally, silicon chips have formed the building blocks of the infrastructure that powers computers. But this research uses magnetic components instead of silicon and discovers new information about how the physics of the magnetic components can cut energy costs and requirements of training algorithms – neural networks that can think like humans and do things like recognize images and patterns." The post Using Magnetic Circuits for Energy Efficient Big Data Processing appeared first on insideHPC.

|

|

by staff on (#527WH)

As part of the worldwide effort to understand and contain the COVID-19 pandemic, Indiana University’s Jetstream, which offers cloud-based, on-demand computing and data analysis resources within the Extreme Science and Engineering Discovery Environment (XSEDE), is fast-tracking projects that respond to the crisis. "Through the COVID-19 HPC Consortium, Jetstream will provide vital high-performance computing resources. Specifically, priority use of IU’s Jetstream cloud system for analysis of the virus and searches for cures and vaccines. Jetstream offers cloud-based, on-demand computing and data analysis resources, in support of research related to COVID-19." The post Jetstream and XSEDE resources available for pandemic research appeared first on insideHPC.

|

|

by staff on (#527WK)

In this Let's Talk Exascale podcast, Peter Lindstrom from Lawrence Livermore National Laboratory describes how the ZFP project will help reduce the memory footprint and data movement in Exascale systems. “To perform those computations, we oftentimes need random access to individual array elements,” Lindstrom said. “Doing that, coupled with data compression, is extremely challenging.” The post Podcast: ZFP Project looks to Reduce Memory Footprint and Data Movement on Exascale Systems appeared first on insideHPC.

|

|

by staff on (#527WN)

The DOE INCITE program has issued its Call for Proposals. "Open to researchers from academia, industry and government agencies, the INCITE program is aimed at large-scale scientific computing projects that require the power and scale of DOE’s leadership-class supercomputers. The program will award up to 60 percent of the allocable time on Summit, the OLCF’s 200-petaflop IBM AC922 machine, and Theta, the ALCF’s 12-petaflop Cray XC40 system." The post DOE INCITE program seeks proposals for 2021 appeared first on insideHPC.

|

|

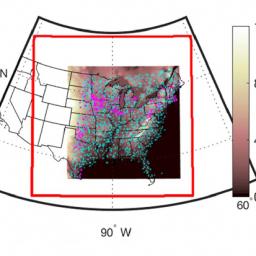

by staff on (#527WQ)

Researchers are using TACC supercomputers to map out a path towards growing wind power as an energy source in the United States. "This research is the first detailed study designed to develop scenarios for how wind energy can expand from the current levels of seven percent of U.S. electricity supply to achieve the 20 percent by 2030 goal outlined by the U.S. Department of Energy National Renewable Energy Laboratory (NREL) in 2014." The post Supercomputing the Expansion of Wind Power appeared first on insideHPC.

|

|

by Rich Brueckner on (#526GY)

Today TYAN announced that its server motherboards and server systems now support the high-frequency AMD EPYC 7F32 (8 cores), EPYC 7F52 (16 cores) and EPYC 7F72 (24 cores) processors. TYAN's HPC and storage server platforms continue to offer exceptional performance to datacenter customers. “Leveraging AMD’s innovation in 7nm process technology, PCIe 4.0 I/O, and an embedded security architecture, TYAN’s 2nd Gen AMD EPYC processor-based platforms are designed to address the most demanding challenges facing the datacenter,” said Danny Hsu, Vice President of MiTAC Computing Technology Corporation's TYAN Business Unit. “Adding the new AMD EPYC 7002 Series processors with TYAN server platforms enable us to provide new capabilities to our customers and partners.” The post TYAN Boosts HPC and Storage Servers with New AMD EPYC 7002 Series Processors appeared first on insideHPC.

|