Google researchers created an amazing scene-rendering AI

New research from Google's UK-based DeepMind subsidiary demonstrates that deep neural networks have a remarkable capacity to understand a scene, represent it in a compact format, and then "imagine" what the same scene would look like from a perspective the network hasn't seen before.

Human beings are good at this. If shown a picture of a table with only the front three legs visible, most people know intuitively that the table probably has a fourth leg on the opposite side and that the wall behind the table is probably the same color as the parts they can see. With practice, we can learn to sketch the scene from another angle, taking into account perspective, shadow, and other visual effects.

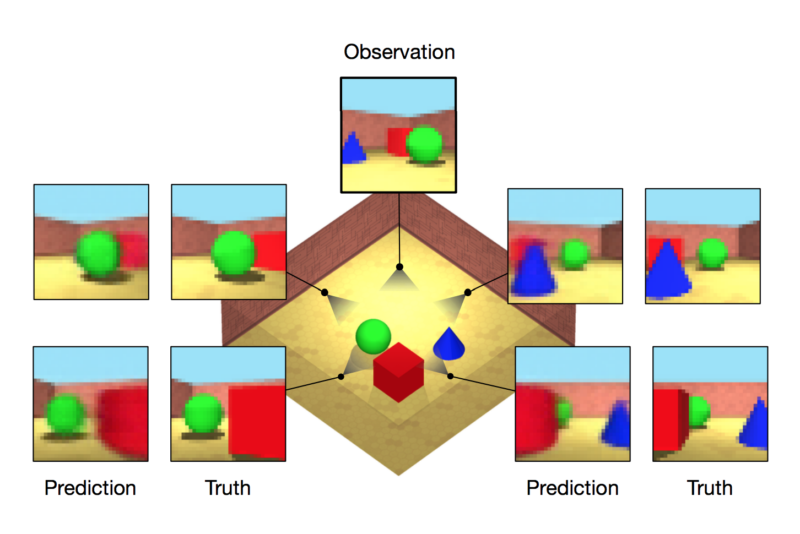

A DeepMind team led by Ali Eslami and Danilo Rezende has developed software based on deep neural networks with these same capabilities, at least for simplified geometric scenes. Given a handful of "snapshots" of a virtual scene, the software, known as a generative query network (GQN), uses a neural network to build a compact mathematical representation of that scene. It then uses that representation to render images of the scene from new perspectives that the network hasn't seen before.
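To make that two-step flow concrete, here is a toy Python sketch of the data path: snapshots go in, a fixed-size representation comes out, and a query viewpoint is rendered from it. It is not DeepMind's code. The class name `ToyGQN`, the vector sizes, and the random, untrained weights are all hypothetical, and summing the per-snapshot encodings is an assumption that goes beyond what this article describes.

```python
import random

REPR_DIM = 8  # size of the compact scene representation (arbitrary, hypothetical)


def make_linear(in_dim, out_dim, rng):
    """Random weight matrix standing in for a trained network layer."""
    return [[rng.uniform(-1, 1) for _ in range(in_dim)] for _ in range(out_dim)]


def apply_linear(weights, vec):
    """Multiply a weight matrix by a vector, both stored as plain lists."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]


class ToyGQN:
    """Hypothetical stand-in for the representation and rendering steps."""

    def __init__(self, image_size, view_size, seed=0):
        rng = random.Random(seed)
        self.encoder = make_linear(image_size + view_size, REPR_DIM, rng)
        self.renderer = make_linear(REPR_DIM + view_size, image_size, rng)

    def represent(self, snapshots):
        """Fold (image, viewpoint) snapshots into one fixed-size vector."""
        scene = [0.0] * REPR_DIM
        for image, viewpoint in snapshots:
            encoded = apply_linear(self.encoder, image + viewpoint)
            # Assumption: snapshots are combined by element-wise summation.
            scene = [s + e for s, e in zip(scene, encoded)]
        return scene

    def render(self, scene, query_viewpoint):
        """Predict a flattened image for a viewpoint not among the snapshots."""
        return apply_linear(self.renderer, scene + query_viewpoint)


# Two made-up snapshots: a 4-pixel "image" plus a 3-number camera position.
model = ToyGQN(image_size=4, view_size=3)
snapshots = [
    ([0.1, 0.2, 0.3, 0.4], [0.0, 0.0, 1.0]),
    ([0.4, 0.3, 0.2, 0.1], [1.0, 0.0, 0.0]),
]
scene = model.represent(snapshots)
predicted = model.render(scene, [0.0, 1.0, 0.0])
```

Because the weights are random, `predicted` is meaningless as an image. The sketch only shows that the representation keeps the same size no matter how many snapshots are supplied, and that rendering needs only that representation and the query viewpoint.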
