Who needs qubits? Factoring algorithm run on a probabilistic computer

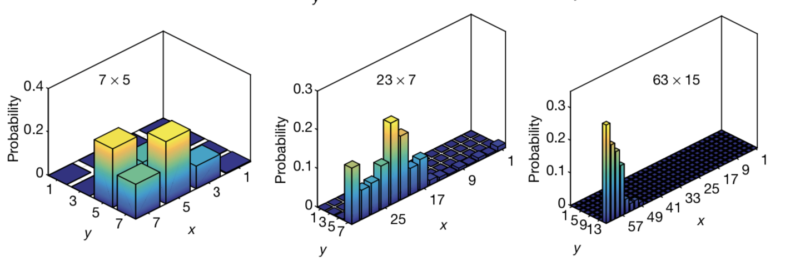

The correct answer is a matter of probabilities, so some related wrong answers also appear with some frequency.

The phenomenal success of our integrated circuits managed to obscure an awkward fact: they're not always the best way to solve problems. The features of modern computers (binary operations, separated processing and memory, and so on) are extremely good at solving a huge range of computational problems. But there are things they're quite bad at, including factoring large numbers, optimizing complex sets of choices, and running neural networks.

Even before the performance gains of current processors had leveled off, people were considering alternative approaches to computing that are better for some specialized tasks. For example, quantum computers could offer dramatic speed-ups in applications like factoring numbers and database searches. D-Wave's quantum optimizer handles (wait for it) optimization problems well. And neural network computing has been done with everything from light to a specialized form of memory called a memristor.

But the list of alternative computing architectures that have been proposed is larger than the list of those that have actually been implemented in functional form. Now, a team of Japanese and American researchers has added an entry to the "functional" category: probabilistic computing. Their hardware is somewhere in between a neural network computer and a quantum optimizer, but they've shown it can factor integers using commercial-grade parts at room temperature.
