ChatGPT goes temporarily “insane” with unexpected outputs, spooking users

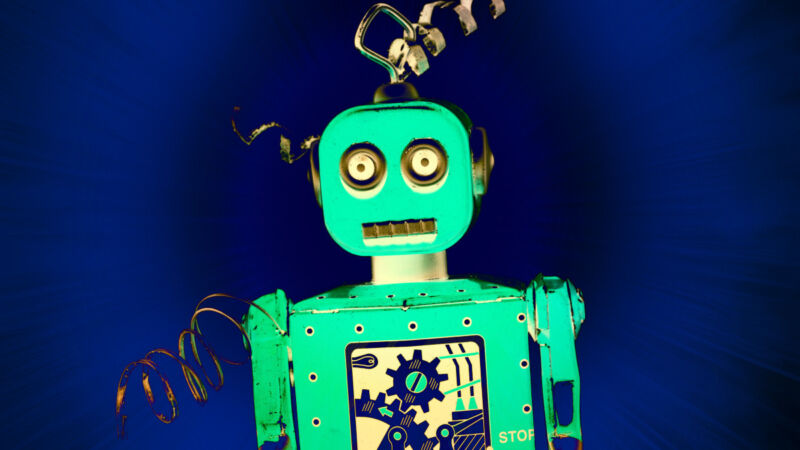

(Credit: Benj Edwards / Getty Images)

On Tuesday, ChatGPT users began reporting unexpected outputs from OpenAI's AI assistant, flooding the r/ChatGPT subreddit with reports of it "having a stroke," "going insane," "rambling," and "losing it." OpenAI has acknowledged the problem and is working on a fix, but the experience serves as a high-profile example of how some people perceive malfunctioning large language models, which are designed to mimic humanlike output.

ChatGPT is not alive and does not have a mind to lose, but reaching for human metaphors (called "anthropomorphization") seems to be the easiest way for most people to describe the unexpected outputs they have been seeing from the AI model. They're forced to use those terms because OpenAI doesn't share exactly how ChatGPT works under the hood; the underlying large language models function like a black box.

"It gave me the exact same feeling, like watching someone slowly lose their mind either from psychosis or dementia," wrote a Reddit user named z3ldafitzgerald in response to a post about ChatGPT bugging out. "It's the first time anything AI related sincerely gave me the creeps."