Pathnet is Deepmind's step to a super neural network for creating an artificial general intelligence

by noreply@blogger.com (brian wang) from NextBigFuture.com on (#2FB85)

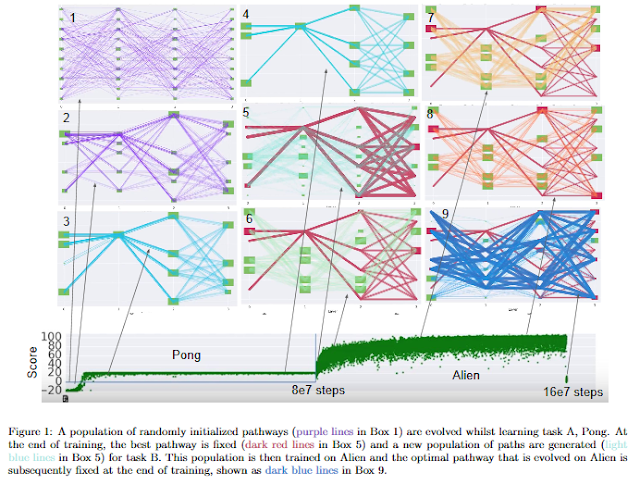

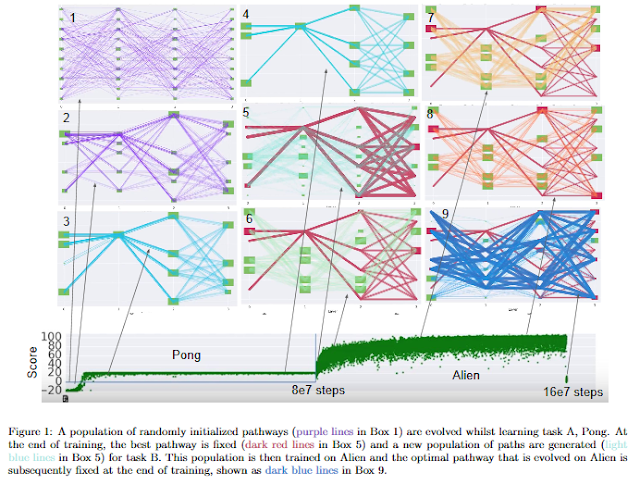

For artificial general intelligence (AGI) it would be efficient if multiple users trained the same giant neural network, permitting parameter reuse, without catastrophic forgetting. PathNet is a first step in this direction. It is a neural network algorithm that uses agents embedded in the neural network whose task is to discover which parts of the network to re-use for new tasks. Agents are pathways (views) through the network which determine the subset of parameters that are used and updated by the forwards and backwards passes of the backpropogation algorithm. During learning, a tournament selection genetic algorithm is used to select pathways through the neural network for replication and mutation. Pathway fitness is the performance of that pathway measured according to a cost function.

They demonstrate successful transfer learning; fixing the parameters along a path learned on task A and re-evolving a new population of paths for task B, allows task B to be learned faster than it could be learned from scratch or after fine-tuning. Paths evolved on task B re-use parts of the optimal path evolved on task A. Positive transfer was demonstrated for binary MNIST, CIFAR, and SVHN supervised learning classification tasks, and a set of Atari and Labyrinth reinforcement learning tasks, suggesting PathNets have general applicability for neural network training. Finally, PathNet also significantly improves the robustness to hyperparameter choices of a parallel asynchronous reinforcement learning algorithm (A3C).

A PathNet is a modular deep neural network having L layers with each layer consisting of M modules. Each module is itself a neural network, here either convolutional or supervised and reinforcement settings, pathNet is compared with two alternative setups: an independent learning control where the target task is learned de novo, and a fine-tuning control where the second task is learned with the same path that learned the first task (but with a new value function and policy readout).

Conclusion

PathNet extends Deepmind's original work on the Path Evolution Algorithm to Deep Learning whereby the weights and biases of the network are learned by gradient descent, but evolution determines which subset of parameters is to be trained. They have shown that PathNet is capable of sustaining transfer learning on at least four tasks in both the supervised and reinforcement learning settings.

PathNet may be thought of as implementing a form of 'evolutionary dropout' in which instead of randomly dropping out units and their connections, dropout samples or 'thinned networks' are evolved. PathNet has the added advantage that dropout frequency is emergent, because the population converges faster at the early layers of the network than in the later layers.

PathNet also resembles 'evolutionary swapout', in fact they have experimented with having standard linear modules, skip modules and residual modules in the same layer and found that path evolution was capable of discovering effective structures within this diverse network. PathNet is related also to recent work on convolutional neural fabrics, but there the whole network is always used and so the principle cannot scale to giant networks. Other approaches to combining evolution and learning have involved parameter copying, whereas there is no such copying in the current implementation of PathNet.

They have only demonstrated PathNet in a fairly small network, the principle can scale to much larger neural networks with more efficient implementations of pathway gating. This will allow extension to multiple tasks. They also wish to try PathNet on other RL tasks which may be more suitable for transfer learning than Atari, for example continuous robotic control problems. Further investigation is required to understand the extent to which PathNet may be superior to using fixed paths.

1. a possibility is that mutable paths provide a more useful form of diverse exploration in RL tasks.

2. it is possible that a larger number of workers can be used in A3C because if each worker can determine which parameters to update, there may be selection for pathways that do not interfere with each other.

They are still investigating the potential benefits of module duplication.

Using this measure it is possible to bias the mutation operator such that currently more globally useful modules are more likely to be slotted into other paths. Further work is also to be carried out in multi-task learning which has not yet been addressed in this paper.

Finally, it is always possible and sometimes desirable to replace evolutionary variation operators with variation operators learned by reinforcement learning. A tournament selection algorithm with mutation is only the simplest way to achieve adaptive paths. It is clear that more sophisticated methods such as policy gradient methods may be used to learn the distribution of pathways as a function of the long term returns obtained by paths, and as a function of a task description input. This may be done through a softer form of gating than used by PathNet here. Furthermore, a population (ensemble) of soft gate matrices may be maintained and an RL algorithm may be permitted to 'mutate' these values.

The operations of PathNet resemble those of the Basal Ganglia, which we propose determines which subsets of the cortex are to be active and trainable as a function of goal/subgoal signals from the prefrontal cortex, a hypothesis related to others in the literature.

Transfer learning has been shown on four tasks in AI. Each game task currently can be performed at a higher than human level. If there was transfer learning across thousands of tasks and many domains then such a system could attain broadly superior capabilities.

Read more

They demonstrate successful transfer learning; fixing the parameters along a path learned on task A and re-evolving a new population of paths for task B, allows task B to be learned faster than it could be learned from scratch or after fine-tuning. Paths evolved on task B re-use parts of the optimal path evolved on task A. Positive transfer was demonstrated for binary MNIST, CIFAR, and SVHN supervised learning classification tasks, and a set of Atari and Labyrinth reinforcement learning tasks, suggesting PathNets have general applicability for neural network training. Finally, PathNet also significantly improves the robustness to hyperparameter choices of a parallel asynchronous reinforcement learning algorithm (A3C).

A PathNet is a modular deep neural network having L layers with each layer consisting of M modules. Each module is itself a neural network, here either convolutional or supervised and reinforcement settings, pathNet is compared with two alternative setups: an independent learning control where the target task is learned de novo, and a fine-tuning control where the second task is learned with the same path that learned the first task (but with a new value function and policy readout).

Conclusion

PathNet extends Deepmind's original work on the Path Evolution Algorithm to Deep Learning whereby the weights and biases of the network are learned by gradient descent, but evolution determines which subset of parameters is to be trained. They have shown that PathNet is capable of sustaining transfer learning on at least four tasks in both the supervised and reinforcement learning settings.

PathNet may be thought of as implementing a form of 'evolutionary dropout' in which instead of randomly dropping out units and their connections, dropout samples or 'thinned networks' are evolved. PathNet has the added advantage that dropout frequency is emergent, because the population converges faster at the early layers of the network than in the later layers.

PathNet also resembles 'evolutionary swapout', in fact they have experimented with having standard linear modules, skip modules and residual modules in the same layer and found that path evolution was capable of discovering effective structures within this diverse network. PathNet is related also to recent work on convolutional neural fabrics, but there the whole network is always used and so the principle cannot scale to giant networks. Other approaches to combining evolution and learning have involved parameter copying, whereas there is no such copying in the current implementation of PathNet.

They have only demonstrated PathNet in a fairly small network, the principle can scale to much larger neural networks with more efficient implementations of pathway gating. This will allow extension to multiple tasks. They also wish to try PathNet on other RL tasks which may be more suitable for transfer learning than Atari, for example continuous robotic control problems. Further investigation is required to understand the extent to which PathNet may be superior to using fixed paths.

1. a possibility is that mutable paths provide a more useful form of diverse exploration in RL tasks.

2. it is possible that a larger number of workers can be used in A3C because if each worker can determine which parameters to update, there may be selection for pathways that do not interfere with each other.

They are still investigating the potential benefits of module duplication.

Using this measure it is possible to bias the mutation operator such that currently more globally useful modules are more likely to be slotted into other paths. Further work is also to be carried out in multi-task learning which has not yet been addressed in this paper.

Finally, it is always possible and sometimes desirable to replace evolutionary variation operators with variation operators learned by reinforcement learning. A tournament selection algorithm with mutation is only the simplest way to achieve adaptive paths. It is clear that more sophisticated methods such as policy gradient methods may be used to learn the distribution of pathways as a function of the long term returns obtained by paths, and as a function of a task description input. This may be done through a softer form of gating than used by PathNet here. Furthermore, a population (ensemble) of soft gate matrices may be maintained and an RL algorithm may be permitted to 'mutate' these values.

The operations of PathNet resemble those of the Basal Ganglia, which we propose determines which subsets of the cortex are to be active and trainable as a function of goal/subgoal signals from the prefrontal cortex, a hypothesis related to others in the literature.

Transfer learning has been shown on four tasks in AI. Each game task currently can be performed at a higher than human level. If there was transfer learning across thousands of tasks and many domains then such a system could attain broadly superior capabilities.

Read more