A Massive Leak

"Memory leaks are impossible in a garbage collected language!" is one of my favorite lies. It feels true, but it isn't. Sure, it's much harder to make them, and they're usually much easier to track down, but you can still create a memory leak. Most times, it's when you create objects, dump them into a data structure, and never empty that data structure. Usually, it's just a matter of finding out what object references are still being held. Usually.
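For a concrete picture of the boring, ordinary version of this leak, here's a minimal sketch in Python. The `FrameLog` class is hypothetical, invented purely for illustration. The collector is doing its job perfectly here: every buffer is still reachable through the list, so nothing is ever eligible for collection.

```python
import gc


class FrameLog:
    """Hypothetical cache that remembers every frame it has ever seen."""

    def __init__(self):
        self.frames = []

    def record(self, frame):
        # Nothing ever removes entries, so every frame stays reachable.
        self.frames.append(frame)


log = FrameLog()
for _ in range(1000):
    log.record(bytearray(1024))  # 1 KiB per "frame"

gc.collect()  # a full collection frees nothing: the list still holds it all
retained = sum(len(frame) for frame in log.frames)
```

Bound the list, or clear it once the frames are no longer needed, and the "leak" goes away.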

A few months ago, I discovered a new variation on that theme. I was working on a C# application that was leaking memory faster than bad waterway engineering in the Imperial Valley.

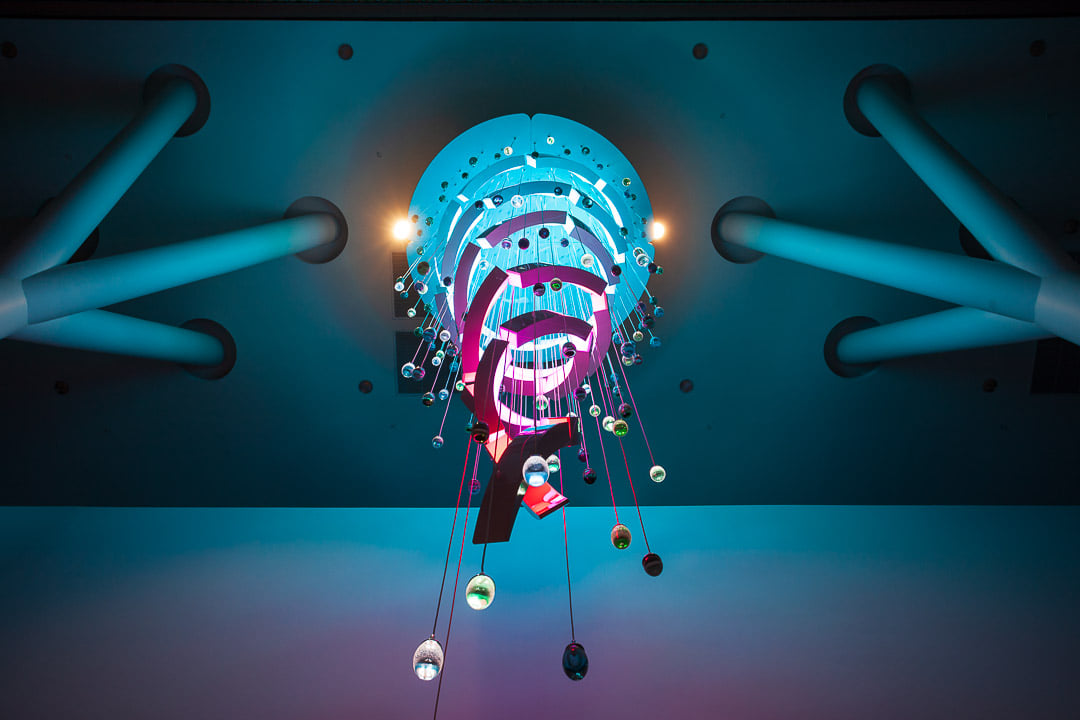

I don't exactly work in the "enterprise" space anymore, though I still interact with corporate IT departments and get to see some serious internal WTFs. This is a chandelier we built for the Allegheny Health Network's Cancer Institute, which recently opened in Pittsburgh. It's 15 meters tall, weighs about 450 kg, and is broken up into 30 segments, each with hundreds of addressable LEDs in a grid. The software we were writing was built to make them blink pretty.

Each of those 30 segments is home to a single-board computer with its GPIO pins wired up to addressable LEDs. Each computer runs a UDP listener, and we blast them with packets containing RGB data, which they dump to the LEDs using a heavily tweaked version of LEDScape.

This is our standard approach to most of our lighting installations. We drop a Beaglebone onto a custom circuit board and let it drive the LEDs, then we have a render-box someplace which generates frame data and chops it up into UDP packets. Depending on the environment, we can drive anything from 30 to 120 frames per second this way (and probably faster, but that's rarely useful).

Apologies to the networking folks, but this works very well. Yes, we're blasting many megabytes of raw bitmap data across the network, but we're usually on our own dedicated network segment. We use UDP because, well, we don't care about the data that much. A dropped packet or an out of order packet isn't going to make too large a difference in most cases. We don't care if our destination Beaglebone is up or down, we just blast the packets out onto the network, and they get there reliably enough that the system works.

Now, normally, we do this from Python programs on Linux. For this particular installation, though, we have an interactive kiosk which provides details about cancer treatments and patient success stories, and lets the users interact with the chandelier in real time. We wanted to show them a 3D model of the chandelier on the screen, and show them an animation on the UI that was mirrored in the physical object. After considering our options, we decided this was a good case for Unity and C#. After a quick test of doing multitouch interactions, we also decided that we shouldn't deploy to Linux (Unity didn't really have good Linux multitouch support), so we would deploy a Windows kiosk. This meant we were doing most of our development on macOS, but our final build would be for Windows.

Months go by. We worked on the software while building the physical pieces, which meant the actual testbed hardware wasn't available for most of the development cycle. Custom electronics were being refined and physical designs were changing as we iterated to the best possible outcome. This is normal for us, but it meant that we didn't start getting real end-to-end testing until very late in the process.

Once we started test-hanging chandelier pieces, we started basic developer testing. You know how it is: you push the run button, you test a feature, you push the stop button. Tweak the code, rinse, repeat. Eventually, though, we had about two-thirds of the chandelier pieces plugged in, and started deploying to the kiosk computer, running Windows.

We left it running, and the next time someone walked by and decided to give the screen a tap... nothing happened. It was hung. Well, that could be anything. We rebooted and checked again, and everything seemed fine until, a few minutes later, it hung... again. We opened the task manager (which took a while, because hey, everything was really slow), and sure enough, RAM was full and the computer was crawling because it was constantly thrashing to disk.

We're only a few weeks before we actually have to ship this thing, and we've discovered a massive memory leak, and it's such a sudden discovery that it feels like the draining of Lake Agassiz. No problem, though, we go back to our dev machines, fire it up in the profiler, and start looking for the memory leak.

Which wasn't there. The memory leak only appeared in the Windows build, and never happened in the Mac or Linux builds. Clearly, there must be some different behavior, and it must be around object lifecycles. When you see a memory leak in a GCed language, you assume you're creating objects that the GC ends up thinking are in use. In the case of Unity, your assumption is that you're handing objects off to the game engine, and not telling it you're done with them. So that's what we checked, but we just couldn't find anything that fit the bill.

Well, we needed to create some relatively large arrays to use as framebuffers. Maybe that's where the problem lay? We kept digging through the traces, we added a bunch of profiling code, we spent days trying to dig into this memory leak...

... and then it just went away. Our memory leak just became a Heisenbug, our shipping deadline was even closer, and we officially knew less about what was going wrong than when we started. For bonus points, once this kiosk ships, it's not going to be connected to the Internet, so if we need to patch the software, someone is going to have to go onsite. And we aren't going to have a suitable test environment, because we're not exactly going to build two gigantic chandeliers.

The folks doing assembly had the whole chandelier built up, hanging in three sections (we don't have any 14m tall ceiling spaces), and all connected to the network for a smoke test. There wasn't any smoke, but they needed to do more work. Someone unplugged a third of the chandelier pieces from the network.

And the memory leak came back.

We use UDP because we don't care if our packet sends succeed or not. Frame-by-frame, we just want to dump the data on the network and hope for the best. On macOS and Linux, our software usually uses a sender thread that, at the end of the day, just wraps calls to the `send` system call. It's simple, it's dumb, and it works. We ignore errors.
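For flavor, here's roughly what that "simple and dumb" approach looks like in Python. This is a sketch, not our production sender: the function names and the host-to-bytes `slices` mapping are made up for illustration, and it uses `sendto` on an unconnected socket rather than whatever our real code does.

```python
import socket


def blast(sock, packet, host, port):
    """Hand one datagram to the OS; report failure but never raise."""
    try:
        sock.sendto(packet, (host, port))
        return True
    except OSError:
        # Dropped frame. Another one is coming soon anyway.
        return False


def send_frame(sock, slices, port):
    """Send each host its slice of the frame; return how many sends failed.

    `slices` maps a host address to that host's bytes (hypothetical layout).
    """
    return sum(0 if blast(sock, data, host, port) else 1
               for host, data in slices.items())
```

The important property is that `blast` hands the datagram to the OS and returns right away. Nothing waits to learn whether the destination exists.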

In C#, though, we didn't do things exactly the same way. Instead, we used the .NET UdpClient object and its SendAsync method. We assumed that it would do roughly the same thing.

We were wrong.

```csharp
await client.SendAsync(packet, packet.Length, hostip, port);
```

Async operations in C# use Tasks, which are like promises or futures in other environments. They let .NET manage background work without the developer worrying about the details. The await keyword is syntactic sugar which suspends the current method until the Task completes, handing control back to the caller in the meantime. While we await here, we don't actually do anything with the result of the operation, because again: we don't care about it. Just send the packet, hope for the best.

We don't care, but Windows does. After a load of investigation, what we discovered is that Windows would first try to resolve the IP address. Which, if a host was down, obviously it couldn't. But Windows was friendly, Windows was smart, and Windows wasn't going to let us down: it kept the Task open and kept trying to resolve the address. It held the Task open for 3 seconds before finally deciding that it couldn't reach the host and erroring out.

An error which, as I stated before, we were ignoring, because we didn't care.

Still, if you can count and have a vague sense of the linear passage of time, you can see where this is going. We had 30 hosts. We sent each of the 30 packets every second. When one or more of those hosts were down, Windows would keep each of those packets "alive" for 3 seconds. By the time that one expired, 90 more had queued up behind it.

That was the source of our memory leak, and our Heisenbug. If every Beaglebone was up, we didn't have a memory leak. If only one of them was down, the leak was pretty slow. If ten or twenty were out, the leak was a waterfall.
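To put rough numbers on that, here's a toy model in Python. It is a simplification I'm assuming for illustration, not a measurement: each down host gets one new packet per second, and its pending sends clear one at a time, one per 3-second timeout. The function and parameter names are invented.

```python
def backlog_after(seconds, hosts_down, sends_per_second=1, timeout_s=3):
    """Packets still pending after `seconds`, in a toy per-host queue model.

    Assumes sends to healthy hosts finish instantly and sends to a down
    host finish one at a time, each taking `timeout_s` seconds.
    """
    queued = hosts_down * sends_per_second * seconds
    expired = hosts_down * (seconds // timeout_s)
    return max(queued - expired, 0)


for down in (0, 1, 10, 20):
    print(down, "hosts down ->", backlog_after(60, down), "packets pending")
```

In this model the backlog is zero with every host up and grows in proportion to the number of hosts that are down, which lines up with the slow-drip versus waterfall behavior we saw.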

I spent a lot of time reading up on Windows networking after this. Despite digging through the socket APIs, I honestly couldn't figure out how to defeat this behavior. I tried various timeout settings. I tried tracking each task myself and explicitly timing them out if they took longer than a few frames to send. I was never able to tell Windows, "just toss the packet and hope for the best".

Well, my co-worker was building health monitoring on the Beaglebones anyway. While the kiosk wasn't going to be on the Internet via a "real" Internet connection, we did have a cellular modem attached, which we could use to send health info, so getting pings that say "hey, one of the Beaglebones failed" is useful. So my co-worker hooked that into our network sending layer: don't send frames to Beaglebones which are down. Recheck the down Beaglebones every five minutes or so. Continue to hope for the best.
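The gating logic is simple enough to sketch. This is a hypothetical Python rendition, not the code we shipped: `HostGate` and its methods are names I've made up, and the 300-second default stands in for "every five minutes or so". The health monitor would call `mark_down` and `mark_up`, and the sender would check `should_send` before each packet.

```python
import time


class HostGate:
    """Tracks which hosts are down and when each may be retried."""

    def __init__(self, recheck_s=300.0, clock=time.monotonic):
        self.recheck_s = recheck_s
        self.clock = clock      # injectable so the logic can be tested
        self.retry_at = {}      # host -> earliest time we may try it again

    def mark_down(self, host):
        self.retry_at[host] = self.clock() + self.recheck_s

    def mark_up(self, host):
        self.retry_at.pop(host, None)

    def should_send(self, host):
        retry = self.retry_at.get(host)
        # Unknown hosts are assumed healthy; down hosts wait out the window.
        return retry is None or self.clock() >= retry
```

Once the recheck window passes, `should_send` lets packets through again, and it's up to the health check to call `mark_down` again if the host still isn't answering.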

This solution worked. We shipped. The device looks stunning, and as patients and guests come to use it, I hope they find some useful information, a little joy, and maybe some hope while playing with it. And while there may or may not be some ugly little hacks still lurking in that code, this was the one thing which made me say: WTF.