Cerebras-GPT for Open Source and More Efficient Large Language Models

by Brian Wang from NextBigFuture.com on (#6AA5B)

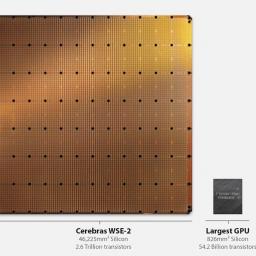

Cerebras has open-sourced seven GPT-3-style models ranging from 111 million to 13 billion parameters. Trained using the Chinchilla formula, these models set new benchmarks for accuracy and compute efficiency. Cerebras makes wafer-scale computer chips. Cerebras-GPT trains faster, costs less to train, and consumes less energy than any publicly available model to date. All models ...
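
The summary does not spell out the Chinchilla formula. As a rough sketch, assuming the commonly cited rule of thumb of about 20 training tokens per model parameter, and the usual approximation that training compute is about 6 × parameters × tokens, the token and compute budgets for the smallest and largest model sizes mentioned above could be estimated like this. The constants and the function name `chinchilla_budget` are illustrative assumptions, not taken from the article or from Cerebras.

```python
# Rule-of-thumb constants (assumptions, not stated in the article).
TOKENS_PER_PARAM = 20       # Chinchilla-style compute-optimal ratio
FLOPS_PER_PARAM_TOKEN = 6   # common approximation: C ~ 6 * N * D


def chinchilla_budget(n_params: int) -> tuple[int, int]:
    """Return (training tokens, approximate training FLOPs) for a model size."""
    tokens = TOKENS_PER_PARAM * n_params
    flops = FLOPS_PER_PARAM_TOKEN * n_params * tokens
    return tokens, flops


# Smallest and largest parameter counts quoted in the summary.
for n in (111_000_000, 13_000_000_000):
    tokens, flops = chinchilla_budget(n)
    print(f"{n:.3e} params -> {tokens:.3e} tokens, ~{flops:.3e} FLOPs")
```

Because tokens scale linearly with parameters under this rule, the estimated compute grows with the square of the model size, which is why training efficiency matters more as models get larger.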
