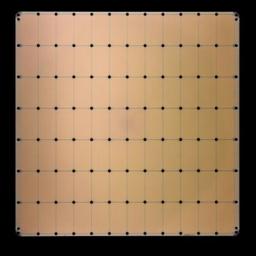

Cerebras gives waferscale chips inferencing twist, claims 1,800 tokens per sec generation rates

Faster than you can read? More like blink and you'll miss the hallucination

Hot Chips: Inference performance in many modern generative AI workloads is a function of memory bandwidth rather than compute. The faster you can shuttle bits in and out of high-bandwidth memory (HBM), the faster the model can generate a response....
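To see why bandwidth sets the ceiling, here is a back-of-the-envelope sketch. It assumes that generating each token means reading every model weight from memory once, and it ignores batching, caching, and compute time. The function name and the figures (8 billion parameters, 2 bytes per parameter, 3 TB/s of memory bandwidth) are illustrative assumptions, not numbers from Cerebras or any particular accelerator.

```python
def max_tokens_per_sec(params_billion: float,
                       bytes_per_param: float,
                       bandwidth_tb_s: float) -> float:
    """Rough upper bound on single-stream generation rate when memory
    bandwidth is the bottleneck (hypothetical helper for illustration)."""
    # Total bytes that must be read from memory to produce one token,
    # assuming every weight is touched exactly once per token.
    model_bytes = params_billion * 1e9 * bytes_per_param
    # Memory bandwidth converted from TB/s to bytes/s.
    bandwidth_bytes_per_sec = bandwidth_tb_s * 1e12
    return bandwidth_bytes_per_sec / model_bytes


# Illustrative inputs only: 8B parameters at 16-bit precision, 3 TB/s.
rate = max_tokens_per_sec(params_billion=8, bytes_per_param=2, bandwidth_tb_s=3.0)
print(f"{rate:.1f} tokens/sec")
```

Under these assumptions, doubling the bandwidth doubles the bound and halving the bytes per parameter does the same, which is why the memory system rather than raw compute tends to dominate the conversation.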