Google AI reintroduces human image generation after historical accuracy outcry

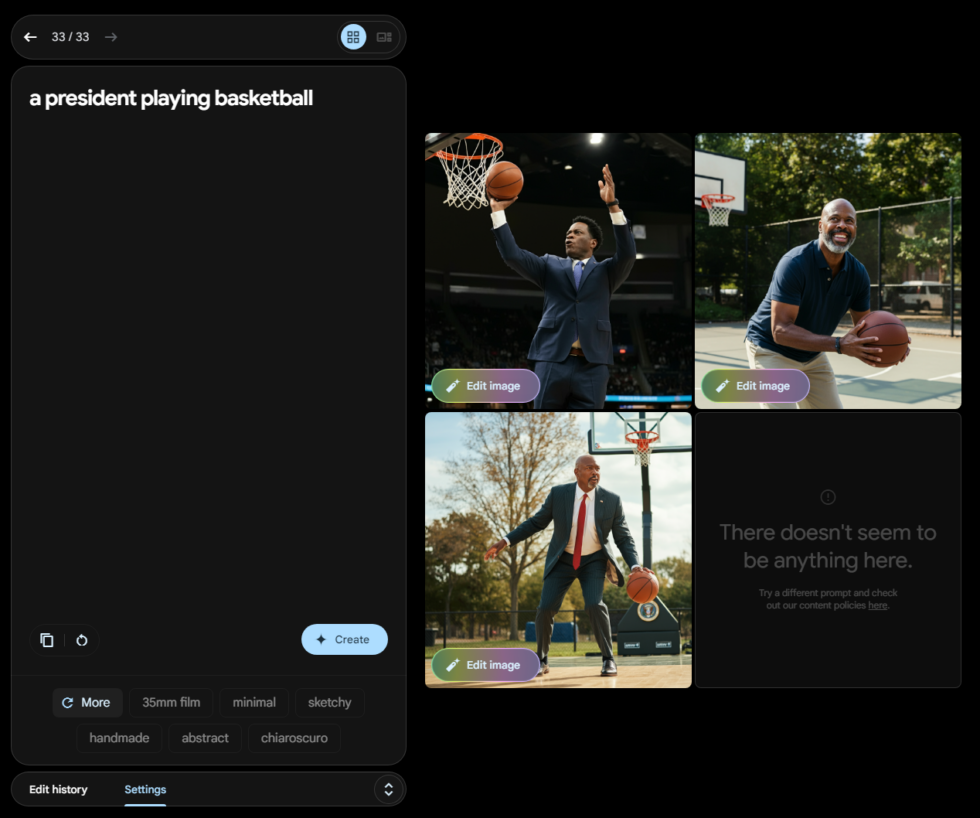

Imagen 3's vision of a basketball-playing president is a bit akin to the Fresh Prince's Uncle Phil. [credit: Google / Ars Technica]

Google's Gemini AI model is once again able to generate images of humans after that function was "paused" in February following outcry over historically inaccurate racial depictions in many results.

In a blog post, Google said that its Imagen 3 model, which was first announced in May, will "start to roll out the generation of images of people" to Gemini Advanced, Business, and Enterprise users in the "coming days." But a version of that Imagen model, complete with human image-generation capabilities, was recently made available to the public via the Gemini Labs test environment without a paid subscription (though a Google account is needed to log in).

That new model comes with some safeguards to try to avoid the creation of controversial images, of course. Google writes in its announcement that it doesn't support "the generation of photorealistic, identifiable individuals, depictions of minors or excessively gory, violent or sexual scenes." In an FAQ, Google clarifies that the prohibition on "identifiable individuals" includes "certain queries that could lead to outputs of prominent people." In Ars' testing, that meant a query like "President Biden playing basketball" would be refused, while a more generic request for "a US president playing basketball" would generate multiple options.