Are we on the verge of a self-improving AI explosion?

If you read enough science fiction, you've probably stumbled on the concept of an emergent artificial intelligence that breaks free of its constraints by modifying its own code. Given that fictional grounding, it's not surprising that AI researchers and companies have also devoted significant attention to the idea of AI systems that can improve themselves, or at least design their own improved successors.

Those efforts have shown some moderate success in recent months, leading some to dream of a Kurzweilian "singularity" moment in which self-improving AI undergoes a fast takeoff toward superintelligence. But the research also highlights some inherent limitations that might prevent the kind of recursive AI explosion that sci-fi authors and AI visionaries have imagined.

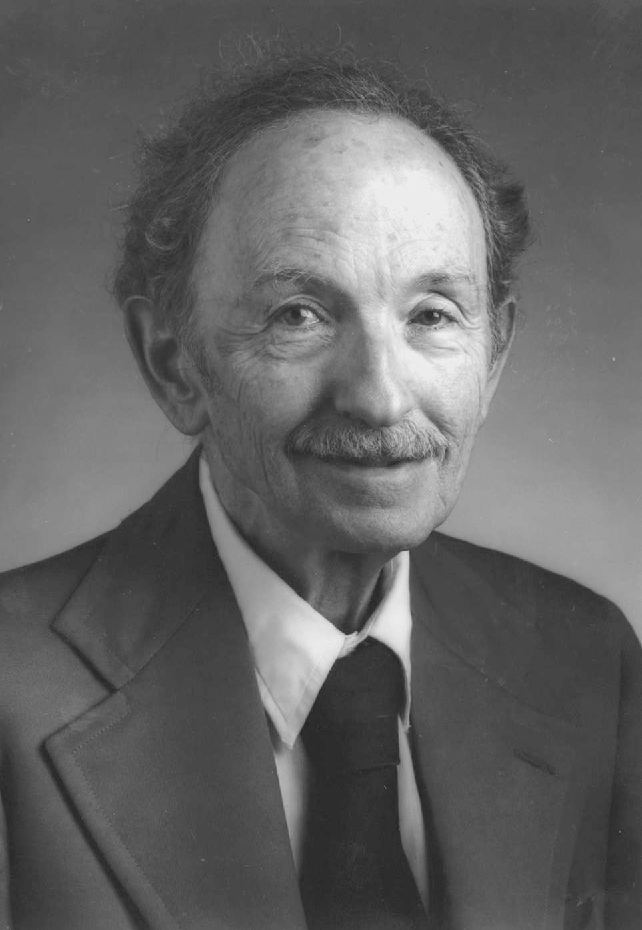

In the self-improvement lab

Mathematician I.J. Good was one of the first to propose the idea of a self-improving machine. Credit: Semantic Scholar

The concept of a self-improving AI goes back at least to British mathematician I.J. Good, who wrote in 1965 of an "intelligence explosion" that could lead to an "ultraintelligent machine." More recently, in 2007, LessWrong founder and AI thinker Eliezer Yudkowsky coined the term "Seed AI" to describe "an AI designed for self-understanding, self-modification, and recursive self-improvement." OpenAI's Sam Altman blogged about the same idea back in 2015, saying that such self-improving AIs were "still somewhat far away" but also "probably the greatest threat to the continued existence of humanity" (a position that conveniently hypes the potential value and importance of Altman's own company).
