by staff on (#3SNFT)

BOXX Technologies has announced "the world’s fastest workstation" featuring a professionally overclocked Intel Core i7-8086K Limited Edition processor capable of reaching 5.0GHz across all six of its cores. In commemoration of the 40th anniversary of the Intel 8086 (the processor that launched the x86 architecture), Intel provided BOXX with a limited number of these exclusive, high-performance CPUs, which are ideal for CAD, 3D modeling, and animation workflows. "The APEXX SE workstation extends far beyond the celebration of the 8086 anniversary," said Shoaib Mohammad, BOXX VP of Marketing and Business Development. "By integrating this special technology, we’re providing our customers with a unique opportunity to accelerate their workflows with historic Intel processing power they can’t get from the multinational, commodity PC manufacturers."

The post BOXX Special Edition Workstation Breaks 5.0GHz Clock Speed Barrier appeared first on insideHPC.

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Field | Value |
| --- | --- |
| Link | https://insidehpc.com/ |
| Feed | http://insidehpc.com/feed/ |
| Updated | 2026-03-03 12:15 |

by Rich Brueckner on (#3SNAK)

Shuquan Huang from 99Cloud gave this talk at the OpenStack Summit in Vancouver. "In this session, we will introduce OpenStack Acceleration Service – Cyborg, which provides a management framework for accelerator devices (e.g. FPGA, GPU, NVMe SSD). We will also discuss Rack Scale Design (RSD) technology and explain how physical hardware resources can be dynamically aggregated to meet the AI/HPC requirements."

The post Optimized HPC-AI cloud with OpenStack acceleration service and composable hardware appeared first on insideHPC.

by Rich Brueckner on (#3SNAN)

OpenStack cloud provider teuto.net has reported exceptional low-latency block storage performance after deploying Excelero's NVMesh Server SAN along with Mellanox SN2100 switches. As more customers need storage for demanding databases whose requirements exceed Ceph's performance, teuto.net achieved a 2,000% performance gain and 10x lower I/O latency with NVMesh compared to Ceph, while avoiding costly, less scalable appliances and proprietary vendor solutions.

The post Excelero and Mellanox Boost Ceph Performance in OpenStack Cloud appeared first on insideHPC.

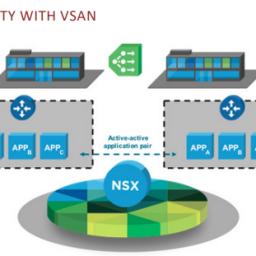

by Peter ffoulkes on (#3SNAQ)

This is the second entry in an insideHPC guide series that explores networking with Dell EMC Ready Nodes. Read on to learn more about Dell EMC networking for vSAN.

The post Exploring Dell EMC Networking for vSan appeared first on insideHPC.

by staff on (#3SNAS)

"Modern processors perform their best with parallel code that's both vectorized and threaded, which can run more than 100 times faster more than serial code. So how can you accomplish this more easily through parallel programming? Enter Parallel Studio XE, a suite of tools that simplifies and speeds the design, building, tuning, and scaling of applications with the latest code modernization methods."The post Video: Speed Your Code with Intel Parallel Studio XE appeared first on insideHPC.

by staff on (#3SK6C)

Today Hyperion Research announced that Steve Conway, Hyperion Research senior vice president of research, will now also serve as the company's chief operating officer, according to Hyperion CEO Earl Joseph. "The promotion recognizes key responsibilities Steve has already taken on, in addition to leading our research activities, such as heavily influencing our view of the fast-evolving HPC market, playing a major role in setting the direction for our business, creating and launching new programs, and managing important areas of the business," Joseph said.

The post Hyperion Research’s Steve Conway Adds COO Title appeared first on insideHPC.

by staff on (#3SK6D)

Today Lenovo released details of its pending Neptune liquid-cooling systems, which will enable data centers to run "up to 50 percent more efficiently, with uncompromising performance while maintaining a dense footprint." "In Roman mythology, Neptune used his three-pronged trident to tame the sea. This inspired Lenovo’s liquid cooling ‘trident’ of cooling technologies: Direct to Node (DTN) warm water cooling, rear door heat exchanger, and hybrid solutions that combine both air and liquid cooling."

The post Lenovo Moves Liquid Cooling Forward with Neptune appeared first on insideHPC.

by staff on (#3SK0R)

Today NVIDIA made a number of announcements centered around machine learning software at the Computer Vision and Pattern Recognition Conference in Salt Lake City. "NVIDIA is kicking off the conference by demonstrating an early release of Apex, an open-source PyTorch extension that helps users maximize deep learning training performance on NVIDIA Volta GPUs. Inspired by state of the art mixed precision training in translational networks, sentiment analysis, and image classification, NVIDIA PyTorch developers have created tools bringing these methods to all levels of PyTorch users."

The post NVIDIA Releases Code for Accelerated Machine Learning appeared first on insideHPC.

by staff on (#3SK0T)

Today Cray announced the ClusterStor L300F Lustre-based scalable flash storage solution, designed for applications that need high-performance scratch storage to quickly store and retrieve intermediate results. "Flash is poised to become an essential technology in every HPC storage solution," said Jeff Janukowicz, IDC’s research vice president, Solid State Drives and Enabling Technologies. "With the ClusterStor L300F, Cray has positioned itself to be at the leading edge of the next generation of HPC storage solutions."

The post Cray Rolls Out Scalable Flash Storage Solution for Lustre Workloads appeared first on insideHPC.

by staff on (#3SJVZ)

This is the third entry in a five-part insideHPC series that takes an in-depth look at how machine learning, deep learning and AI are being used in the energy industry. Read on to learn how edge computing is playing a role in operating drilling rigs, ensuring pipeline integrity and more.

The post Edge Computing Proves Critical for Drilling Rigs, Pipeline Integrity appeared first on insideHPC.

by Rich Brueckner on (#3SH0P)

In this video from the Dell EMC HPC Community Meeting, Josh Simons from VMware describes why more customers are moving their HPC & AI workloads to virtualized environments using vSphere. "With VMware, you can capture the benefits of virtualization for HPC workloads while delivering performance that is comparable to bare-metal. Our approach to virtualizing HPC adds a level of flexibility, operational efficiency, agility and security that cannot be achieved in bare-metal environments, enabling faster time to insights and discovery."

The post Virtualizing AI & HPC Workloads with vSphere appeared first on insideHPC.

by staff on (#3SGWJ)

FlyElephant has announced its partnership with Squadex, a cloud transformation consultancy, to extend AI & Big Data consulting services and reinforce their teams. "Our cooperation will enable FlyElephant customers to get top-notch consulting services and support in deployment and customization of our platform, HPC or Big Data cluster in an AWS Cloud, development and optimization of machine learning models," said Dmitry Spodarets, Founder at FlyElephant and AI/ML & HPC Lead at Squadex. "FlyElephant will augment Squadex’s capabilities in HPC and AI areas, enabling synergies to create new types of services in these areas."

The post FlyElephant and Squadex team up for expansion in AI and HPC services appeared first on insideHPC.

by staff on (#3SGR4)

In this episode of Let's Talk Exascale, researchers from the ADIOS project describe how they are optimizing I/O on exascale architectures and making the code easily maintainable, sustainable, and extensible, while ensuring its performance and scalability. "The Adaptable I/O System (ADIOS) project in the ECP supports exascale applications by addressing their data management and in situ analysis needs."

The post Let’s Talk Exascale: Optimizing I/O at the ADIOS Project appeared first on insideHPC.

by staff on (#3SGR6)

Today HPE announced plans to deliver the world’s largest Arm supercomputer. As part of the Vanguard program, Astra, the new Arm-based system, will be used by the NNSA to run advanced modeling and simulation workloads for addressing areas such as national security, energy and science. "By introducing Arm processors with the HPE Apollo 70, a purpose-built HPC architecture, we are bringing powerful elements, like optimal memory performance and greater density, to supercomputers that existing technologies in the market cannot match," said Mike Vildibill, vice president, Advanced Technologies Group, HPE.

The post ARM goes Big: HPE Builds Petaflop Supercomputer for Sandia appeared first on insideHPC.

by Rich Brueckner on (#3SGM2)

In this podcast, the Radio Free HPC team previews the ISC 2018 Student Cluster Competition. “Now in its seventh year, the Student Cluster Competition enables international STEM teams to take part in a real-time contest focused on advancing STEM disciplines and HPC skills development at ISC 2018 from June 25-27.”

The post Radio Free HPC Previews ISC Student Cluster Competition & Ancillary Events appeared first on insideHPC.

by Rich Brueckner on (#3SF62)

Simon Burbidge from the University of Bristol gave this talk at the HPC User Forum. "Our research focuses on the application of heterogeneous and many-core computing to solve large-scale scientific problems. Related research problems we are addressing include: performance portability across many-core devices; automatic optimization of many-core codes; communication-avoiding algorithms for massive scale systems; and fault tolerance software techniques for resiliency at scale."

The post Advanced Computing: HPC and RDS at University of Bristol appeared first on insideHPC.

by staff on (#3SF3Y)

AI startup Wave Computing announced this week that it has acquired MIPS. "The acquisition of MIPS allows us to combine technologies to create products that will deliver a single ‘Datacenter-to-Edge’ platform ideal for AI and deep learning. We’ve already received very strong and enthusiastic support from leading suppliers and strategic partners, as they affirm the value of data scientists being able to experiment, develop, test and deploy their neural networks on a common platform spanning to the Edge of Cloud."

The post Wave Computing Acquires MIPS for AI on the Edge appeared first on insideHPC.

by staff on (#3SDRS)

Today Intel released a sneak peek at its plans for ISC 2018 in Frankfurt. The company will showcase how it's helping AI developers, data scientists and HPC programmers transform industries by tapping into HPC to power AI solutions. "ISC brings together academic and commercial disciplines to share knowledge in the field of high performance computing. Intel's presence at the event will include keynotes, sessions, and booth demos that will be focused on the future of HPC technology, including Artificial Intelligence (AI) and visualization."

The post Intel to Showcase AI and HPC Demos at ISC 2018 appeared first on insideHPC.

by Rich Brueckner on (#3SDNV)

MathWorks in Massachusetts is seeking a Software Solutions Engineer in our Job of the Week. "We are a team of product proficient engineers with application and software industry experience. Alongside MATLAB Application Deployment developers, we work directly with our customers to resolve their software integration issues and challenges in multiple language interoperability. We are looking for a multi-faceted software engineer with strong foundation in programming, and in-depth troubleshooting knowledge of various software integration methods. You will have a chance to develop your skills and work in multiple disciplines – C++, Java, C#, and Python; multi-threading, and multi-processing across Windows, Linux and OS X."

The post Job of the Week: Software Solutions Engineer at Mathworks appeared first on insideHPC.

by staff on (#3SBZF)

Dutch researchers have a new opportunity to experiment with quantum computing with the new Atos Quantum Learning Machine at SURFsara. The Atos Quantum Learning Machine is a complete on-premises quantum simulation environment designed for quantum software developers to create quantum algorithms. Dedicated to quantum software development, training and experimentation, it allows researchers, engineers and students to develop and experiment with quantum software.

The post Atos Quantum Learning Machine Comes to SURFsara in the Netherlands appeared first on insideHPC.

by Rich Brueckner on (#3SBTG)

Our friends at the UberCloud have published the 2018 edition of their Compendium of Cloud HPC Case Studies. "The goal of the UberCloud Experiment is to perform engineering simulation experiments in the HPC cloud with real engineering applications in order to understand the roadblocks to success and how to overcome them. The Compendium is a way of sharing these results with the broader HPC community."

The post UberCloud Publishes Compendium of Cloud HPC Case Studies appeared first on insideHPC.

by Rich Brueckner on (#3SBTJ)

The Dell EMC HPC Community has published the Speaker Agenda for its meeting at ISC 2018. The half-day event takes place June 24 in Frankfurt, Germany. "The Dell EMC HPC Community event will feature keynote presentations by HPC experts and a networking event to discuss best practices in the use of Dell EMC HPC Systems. Attendees will have the unique opportunity to receive updates on HPC strategy, product and solution plans from Dell executives and technical staff and technology partners."

The post Agenda Posted: Dell EMC HPC Community Meeting at ISC 2018 appeared first on insideHPC.

by Rich Brueckner on (#3SBHJ)

Anantha Kinnal from Calligo Technologies gave this talk at the CoNGA Conference for Next Generation Arithmetic. "CalligoTech, based in Bangalore - India, built and demonstrated World’s First Posit-enabled System at the CoNGA track at Supercomputing Asia’18, Singapore. Our primary objective was to build a System capable of performing basic arithmetic operations using Posit Number System and capable of running existing C programs."

The post Video: Calligo Technologies Demonstrates Posit Computing Implementation appeared first on insideHPC.

by Rich Brueckner on (#3S9K5)

Jay Owen from AMD gave this talk at the HPC User Forum in Tucson. "With the introduction of new EPYC processor based servers with Radeon Instinct GPU accelerators, combined with our ROCm open software platform, AMD is ushering in a new era of heterogeneous compute for HPC and Deep Learning. Truly accelerating the pace of deep learning and addressing the broad needs of the datacenter requires a combination of high performance compute and GPU acceleration optimized for handling massive amounts of data with lots of floating point computation that can be spread across many cores."

The post Video: AMD HPC Update appeared first on insideHPC.

by staff on (#3S9K7)

Today NEC announced that NEC Deutschland GmbH is starting High Performance Computing sales operations in the United Kingdom. This business expansion in the UK, one of Europe’s most important high-tech regions, is in addition to NEC’s existing sales operations throughout Europe, including Germany, France, Belgium, Italy and others. "NEC Deutschland has named Matthew Prew as Senior Sales Manager of HPC in the UK, where he will be responsible for driving the expansion of new business. Mr. Prew brings more than 25 years of experience in the IT Industry. Starting in the early 1990s, he joined a European IT manufacturer focusing predominantly on the UNIX platform."

The post NEC starts HPC sales operations in the UK appeared first on insideHPC.

by staff on (#3S9E3)

Over at the NVIDIA Blog, Gavriel State writes that the company has opened a new AI Research Lab in Toronto. The AI Research Lab will be led by computer scientist and University of Toronto professor Sanja Fidler. "With the new lab, our goal is to triple the number of AI and deep learning researchers working there by year’s end. It will be a state-of-art facility for AI talent to work in and will expand the footprint of our office by about half to accommodate the influx of talent."

The post NVIDIA Opens AI Research Lab in Toronto appeared first on insideHPC.

by Rich Brueckner on (#3S993)

In this video from the Dell EMC HPC Community Meeting, Chris Branch from Intel describes how FPGAs deliver speed and efficiency for technical computing. The two companies are working together to bring new FPGA solutions to market. "With ever-changing workloads and evolving standards, how do you maximize performance while minimizing power consumption in your data center? Intel platforms are qualified, validated, and deployed through Dell EMC."

The post Intel Stratix FPGAs Power Innovation for the Dell HPC Community appeared first on insideHPC.

by Sarah Rubenoff on (#3S995)

The demand for in-memory computing (IMC) and new IMC platforms continues to grow. What's pushing this growth as new products like the GridGain in-memory computing platform hit the market? Download the new white paper from GridGain that explores this topic and covers what's next for in-memory computing.

The post Why Demand for In-Memory Computing Platforms Keeps Growing appeared first on insideHPC.

by Rich Brueckner on (#3S76K)

The ExaComm 2018 workshop has posted its Speaker Agenda. Held in conjunction with ISC 2018, the Fourth International Workshop on Communication Architectures for HPC, Big Data, Deep Learning and Clouds at Extreme Scale takes place June 28 in Frankfurt. "The goal of this workshop is to bring together researchers and software/hardware designers from academia, industry and national laboratories who are involved in creating network-based computing solutions for extreme scale architectures. The objectives of this workshop will be to share the experiences of the members of this community and to learn the opportunities and challenges in the design trends for exascale communication architectures."

The post Agenda Posted for ExaComm 2018 Workshop in Frankfurt appeared first on insideHPC.

by staff on (#3S71A)

The Barcelona Supercomputing Centre has augmented its hybrid MareNostrum supercomputer with new racks of IBM AC922 servers. This upgrade makes BSC the first center in Europe to offer access to the same technologies as the brand-new Summit, the most powerful supercomputer in the world. "Accelerated by NVIDIA Volta V100 GPUs, the three new racks have a peak performance of 1.48 Petaflops, 50% more than the MareNostrum3 supercomputer, which was uninstalled just one year ago."

The post MareNostrum 4 Adds POWER9 Racks with Volta GPUs for AI Research appeared first on insideHPC.

by Rich Brueckner on (#3S71C)

Bruno Monnet from HPE gave this talk at the NVIDIA GPU Technology Conference. "Deep Learning demands massive amounts of computational power. That computational power usually involves heterogeneous computation resources, e.g., GPUs and InfiniBand as installed on HPE Apollo. The NovuForce deep learning software within the Docker image has been optimized for the latest technology, like NVIDIA Pascal GPUs and InfiniBand GPUDirect RDMA. This flexibility of the software, combined with the broad range of GPU servers in the HPE portfolio, makes for one of the most efficient and scalable solutions."

The post Improving Deep Learning scalability on HPE servers with NovuMind: GPU RDMA made easy appeared first on insideHPC.

by staff on (#3S6X2)

Today Altair announced that the company has added GE’s Flow Simulator software to the Altair Partner Alliance (APA) and will offer direct licenses to Altair customers. Flow Simulator is an integrated flow, heat transfer, and combustion design software that enables mixed fidelity simulations to optimize machine and systems design. "GE’s Flow Simulator will benefit our customers by providing thermal fluid system mixed fidelity simulation capabilities relevant to multiple industries. It expands our strong multiphysics system modeling portfolio, and we are very positive about working with GE to carry their great development work forward to a broader set of customers and applications," said Brett Chouinard, Altair’s President and COO.

The post GE’s Flow Simulator Software Now Available Exclusively from Altair appeared first on insideHPC.

by Peter ffoulkes on (#3S6X4)

Dell EMC vSAN Ready Nodes are built on Dell EMC PowerEdge servers that have been pre-configured, tested and certified to run VMware vSAN. This post outlines how to network vSAN Ready Nodes. "As an integrated feature of the ESXi kernel, vSAN exploits the same clustering capabilities to deliver a comparable virtualization paradigm to storage that has been applied to server CPU and memory for many years."

The post How Do I Network vSAN Ready Nodes? appeared first on insideHPC.

by Rich Brueckner on (#3S4NY)

In this video from the Dell EMC HPC Community meeting in Austin, Garima Kochhar from Dell describes how the company works with customers at the Dell EMC AI & HPC Innovation Lab. "The HPC Innovation Lab gives our customers access to cutting-edge technology, like the latest-generation Dell EMC products, Scalable System Framework from Intel, InfiniBand gear from Mellanox, NVIDIA GPUs, Bright Computing software, and more. Customers can bring us their workloads, and we can help them tune a solution before the technology is readily available."

The post Working with the Dell EMC AI & HPC Innovation Lab appeared first on insideHPC.

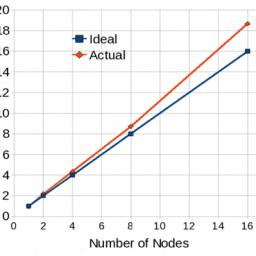

by Richard Friedman on (#3S4P0)

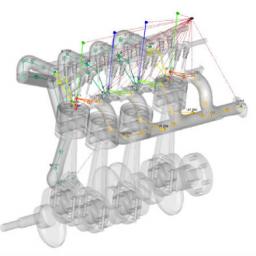

Results with the HiFUN CFD solver show that the latest-generation Intel Xeon Scalable processor enhances single-node performance due to the availability of large cache, higher core density per CPU, higher memory speed, and larger memory bandwidth. The higher core density improves intra-node parallel performance, which permits users to build more compact clusters for a given number of processor cores. This lets the HiFUN solver exploit better cache utilization, which contributes to super-linear performance gained through the combination of a high-performance interconnect between nodes and the highly-optimized Intel® MPI Library.

The post Maximizing Performance of HiFUN* CFD Solver on Intel® Xeon® Scalable Processor With Intel MPI Library appeared first on insideHPC.

by Rich Brueckner on (#3S4P2)

Hewlett Packard Enterprise will host HP-CAST once again this year at ISC 2018. The event takes place June 22-23 in Frankfurt, Germany. "The High Performance Consortium for Advanced Scientific and Technical (HP-CAST) computing users group works to increase the capabilities of Hewlett Packard Enterprise solutions for large-scale scientific and technical computing. HP-CAST meetings typically include corporate briefings and presentations by HPE executives, technical staff, and HPE partners (under NDA), and discussions of customer issues related to high-performance computing."

The post HP-CAST Returns to Frankfurt for ISC 2018 appeared first on insideHPC.

by staff on (#3S4HR)

This is the second entry in a five-part insideHPC series that takes an in-depth look at how machine learning, deep learning and AI are being used in the energy industry. Read on to learn how energy companies are embracing deep learning for inspections, exploration and more.

The post Energy Companies Embrace Deep Learning for Inspections, Exploration & More appeared first on insideHPC.

by staff on (#3S4DD)

Today Quobyte and NEC Deutschland GmbH announced a partnership to develop a complete HPC storage solution stack based on NEC HPC hardware and Quobyte’s Data Center File System software. "Quobyte’s full stack Data Center File System combined with their HPC hardware system results in a much needed storage solution that will help to eliminate the bottlenecks and pricing traps of legacy hardware-based storage while providing the performance, security, and ease of operation of modern hyperscale architectures."

The post NEC and Quobyte team up for HPC Storage appeared first on insideHPC.

by staff on (#3S34S)

Today SMART Global Holdings, Inc. announced that it has acquired HPC vendor Penguin Computing. "Penguin will continue as a standalone operation being the first part of a new business unit, SMART Specialty Compute & Storage Solutions (SCSS), which will benefit from shared infrastructure and the ability to leverage the products and capabilities of SMART’s Specialty Memory business unit into the emerging AI and ML markets."

The post SMART Global Holdings Acquires Penguin Computing for $85 Million appeared first on insideHPC.

by staff on (#3S2KS)

Today ISC 2018 announced the winners of the ISC High Performance Travel Grant Program. Introduced this year, the ISC Travel Grant enables university students and young researchers who are interested in acquiring high performance computing knowledge and skills, but who lack the necessary resources to obtain them, to attend the conference in Germany. "Raksha Roy, a researcher from the International Centre for Integrated Mountain Development (ICIMOD) in Nepal, and Badisa Mosesane, an undergraduate student at the University of Botswana, have been selected as the ISC Travel Grant recipients this year. They have been chosen out of 16 regional and international applications. Both Ms. Roy, 28, and Mr. Mosesane, 26, will each be awarded a grant of 1500 euros to attend the conference."

The post ISC Travel Grant brings Young HPC Leaders to Conference from Nepal and Botswana appeared first on insideHPC.

by staff on (#3S2F2)

Today ThinkParQ announced that the company is working with Quanta Cloud Technology on a prototype HPC system to demonstrate the power of their combined technologies. Using the BeeGFS parallel file system, the demo cluster will be built around the Intel Rack Scale Design. "Utilizing BeeGFS with its ease of use, maximum scalability and flexibility such as BeeGFS on demand (BeeOND), buddy mirroring or the newly introduced storage pool concept, this demo environment provides a maximum flexibility for various customer cases to prove the value of a combined QCT and ThinkParQ solution," said Frank Herold, CEO of ThinkParQ.

The post ThinkparQ Teams with QCT to Demonstrate BeeGFS appeared first on insideHPC.

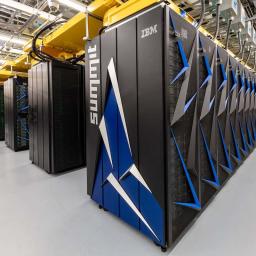

by staff on (#3S2AT)

"The biggest problems in science require supercomputers of unprecedented capability. That’s why the ORNL launched Summit, a system 8 times more powerful than their previous top-ranked system, Titan. Summit is providing scientists with incredible computing power to solve challenges in energy, artificial intelligence, human health, and other research areas, that were simply out of reach until now. These discoveries will help shape our understanding of the universe, bolster US economic competitiveness, and contribute to a better future."The post Video: Researchers Step Up with the New Summit Supercomputer appeared first on insideHPC.

by staff on (#3S267)

The advent of AI is creating new possibilities to tackle increasingly complex research beyond the traditional HPC analytics, modeling, and simulation workloads. This guest post from Intel highlights key points from its new eGuide that focuses on embracing AI in your HPC environment. "Thanks to the efforts of HPC experts and those they support, we face a bright and exciting future of scientific understanding and innovation to benefit humanity."

The post New eGuide: Practical Considerations for Embracing AI in Your HPC Environment appeared first on insideHPC.

by staff on (#3S0HD)

The eighth international Women in HPC workshop will be held June 28 at ISC 2018 in Frankfurt. "This workshop provides leaders and managers in the HPC community with methods to improve diversity and also to provide early career women with an opportunity to develop their professional skills and profile. Following the overwhelming success of the 2017 WHPC workshops we will once again discuss methods and steps that can be taken to address the under-representation of women."

The post Women in HPC Workshop Returns to ISC 2018 appeared first on insideHPC.

by Rich Brueckner on (#3S0F2)

Karsten Kutzer from Lenovo gave this talk at the 2018 Swiss HPC Conference. "This session will discuss why water cooling is becoming more and more important for HPC data centers, Lenovo’s series of innovations in the area of direct water-cooled systems, and ways to re-use ‘waste heat’ created by HPC systems."

The post Energy Efficiency and Water-Cool-Technology Innovations at Lenovo appeared first on insideHPC.

by Rich Brueckner on (#3RZCP)

In this podcast, the Radio Free HPC team looks at the new 200 Petaflop Summit supercomputer that was unveiled this week at ORNL. "IBM designed a whole new heterogeneous architecture for Summit that integrates the robust data analysis of powerful IBM POWER9 CPUs with the deep learning capabilities of GPUs," writes Dr. John E. Kelly from IBM. "The result is unparalleled performance on critical new applications. And, IBM is selling this same technology in Summit to enterprises today."

The post Radio Free HPC Looks at the New Summit Supercomputer at ORNL appeared first on insideHPC.

by Rich Brueckner on (#3RZA7)

Merck in New Jersey is seeking a Senior Engineer for HPC in our Job of the Week. "As a Senior Engineer in an Operations role in High Performance Computing, your primary role is that of ‘Senior Linux Systems Administrator and Scientific Workstation Specialist’. You will be using your scripting, architectural, systems engineering, Linux system administration, troubleshooting and communication skills to deliver and support research and statistical applications used to make key decisions in our organization's business. The Senior Engineer is a crucial role in the High Performance Computing team that requires a deep understanding of the technology stack, an understanding of the scientific domain, and the ability to work directly with scientists to understand their problems, devise and communicate solutions."

The post Job of the Week: Senior Engineer for HPC at Merck appeared first on insideHPC.

by staff on (#3RXNS)

Today Energy Secretary Rick Perry unveiled Summit, the world's most powerful supercomputer. Powered by IBM POWER9 processors, 27,648 NVIDIA GPUs, and Mellanox InfiniBand, the Summit supercomputer is also the first Exaop AI system on the planet. "This massive machine, powered by 27,648 of our Volta GPUs, can perform more than three exaops, or three billion billion calculations per second," writes Ian Buck on the NVIDIA blog. "That’s more than 100 times faster than Titan, previously the fastest U.S. supercomputer, completed just five years ago. And 95 percent of that computing power comes from GPUs."

The post Video: Announcing Summit – World’s Fastest Supercomputer with 200 Petaflops of Performance appeared first on insideHPC.

by staff on (#3RXH9)

Over at the SC18 Blog, Mike Bernhardt writes that a small group known as the Perennials have attended the conference from the very beginning. There's no doubt that they'll be back this year for the show, which takes place Nov. 11-16 in Dallas.

The post SC18 Perennials Keep Coming Back appeared first on insideHPC.

by Rich Brueckner on (#3RXC7)

Startups have always had a tough time in the HPC space. Now, the SC18 conference is offering to lend a helping hand with a startup showcase they're calling the SC18 Coffee Shop. "Starting a new business is no easy feat and this is our effort to help smaller companies get exposure to our diverse and dynamic audience," said SC18 Exhibits Chair Christy Adkinson, from Cray Inc. "We see this as a win-win for emerging companies and our attendees."

The post HPC Startups: Sign up for the SC18 Coffee Shop appeared first on insideHPC.
