|

by Rich Brueckner on (#3RXC9)

Pierre Spatz from Murex gave this talk at the NVIDIA GPU Technology Conference. "Murex has been an early adopter of GPUs for pricing and risk management of complex financial options. GPU adoption has generated a performance boost for its software while reducing its usage cost. Each new generation of GPU has also shown the importance of the necessary reshaping of the architecture of the software using its GPU accelerated analytics. Minsky, featuring far better GPU memory bandwidth and GPU-CPU interconnect, raises the bar even further. Murex will show how it has handled this new challenge for its business." The post Minsky at Murex: GPUs for Risk Management appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-03 12:15 |

|

by Sarah Rubenoff on (#3RX7W)

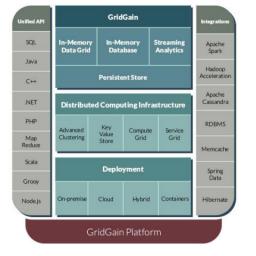

In-memory computing (IMC) is being used in a variety of fields, from fintech and ecommerce to telecommunications and IoT, as it becomes well known for success with processing and analyzing big data. Download the new report from GridGain, "Choosing the Right In-Memory Computing Solution," to find out whether IMC is the right choice for your business. The post Why IMC Is Right for Today’s Fast-Data and Big-Data Applications appeared first on insideHPC.

|

|

by staff on (#3RV4T)

Australia's Pawsey Supercomputing Centre and PRACE have signed a Memorandum of Understanding (MOU) to promote the use of supercomputers to advance scientific and technological outcomes, and to stimulate the industry sector in both Australia and Europe. "PRACE and Pawsey share the complexity and international nature of the science areas that each organization supports, the need to provide services to researchers who are spread across different time zones and the requirement of on-demand access to compute power for a scientific instrument." The post Pawsey teams with PRACE to promote Supercomputing appeared first on insideHPC.

|

|

by staff on (#3RTZR)

The Pistoia Alliance has released results of a survey that found that 72 per cent of science professionals believe their sector is lagging behind other industries in the development of AI. "Spaces to virtually collaborate, like our Centre of Excellence, will become even more critical as political and social shifts – from Brexit to changes to US immigration laws – impact how scientists share knowledge and ideas. AI is poised to have a radical impact on life sciences and healthcare, but the industry must give researchers the best chance of success." The post Survey: Life Sciences lagging behind in AI development appeared first on insideHPC.

|

|

by Rich Brueckner on (#3RTZS)

Today real-time database company ScyllaDB announced a new Scylla Enterprise release with optimizations for IBM Power System Servers with the IBM POWER9 multi-core architecture. By combining Scylla’s highly performant, close-to-the-hardware design with next-generation IBM Power System Servers, organizations can reach new levels of performance while also reducing the footprint, cost and complexity of their systems. "ScyllaDB has designed a powerful distributed database that extends the performance advantages we’ve introduced with our multi-core POWER9 processors," said Tim Vincent, IBM Fellow and Vice President of IBM Cognitive Systems. "The combination of the Scylla NoSQL database and our Power System Servers enables our shared customers to scale up their systems rather than continually scaling out, creating new opportunities for data center consolidation and price performance." The post ScyllaDB Announces Support for IBM Power Systems for Real Time Big Data appeared first on insideHPC.

|

|

by Rich Brueckner on (#3RTZV)

In this video from the Dell EMC HPC Community Meeting in Austin, David Holmes from Dell EMC describes how the company is bringing technical innovation to the Oil & Gas industry. "We partner strongly with Intel and Nvidia and we take advantage of Intel Xeon Scalable processors, Intel Xeon Phi processors, and Nvidia GPUs. And depending on the workloads, different computational requirements will run best on these different platforms. And we're now able to help companies build this new breed of composable systems, which provide a broad set of resources that can be dynamically assigned to the different workloads that companies want to run." The post Video: Dell EMC Innovation for the Oil & Gas industry appeared first on insideHPC.

|

|

by staff on (#3RTV5)

As we spend more time and share more information online than ever before, cybercrime is also on the rise. This guest article from Keypath Education explores the important role of IT management professionals in ensuring cybersecurity in an era when cybercrime is becoming more and more prevalent. "When it comes to customer and business data, cyber security is too important to go unmanaged. If you don’t have the IT and data protection expertise required to stay safe in a rapidly changing digital world, reach out to those who do." The post Cybersecurity: Why We Need IT Management Professionals More Than Ever appeared first on insideHPC.

|

|

by Richard Friedman on (#3RRXB)

In this podcast, Dr. Jeremy Hammond from the University of Sydney and Andrew Underwood from Dell EMC describe the new Artemis 3 supercomputer. The new Dell EMC system will power world-leading research and academic programs. "This fully deployed high-performance computing system uses Dell EMC PowerEdge C4140 server technology. The University of Sydney’s $2.3 million system has an rPeak performance of 1 petaflops and an rMax of 700 teraflops, which will allow faster processing of data to provide answers to scientific questions previously beyond reach." The post Podcast: Dell EMC Powers Artemis 3 Supercomputer at University of Sydney appeared first on insideHPC.

|

|

by staff on (#3RRRS)

Today Tata Consultancy Services announced plans for a new Center for Advanced Computing to develop advanced solutions in the areas of HPC, High Performance Data Analytics, and Artificial Intelligence. The new Center at TCS' Super Computing facility in Pune, India will be enabled by integrating the latest Intel Xeon Scalable processors with Intel Omni-Path Fabric, Intel Optane technology, BigDL and additional Intel technologies in the future. The post TCS and Intel to Establish Center for Advanced Computing for HPC in India appeared first on insideHPC.

|

|

by staff on (#3RRRV)

Over at CSCS, Simone Ulmer writes that researchers at ETH Zurich have clarified the previously unresolved question of whether fish save energy by swimming together in schools. They achieved this by simulating the complex physics on the supercomputer ‘Piz Daint’ and combining detailed flow simulations with a reinforcement learning algorithm for the first time. "In their simulations, they have not examined every aspect involved in the efficient swimming behavior of fish. However, it is clear that the developed algorithms and physics learned can be transferred into autonomously swimming or flying robots." The post Supercomputing how Fish Save Energy Swimming in Schools appeared first on insideHPC.

|

|

by staff on (#3RRJS)

Today ACM announced that Koen De Bosschere from HiPEAC has won the Alan D. Berenbaum Distinguished Service Award. Recognized for outstanding service in the field of computer architecture and design, Professor De Bosschere was nominated for structuring and connecting the European research community in computer architecture and compilation. "Leading the HiPEAC community, contributing to the HiPEAC Vision and observing its impact has always been a very rewarding experience for me," said De Bosschere. "I feel very honored to receive the Alan D. Berenbaum Distinguished Service Award and I would like to dedicate it to all our members and the staff, without whom there would be no HiPEAC." The post Koen De Bosschere receives ACM SIGARCH Alan D. Berenbaum Distinguished Service Award appeared first on insideHPC.

|

|

by Rich Brueckner on (#3RRJV)

Pedro Valero-Lara from BSC gave this talk at the GPU Technology Conference. "Attendees can learn how the behavior of the human brain is simulated using current computers, and the different challenges the implementation has to deal with. We cover the main steps of the simulation and the methodologies behind this simulation. In particular we highlight and focus on those transformations and optimizations carried out to achieve good performance on NVIDIA GPUs." The post The Simulation of the Behavior of the Human Brain using CUDA appeared first on insideHPC.

|

|

by staff on (#3RRDN)

Today Quantum Corp. announced the company has joined the iRODS Consortium, the foundation that leads development and support of the integrated Rule-Oriented Data System (iRODS). "Quantum is a leader in scale-out storage and they will offer great insight into their customers’ data management needs," said Mark Pastor, archive solution marketing director, Quantum. "This is essential for software that is driven by the requirements of the user community. The addition of Xcellis Scale-out NAS to the body of product offerings that have been tested with iRODS shows great promise for the iRODS user community." The post Quantum Corp Joins iRODS Consortium appeared first on insideHPC.

|

|

by Rich Brueckner on (#3RPB9)

In this video from the Dell HPC Community meeting, Maurizio Davini and Antonio Cisternino from the University of Pisa describe the work they are doing to extend server memory with Intel Optane technology. "Intel Optane technology provides an unparalleled combination of high throughput, low latency, high quality of service, and high endurance. This technology is a unique combination of 3D XPoint memory media, Intel Memory and Storage Controllers, Intel Interconnect IP and Intel software." The post University of Pisa Extends Server Memory with Intel Optane and Dell EMC appeared first on insideHPC.

|

|

by staff on (#3RP5X)

Hyperion Research has published a new case study on how General Electric engineers were able to nearly double the efficiency of gas turbines with the help of supercomputing simulation. "With these advanced modeling and simulation capabilities, GE was able to replicate previously observed combustion instabilities. Following that validation, GE Power engineers then used the tools to design improvements in the latest generation of heavy-duty gas turbine generators to be delivered to utilities in 2017. These turbine generators, when combined with a steam cycle, provided the ability to convert an amazing 64% of the energy value of the fuel into electricity, far superior to the traditional 33% to 44%." The post Case Study: Supercomputing Natural Gas Turbine Generators for Huge Boosts in Efficiency appeared first on insideHPC.

|

|

by staff on (#3RP0W)

Today Cray announced that it has partnered with Digital Catapult and its Machine Intelligence Garage to help organizations of all sizes speed the development of machine intelligence systems. This partnership enables the Machine Intelligence Garage to offer companies the supercomputing power and expertise required to develop and build machine learning and AI solutions. "Our partnership with Cray opens a new door for UK machine intelligence businesses," said Anat Elhalal, Head of Technology and AI/ML Lead Tech at Digital Catapult. "Cray’s expertise in high-performance computing will give startups much-needed resources to continue the amazing work already underway in the UK for AI and machine learning solutions." The post Cray Partners with UK’s Digital Catapult for AI Innovation appeared first on insideHPC.

|

|

by staff on (#3RP0Y)

Today cloud provider Nimbix announced the Technology Preview of JARVICE 3.0, a robust HPC hybrid cloud software platform fully integrated with Kubernetes. The new container platform is designed to provide the enterprise with access to the entire Nimbix self-service catalog of HPC, AI and machine/deep learning applications. Packaged as a new enterprise software tool, JARVICE 3.0 optimally runs supercomputing and HPC workloads on-premises or remotely on the public cloud. The post Nimbix Launches JARVICE 3.0 HPC Hybrid Cloud Platform with Kubernetes appeared first on insideHPC.

|

|

by staff on (#3RNV8)

This is the first entry in a five-part insideHPC series that takes an in-depth look at how machine learning, deep learning and AI are being used in the energy industry. Read on to find out how machine learning is driving energy exploration. "Any tool that reduces the time needed to understand where the deposits are located can save a company millions of dollars." The post Opportunities Abound: HPC and Machine Learning for Energy Exploration appeared first on insideHPC.

|

|

by Rich Brueckner on (#3RM63)

The Center for Computational Astrophysics has deployed a new Cray XC50 supercomputer. With a peak performance of 3 Petaflops, the ATERUI Ⅱ system is the world’s fastest supercomputer for astrophysical simulations. Powered by over 40,000 cores (Intel Xeon Scalable processors), the system features 386 Terabytes of memory. "Thanks to the rapid advancement of computational technology in recent decades, astronomical simulations to recreate celestial objects, phenomena, or even the whole Universe within the computer, have risen up as the third pillar in astronomy." The post Cray Deploys 40,000-core ATERUI II Supercomputer at CfCA in Japan appeared first on insideHPC.

|

|

by Rich Brueckner on (#3RKGT)

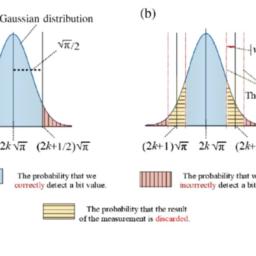

Dr. Xiaobo Zhu from China’s University of Science and Technology gave this talk at the HPC User Forum in Tucson. "CAS-Alibaba Quantum Computing Laboratory introduces quantum technology into computing sciences, aiming to manufacture high-speed quantum computers, and propelling quantum revolution in the computing world. This research has resulted in a Quantum Computing Cloud powered by a quantum processor which includes 11 superconducting qubits. This superconducting quantum chip works at extreme temperatures as low as 10 mK (or -273.14 °C)." The post Quantum Computing in China: Progress on a Superconducting Multi-Qubits System appeared first on insideHPC.

|

|

by Rich Brueckner on (#3RKGW)

ETP4HPC is organizing a workshop at ISC 2018 to prepare the post Horizon 2020 HPC vision and technology roadmap. The event takes place Sunday, June 24 in Frankfurt, Germany. "What will be next? What will be the role of High-Performance Computing and related technologies in the post Horizon 2020 era? What should be the main priorities of the EC Framework Programme 9 in that area? What will be the key assumptions for the next Strategic Research Agenda (SRA) of ETP4HPC, for the HiPEAC vision and other similar documents in the area of HPC, Big Data and AI?" The post Coming to ISC 2018: Post 2020 Vision for HPC Workshop appeared first on insideHPC.

|

|

by Rich Brueckner on (#3RK6H)

In this podcast, the Radio Free HPC team looks at the new NVIDIA HGX-2 Reference Platform for HPC & AI. "The HGX-2 cloud server platform supports multi-precision computing, supporting high-precision calculations using FP64 and FP32 for scientific computing and simulations, while also enabling FP16 and Int8 for AI training and inference. This unprecedented versatility meets the requirements of the growing number of applications that combine HPC with AI." The post Radio Free Looks at the HGX-2 Reference Platform for HPC & AI appeared first on insideHPC.

|

|

by Sarah Rubenoff on (#3RK6J)

The GridGain In-Memory computing platform works to deliver speed and scalability for your large data needs. The platform is built on the Apache Ignite open source project. Download the new white paper today, "Introducing the GridGain In-Memory Computing Platform," to find out if GridGain is the right choice for you. The post GridGain In-Memory Computing Platform Delivers Speed and Scalability appeared first on insideHPC.

|

|

by staff on (#3RHNB)

In this Chip Chat Podcast, Dr. Bill Magro describes Intel Select Solutions for Simulation & Modeling. "In this interview, Dr. Magro discusses the evolution of HPC and how HPC's scope has grown to incorporate workloads like AI and advanced analytics. Dr. Magro then focuses on Intel Select Solutions for Simulation & Modeling and how they are lowering costs and enabling more customers to take advantage of HPC." The post Podcast: Bill Magro on Intel Select Solutions for Simulation & Modeling appeared first on insideHPC.

|

|

by Rich Brueckner on (#3RHJK)

"This presentation will give an overview of the new NVIDIA Volta GPU architecture and the latest CUDA 9 release. The NVIDIA Volta architecture powers the world's most advanced data center GPU for AI, HPC, and Graphics. Volta features a new Streaming Multiprocessor (SM) architecture and includes enhanced features like NVLINK2 and the Multi-Process Service (MPS) that deliver major improvements in performance, energy efficiency, and ease of programmability. You'll learn about new programming model enhancements and performance improvements in the latest CUDA 9 release." The post Inside the Volta GPU Architecture and CUDA 9 appeared first on insideHPC.

|

|

by Rich Brueckner on (#3RGA7)

Alexander Ruebensaal from ABC Systems AG gave this talk at the Swiss HPC Conference in Lugano. "NVMe has become the main focus of storage developments when it comes to latency, bandwidth, and IOPS. There is already a broad range of standard products available - server or network based." The post Video: NVMe Takes It All, SCSI Has To Fall appeared first on insideHPC.

|

|

by Rich Brueckner on (#3RG74)

HPE is seeking a Research Engineer for their Systems Architecture Lab in our Job of the Week. "The System Architecture Laboratory at Hewlett Packard Labs has an immediate opening for a Research Scientist position with a background in high-speed mixed-signal CMOS design and silicon photonics. The critical task is the design of high-speed mixed-signal circuits including modulator drivers, transimpedance amplifier, ADC/DAC, SERDES, and PLL using advanced CMOS nodes to enable high-performance computing systems. Successful candidates will have hands-on experience in the CMOS design, design verification, and lab testing of mixed-signal CMOS circuits to interface with photonics." The post Job of the Week: Research Engineer – Systems Architecture Lab at HPE appeared first on insideHPC.

|

|

by staff on (#3REC2)

Today Opin Kerfi in Iceland announced a partnership with UberCloud to enable customers to gain instant access to world-leading engineering simulation capabilities through Software as a Service. "This partnership and portfolio expansion is not only valuable to current customers but also appeals to a much wider spectrum of prospects as a subscription-based and managed approach is commonly preferred by both small and large businesses. Customers can use this extra capacity to research, design, experiment and simulate as fast as they need, when they need it." The post Opin Kerfi and UberCloud team up for Engineering Software as a Service appeared first on insideHPC.

|

|

by staff on (#3REC4)

Today Canada’s National Centre for Drug Research and Development announced a collaboration with Schrödinger Inc to use artificial intelligence to help facilitate the development of new therapeutic antibodies. "We’re excited to work with CDRD to aggressively translate scientific discoveries into innovative therapeutic products," said Dr. Ramy Farid, Schrödinger’s President and CEO. "This partnership is an important step forward in our efforts to extend the successes we’ve had in small molecule drug discovery to the development of novel biologics." The post Canada’s CDRD to use AI for Drug Discovery appeared first on insideHPC.

|

|

by Rich Brueckner on (#3REC6)

In this video from the Dell EMC HPC Community Meeting, Adnan Khaleel from Dell EMC describes how the company works with partners to deliver high performance solutions. "It's really the conversations that we have in the corridors and in the mixers where they learn a lot of how we do our future planning, how we can work together, how we can collaborate not just with Dell EMC, but even amongst themselves." The post Collaborating for Innovation in the Dell EMC HPC Community appeared first on insideHPC.

|

|

by staff on (#3RE6V)

Today a new web portal called Rev-Sim.org was launched to support a growing industry-wide movement to make engineering simulation more accessible, efficient, and reliable; not just for CAE experts but also for non-specialists. "The Rev-Sim.org website provides access to the latest success stories, news, articles, whitepapers, thought leadership blogs, presentations, videos, webinars, best practices and other reference materials to help industry democratize the power of simulation across their engineering, manufacturing, service, supply chain, and R&D organizations." The post New Rev-Sim.org Portal to help Democratize Simulation appeared first on insideHPC.

|

|

by staff on (#3RE2P)

"Scientists at Hokkaido University and Kyoto University have developed a theoretical approach to quantum computing that is 10 billion times more tolerant to errors than current theoretical models. Their method brings us closer to developing quantum computers that use the diverse properties of subatomic particles to transmit, process and store extremely large amounts of complex information." The post Squeezing Light could be key to Quantum Computing appeared first on insideHPC.

|

|

by staff on (#3RBWT)

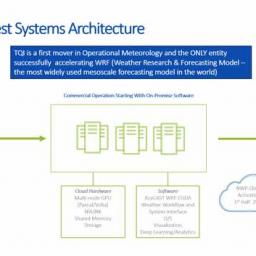

Today TempoQuest announced that it has achieved a breakthrough 700% acceleration of the weather community’s Weather Research and Forecasting (WRF) model, used extensively for real-time forecasting and weather research throughout the world. "The acceleration of regional weather processing times has presented a challenge to forecasters that has taken years to solve," said TempoQuest President Gene Pache. "Current analytical processes are decades old and until the availability of recent computer acceleration technologies pioneered by NVIDIA, and software platforms like AceCAST, there simply hasn’t been the ability to analyze all the data that drives forecasting speed and accuracy." The post GPUs Power Performance Breakthrough on TempoQuest Weather Prediction Software appeared first on insideHPC.

|

|

by staff on (#3RBWW)

Researchers are using supercomputers to better understand the mysteries of the subatomic world. "A team led by scientists in the Nuclear Science Division at the Department of Energy’s Lawrence Berkeley National Laboratory (Berkeley Lab) has enlisted powerful supercomputers to calculate a quantity known as the “nucleon axial coupling,” or gA – which is central to our understanding of a neutron’s lifetime – with an unprecedented precision. Their method offers a clear path to further improvements that may help to resolve the experimental discrepancy." The post Supercomputers Unlocking Mysteries of the Subatomic World appeared first on insideHPC.

|

|

by staff on (#3RBRG)

Over at the AMD Blog, Scot Aylor writes that the company's EPYC processors are coming to Cisco's popular UCS server platform. "The new, density-optimized Cisco UCS C4200 Series Rack Server Chassis and the Cisco UCS C125 M5 Rack Server Node will be powered by AMD EPYC 7000 series processors. By integrating AMD EPYC processors, Cisco joins a growing list of server providers taking advantage of the high-performance EPYC processor’s strong balance of core density, memory, I/O bandwidth and unprecedented security features to deliver revolutionary technology for customers." The post AMD EPYC Coming to Cisco UCS Rack Servers appeared first on insideHPC.

|

|

by Rich Brueckner on (#3RBRJ)

Dirk Pleiter from the Jülich Supercomputing Centre gave this talk at the NVIDIA GPU Technology Conference. "One of the biggest and most exciting scientific challenges requiring HPC is to decode the human brain. Many of the research topics in this field require scalable compute resources or the use of advanced data analytics methods (including deep learning) for processing extreme scale data volumes. GPUs are a key enabling technology and we will thus focus on the opportunities for using these for computing, data analytics and visualization. GPU-accelerated servers based on POWER processors are here of particular interest due to the tight integration of CPU and GPU using NVLink and the enhanced data transport capabilities." The post Brain Research: A Pathfinder for Future HPC appeared first on insideHPC.

|

|

by Beth Harlen on (#3RBM1)

Frameworks, applications, libraries and toolkits—journeying through the world of deep learning can be daunting. If you’re trying to decide whether or not to begin a machine or deep learning project, there are several points that should first be considered. This is the fourth article in a five-part series that covers the steps to take before launching a machine learning startup. This post covers how deep learning is contributing to success across a variety of industries. The post How Deep Learning Tech Can Contribute to Success appeared first on insideHPC.

|

|

by Rich Brueckner on (#3R9NJ)

In this video from Intel AI DevCon 2018, Genevieve Bell from Intel examines the nature of ethics, and how and why they might apply in a world of Artificial Intelligence technologies and emergent cyber-physical systems. The post Video: Beyond the Trolley Problem – Ethics in AI appeared first on insideHPC.

|

|

by staff on (#3R9F2)

Today Supermicro announced the company is among the first to adopt the NVIDIA HGX-2 cloud server platform to develop the world’s most powerful systems for artificial intelligence and high-performance computing. "To help address the rapidly expanding size of AI models that sometimes require weeks to train, Supermicro is developing cloud servers based on the HGX-2 platform that will deliver more than double the performance," said Charles Liang, president and CEO of Supermicro. "The HGX-2 system will enable efficient training of complex models. It combines 16 Tesla V100 32GB SXM3 GPUs connected via NVLink and NVSwitch to work as a unified 2 PetaFlop accelerator with half a terabyte of aggregate memory to deliver unmatched compute power." The post Supermicro Unveils 2 Petaflop SuperServer Based on New NVIDIA HGX-2 appeared first on insideHPC.

|

|

by Rich Brueckner on (#3R9F3)

In this video from the Dell EMC HPC Community Meeting, Michael Fernandez from AeroFarms describes how the company uses AI technologies to grow better indoor crops. "24-7, AeroFarms collects millions of data points from farms stacked vertically. Using modern imaging, big data and machine learning, they’re turning everything about a plant into data." The post Feeding the World: Dell EMC Powers AI at Aerofarms appeared first on insideHPC.

|

|

by staff on (#3R9BE)

Today NVIDIA introduced the NVIDIA HGX-2, a powerful cloud-server reference platform. The HGX-2 provides companies with unprecedented versatility to meet the requirements of applications that combine HPC with AI. "The HGX-2 cloud server platform supports multi-precision computing, supporting high-precision calculations using FP64 and FP32 for scientific computing and simulations, while also enabling FP16 and Int8 for AI training and inference. This unprecedented versatility meets the requirements of the growing number of applications that combine HPC with AI." The post A New Building Block for HPC & AI: NVIDIA HGX-2 Cloud Reference Platform appeared first on insideHPC.

|

|

by Rich Brueckner on (#3R8SE)

The LDAV 2018 conference on Large Data Analysis and Visualization has issued its Call for Papers. Held in conjunction with IEEE VIS 2018, the event takes place October 21 in Berlin. "The 8th IEEE Large Scale Data Analysis and Visualization (LDAV) symposium is specifically targeting methodological innovation, algorithmic foundations, and possible end-to-end solutions. The LDAV symposium will bring together domain experts, data analysts, visualization researchers, and users to foster common ground for solving both near- and long-term problems. We are looking for both original research contributions and position papers on a broad range of topics related to collection, analysis, manipulation, and visualization of large-scale data. We are particularly interested in innovative approaches that combine information visualization, visual analytics, and scientific visualization." The post Call for Papers: LDAV 2018 – Large Data Analysis and Visualization appeared first on insideHPC.

|

|

by staff on (#3R71Z)

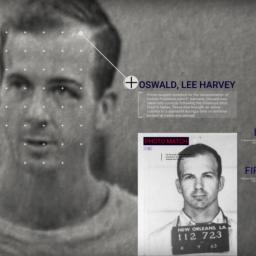

In this video, researchers apply Microsoft Cognitive Search to find clues in newly released documents on the JFK assassination. "Late last year the US government released more than 34,000 pages related to the assassination of JFK. What if we could bring the full power of Microsoft's AI to understand what was in those files and the vast scale of the Azure Cloud to handle the continual flow of data?" The post Microsoft turns AI loose on new documents from JFK Investigation appeared first on insideHPC.

|

|

by staff on (#3R721)

Today Mellanox announced the Mellanox Hyper-scalable Enterprise Framework, the industry’s first truly scalable, open framework for private cloud and enterprise data centers. "With the advent of open platforms and open networking it is now possible for even modestly sized organizations to build data centers like the hyperscalers do," said Kevin Deierling, vice president of marketing at Mellanox Technologies. "We are confident and excited to release the Mellanox Hyper-scalable Enterprise Framework to the industry – and to provide an open, intelligent, high performance, accelerated and fully converged network to enable enterprise and private cloud architects to build a world class data center." The post Mellanox Launches Ground-Breaking Open Hyper-Scalable Enterprise Framework appeared first on insideHPC.

|

|

by Rich Brueckner on (#3R6XH)

Thomas Schulthess from CSCS gave this talk at the GPU Technology Conference. "With over 5000 GPU-accelerated nodes, Piz Daint has been Europe's leading supercomputing system since 2013, and is currently one of the most performant and energy efficient supercomputers on the planet. It has been designed to optimize throughput of multiple applications, covering all aspects of the workflow, including data analysis and visualisation. We will discuss ongoing efforts to further integrate these extreme-scale compute and data services with infrastructure services of the cloud. As a Tier-0 system of PRACE, Piz Daint is accessible to all scientists in Europe and worldwide. It provides a baseline for future development of exascale computing." The post Leadership Computing for Europe and the Path to Exascale Computing appeared first on insideHPC.

|

|

by Rich Brueckner on (#3R674)

Prof. Dieter Kranzlmueller from LRZ will give a talk entitled "Smart Scaling for High Performance Computing: SuperMUC-NG at the Leibniz Supercomputing Centre." The event takes place Monday, June 4 at the University of Campania in Caserta, Italy. "The SuperMUC-NG supercomputer will deliver a staggering 26.7 petaflop compute capacity powered by nearly 6,500 nodes of Lenovo’s recently-announced, next-generation ThinkSystem SD650 servers." The post Prof. Dieter Kranzlmueller to showcase SuperMUC-NG Supercomputer at Event in Caserta appeared first on insideHPC.

|

|

by Rich Brueckner on (#3R5C0)

The MVAPICH User Group Meeting (MUG 2018) has issued its Call For Presentations. The event will take place from August 6-8 in Columbus, Ohio. "MUG aims to bring together MVAPICH2 users, researchers, developers, and system administrators to share their experience and knowledge and learn from each other. The event includes Keynote Talks, Invited Tutorials, Invited Talks, Contributed Presentations, Open MIC session, hands-on sessions with MVAPICH developers, etc." The post Call For Presentations: MVAPICH User Group Meeting (MUG 2018) appeared first on insideHPC.

|

|

by Rich Brueckner on (#3R533)

Xiaoyi Lu from OSU gave this talk at the 2018 OpenFabrics Workshop. "The convergence of Big Data and HPC has been pushing the innovation of accelerating Big Data analytics and management on modern HPC clusters. Recent studies have shown that the performance of Apache Hadoop, Spark, and Memcached can be significantly improved by leveraging the high-performance networking technologies, such as Remote Direct Memory Access (RDMA). In this talk, we propose new communication and I/O schemes for these data analytics stacks, which are designed with RDMA over NVM and NVMe-SSD." The post High-Performance Big Data Analytics with RDMA over NVM and NVMe-SSD appeared first on insideHPC.

|

|

by Beth Harlen on (#3R4ZB)

Frameworks, applications, libraries and toolkits—journeying through the world of deep learning can be daunting. If you’re trying to decide whether or not to begin a machine or deep learning project, there are several points that should first be considered. This is the third article in a five-part series that covers the steps to take before launching a machine learning startup. This article explores the role of inference systems in machine learning. The post Inference systems: The 2nd Piece of the Deep Learning Puzzle appeared first on insideHPC.

|

|

by Rich Brueckner on (#3R4VR)

In this podcast, the Radio Free HPC team looks at the ramifications of the European GDPR laws, which went into effect May 25, 2018. "The EU General Data Protection Regulation (GDPR) is the most important change in data privacy regulation in 20 years – we’re here to make sure you’re prepared." The post Radio Free HPC Rationalizes GDPR Regulations appeared first on insideHPC.

|