by Rich Brueckner on (#3R3H7)

New York University is seeking a Manager of High Performance Computing in our Job of the Week. "You will manage the day-to-day operations of the High-Performance Computing (HPC) team and support the research computing needs of NYU scholars. This requires provisioning, integrating, and supporting HPC and Big Data clusters running Linux as well as working directly with faculty, students and other scholars in support of utilizing efficiently advanced computing resources in research projects and teaching. Provide ongoing support, troubleshooting, maintenance and training for research computing applications. Manage and oversee HPC system development efforts and system and application testing. Identify and analyze data integrity and performance issues. Recommend solutions to improve system and application performance."

The post Job of the Week: Manager of HPC at New York University appeared first on insideHPC.

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Field | Value |
|---|---|
| Link | https://insidehpc.com/ |
| Feed | http://insidehpc.com/feed/ |
| Updated | 2026-03-03 12:15 |

by Rich Brueckner on (#3R3FB)

Gabriel Hautreux from GENCI gave this talk at the NVIDIA GPU Technology Conference. "The talk will present the OpenPOWER platform bought by GENCI and provided to the scientific community. Then, it will present the first results obtained on the platform for a set of about 15 applications using all the solutions provided to the users (CUDA, OpenACC, OpenMP, ...). Finally, a presentation about one specific application will be made regarding its porting effort and techniques used for GPUs with both OpenACC and OpenMP."

The post Pre-exascale Architectures: OpenPOWER Performance and Usability Assessment for French Scientific Community appeared first on insideHPC.

by Rich Brueckner on (#3R27V)

Univa will host a Breakfast Briefing on HPC in the Cloud at ISC 2018. "You are invited to attend this briefing on HPC in the Cloud at ISC 2018. A panel of product executives from Amazon Web Services and Nvidia will join Univa to share real-world use cases and discuss best practices for accelerating HPC cloud migration. Please join us and see how to increase the efficiency of on-premise data centers, and address peak needs with hybrid cloud bursting."

The post Univa to host Breakfast Briefing on HPC in the Cloud at ISC 2018 appeared first on insideHPC.

by Rich Brueckner on (#3R25K)

DK Panda from Ohio State University gave this talk at the Swiss HPC Conference. "This talk will focus on challenges in designing HPC, Deep Learning, and HPC Cloud middleware for Exascale systems with millions of processors and accelerators. For the HPC domain, we will discuss about the challenges in designing runtime environments for MPI+X (PGAS - OpenSHMEM/UPC/CAF/UPC++, OpenMP, and CUDA) programming models. For the Deep Learning domain, we will focus on popular Deep Learning frameworks (Caffe, CNTK, and TensorFlow) to extract performance and scalability with MVAPICH2-GDR MPI library."

The post Designing Scalable HPC, Deep Learning and Cloud Middleware for Exascale Systems appeared first on insideHPC.

by staff on (#3R0F5)

In this interview from the SC18 Blog, Jason Zurawski describes what's in store for the SCinet high performance show network at the SC18 conference. "In November, SCinet will bring the world’s fastest and most powerful network to Dallas for the SC conference. More than 180 volunteers – along with representatives from 31 organizations that contribute hardware, software, and services – will build the network from the ground up."

The post Interview: What’s in store for SCinet at SC18 appeared first on insideHPC.

by Rich Brueckner on (#3R0F7)

Andrew Ng from Deeplearning.ai and Landing.ai gave this talk at Intel AI DevCon. "Artificial intelligence already powers many of our interactions today. When you ask Siri for directions, peruse Netflix’s recommendations or get a fraud alert from your bank, these interactions are led by computer systems using large amounts of data to predict your needs. The market is only going to grow. By 2020, the research firm IDC predicts that AI will help drive worldwide revenues to over $47 billion, up from $8 billion in 2016."

The post Video: Andrew Ng on Deploying Machine Learning in the Enterprise appeared first on insideHPC.
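
The IDC figures quoted above imply a steep compound annual growth rate (CAGR). A quick back-of-the-envelope check is sketched below in Python, assuming exactly $8 billion in 2016 and exactly $47 billion in 2020; since the quote says "over $47 billion", the result is a lower bound. The variable names are illustrative only.

```python
# Implied compound annual growth rate from the IDC figures quoted above.
# Assumption: exactly $8B in 2016 and exactly $47B in 2020 ("over $47B" in the quote).
start_revenue = 8.0    # billions of US dollars, 2016
end_revenue = 47.0     # billions of US dollars, 2020
years = 2020 - 2016    # four years of growth

# CAGR = (end / start) ** (1 / years) - 1
cagr = (end_revenue / start_revenue) ** (1 / years) - 1
print(f"Implied CAGR: {cagr:.1%}")
```

Under these assumptions the growth works out to roughly 56 percent per year, i.e. revenue multiplying by about 1.56 annually.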

by staff on (#3R09Y)

Today DDN announced that CRN has named Yvonne Walker, DDN’s partner and customer relations manager, to its prestigious 2018 Women of the Channel list. The professionals who comprise this annual list span the IT channel, representing vendors, distributors, solution providers and other organizations that figure prominently in the channel ecosystem. Each is recognized for her outstanding leadership, vision and unique role in driving channel growth and innovation. "Growing our channel program is one of our top objectives year after year. It is our goal to offer the most innovative and cost-optimized storage solutions that our partners can provide for their end customers," said Kurt Kuckein, senior director of marketing at DDN. “Yvonne works closely with our partners to ensure they have the tools necessary to help them achieve their goals.”

The post DDN’s Yvonne Walker joins CRN’s 2018 Women of the Channel List appeared first on insideHPC.

by Rich Brueckner on (#3R0A0)

Erez Cohen from Mellanox gave this talk at the Swiss HPC Conference. "While InfiniBand, RDMA and GPU-Direct are an HPC mainstay, these advanced networking technologies are increasingly becoming a core differentiator to the data center. In fact, within just a few short years so far, where only a handful of bleeding edge industrial leaders emulated classic HPC disciplines, today almost every commercial market is usurping HPC technologies and disciplines in mass."

The post Advanced Networking: The Critical Path for HPC, Cloud, Machine Learning and More appeared first on insideHPC.

by Rich Brueckner on (#3R04K)

Hyperion Research will host their Annual HPC Market Update Breakfast at ISC18. "We look forward to seeing you at our annual HPC Market Update Breakfast Briefing at the ISC conference. If you plan to attend, we encourage you to register soon because this event tends to fill quickly. In addition to presenting our updated market numbers and forecast, this year I and my fellow Hyperion Research analysts Steve Conway, Bob Sorensen and Alex Norton will discuss our latest research in HPDA-AI, quantum computing, cloud computing and the global exascale race."

The post Hyperion Research to host Annual HPC Market Update Breakfast at ISC18 appeared first on insideHPC.

by staff on (#3QY85)

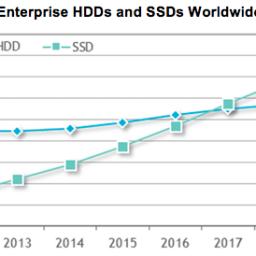

Flash storage has attained significant market growth over the years, with enterprise SSD revenues nearly quadrupling between 2012 and 2017. In this guest article, HPE explores how flash storage is changing the landscape of storage infrastructure. "One example is WekaIO, a flash-optimized parallel file system, created for use with high speed networks and architected to provide high IOPS and low latency performance."

The post The Effects of Flash Storage on Storage Infrastructure appeared first on insideHPC.

by staff on (#3QY3H)

Today Intel announced a partnership with the International Olympic Committee to use the company's leading technology to enhance the Olympic Games through 2024. Intel will focus primarily on infusing its 5G platforms, VR, 3D and 360-degree content development platforms, artificial intelligence platforms, and drones, along with other silicon solutions to enhance the Olympic Games. "As a result of Olympic Agenda 2020, the IOC is forging groundbreaking partnerships," said IOC President Thomas Bach. “Intel is a world leader in its field, and we’re very excited to be working with the Intel team to drive the future of the Olympic Games through cutting-edge technology. The Olympic Games provide a connection between fans and athletes that has inspired people around the world through sport and the Olympic values of excellence, friendship and respect. Thanks to our new innovative global partnership with Intel, fans in the stadium, athletes and audiences around the world will soon experience the magic of the Olympic Games in completely new ways.”

The post Video: Intel to Bring AI Technology to future Olympic Games appeared first on insideHPC.

by Rich Brueckner on (#3QXYJ)

The American University of Sharjah (AUS) in the United Arab Emirates is seeking an HPC Computational Scientist in our Job of the Week. "AUS is seeking a highly motivated senior programmer with proven experience in developing software for research applications, with emphasis on utilizing open source tools and platforms. The successful applicant is expected to promote and support the development of research-oriented applications that utilize the AUS HPC cluster in a variety of domains that include all engineering disciplines, biology and bioengineering, finance and more."

The post Job of the Week: HPC Computational Scientist at the American University of Sharjah appeared first on insideHPC.

by Rich Brueckner on (#3QXYM)

Peter Dueben from ECMWF gave this talk at the NVIDIA GPU Technology Conference. "Learn how one of the leading institutes for global weather predictions, the European Centre for Medium-Range Weather Forecasts (ECMWF), is preparing for exascale supercomputing and the efficient use of future HPC computing hardware. I will name the main reasons why it is difficult to design efficient weather and climate models and provide an overview on the ongoing community effort to achieve the best possible model performance on existing and future HPC architectures."

The post How to Prepare Weather and Climate Models for Future HPC Hardware appeared first on insideHPC.

by staff on (#3QXS0)

Today Fujitsu announced that it has received an order from the Information Initiative Center of Hokkaido University for an interdisciplinary, large-scale computing system with a theoretical peak performance of 4.0 petaflops, consisting of a supercomputer system and a cloud system. "The deployment of this system, which delivers the dramatically improved peak performance of 4 petaflops, will meet the expectations of universities and research institutions, as well as private sector researchers, throughout Japan, while promoting the further acceleration of academic research in Japan."

The post Fujitsu to build 4 Petaflop Supercomputer for Hokkaido University appeared first on insideHPC.

by Rich Brueckner on (#3QXM1)

Today PASC18 announced that Dr. Eng Lim Goh from HPE will deliver a keynote presentation on scientific computing. Registration is now open for the conference, which takes place July 2-4 in Basel, Switzerland. "Traditionally, scientific laws have been applied deductively - from predicting the performance of a pacemaker before implant, downforce of a Formula 1 car, pricing of derivatives in finance or the motion of planets for a trip to Mars. With artificial intelligence, we are starting to also use the data-intensive inductive approach, enabled by the re-emergence of machine learning which has been fueled by decades of accumulated data."

The post Dr. Eng Lim Goh from HPE to keynote PASC18 in July appeared first on insideHPC.

by Rich Brueckner on (#3QVYA)

In this video, Naveen Rao keynotes the Intel AI DevCon event in San Francisco. "One of the important updates we’re discussing today is optimizations to Intel Xeon Scalable processors. These optimizations deliver significant performance improvements on both training and inference as compared to previous generations, which is beneficial to the many companies that want to use existing infrastructure they already own to achieve the related TCO benefits along their first steps toward AI."

The post Video: How Intel will deliver on the Promise of AI appeared first on insideHPC.

by staff on (#3QVA9)

Researchers are using supercomputers to combat the Dengue virus and related diseases spread by mosquitos. "Advanced software and the rapid calculation speeds of both Comet and Bridges made the current simulations possible. In particular, the graphics processing units (GPUs) on Comet enabled the team to simulate the motion more efficiently than possible if they had used only the central processing units (CPUs) present on most supercomputers."

The post Supercomputer Simulations help fight Dengue Virus appeared first on insideHPC.

by staff on (#3QVAB)

Today the Pawsey Supercomputing Centre announced the appointment of Mark Stickells as the new Director of the Centre. "Mark’s experience with government, industry, research and technology will bring synergy to Pawsey, and will help him to lead this Centre into a new era," said John Langoulant AO, Chair of the Pawsey Supercomputing Centre. "After Pawsey’s recent funding announcement, Mark will play a key role in shaping the future of Australia’s High-Performance Computing and in bringing a new range of users to the facility from across industry sectors."

The post Mark Stickells appointed Director of Pawsey Supercomputing Centre appeared first on insideHPC.

by staff on (#3QV4P)

In this podcast, Terri Quinn from LLNL provides an update on Hardware and Integration (HI) at the Exascale Computing Project. "The US Department of Energy (DOE) national laboratories will acquire, install, and operate the nation’s first exascale-class systems. ECP is responsible for assisting with applications and software and accelerating the research and development of critical commercial exascale system hardware. ECP’s Hardware and Integration research focus area (HI), was created to help the laboratories and the ECP teams achieve success through mutually beneficial collaborations."

The post Podcast: Terri Quinn on Hardware and Integration at the Exascale Computing Project appeared first on insideHPC.

by staff on (#3QV4R)

Today ISC announced that Prof. Yutong Lu from the National Supercomputing Center in Guangzhou, China will be the ISC 2019 Program Chair. This new appointment begins a new journey for ISC High Performance, which has only seen men hold this position for the last four years. "As part of the international HPC community, ISC has committed to including more countries and people in order to foster a broader array of ideas and experiences."

The post Prof. Yutong Lu from China will Chair ISC 2019 appeared first on insideHPC.

by staff on (#3QS03)

Today NVIDIA released CUDA 9.2, which includes updates to libraries, a new library for accelerating custom linear-algebra algorithms, and lower kernel launch latency. "CUDA 9 is the most powerful software platform for GPU-accelerated applications. It has been built for Volta GPUs and includes faster GPU-accelerated libraries, a new programming model for flexible thread management, and improvements to the compiler and developer tools. With CUDA 9 you can speed up your applications while making them more scalable and robust."

The post NVIDIA Releases CUDA 9.2 for GPU Code Acceleration appeared first on insideHPC.

by staff on (#3QRTG)

In this special guest feature, Faith Singer-Villalobos from TACC continues her series profiling Careers in STEM. It's the inspiring story of Je'aime Powell, a TACC System Administrator and XSEDE Extended Collaborative Support Services Consultant. "Options, goals, and hope are what can set you on a path that can change your life," Powell said.

The post Mentorship fosters a Career in STEM appeared first on insideHPC.

by Rich Brueckner on (#3QRTJ)

Joe Lombardo from UNLV gave this talk at the HPC User Forum. "The University of Nevada, Las Vegas and the Cleveland Clinic Lou Ruvo Center for Brain Health have been awarded an $11 million federal grant from the National Institutes of Health and National Institute of General Medical Sciences to advance the understanding of Alzheimer's and Parkinson's diseases. In this session, we will present how UNLV's National Supercomputing Institute plays a critical role in this research by fusing brain imaging, neuropsychological and behavioral studies along with the diagnostic exome sequencing models to increase our knowledge of dementia-related and age-associated degenerative disorders."

The post HPC and Precision Medicine: A New Framework for Alzheimer’s and Parkinson’s appeared first on insideHPC.

by staff on (#3QRTM)

Today Altair announced a 3-year extension to its OEM agreement with Cray Inc. Under the terms of this agreement, PBS Professional will remain bundled as the preferred commercial scheduler on new systems manufactured and shipped by Cray. "Our relationship with Altair continues to benefit our common customers, as we deliver unique, integrated technologies that are ready to meet their highest HPC requirements," said Fred Kohout, Senior Vice President of Products and Chief Marketing Officer at Cray. “Altair and PBS Professional are established leaders in HPC workload management, and we will continue to leverage this agreement for growth in commercial and other emerging market segments.”

The post Altair and Cray Extend HPC Collaboration appeared first on insideHPC.

by staff on (#3QRTP)

"Today Micron Technology announced the company is shipping the industry’s first SSD built on revolutionary quad-level cell (QLC) NAND technology. The Micron 5210 ION SSD provides 33 percent more bit density than triple-level cell (TLC) NAND, addressing segments previously serviced with hard disk drives (HDDs). The introduction of new QLC-based SSDs positions Micron as a leader in providing higher capacity at lower costs to address the read-intensive yet performance-sensitive cloud storage needs of AI, big data, business intelligence, content delivery and database systems."

The post Micron Now Shipping Quad-Level Cell NAND SSDs appeared first on insideHPC.
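
The 33 percent figure follows from simple arithmetic: a QLC cell stores 4 bits versus 3 for a TLC cell, which also means it must distinguish 16 charge levels instead of 8. The minimal Python sketch below makes that explicit. It assumes an equal number of cells in both cases, which real dies need not have, and the helper names `density_gain` and `voltage_levels` are hypothetical.

```python
# Bits stored per NAND flash cell for each cell technology.
BITS_PER_CELL = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def density_gain(new: str, old: str) -> float:
    """Fractional increase in stored bits when moving from `old` to `new`,
    assuming the same number of cells (a simplification for illustration)."""
    return BITS_PER_CELL[new] / BITS_PER_CELL[old] - 1


def voltage_levels(cell: str) -> int:
    """Distinct charge states a cell must tell apart: 2 ** bits_per_cell."""
    return 2 ** BITS_PER_CELL[cell]


gain = density_gain("QLC", "TLC")
print(f"QLC vs TLC: {gain:.0%} more bits per cell")  # QLC vs TLC: 33% more bits per cell
print(f"Charge levels: TLC={voltage_levels('TLC')}, QLC={voltage_levels('QLC')}")  # TLC=8, QLC=16
```

Doubling the number of charge levels for one extra bit is why each step from SLC to QLC trades endurance and write performance for capacity, which fits the read-intensive workloads named in the announcement.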

by staff on (#3QRTR)

The Virtual Institute for IO is now accepting submissions for the upcoming IO500 list, which will be revealed at ISC 2018 in Frankfurt, Germany. "The IO500 benchmark suite is designed to be easy to run and the community has multiple active support channels to help with any questions. Please submit and we look forward to seeing many of you at ISC 2018! Please note that submissions of all size are welcome; the site has customizable sorting so it is possible to submit on a small system and still get a very good per-client score for example. Additionally, the list is about much more than just the raw rank; all submissions help the community by collecting and publishing a wider corpus of data."

The post Call for Submissions: IO500 List appeared first on insideHPC.

by staff on (#3QRTT)

NCSA is now accepting team applications for the Blue Waters GPU Hackathon. This event will take place September 10-14, 2018 in Illinois. "General-purpose Graphics Processing Units (GPGPUs) potentially offer exceptionally high memory bandwidth and performance for a wide range of applications. A challenge in utilizing such accelerators has been learning how to program them. These hackathons are intended to help overcome this challenge for new GPU programmers and also to help existing GPU programmers to further optimize their applications - a great opportunity for graduate students and postdocs. Any and all GPU programming paradigms are welcome."

The post Call for Applications: NCSA GPU Hackathon in September appeared first on insideHPC.

by Richard Friedman on (#3QRTW)

Intel® Integrated Performance Primitives (Intel IPP) offers the developer a highly optimized, production-ready library for lossless data compression/decompression that targets image, signal, and data processing, and cryptography applications. The Intel IPP optimized implementations of the common data compression algorithms are “drop-in” replacements for the original compression code.

The post Data Compression Optimized with Intel® Integrated Performance Primitives appeared first on insideHPC.

by staff on (#3QRTX)

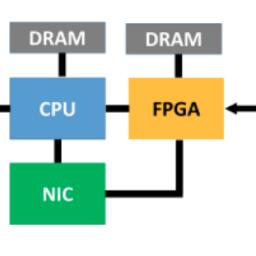

This is the final post in a five-part series from a report exploring the potential of machine learning and a variety of computational approaches, including CPU, GPU and FPGA technologies. This article explores unified deep learning configurations and emerging applications.

The post Unified Deep Learning Configurations and Emerging Applications appeared first on insideHPC.

by staff on (#3QHT9)

In this video, Doug Kothe from ORNL provides an update on the Exascale Computing Project. "With respect to progress, marrying high-risk exploratory and high-return R&D with formal project management is a formidable challenge. In January, through what is called DOE’s Independent Project Review, or IPR, process, we learned that we can indeed meet that challenge in a way that allows us to drive hard with a sense of urgency and still deliver on the essential products and solutions. In short, we passed the review with flying colors—and what’s especially encouraging is that the feedback we received tells us what we can do to improve."

The post Video: Doug Kothe Looks Ahead at The Exascale Computing Project appeared first on insideHPC.

by staff on (#3QHHK)

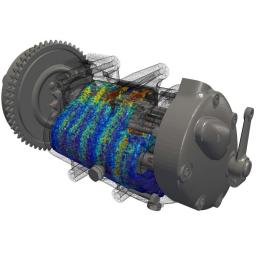

Altair has acquired Germany-based FluiDyna GmbH, a renowned developer of NVIDIA CUDA and GPU-based Computational Fluid Dynamics (CFD) and numerical simulation technologies in whom Altair made an initial investment in 2015. FluiDyna’s simulation software products ultraFluidX and nanoFluidX have been available to Altair’s customers through the Altair Partner Alliance and also offered as standalone licenses. "We are excited about FluiDyna and especially their work with NVIDIA technology for CFD applications," said James Scapa, Founder, Chairman, and CEO at Altair. "We believe the increased throughput and lower cost of GPU solutions is going to allow for a significant increase in simulations which can be used to further impact the design process."

The post Altair acquires FluiDyna CFD Technology for GPUs appeared first on insideHPC.

by staff on (#3QHHN)

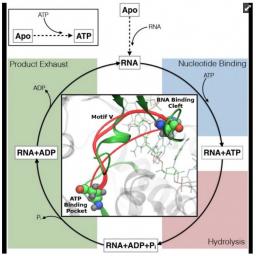

In this TACC Podcast, chemists at the University of California, San Diego describe how they used supercomputing to design a sheet of proteins that toggle between different states of porosity and density. This is a first in biomolecular design that combined experimental studies with computation done on supercomputers. "To meet these and other computational challenges, Paesani has been awarded supercomputer allocations through XSEDE, the Extreme Science and Engineering Discovery Environment, funded by the National Science Foundation."

The post Podcast: Supercomputing the Emergence of Material Behavior appeared first on insideHPC.

by staff on (#3QHCM)

Today Silicon Valley startup Tachyum Inc. unveiled its new processor family – codenamed “Prodigy” – that combines the advantages of CPUs with GP-GPUs, and specialized AI chips in a single universal processor platform. According to Tachyum, the new chip has "ten times the processing power per watt" and is capable of running the world’s most complex compute tasks. "With its disruptive architecture, Prodigy will enable a super-computational system for real-time full capacity human brain neural network simulation by 2020."

The post New Tachyum Prodigy Chip has “More than 10x the Performance of Conventional Processors” appeared first on insideHPC.

by Rich Brueckner on (#3QH8S)

Today ACM announced that Dr. Satoshi Matsuoka will receive the annual HPDC Achievement Award for his pioneering research in the design, implementation, and application of high performance systems and software tools for parallel and distributed systems. "ACM HPDC is one of the top international conferences in the field of Computer Science / High Performance Calculation, and among them, I am delighted to have won the Society Career Award for the first time as a Japanese."

The post Satoshi Matsuoka to receive High Performance Parallel Distributed Computation Achievement Award appeared first on insideHPC.

by staff on (#3QEXT)

Today Quantum Corporation named AutonomouStuff LLC as its primary partner for storage distribution in the automotive market, enabling them to deliver Quantum's comprehensive end-to-end storage solutions for both in-vehicle and data center environments. "Autonomous research generates an enormous volume of data which is vital to achieving the goal of a safe autonomous vehicle," said Bobby Hambrick, founder and CEO of AutonomouStuff. "Quantum multitier data storage kits powered by StorNext offer a highly scalable and economical solution to the data dilemma researchers face."

The post Quantum Storage Solutions Power Self-driving Cars for AutonomouStuff appeared first on insideHPC.

by staff on (#3QEV3)

iRODS is taking an active role in the Lustre community. The iRODS Consortium recently signed on to the Open Scalable File Systems, Inc. (OpenSFS), a nonprofit organization dedicated to the success of the Lustre file system, an open source parallel distributed file system used for computing on large-scale high performance computing clusters.

The post iRODS Consortium adds Members and Joins OpenSFS appeared first on insideHPC.

by staff on (#3QEV4)

Today Equus Compute Solutions announced that Intel has identified Equus as an Intel Platinum 2018 Technology Provider. Furthermore, Intel has distinguished Equus as both a Cloud Data Center Specialist and an HPC Data Center Specialist. These distinctions were earned based on Equus application specific platforms, solutions, and staff training in these areas. "We are honored to be a 2018 Intel Platinum Technology Partner," said Costa Hasapopoulos, Equus President. “We are proud to offer our customers industry-leading data center solutions across a wide range of industries and applications. In all of these applications, Equus customizes Intel-based white box servers and storage offerings to enable flexible software-defined infrastructures.”

The post Equus Compute Solutions Named Intel 2018 Cloud and HPC Data Center Specialist appeared first on insideHPC.

by Rich Brueckner on (#3QER9)

"Scientifico ReFrame is a new framework for writing regression tests for HPC systems. The goal of the framework is to abstract away the complexity of the interactions with the system, separating the logic of a regression test from the low-level details, which pertain to the system configuration and setup. This allows users to write easily portable regression tests, focusing only on the functionality. The purpose of the tutorial will be to do a live demo of ReFrame and a hands-on session demonstrating how to configure it and how to use it."

The post ReFrame: A Regression Testing Framework Enabling Continuous Integration of Large HPC Systems appeared first on insideHPC.

by staff on (#3QERB)

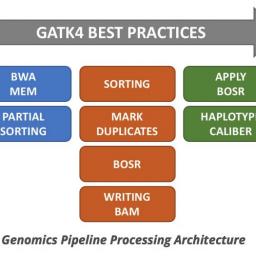

Today DDN announced a Parabricks technology solution that provides massive acceleration for analysis of human genomes. The breakthrough platform combines GPU supercomputing performance with DDN’s Parallel Flash Data Platforms for fastest time to results, and enables unprecedented capabilities for high-throughput genomics analysis pipelines. The joint solution also ensures full saturation of GPUs for maximum efficiency and provides analysis capabilities that previously required thousands of CPUs to engage.

The post DDN and Parabricks Accelerate Genome Analysis appeared first on insideHPC.

by staff on (#3QCTJ)

Today HPC Cloud provider Nimbix announced that their 2018 HPC Cloud Summit will take place June 6 in Silicon Valley. "We are bringing together the best and brightest minds in accelerated computing at the Computer History Museum, an institution dedicated to the preservation and celebration of computer history. Event sponsors include: Intel, Lenovo and Mellanox."

The post Nimbix to Host the 2018 HPC Cloud Summit on June 6 in Silicon Valley appeared first on insideHPC.

by Rich Brueckner on (#3QC0T)

"Women are becoming a driving force in the open source community as the industry becomes more diverse and inclusive. However, a recent study found that only 3% of contributors in open source were women. As a community of women at Red Hat, we want to not only highlight how we contribute, but also inspire others to contribute. In the spirit of diversity, this panel will include women from different departments at Red Hat—including marketing, management, sales, consulting, and engineering—sharing the unique ways we help grow the open source community."

The post Video: Women and Open Source appeared first on insideHPC.

by staff on (#3QBXK)

Today Asetek announced an order from Fujitsu, an established global data center OEM, for a new High Performance Computing system at a currently undisclosed location in Japan. This major installation will be implemented using Asetek's RackCDU liquid cooling solution throughout the cluster which includes 1300 Direct-to-Chip (D2C) coolers for the cluster's compute nodes. "We are pleased to see the continuing success of Fujitsu using Asetek's technology in large HPC clusters around the world," said André Sloth Eriksen, CEO and founder of Asetek.

The post Asetek Receives Order For New HPC Cluster From Fujitsu appeared first on insideHPC.

by staff on (#3QBXM)

One year after launching its cloud service, Nimbus, the Pawsey Supercomputing Centre has now expanded with NVIDIA GPU nodes. The GPUs are currently being installed, so the Pawsey cloud team have begun a Call for Early Adopters. "Launched in mid-2017 as a free cloud service for researchers who require flexible access to high-performance computing resources, Nimbus consists of AMD Opteron CPUs making up 3000 cores and 288 terabytes of storage. Now, Pawsey will be expanding its cloud infrastructure from purely a CPU based system to include GPUs; providing a new set of functionalities for researchers."

The post Pawsey Centre adds NVIDIA Volta GPUs to its HPC Cloud appeared first on insideHPC.

by Rich Brueckner on (#3QBTF)

"The impact of AI will be visible in the software industry much sooner than the analog world, deeply affecting open source in general, as well as Red Hat, its ecosystem, and its userbase. This shift provides a huge opportunity for Red Hat to offer unique value to our customers. In this session, we'll provide Red Hat's general perspective on AI and how we are helping our customers benefit from AI."

The post Red Hat’s AI Strategy appeared first on insideHPC.

by staff on (#3QBQN)

"The first version of the OpenMP application programming interface (API) was published in October 1997. In the 20 years since then, the OpenMP API and the slightly older MPI have become the two stable programming models that high-performance parallel codes rely on. MPI handles the message passing aspects and allows code to scale out to significant numbers of nodes, while the OpenMP API allows programmers to write portable code to exploit the multiple cores and accelerators in modern machines."

The post Celebrating 20 Years of the OpenMP API appeared first on insideHPC.

by staff on (#3QBMW)

Intel is working with leaders in the field to eliminate today’s data processing bottlenecks. In this guest post from Intel, the company explores how BioScience is getting a leg up from order-of-magnitude computing progress. "Intel’s framework is designed to make HPC simpler, more affordable, and more powerful."

The post BioScience gets a Boost Through Order-of-Magnitude Computing Gains appeared first on insideHPC.

by Rich Brueckner on (#3Q92W)

Peter Lindstrom from LLNL gave this talk at the Conference on Next Generation Arithmetic in Singapore. "We propose a modular framework for representing the real numbers that generalizes IEEE, POSITS, and related floating-point number systems, and which has its roots in universal codes for the positive integers such as the Elias codes. This framework unifies several known but seemingly unrelated representations within a single schema while also introducing new representations."

The post Universal Coding of the Reals: Alternatives to IEEE Floating Point appeared first on insideHPC.
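
The abstract traces the framework back to universal codes for the positive integers such as the Elias codes. As background only, and not the framework from the talk, here is a minimal Python sketch of the simplest of these, the Elias gamma code: a positive integer n is written as floor(log2 n) zeros followed by n in binary. Because the code is prefix-free, a concatenation of codes can be split back apart without separators. The function names are illustrative.

```python
def elias_gamma_encode(n: int) -> str:
    """Encode a positive integer as an Elias gamma bit string:
    (len(binary) - 1) zeros, then the binary digits of n (leading bit is 1)."""
    if n < 1:
        raise ValueError("Elias gamma codes are defined only for n >= 1")
    binary = bin(n)[2:]
    return "0" * (len(binary) - 1) + binary


def elias_gamma_decode(bits: str) -> tuple[int, str]:
    """Decode one gamma-coded integer from the front of `bits`.
    Returns (value, remaining_bits)."""
    k = 0
    while bits[k] == "0":  # leading zeros tell us the binary part has k + 1 bits
        k += 1
    value = int(bits[k:2 * k + 1], 2)
    return value, bits[2 * k + 1:]


codes = [elias_gamma_encode(n) for n in (1, 2, 5, 17)]
print(codes)  # ['1', '010', '00101', '000010001']

# Prefix-freeness: decode a concatenated stream with no delimiters.
stream = "".join(codes)
decoded = []
while stream:
    value, stream = elias_gamma_decode(stream)
    decoded.append(value)
print(decoded)  # [1, 2, 5, 17]
```

Small integers get short codes and large ones get long codes, with no fixed width. Lindstrom's framework carries that variable-length idea over to the reals; the details of that generalization are in the talk, not in this sketch.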

by staff on (#3Q8ZS)

Today DDN announced that Harvard University’s Faculty of Arts and Sciences Research Computing (FASRC) has deployed DDN’s GRIDScaler GS7KX parallel file system appliance with 1PB of storage. The installation has sped the collection of images detailing synaptic connectivity in the brain’s cerebral cortex. "DDN’s scale-out, parallel architecture delivers the performance we need to keep stride with the rapid pace of scientific research and discovery at Harvard," said Scott Yockel, Ph.D., director of research computing at Harvard’s FAS Division of Science. “The storage just runs as it’s supposed to, so there’s no contention for resources and no complaints from our users, which empowers us to focus on the research.”

The post DDN GridScaler Powers Neuroscience and Behavioral Research at Harvard appeared first on insideHPC.

by staff on (#3Q8ZV)

The Ohio Supercomputer Center has updated its innovative web-based portal for accessing high performance computing services. As part of this effort, OSC recently announced the release of Open OnDemand 1.3, the first version distributed as RPM packages (RPM Package Manager, originally Red Hat Package Manager), a common standard for distributing Linux software. "Our continuing development of Open OnDemand is aimed at making the package easier to use and more powerful at the same time," said David Hudak, interim executive director of OSC. "Open OnDemand 1.3's RPM Package Manager simplifies the installation and updating of OnDemand versions and enables OSC to do more releases more frequently."

The post Ohio Supercomputer Center Upgrades HPC Access Portal appeared first on insideHPC.

by Rich Brueckner on (#3Q8WQ)

Jimmy Daley from HPE gave this talk at the HPC User Forum in Tucson. "High performance clusters are all about high speed interconnects. Today, these clusters are often built out of a mix of copper and active optical cables. While optical is the future, the cost of active optical cables is 4x - 6x that of copper cables. In this talk, Jimmy Daley looks at the tradeoffs system architects need to make to meet performance requirements at reasonable cost."

The post HPE: Design Challenges at Scale appeared first on insideHPC.
