|

by Rich Brueckner on (#3M9M0)

Today Energy Secretary Rick Perry announced the DOE has issued a Request for Proposal for 2-3 Exascale machines. "Called CORAL-2, this RFP is for up to $1.8 billion and is completely separate from the $320 million allocated for the Exascale Computing Project in the FY 2018 budget. Those funds are mostly focused on application development and software technology for an exascale software stack." The post Video: DOE Issues RFP for Exascale Supercomputers appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-03 14:00 |

|

by Rich Brueckner on (#3M9M1)

In this podcast, the Radio Free HPC team reviews the HPC highlights of the GPU Technology Conference. "From Rich's perspective, the key announcement centered around the new NVIDIA DGX-2 supercomputer with the NVSwitch interconnect." The post Radio Free HPC Looks at HPC Highlights from the GPU Technology Conference appeared first on insideHPC.

|

|

by staff on (#3M9GW)

LBNL Communications Manager Jon Bashor has announced his retirement after 27 years with the national lab system. "As communications manager, Jon has been key to the visibility of Berkeley Lab’s computing program, both through written articles and other material produced by Jon and his team and his community leadership, including several years of organizing the DOE booth at SC, the annual supercomputing conference," said Associate Lab Director Kathy Yelick. The post Jon Bashor Retires After 27 Years of Service to National Labs appeared first on insideHPC.

|

|

by Rich Brueckner on (#3M7GQ)

In this video from the GPU Technology Conference, David Gonzolez from Ziff describes how Dell EMC powers AI solutions at his company. "ZIFF is unique in its approach to AI. By focusing on empowering product visionaries and software engineers, ZIFF can help organizations fully unlock the insights and automation trapped within their unstructured data." To accelerate its unstructured database technology, Ziff uses the Dell PowerEdge C4140, which allows them to meet the demands of cognitive computing workloads with a dense, accelerator-optimized 1U server supporting 4 GPUs and superior thermal efficiency. The post How ZIFF Powers AI with Dell EMC Technologies appeared first on insideHPC.

|

|

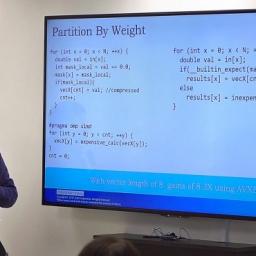

by staff on (#3M7DE)

"The goal of this hands-on workshop was to help participants optimize their application codes to exploit the different levels of parallelism and memory hierarchies in the Xeon Phi architecture," said CSI computational scientist Meifeng Lin. “By the end of the hackathon, the participants had not only made their codes run more efficiently on Xeon Phi–based systems, but also learned about strategies that could be applied to other CPU-based systems to improve code performance.” The post Researchers Tune HPC Codes for Intel Xeon Phi at Brookhaven Hackathon appeared first on insideHPC.

|

|

by staff on (#3M5MT)

In this video from the Supercomputing Frontiers Europe conference, Prof. Thomas Sterling describes how the National Strategic Computing Initiative will help keep the USA on the forefront of technology. Along the way he answers these questions: "Is there a race between China and the US?" and "What is Exascale and what does it mean for the value of computing?" The post Video: Thomas Sterling on the Current State of HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#3M5G7)

The Ohio State University is seeking an HPC Security Engineer in our Job of the Week. "OSC provides high-performance computing services for university researchers and industrial clients. The HPC Systems Team delivers OSC’s production HPC systems, networking, and storage services through system design, procurement, deployment, security, and systems administration. This security engineer position supports the overall mission of the team and in particular duties related to systems security and implementation of security policy." The post Job of the Week: HPC Security Engineer at Ohio State University appeared first on insideHPC.

|

|

by staff on (#3M3KE)

At the Ohio Supercomputer Center Statewide Users Group spring conference this week, OSC clients in fields spanning everything from astrophysics to linguistics gathered to share research highlights and hear updates about the center’s direction and role in supporting science across Ohio. "SUG is a great vehicle for us to not only communicate to our clients about what is going on from a policy perspective or hardware roadmaps and new services, but for us to hear back from the clients about what they are doing," said Brian Guilfoos, HPC client services manager at OSC. “Our normal interaction with someone is very technical – ‘This is the thing I’m trying to do, what I’m having a problem with, etc.’ Here we get to take a broader view and look at the science, and it’s good for our staff to be reminded what is being done with our services and what we are enabling.” The post Ohio Supercomputing Center Hosts User Group Meeting appeared first on insideHPC.

|

|

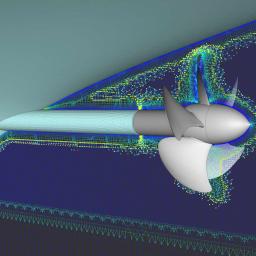

by staff on (#3M38K)

Pointwise has released Version 18.1, a major update to its computational fluid dynamics (CFD) mesh generation software. With its origins in a multiyear development effort funded by the U.S. Air Force for overset grid assembly, this latest Pointwise release includes a suite of tools that provide increased automation and flexibility in mesh topology creation, mesh types and mesh quality assessment. "This release is the culmination and commercial manifestation of a two-year $1.2 million research and development effort funded by the U.S. Air Force Materiel Command, Arnold Engineering Development Complex," said Nick Wyman, Pointwise’s director of applied research. “That effort in turn was an extension of a previous, two-year, Small Business Innovation Research (SBIR) Phase II award. While focused on overset grid assembly, the capabilities added to the software are applicable to virtually any type of mesh generation.” The post New Pointwise Release Adds Mesh Automation and Adaptability for CFD appeared first on insideHPC.

|

|

by staff on (#3M334)

Today the OpenMP Architecture Review Board (ARB) announced the appointment of Barbara Chapman to its Board of Directors. "We are delighted to have Prof. Chapman join the OpenMP Board," says Partha Tirumalai, chairman of the OpenMP Board of Directors. “Her decades of experience in high-performance computing and education will enhance the value OpenMP brings to users all over the world.” The post Barbara Chapman Joins Board of OpenMP ARB appeared first on insideHPC.

|

|

by staff on (#3M30C)

In this episode of Let's Talk Exascale, Scott Baden of LBNL describes the Pagoda Project, which seeks to develop lightweight communication and global address space support for exascale applications. "What our project is addressing is how to keep the fixed cost as small as possible, so that cutting-edge irregular algorithms can efficiently move many small pieces of data efficiently." The post Let’s Talk Exascale: Developing Low Overhead Communication Libraries appeared first on insideHPC.

|

|

by Rich Brueckner on (#3M30E)

In this video from the GPU Technology Conference, Thierry Pellegrino describes how Dell EMC customers are applying HPC technologies to AI workloads. "I'll just mention one of our customers, AeroFarms, who use a lot of our technology in order to bring the value of IoT into an environment where you can do machine learning, deep learning, artificial intelligence, and automatically grow crops in an environment that you would never think it would be possible." The post Thierry Pellegrino on the Move to AI with HPC at Dell EMC appeared first on insideHPC.

|

|

by Rich Brueckner on (#3M0CK)

In this video from the GPU Technology Conference, Les Lorenzo from Supermicro describes the company's new High Density NVMe Storage Server. "Supermicro’s latest storage server platform supporting the Next Generation Small Form Factor (NGSFF) media offers a new approach to enterprise all-flash storage applications, by providing all of the benefits of cost optimized M.2 flash drives with an increase in capacity and density and adding the hot-swap capabilities required for enterprise-class applications." The post Video: Supermicro Steps up with High Density NVMe Storage Server appeared first on insideHPC.

|

|

by staff on (#3M09X)

Over at the IBM blog, Jeff Welser writes that nearly 100 startups, venture capitalists, and industry thought leaders are gathering today at the first IBM Q Summit Silicon Valley event. "IBM Q Summit attendees are gathering to discuss what to expect over the next five years and what it means to be 'quantum ready.' The discussion will inevitably also center on the emerging role of the quantum developer and what that means for future application development." The post IBM brings in Startups to Accelerate Quantum Computing appeared first on insideHPC.

|

|

by staff on (#3M073)

The National Supercomputing Centre (NSCC) Singapore and the Pawsey Supercomputing Centre have signed a Memorandum of Understanding (MOU) to collaborate in the fields of supercomputing, networking, data analytics, scientific software applications and visualization. "With our national petascale HPC platform, NSCC’s collaboration with international supercomputing centres such as the Pawsey, will benefit and bring together people and researchers with different skillsets and expertise to solve problems of a scale previously not possible," said Professor Tan Tin Wee, NSCC’s Chief Executive. The post Pawsey Supercomputing Centre teams with NSCC Singapore to Accelerate Science appeared first on insideHPC.

|

|

by Rich Brueckner on (#3M01T)

In this video from the GPU Technology Conference, Peter Lilian from NVIDIA describes how the company works with Dell EMC to deliver extreme performance solutions for the Energy sector. "Dell EMC and NVIDIA have expanded their collaboration by signing a new strategic agreement to include joint product development of new products and solutions that address the burgeoning workload and datacenter requirements, with GPU-accelerated solutions for HPC, data analytics, and artificial intelligence." The post How NVIDIA Powers HPC for Energy at Dell EMC appeared first on insideHPC.

|

|

by staff on (#3KYGC)

Burton J. Smith has passed away. An internationally recognized leader in HPC architecture, Smith was a co-founder of Tera Computing (later renamed Cray, Inc) and most recently a Microsoft technical fellow. Smith was 77. "Burton was an amazing intellect but more importantly, he was an amazing person. Every moment was important to him and he lived them all passionately. He touched many lives, made a profound impact in the world and will be missed by many," said Todd Holmdahl, Smith’s colleague at Microsoft. The post HPC Pioneer Burton Smith passes away appeared first on insideHPC.

|

|

by staff on (#3KXHV)

Today Mellanox announced that the company’s InfiniBand and Ethernet solutions have been chosen to accelerate the new NVIDIA DGX-2 artificial intelligence system. DGX-2 is the first 2 Petaflop system that combines sixteen GPUs and eight Mellanox ConnectX adapters, supporting both EDR InfiniBand and 100 gigabit Ethernet connectivity. The technological advantages of the Mellanox adapters with smart acceleration engines enable the highest performance for AI and Deep Learning applications. The post Mellanox powers NVIDIA DGX-2 AI Supercomputer appeared first on insideHPC.

|

|

by Rich Brueckner on (#3KXFB)

In this video from the GPU Technology Conference, Kash Shaikh from Dell EMC describes the company's new AI Ready Solutions. "Dell EMC is at the forefront of AI, providing the technology that makes tomorrow possible, today. Dell EMC uniquely provides an extensive portfolio of technologies, spanning workstations, servers, networking, storage, software and services, to create the high-performance computing and data analytics solutions that underpin successful AI, machine and deep learning implementations." The post Video: AI Ready Solutions from Dell EMC appeared first on insideHPC.

|

|

by staff on (#3KXCD)

Wolfgang Gentzsch writes that The UberCloud has just received the highest technology partnership level as an ANSYS Advanced Solution Partner. "Achieving this elevated partnership status has been the natural evolution of UberCloud's collaboration with ANSYS. Back in 2012 ANSYS and UberCloud started working together with our CAE Cloud experiments. This resulted in over 30 experiments and case studies, which are made freely available to the CAE community in the ANSYS Compendium of Case Studies." The post The UberCloud becomes ANSYS Advanced Solution Partner appeared first on insideHPC.

|

|

by staff on (#3KX9N)

"We are honored to be recognized by our strategic partner, Intel. This award further validates our position as the world’s leading provider of data-intensive at-scale solutions," said Alex Bouzari, chief executive officer, chairman and co-founder of DDN. “DDN’s data storage solutions provide our customers worldwide with the value-add flexibility, performance acceleration, advanced intelligent data management capabilities, and workflow simplification that are essential for on premise, hybrid cloud and public cloud requirements.” The post DDN Named Intel Technology Partner of the Year appeared first on insideHPC.

|

|

by staff on (#3KTTN)

Over at the MapD blog, Todd Mostak writes the company has just launched the MapD Cloud, a major milestone for GPU-accelerated analytics. "With MapD Cloud, anyone can spin up a 2-week trial of our platform in less than 60 seconds, and then continue as a customer with a mix of self-service individual and enterprise plans. The launch of MapD cloud is the next major step toward our larger vision of giving everyone access to GPU-accelerated analytics, following our decision to open source the core of the platform nearly a year ago." The post New MapD Cloud offers GPU-accelerated Analytics appeared first on insideHPC.

|

|

by staff on (#3KTKW)

Today Dutch cleantech company Asperitas announced it is partnering with Boston Ltd on Immersed Computing technologies. "Our partnership with Asperitas ushers in an exciting new chapter for our business and for our customers delivering radically improved datacenter cooling solutions coupled with intelligent energy recovery and reduced operational costs." The post Asperitas and Boston Collaborate on Immersed Cooling for Datacenters appeared first on insideHPC.

|

|

by Rich Brueckner on (#3KTH0)

In this video, the European PRACE HPC initiative describes how the Piz Daint supercomputer at CSCS in Switzerland provides world-class supercomputing power for research. "We are very pleased that Switzerland – one of our long-time partners in high-performance computing – is joining the European effort to develop supercomputers in Europe," said Mariya Gabriel, Commissioner for Digital Economy and Society. "This will enhance Europe’s leadership in science and innovation, help grow the economy and build our industrial competitiveness." The post Video: Piz Daint Supercomputer speeds PRACE simulations in Europe appeared first on insideHPC.

|

|

by staff on (#3KTE9)

Today Paderborn University in Germany announced that it has selected a Cray CS500 cluster system as its next-generation supercomputer. This procurement is the first phase of the Noctua project for a multi-petaflop system with a total budget of 10M euros. The initial HPC system provides academic researchers from Paderborn University and nationwide with computing resources primarily for computational material science, optoelectronics and photonics, and computer system research. The system is expected to go into production in 2018. The post Cray to build FPGA-Accelerated Supercomputer for Paderborn University appeared first on insideHPC.

|

|

by Rich Brueckner on (#3KTAW)

In this video from GTC 2018, Dolly Wu from Inspur and Marius Tudor from Liqid describe how the two companies are collaborating on Composable Infrastructure for AI and Deep Learning workloads. "AI and deep learning applications will determine the direction of next-generation infrastructure design, and we believe dynamically composing GPUs will be central to these emerging platforms," said Dolly Wu, GM and VP Inspur Systems. The post Video: Liqid Teams with Inspur at GTC for Composable Infrastructure appeared first on insideHPC.

|

|

by MichaelS on (#3KQVB)

Setting up an environment for High Performance Computing (HPC), especially one using GPUs, can be daunting. There can be multiple dependencies, a number of supporting libraries required, and complex installation instructions. NVIDIA has made this easier with the announcement and release of HPC Application Containers with the NVIDIA GPU Cloud. The post NVIDIA Makes GPU Computing Easier in the Cloud appeared first on insideHPC.

|

|

by staff on (#3KQP1)

A more flexible, application-centric, datacenter architecture is required to meet the needs of rapidly changing HPC applications and hardware. In this guest post, Katie Rivera of One Stop Systems explores how rack-scale composable infrastructure can be utilized for mixed workload data centers. The post Rack Scale Composable Infrastructure for Mixed Workload Data Centers appeared first on insideHPC.

|

|

by Rich Brueckner on (#3KQP3)

Satoshi Matsuoka from Tokyo Tech writes that he is taking on a new role at RIKEN to foster the deployment of the Post-K computer. "From April 1st I have become the Director of Riken Center for Computational Science, to lead the K-Computer & Post-K development, and the next gen HPC research. Riken R-CCS Director is my main job, but I also retain my Professorship at Tokyo Tech. and lead my lab there & also lead a group for AIST-Tokyo Tech joint RWBC-OIL." The post Satoshi Matsuoka Moves to RIKEN Center for Computational Science appeared first on insideHPC.

|

|

by Rich Brueckner on (#3KQKM)

In this video from the GPU Technology Conference, Marc Hamilton from NVIDIA describes the new DGX-2 supercomputer with the NVSwitch interconnect. "NVIDIA NVSwitch is the first on-node switch architecture to support 16 fully-connected GPUs in a single server node and drive simultaneous communication between all eight GPU pairs at an incredible 300 GB/s each. These 16 GPUs can be used as a single large-scale accelerator with 0.5 Terabytes of unified memory space and 2 petaFLOPS of deep learning compute power. With NVSwitch, we have 2.4 terabytes a second bisection bandwidth, 24 times what you would have with two DGX-1s." The post Inside the new NVIDIA DGX-2 Supercomputer with NVSwitch appeared first on insideHPC.

|

|

by Rich Brueckner on (#3KQH2)

In this video from GTC 2018, Alexander St. John from Nyriad demonstrates how the company's NSULATE software running on Advanced HPC gear provides extreme data protection for HPC data. As we watch, he removes a dozen SSDs from a live filesystem -- and it keeps on running! The post RAID No More: GPUs Power NSULATE for Extreme HPC Data Protection appeared first on insideHPC.

|

|

by staff on (#3KNPG)

HP Z Workstations, with new NVIDIA technology, are ideal for local processing at the edge of the network – giving developers more control, better performance and added security over cloud-based solutions. "Products like the HP Z8, the most powerful workstation for ML development, coupled with the new NVIDIA Quadro GV100, the HP ML Developer Portal and our expanded services offerings will undoubtedly fast-track the adoption of machine learning." The post New HP Z8 is “World’s Most Powerful Workstation for Machine Learning Development” appeared first on insideHPC.

|

|

by Rich Brueckner on (#3KNMK)

The HPC application containers available on NVIDIA GPU Cloud (NGC) drastically improve ease of application deployment, while delivering optimized performance. The containers include HPC applications such as NAMD, GROMACS, and Relion. NGC gives researchers and scientists the flexibility to run HPC application containers on NVIDIA Pascal and NVIDIA Volta-powered systems including Quadro-powered workstations, NVIDIA DGX Systems, and HPC clusters. The post Video: Deploy HPC Applications Faster with NVIDIA GPU Cloud appeared first on insideHPC.

|

|

by Rich Brueckner on (#3KKSH)

Mike Ignatowski from AMD gave this talk at the Rice Oil & Gas conference. "We have reached the point where further improvements in CMOS technology and CPU architecture are producing diminishing benefits at increasing costs. Fortunately, there is a great deal of room for improvement with specialized processing, including GPUs and other emerging accelerators. In addition, there are exciting new developments in memory technology and architecture coming down the development pipeline." The post Video: The Challenge of Heterogeneous Compute & Memory Systems appeared first on insideHPC.

|

|

by Rich Brueckner on (#3KKNY)

The eScience Center at the University of Southern Denmark (SDU) is seeking Software/Hardware Architects for Research Infrastructure. "The eScience Center is now expanding its staff in all its core areas and expects to fill 6 or more positions. We address stimulating and interesting technological challenges, and offer a research-like environment where we encourage the study of innovative solutions. We firmly believe in open-source and web technology as means to reach our goals." The post Jobs of the Week: Software/Hardware Architects for Research Infrastructure at University of Southern Denmark appeared first on insideHPC.

|

|

by staff on (#3KHM3)

In this Let's Talk Exascale podcast, Lois Curfman McInnes from Argonne National Laboratory describes the Extreme-scale Scientific Software Development Kit (xSDK) for ECP, which is working toward a software ecosystem for high-performance numerical libraries. "The project is motivated by the need for next-generation science applications to use and build on diverse software capabilities that are developed by different groups." The post Let’s Talk Exascale: Software Ecosystem for High-Performance Numerical Libraries appeared first on insideHPC.

|

|

by staff on (#3KHFJ)

The Eleventh International Workshop on Parallel Programming Models and Systems Software for High-End Computing (P2S2) has issued its Call for Papers. The event takes place on August 22 in Eugene, Oregon. "The goal of this workshop is to bring together researchers and practitioners in parallel programming models and systems software for high-end computing architectures. Please join us in a discussion of new ideas, experiences, and the latest trends in these areas at the workshop. If you're working on #HPC programming models, performance modeling, storage or interconnect system software, large-scale scheduling or task coordination, consider submitting to the P2S2 2018 workshop!" The post Call for Papers: International Workshop on Parallel Programming Models in Oregon appeared first on insideHPC.

|

|

by Rich Brueckner on (#3KHCM)

In this video from GTC 2018, Adel El-Hallak from IBM describes how IBM and NVIDIA are partnering to build the largest supercomputers in the world so that data scientists and application developers are not limited by device memory. Between IBM and NVIDIA, you can capitalize on the Volta 32GB memory and the entire system as a whole. The post Video: IBM Brings NVIDIA Volta to Supercharge Discoveries appeared first on insideHPC.

|

|

by staff on (#3KH9T)

Over at the NVIDIA blog, Jamie Beckett writes that the new European Extremely Large Telescope, or E-ELT, will capture images 15 times sharper than the dazzling shots the Hubble telescope has beamed to Earth for the past three decades. "are running GPU-powered simulations to predict how different configurations of E-ELT will affect image quality. Changes to the angle of the telescope’s mirrors, different numbers of cameras and other factors could improve image quality." The post Why the World’s Largest Telescope Relies on GPUs appeared first on insideHPC.

|

|

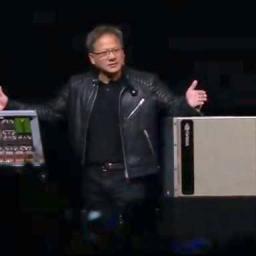

by Rich Brueckner on (#3KEJY)

In this video, NVIDIA CEO Jensen Huang unveils the DGX-2 supercomputer. Combined with a fully optimized, updated suite of NVIDIA deep learning software, DGX-2 is purpose-built for data scientists pushing the outer limits of deep learning research and computing. "Watch to learn how we’ve created the first 2 petaFLOPS deep learning system, using NVIDIA NVSwitch to combine the power of 16 V100 GPUs for 10X the deep learning performance." The post Video: NVIDIA Unveils DGX-2 Supercomputer appeared first on insideHPC.

|

|

by staff on (#3KECF)

Today DDN announced that its EXAScaler DGX solution accelerated client has been fully integrated with the NVIDIA DGX Architecture. "By supplying this groundbreaking level of performance, DDN enables customers to greatly accelerate their Machine Learning initiatives, reducing load wait times of large datasets to mere seconds for faster training turnaround." The post DDN feeds NVIDIA DGX Servers 33GB/s for Machine Learning appeared first on insideHPC.

|

|

by Rich Brueckner on (#3KCXV)

In this video from the 2018 GPU Technology Conference, Ziv Kalmanovich from VMware and Fred Devoir from NVIDIA describe how they are working together to bring the benefits of virtualization to GPU workloads. "For cloud environments based on vSphere, you can deploy a machine learning workload yourself using GPUs via the VMware DirectPath I/O or vGPU technology." The post Video: VMware powers HPC Virtualization at NVIDIA GPU Technology Conference appeared first on insideHPC.

|

|

by staff on (#3KBX4)

Today Liqid and Inspur announced that the two companies will offer a joint solution designed specifically for advanced, GPU-intensive applications and workflows. "Our goal is to work with the industry’s most innovative companies to build an adaptive data center infrastructure for the advancement of AI, scientific discovery, and next-generation GPU-centric workloads," said Sumit Puri, CEO of Liqid. “Liqid is honored to be partnering with data center leaders Inspur Systems and NVIDIA to deliver the most advanced composable GPU platform on the market with Liqid’s fabric technology.” The post Liqid and Inspur team up for Composable GPU-Centric Rack-Scale Solutions appeared first on insideHPC.

|

|

by Rich Brueckner on (#3KBSX)

Today Cray announced it is adding new options to its line of CS-Storm GPU-accelerated servers as well as improved fast-start AI configurations, making it easier for organizations implementing AI to get started on their journey with AI proof-of-concept projects and pilot-to-production use. "As companies approach AI projects, choices in system size and configuration play a crucial role," said Fred Kohout, Cray’s senior vice president of products and chief marketing officer. “Our customers look to Cray Accel AI offerings to leverage our supercomputing expertise, technologies and best practices. Whether an organization wants a starter system for model development and testing, or a complete system for data preparation, model development, training, validation and inference, Cray Accel AI configurations provide customers a complete supercomputer system.” The post Cray rolls out new Cray Artificial Intelligence Offerings appeared first on insideHPC.

|

|

by Rich Brueckner on (#3KBD8)

"Quantum computing has recently become a topic that has captured the minds and imagination of a much wider audience. Dr. Jerry Chow joined CloudFest to speak to the near future of quantum computing and insights into the IBM Q Experience, which since May 2016 has placed a rudimentary quantum computer on the Cloud for anyone and everyone to access." The post Video: Enabling Quantum Computing Over the Cloud appeared first on insideHPC.

|

|

by staff on (#3KBDA)

“The complexities of big data and data science models, particularly in data-intensive fields such as life sciences, telecommunications, cybersecurity, financial services and retail, require purpose-built database applications, compute systems and storage platforms. We are excited to partner with DDN and bring the benefits of its unsurpassed expertise in large-scale, high-performance computing environments to our customers.” The post DDN partners with SQream for “World’s Fastest Big Data Analytics” appeared first on insideHPC.

|

|

by staff on (#3K94M)

Today BOXX Technologies announced the new APEXX W3 compact workstation featuring an Intel Xeon W processor, four dual slot NVIDIA GPUs, and other innovative features for accelerating HPC applications. "Available with an Intel Xeon W CPU (up to 18 cores) in a compact chassis, the remarkably quiet APEXX W3 is ideal for data scientists, enabling deep learning development at the user’s deskside. Capable of supporting up to four NVIDIA Quadro GV100 graphics cards, the workstation helps users rapidly iterate and test code prior to large-scale DL deployments while also being ideal for GPU-accelerated rendering. At GTC, APEXX W3 will demonstrate V-Ray rendering with NVIDIA OptiX AI-accelerated denoiser technology." The post New BOXX Deep Learning Workstation has 4 NVIDIA GPUs and 18-core Xeon Processors appeared first on insideHPC.

|

|

by Rich Brueckner on (#3K8VM)

Today NVIDIA unveiled the NVIDIA DGX-2: the "world's largest GPU." Ten times faster than its predecessor, the DGX-2 is the first single server capable of delivering two petaflops of computational power. DGX-2 has the deep learning processing power of 300 servers occupying 15 racks of datacenter space, while being 60x smaller and 18x more power efficient. The post NVIDIA Announces DGX-2 as the “First 2 Petaflop Deep Learning System” appeared first on insideHPC.

|

|

by staff on (#3K8R1)

Today NVIDIA announced the Quadro GV100 GPU. With innovative packaging, the Quadro GV100 comprises two Volta GPUs in the same chassis -- linked with NVIDIA's new NVLink 2 interconnect. "The new AI-dedicated Tensor Cores have dramatically increased the performance of our models and the speedier NVLink allows us to efficiently scale multi-GPU simulations." The post NVIDIA rolls out GV100 “Dual-Volta” GPU for Workstations appeared first on insideHPC.

|