|

by staff on (#3K8KW)

Today ISC 2018 announced that Dr. Keren Bergman from Columbia University will give a keynote on the latest developments in silicon photonics. The event takes place June 24-28 in Frankfurt. The post Dr. Keren Bergman and Thomas Sterling to Keynote ISC 2018 appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-03 14:00 |

|

by staff on (#3K8GX)

Today Nyriad and ThinkParQ announced a partnership to develop a certification program for high-performance, resilient storage systems that combine BeeGFS with NSULATE, Nyriad’s solution for GPU-accelerated storage-processing. "We believe this is the beginning of a fantastic partnership between two innovative software companies with similar roots in the high performance computing community," said ThinkParQ CEO Frank Herold. "BeeGFS was developed at the Fraunhofer Center for High Performance Computing, while Nyriad’s NSULATE was originally developed from a partnership with the International Centre for Radio Astronomy Research in Australia. We want to bring our expertise to the wider storage industry by creating new standards for performance and reliability suitable for the coming generation of exascale systems." The post Nyriad and ThinkParQ Announce Partnership to Certify GPU-accelerated Storage appeared first on insideHPC.

|

|

by Rich Brueckner on (#3K8GZ)

NVIDIA is hosting its annual GPU Technology Conference this week. "Watch the livestream as NVIDIA’s CEO, Jensen Huang, delivers the opening keynote to officially kick off the event, with a focus on AI and Deep Learning. The event takes place in Silicon Valley at 9AM (Pacific Time) today." The post Video Replay: GTC 2018 Keynote with Jensen Huang appeared first on insideHPC.

|

|

by Rich Brueckner on (#3K832)

Rob Davis from Mellanox gave this talk at the 2018 OCP Summit. "There is a new, very high performance open source SSD interface called NVMe over Fabrics now available to expand the capabilities of networked storage solutions. It is an extension of the local NVMe SSD interface developed a few years ago, driven by the need for a faster interface for SSDs. Similar to the way native disk drive SCSI protocol was networked with Fibre Channel 20 years ago, this technology enables NVMe SSDs to be networked and shared with their native protocol. By utilizing ultra-low latency RDMA technology to achieve data sharing across a network without sacrificing the local performance characteristics of NVMe SSDs, true composable infrastructure is now possible." The post NVMe Over Fabrics High performance SSDs Networked for Composable Infrastructure appeared first on insideHPC.

|

|

by staff on (#3K5E5)

Today Nyriad and Advanced HPC announced their partnership for a new NVIDIA GPU-accelerated storage system that achieves data protection levels well beyond any RAID solution. "Nyriad and Advanced HPC have brought together a hardware and software reference implementation around a GPU to mitigate rebuild times, enable large-scale RAID systems to run at full speed while degraded, reduce failures, and increase overall reliability," said Christopher M. Sullivan, Assistant Director for Biocomputing at Oregon State University’s Center for Genome Research and Biocomputing (CGRB). "We look forward to their continued technology support and innovative approach that keeps CGRB at the forefront of computational research groups." The post GPU-accelerated Storage System goes “Beyond RAID” appeared first on insideHPC.

|

|

by Rich Brueckner on (#3K5B6)

Jack Wells from ORNL gave this talk at the 2018 OpenPOWER Summit. "The Summit supercomputer coming to Oak Ridge is the next leap in leadership-class computing systems for open science. Summit will have a hybrid architecture, and each node will contain multiple IBM POWER9 CPUs and NVIDIA Volta GPUs all connected together with NVIDIA’s high-speed NVLink. Each node will have over half a terabyte of coherent memory (high bandwidth memory + DDR4) addressable by all CPUs and GPUs plus 800GB of non-volatile RAM that can be used as a burst buffer or as extended memory." The post Video: Powering the Road to National HPC Leadership appeared first on insideHPC.

|

|

by staff on (#3K52A)

Today One Stop Systems expanded its line of rack scale NVIDIA GPU accelerator products with the introduction of GPUltima-CI. "The GPUltima-CI power-optimized rack can be configured with up to 32 dual Intel Xeon Scalable Architecture compute nodes, 64 network adapters, 48 NVIDIA Volta GPUs, and 32 NVMe drives on a 128Gb PCIe switched fabric, and can support tens of thousands of composable server configurations per rack. Using one or many racks, the OSS solution contains the necessary resources to compose any combination of GPU, NIC and storage resources as may be required in today’s mixed workload data center." The post One Stop Systems Launches Rack Scale GPU Accelerator System appeared first on insideHPC.

|

|

by John Kirkley on (#3K4ZQ)

The Broad Institute of MIT and Harvard, in collaboration with Intel, is playing a major role in accelerating genomic analysis. This guest post from Intel explores how the two are working together to 'reach levels of analysis that were not possible before.' The post Broad Institute and Intel Advance Genomics appeared first on insideHPC.

|

|

by Rich Brueckner on (#3K30D)

Greg Casey from Dell gave this talk at the 2018 OCP Summit. "Gen-Z is different. It is a high-bandwidth, low-latency fabric with separate media and memory controllers that can be realized inside or beyond traditional chassis limits. It treats all components as memory (so-called memory-semantic communications), and it moves data between them with minimal overhead and latency. It thus takes full advantage of emerging persistent memory (memory accessed over the data bus at memory speeds). It can also handle other compute elements, such as GPUs, FPGAs, and ASIC or coprocessor-based accelerators." The post Video: Gen-Z High-Performance Interconnect for the Data-Centric Future appeared first on insideHPC.

|

|

by staff on (#3K2YE)

In this special guest feature, Dr. Eng Lim Goh from HPE shares the endless possibilities of supercomputing and AI, and the challenges that stand in the way. "Can AI systems continue to learn and evolve based on historical data, and then predict – with breakneck speed – the exact time to buy or sell stocks? Of course they can – and with precision that comes close to replicating other predictive models, and usually in a fraction of the time." The post A World of Opportunities for HPC and AI appeared first on insideHPC.

|

|

by Rich Brueckner on (#3K0YV)

Thor Sewell from Intel gave this talk at the Rice Oil & Gas conference. "We are seeing the exciting convergence of artificial intelligence and modeling & simulation workflows as well as the movement towards cloud computing and Exascale. This session will cover these significant trends, the technical challenges that must be overcome, and how to prepare for this next level of computing." The post Video: Convergence of AI & HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#3K0WX)

RedLine Performance Solutions in Herndon, VA is seeking an HPC Engineer in our Job of the Week. "RedLine Performance Solutions has been in the HPC solutions engineering services business for approximately 17 years and is consistently determined to keep the "bar of excellence" quite high for new hires. This enables RedLine to accomplish what other firms cannot and promotes a high level of staff retention. We offer services ranging from full life cycle HPC systems engineering to remote managed services to HPC program analysis. We are located in the Washington, DC area and are looking for an HPC Engineer to join us." The post Job of the Week: HPC Engineer at RedLine Performance Solutions appeared first on insideHPC.

|

|

by staff on (#3JYQW)

Over at the All Things Distributed blog, Werner Vogels writes that the new Amazon SageMaker is designed for building machine learning algorithms that can handle an infinite amount of data. "To handle unbounded amounts of data, our algorithms adopt a streaming computational model. In the streaming model, the algorithm only passes over the dataset one time and assumes a fixed-memory footprint. This memory restriction precludes basic operations like storing the data in memory, random access to individual records, shuffling the data, reading through the data several times, etc." The post Amazon SageMaker goes for “Infinitely Scalable” Machine Learning appeared first on insideHPC.
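
As a rough illustration of the streaming model described in the quote above, the sketch below computes a running mean and variance in a single pass with constant memory, using Welford's online update. It is not Amazon SageMaker code; the names `RunningStats` and `summarize` are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class RunningStats:
    """Fixed-size state: three numbers, no matter how long the stream is."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        # Welford's update: each record is seen once and then discarded.
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    @property
    def variance(self) -> float:
        # Population variance of everything seen so far.
        return self.m2 / self.count if self.count else 0.0


def summarize(stream: Iterable[float]) -> RunningStats:
    """Consume the stream exactly once, without storing or shuffling it."""
    stats = RunningStats()
    for x in stream:
        stats.update(x)
    return stats


if __name__ == "__main__":
    # A generator stands in for an unbounded data source (an assumption).
    records = (float(v) for v in [2, 4, 4, 4, 5, 5, 7, 9])
    result = summarize(records)
    print(result.count, result.mean, result.variance)
```

Because the state never grows, the same loop works whether the source yields eight records or eight billion; the trade-off is that anything requiring a second pass or random access is off the table, as the quote notes.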

|

|

by staff on (#3JYJJ)

Today the European Commission announced more details on the European Processor Initiative to co-design, develop and bring to market a low-power microprocessor. "This technology, with drastically better performance and power, is one of the core elements needed for the development of the European Exascale machine. We expect to achieve unprecedented levels of performance at very low power, and EPI’s HPC and automotive industrial partners are already considering the EPI platform for their product roadmaps," said Philippe Notton, the EPI General Manager. The post European Processor Initiative to develop chip for future supercomputers appeared first on insideHPC.

|

|

by staff on (#3JYD2)

"MAX is a one-stop exchange for data scientists and AI developers to consume models created using their favorite machine learning engines, like TensorFlow, PyTorch, and Caffe2, and provides a standardized approach to classify, annotate, and deploy these models for prediction and inferencing, including an increasing number of models that can be deployed and customized in IBM’s recently announced AI application development platform, Watson Studio." The post IBM Launches MAX – an App Store for Machine Learning Models appeared first on insideHPC.

|

|

by Rich Brueckner on (#3JY7H)

Jessica Pointing from MIT gave this talk at the IBM Think conference. "Because atoms and subatomic particles behave in strange and complex ways, classical physics cannot explain their quantum behavior. However, when the behavior is harnessed effectively, systems become far more powerful than classical computers… quantum powerful." The post A Primer on Quantum Computing… with Doughnuts! appeared first on insideHPC.

|

|

by staff on (#3JVKE)

“We anticipate that the Grand Unified File Index will have a big impact on the ability for many levels of users to search data and get a fast response,” said Gary Grider, division leader for High Performance Computing at Los Alamos. “Compared with other methods, the Grand Unified File Index has the advantages of not requiring the system administrator to do the query, and it honors the user access controls allowing users and admins to use the same indexing system,” he said. The post Los Alamos Releases File Index Product to Open Source appeared first on insideHPC.

|

|

by staff on (#3JVKG)

Scientists at Argonne are helping to develop better batteries for our electronic devices. The goal is to develop beyond-lithium-ion batteries that are even more powerful, cheaper, safer and longer lived. “The energy storage capacity was about three times that of a lithium-ion battery, and five times should be easily possible with continued research. This first demonstration of a true lithium-air battery is an important step toward what we call beyond-lithium-ion batteries.” The post Argonne Helps to Develop all-new Lithium-air Batteries appeared first on insideHPC.

|

|

by Richard Friedman on (#3JVGZ)

Recent Intel® enhancements to Java enable faster and better numerical computing. In particular, the Java Virtual Machine (JVM) now uses the Fused Multiply Add (FMA) instructions on Intel® Xeon Phi™ processors with Intel® Advanced Vector Extensions (Intel® AVX) to implement the OpenJDK 9 Math.fma() API. This gives significant performance improvements for matrix multiplications, the most basic computation found in most HPC, Machine Learning, and AI applications. The post Intel AVX Gives Numerical Computations in Java a Big Boost appeared first on insideHPC.

|

|

by Rich Brueckner on (#3JVE2)

In this video from the 2018 Rice Oil & Gas Conference, Doug Kothe from ORNL provides an update on the Exascale Computing Project. "The quest to develop a capable exascale ecosystem is a monumental effort that requires the collaboration of government, academia, and industry. Achieving exascale will have profound effects on the American people and the world—improving the nation’s economic competitiveness, advancing scientific discovery, and strengthening our national security." The post The U.S. Exascale Computing Project: Status and Plans appeared first on insideHPC.

|

|

by Rich Brueckner on (#3JRVP)

Over at the Lenovo Blog, Dr. Bhushan Desam writes that the company just updated its LiCO tools to accelerate AI deployment and development for Enterprise and HPC implementations. "LiCO simplifies resource management and makes launching AI training jobs in clusters easy. LiCO currently supports multiple AI frameworks, including TensorFlow, Caffe, Intel Caffe, and MXNet. Additionally, multiple versions of those AI frameworks can easily be maintained and managed using Singularity containers. This consequently provides agility for IT managers to support development efforts for multiple users and applications simultaneously." The post Lenovo Updates LiCO Tools to Accelerate AI Deployment appeared first on insideHPC.

|

|

by staff on (#3JRKR)

Today GIGABYTE Technology announced the availability of ThunderXStation: the industry’s first 64-bit Armv8 workstation platform based on Cavium’s flagship ThunderX2 processor. “ThunderXStation is an ideal platform for Arm software developers across networking, embedded, mobile, and IoT verticals. We are delighted to be working closely with GIGABYTE on this, and we look forward to supporting them on a number of innovative new platforms.” The post New Arm-based Workstation Opens the Doors for HPC Developers appeared first on insideHPC.

|

|

by staff on (#3JRKT)

Today Penguin Computing announced that Director of Advanced Solutions, Kevin Tubbs, Ph.D., will be speaking at NVIDIA’s GPU Technology Conference (GTC) on best practices in artificial intelligence. "On the second day of the conference, Tubbs will lead the “Best Practices in Designing and Deploying End-to-End HPC and AI Solutions” session. The focus of the session will be challenges faced by organizations looking to build AI systems and the design principles and technologies that have proven successful in Penguin Computing AI deployments for customers in the Top 500." The post Penguin Computing to share AI Best Practices at GPU Technology Conference appeared first on insideHPC.

|

|

by staff on (#3JRAE)

Today Hewlett Packard Enterprise announced new offerings to help customers ramp up, optimize and scale artificial intelligence usage across business functions to drive outcomes such as better demand forecasting, improved operational efficiency and increased sales. “HPE is best positioned to help customers make AI work for their enterprise, regardless of where they are in their AI adoption. While others provide AI components, we provide complete AI solutions from strategic advisory to purpose-built technology, operational support and a strong AI partner ecosystem to tailor the right AI solution for each organization.” The post HPE Launches Vertical AI Solutions appeared first on insideHPC.

|

|

by Rich Brueckner on (#3JR4Z)

Talia Gershon from the Thomas J. Watson Research Center gave this talk at the 2018 IBM Think conference. "There is a whole class of problems that are too difficult for even the largest and most powerful computers that exist today to solve. These exponential challenges include everything from simulating the complex interactions of atoms and molecules to optimizing supply chains. But quantum computers could enable us to solve these problems, unleashing untold opportunities for business." The post Video: IBM Quantum Computing will be “Mainstream in Five Years” appeared first on insideHPC.

|

|

by MichaelS on (#3JJSM)

Visualizing the results of a simulation can give new insight into complex scientific problems. Interactive viewing of entire datasets can lead to earlier understanding of the challenge at hand and can enhance the understanding of complex phenomena. With the release of HPC Visualization Containers with the NVIDIA GPU Cloud, it has become much easier to get a visualization system up and production-ready faster than ever before. The post NVIDIA Makes Visualization Easier in the Cloud appeared first on insideHPC.

|

|

by staff on (#3JP2J)

"IBM's goal is to make it easier for you to build your deep learning models. Deep Learning as a Service has unique features, such as Neural Network Modeler, to lower the barrier to entry for all users, not just a few experts. The enhancements live within Watson Studio, our cloud-native, end-to-end environment for data scientists, developers, business analysts and SMEs to build and train AI models that work with structured, semi-structured and unstructured data — while maintaining an organization’s existing policy/access rules around the data." The post IBM Launches Deep Learning as a Service appeared first on insideHPC.

|

|

by staff on (#3JNZG)

Mariam Kiran is using an early-career research award from DOE’s Office of Science to develop methods combining machine-learning algorithms with parallel computing to optimize such networks. “This type of science and the problems it can address can make a real impact,” Kiran says. “That’s what excites me about research – that we can improve or provide solutions to real-world problems.” The post Overcoming Roadblocks in Computational Networks appeared first on insideHPC.

|

|

by staff on (#3JNWX)

In this video, researchers from IBM Research in Zurich describe how the new IBM Snap Machine Learning (Snap ML) software was able to achieve record performance running TensorFlow. "This training time is 46x faster than the best result that has been previously reported, which used TensorFlow on Google Cloud Platform to train the same model in 70 minutes." The post Video: IBM Sets Record TensorFlow Performance with new Snap ML Software appeared first on insideHPC.

|

|

by Rich Brueckner on (#3JNKX)

Ahmed Hashmi from BP gave this talk at the Rice Oil & Gas conference. “The Oil and Gas High Performance Computing Conference, hosted annually at Rice University, is the premier meeting place for networking and discussion focused on computing and information technology challenges and needs in the oil and gas industry. High-end computing and information technology continues to stand out across the industry as a critical business enabler and differentiator with a relatively well understood return on investment. However, challenges such as constantly changing technology landscape, increasing focus on software and software innovation, and escalating concerns around workforce development still remain.” The post Video: High Power Algorithms, High Performance Computing appeared first on insideHPC.

|

|

by staff on (#3JJQB)

Today Univa announced a new global partnership and reseller agreement with UberCloud. Under terms of the agreement, UberCloud, a leading HPC cloud provider, will resell Univa Grid Engine and related Univa products to UberCloud's growing community of HPC customers. "By deploying packaged HPC applications in containers, and managing them with Univa Grid Engine, users become productive immediately. They can focus on their work rather than spending time troubleshooting complicated HPC software stacks." The post Univa partners with UberCloud appeared first on insideHPC.

|

|

by staff on (#3JJQD)

“Optalysys has, for the first time ever, applied optical processing to the highly complex and computationally demanding area of CNNs with initial accuracy rates of over 70%. Through our uniquely scalable and highly efficient optical approach, we are developing models that will offer whole new levels of capability, not only cloud-based but also opening up the extraordinary potential of CNNs to mobile systems.” The post Optalysys Speeds Deep Learning with Optical Processing appeared first on insideHPC.

|

|

by Rich Brueckner on (#3JJJA)

In this video from the 2018 Rice Oil & Gas Conference, Addison Snell from Intersect360 Research leads a panel discussion on Exascale computing. "High-end computing and information technology continues to stand out across the industry as a critical business enabler and differentiator with a relatively well understood return on investment. However, challenges such as constantly changing technology landscape, increasing focus on software and software innovation, and escalating concerns around workforce development still remain." The post Panel Discussion: Delivering Exascale Computing for the Oil and Gas Industry appeared first on insideHPC.

|

|

by staff on (#3JJEP)

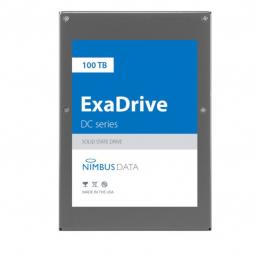

Today Nimbus Data announced the ExaDrive DC100, the largest capacity (100 terabytes) solid state drive (SSD) ever produced. Featuring more than 3x the capacity of the closest competitor, the ExaDrive DC100 also draws 85% less power. “The ExaDrive DC100 meets these challenges for both data center and edge applications, offering unmatched capacity in an ultra-low power design.” The post Nimbus Data launches 100 Terabyte SSD appeared first on insideHPC.

|

|

by Rich Brueckner on (#3JJER)

In this podcast, the Radio Free HPC team goes off the supercomputing rails a bit with a discussion on digital immortality. "A new company called Nectome will reportedly archive your mind for future uploading to a machine. While the price of $10K seems reasonable enough, they do have to kill you to complete the process." The post Radio Free HPC Looks at Immortality through Nectome’s Mind Archival appeared first on insideHPC.

|

|

by Sarah Rubenoff on (#3JJA1)

The world of today’s HPC is driven by the ever-increasing generation and consumption of digital information. The ability to analyze this rapidly growing pool of data, and extrapolate meaningful insights, gives modern businesses a competitive edge. Download the full report, “Introducing 200G HDR InfiniBand Solutions,” to learn how Mellanox Technologies’ end-to-end 200G HDR InfiniBand solution is helping enable the next generation of data centers. The post What a 200G HDR InfiniBand Solution Means for Today’s Advanced Data Centers appeared first on insideHPC.

|

|

by staff on (#3JGBN)

Today MathWorks rolled out Release 2018a with a range of new capabilities in MATLAB and Simulink. "R2018a includes two new products, Predictive Maintenance Toolbox for designing and testing condition monitoring and predictive maintenance algorithms, and Vehicle Dynamics Blockset for modeling and simulating vehicle dynamics in a virtual 3D environment. In addition to new features in MATLAB and Simulink, and the new products, this release also includes updates and bug fixes to 94 other products." The post MATLAB adds new capabilities with Release R2018a appeared first on insideHPC.

|

|

by Rich Brueckner on (#3JGA7)

Wonchan Lee, Todd Warszawski, and Karthik Murthy gave this talk at the Stanford HPC Conference. "Legion is an exascale-ready parallel programming model that simplifies the mapping of a complex, large-scale simulation code on a modern heterogeneous supercomputer. Legion relieves scientists and engineers of several burdens: they no longer need to determine which tasks depend on other tasks, specify where calculations will occur, or manage the transmission of data to and from the processors. In this talk, we will focus on three aspects of the Legion programming system, namely, dynamic tracing, projection functions, and vectorization." The post Advances in the Legion Programming Model appeared first on insideHPC.

|

|

by Rich Brueckner on (#3JEDR)

GE in San Ramon, California is seeking a Data Scientist in our Job of the Week. "We are looking for a Data Scientist to work in Deep Learning (DL) to assist and build deep learning algorithms using imaging and non-imaging data. This individual will work with customer clinical data scientists and interface with internal GE software and data science teams to deliver high quality software in a fast paced, challenging, and creative environment." The post Job of the Week: Staff Data Scientist at GE appeared first on insideHPC.

|

|

by staff on (#3JE7S)

In this Let's Talk Exascale podcast, Tapasya Patki of Lawrence Livermore National Laboratory discusses ECP’s Power Steering Project. "Efficiently utilizing procured power and optimizing the performance of scientific applications at exascale under power and energy constraints are challenging for several reasons. These include the dynamic behavior of applications, processor manufacturing variability, and increasing heterogeneity of node-level components." The post Podcast: Power and Performance Optimization at Exascale appeared first on insideHPC.

|

|

by Rich Brueckner on (#3JBX7)

The good folks at GlobusWorld 2018 have posted their speaker agenda. The meeting takes place April 25-26 in Chicago. "Our theme for 2018 is Connecting your Research Universe. When it's easy for researchers to move and share data across any location (e.g. campus clusters, XSEDE systems, lab servers, scientific instruments, archival storage, commercial clouds), they can collaborate more successfully and get to results faster. Globus now makes this possible, with connectivity to virtually any storage location via a single, easy to use interface. It has never been simpler to connect the universe of research data to the researchers who need it." The post Agenda Posted for GlobusWorld 2018 in Chicago appeared first on insideHPC.

|

|

by Rich Brueckner on (#3JBV1)

Michael Bauer from Sylabs gave this talk at FOSDEM'17. "This presentation will provide an in-depth look at how Singularity is able to securely run user containers on HPC systems. After a brief introduction to Singularity and its relationship to other container solutions, the details of Singularity's runtime will be explored. The way that Singularity leverages Linux features such as namespaces, bind mounts, and SUID binaries will be discussed in further detail as well." The post Singularity: The Inner Workings of Securely Running User Containers on HPC Systems appeared first on insideHPC.

|

|

by Rich Brueckner on (#3JBJD)

In anticipation of forthcoming product announcements, Asetek today announced an ongoing collaboration with Intel to provide hot water liquid cooling for servers and datacenters. This collaboration, which includes Asetek’s ServerLSL and RackCDU D2C technologies, is focused on the liquid cooling of density-optimized Intel Compute Modules supporting high-performance Intel Xeon Scalable processors. The post Asetek Announces Ongoing Collaboration with Intel on Liquid Cooling for Servers and Datacenters appeared first on insideHPC.

|

|

by staff on (#3JBG7)

Bill Magro of Intel Corporation will kick off the OpenFabrics Workshop with a keynote speech, “Software Foundation for High-Performance Fabrics in the Cloud.” The event takes place April 9-13 in Boulder, Colorado. "This talk will highlight the broadening role of OpenFabrics, in general, and the Open Fabrics Interface, in particular, to rise to the challenge of meeting the emerging requirements and become the software foundation for high-performance cloud fabrics." The post Bill Magro from Intel to Keynote OpenFabrics Workshop appeared first on insideHPC.

|

|

by Rich Brueckner on (#3J8WV)

Today NVIDIA announced the availability of PGI 2018. "PGI is the default compiler on many of the world’s fastest computers including the Titan supercomputer at Oak Ridge National Laboratory. PGI production-quality compilers are for scientists and engineers using computing systems ranging from workstations to the fastest GPU-powered supercomputers." The post NVIDIA Releases PGI 2018 Compilers and Tools appeared first on insideHPC.

|

|

by staff on (#3J8PT)

Today Univa announced the contribution of its Navops Launch product to the open source community as Project Tortuga under an Apache 2.0 license. The free and open code is designed to help accelerate the transition of enterprise HPC workloads to the cloud. "Having access to more software that applies to a broad set of applications like high performance computing is key to making the transition to the cloud successful," said William Fellows, Co-Founder and VP of Research, 451 Research. "Univa's contribution of Navops Launch to the open source community will help with this process, and hopefully be an opportunity for cloud providers to contribute and use Tortuga as the on-ramp for HPC workloads." The post Univa Open Sources Project Tortuga for moving HPC Workloads to the Cloud appeared first on insideHPC.

|

|

by MichaelS on (#3J8PW)

In the past, it was necessary to understand a complex programming language, such as Verilog or VHDL, that was designed for a specific FPGA. "Using a familiar language such as OpenCL, developers can become more productive sooner when deciding to use an FPGA for a specific purpose. OpenCL is portable and is designed to be used with almost any type of accelerator." The post FPGA Programming Made Easy appeared first on insideHPC.

|

|

by Rich Brueckner on (#3J8KX)

William Hurley from Strangeworks gave this talk at SXSW 2018. "Quantum computing isn’t just a possibility, it’s an inevitable next step. During his Keynote, whurley discusses how it will forever change the computing landscape and potentially the balance of international power. Countries around the world are investing billions to become the first quantum superpower. When we say an emerging technology represents a “paradigm shift,” it’s often hyperbole. In the case of quantum computing, it’s an understatement." The post Whurley Keynotes SXSW: The Endless Impossibilities of Quantum Computing appeared first on insideHPC.

|

|

by staff on (#3J6N2)

Today Cray announced that the National Institutes for Quantum and Radiological Science and Technology (QST) selected a Cray XC50 supercomputer to be its new flagship supercomputing system. "The new system is expected to deliver peak performance of over 4 petaflops, more than twice that of the system it is replacing. The speed and integrated software environment of the Cray XC50 will enhance QST’s infrastructure and allow researchers to speed time to discovery." The post Cray to Build 4 Petaflop Supercomputer for Fusion Research at QST in Japan appeared first on insideHPC.

|

|

by staff on (#3J5T0)

Today the UC San Diego Center for Microbiome Innovation (CMI) announced that Panasas has joined CMI’s Corporate Member Board and has donated a 500TB Panasas ActiveStor high-performance storage solution to support the acceleration of microbiome research. ActiveStor drives productivity and accelerates time to results with ultrafast streaming performance, true linear scalability, enterprise-grade reliability and unparalleled ease of management. “We are grateful for the support of Panasas,” said Center Faculty Director Rob Knight. “The advanced ActiveStor data storage solution we now have at our disposal will greatly enhance the activities of the Center.” The post Panasas Donates 500 TB ActiveStore Solution to CMI Center for Microbiome Innovation appeared first on insideHPC.

|