|

by Rich Brueckner on (#4YARM)

Michela Taufer from UT Knoxville gave this talk at ATPESC 2019. "This talk discusses two emerging trends in computing (i.e., the convergence of data generation and analytics, and the emergence of edge computing) and how these trends can impact heterogeneous applications. This talk presents case studies of heterogeneous applications in precision medicine and precision farming that expand scientist workflows beyond the supercomputing center and shed our reliance on large-scale simulations exclusively, for the sake of scientific discovery." The post Michela Taufer presents: Scientific Applications and Heterogeneous Architectures appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-02 17:00 |

|

by staff on (#4YAAZ)

RJM International, a provider of emissions reduction and combustion improvement solutions, has deployed its first HPC cluster. The move supports the growth of the company’s business in the EU and the UK, particularly in the biomass and Energy from Waste segments of the market. The new HPC environment is designed, integrated and supported by HPC, storage and data analytics integrator, OCF. The post RJM International deploys HPC cluster for reducing power plant emissions appeared first on insideHPC.

|

|

by staff on (#4Y90J)

Today Mellanox announced that OpenStack software now includes native and upstream support for virtualization over an HDR 200 gigabit InfiniBand network, enabling customers to build high-performance OpenStack-based cloud services over the most enhanced interconnect infrastructure, taking advantage of InfiniBand's extremely low latency, high data throughput, In-Network Computing and more. The post OpenStack Adds Native Upstream Support for HDR InfiniBand appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y90K)

In this Let’s Talk Exascale podcast, Gina Tourassi from ORNL describes how the CANDLE project is setting the stage to fight cancer with the power of Exascale computing. "Basically, as we are leveraging supercomputing and artificial intelligence to accelerate cancer research, we are also seeing how we can drive the next generation of supercomputing." The post Podcast: A Codebase for Deep Learning Supercomputers to Fight Cancer appeared first on insideHPC.

|

|

by staff on (#4Y90N)

HPE has been selected by Zenuity, a leading developer of software for self-driving and assisted driving cars, to provide the crucial AI and HPC infrastructure it needs in order to develop next generation autonomous driving (AD) systems. "HPE will provide Zenuity with core data processing services that will allow Zenuity to gather, store, organize and analyze the data it generates globally from its network of test vehicles and software development centers. The end-to-end IT infrastructure will be delivered as-a-Service through HPE GreenLake." The post Zenuity and HPE to develop next generation autonomous cars appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y90Q)

Yunong Shi from the University of Chicago gave this talk at ATPESC 2019. "The Argonne Training Program on Extreme-Scale Computing provides intensive, two-week training on the key skills, approaches, and tools to design, implement, and execute computational science and engineering applications on current high-end computing systems and the leadership-class computing systems of the future." The post SW/HW co-design for near-term quantum computing appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y8E5)

Today the 2020 ASC Student Supercomputer Challenge (ASC20) announced new tasks for the competition: using supercomputers to simulate quantum circuits and train AI models. These tasks will be unprecedented challenges for the 300+ ASC teams from around the world. The Finals event takes place April 25-29, 2020 in Shenzhen, China. "Through these tasks, students from all over the world get to access and learn the most cutting-edge computing technologies. ASC strives to foster supercomputing & AI talents of global vision, inspiring technical innovation." The post ASC20 to test Student Cluster Teams on Quantum & AI appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y7CD)

Today One Stop Systems (OSS) announced the availability of a new OSS PCIe 4.0 value expansion system incorporating up to eight of the latest NVIDIA V100S Tensor Core GPUs. As the newest member of the company’s AI on the Fly product portfolio, the system delivers data center capabilities to HPC and AI edge deployments in the field or for mobile applications. "The 4U value expansion system adds massive compute capability to any Gen 3 or Gen 4 server via two OSS PCIe x16 Gen 4 links. The links can support an unprecedented 512 Gbps of aggregated bandwidth to the GPU complex." The post OSS PCI Express 4.0 Expansion System does AI on the Fly with Eight GPUs appeared first on insideHPC.
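
As a rough sanity check on the quoted bandwidth figure, the sketch below multiplies out two x16 links at the PCIe 4.0 signalling rate of 16 GT/s per lane. It assumes the quoted number is the raw, per-direction rate summed over both links; the announcement does not say how the figure was derived, and the constant and function names here are illustrative only.

```python
# Back-of-the-envelope check of an aggregate PCIe 4.0 bandwidth figure.
# Assumption: the figure is the raw per-direction rate summed over both links.

GT_PER_SEC_PER_LANE = 16.0       # PCIe 4.0 signalling rate per lane, per direction
LANES_PER_LINK = 16              # an x16 link
NUM_LINKS = 2                    # two host links, as described in the announcement
ENCODING_EFFICIENCY = 128 / 130  # PCIe 3.0 and later use 128b/130b line encoding


def aggregate_raw_gbps(links=NUM_LINKS, lanes=LANES_PER_LINK,
                       rate=GT_PER_SEC_PER_LANE):
    """Raw signalling rate in Gb/s, one direction, summed over all links."""
    return links * lanes * rate


def aggregate_usable_gbps(links=NUM_LINKS, lanes=LANES_PER_LINK,
                          rate=GT_PER_SEC_PER_LANE):
    """Rate after line-encoding overhead, before any protocol overhead."""
    return aggregate_raw_gbps(links, lanes, rate) * ENCODING_EFFICIENCY


if __name__ == "__main__":
    print(f"raw:    {aggregate_raw_gbps():.1f} Gb/s")
    print(f"usable: {aggregate_usable_gbps():.1f} Gb/s")
```

Under these assumptions the raw total comes to 512 Gb/s, and roughly 504 Gb/s remains after line encoding. Packet and protocol overhead would lower the achievable throughput further.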

|

|

by Rich Brueckner on (#4Y7CE)

In this video, NVIDIA’s Duncan Poole and Arm’s David Lecomber explain how the two companies accelerate the world’s fastest supercomputers. "At SC19, NVIDIA introduced a reference design platform that enables companies to quickly build GPU-accelerated Arm-based servers, driving a new era of high performance computing for a growing range of applications in science and industry. The reference design platform — consisting of hardware and software building blocks — responds to growing demand in the HPC community to harness a broader range of CPU architectures." The post NVIDIA and Arm look to accelerate HPC Worldwide appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y7CG)

Today European chip startup SiPearl announced it is moving into its operational phase. The company is now the 27th member of the European Processor Initiative (EPI) consortium. "By delivering supercomputing power, energy efficiency and backdoor-free security, the solutions that we are developing with support from the EPI members will enable Europe to gain its independence and, more importantly, to ensure its technological sovereignty on the market for high performance computing, which has become one of the key drivers for economic growth," explains Philippe Notton, SiPearl’s CEO. The post European SiPearl Startup designing microprocessor for Exascale appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y7CJ)

Dr. Eng Lim Goh gave this talk at HPE Discover More. He describes inspiring real-world use cases that demonstrate the transformative power of AI and HPC. Hear how the next wave of Machine Intelligence is uncovering value in data like never before to solve some of the world’s most significant challenges. The post AI & Blockchain: Internet of smarter things appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y6VJ)

Hyperion Research has posted their preliminary agenda for the upcoming HPC User Forum. The event takes place March 30 - April 1 in Princeton, New Jersey. "The HPC User Forum was established in 1999 to promote the health of the global HPC industry and address issues of common concern to users (www.hpcuserforum.com). The organization has grown to 150 members." The post Registration Opens for HPC User Forum in Princeton, NJ appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y5SN)

Steve Conway from Hyperion Research gave this talk at the Panasas User Group. "Hyperion Research conducted an industry survey to better understand TCO vs. initial costs/other purchase criteria, the benefits of greater simplicity, and buyer/user perceptions of major vendors of HPC storage systems." The post The State of HPC Storage – Requirements & Challenges appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y5SJ)

In this AI Podcast, Doina Precup describes why there doesn't need to be a gender gap in computer science education. An associate professor at McGill University and research team lead at DeepMind, Precup shares her personal experiences, along with the AI4Good Lab she co-founded to give women more access to machine learning training. The post Podcast: AI4Good Lab Empowers Women in Computer Science appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y5SM)

Today Appentra released Parallelware Trainer 1.4, an interactive, real-time code editor with features that facilitate the learning, usage, and implementation of parallel programming by understanding how and why sections of code can be parallelized. "Appentra strives to make parallel programming easier, enabling everyone to make the best use of parallel computing hardware from the multi-cores in a laptop to the fastest supercomputers. With this new release, we push Parallelware Trainer further towards that goal." The post Appentra Releases Parallelware Trainer 1.4 appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y4H8)

Dr. Suryachandra Rao from MoES gave this talk at the DDN User Group. "The Ministry of Earth Sciences (MoES) is mandated to provide services for weather, climate, ocean and coastal state, hydrology, seismology, and natural hazards; to explore and harness marine living and non-living resources in a sustainable way and to explore the three poles (Arctic, Antarctic and Himalayas). MoES recently inaugurated a new supercomputer at the Indian Institute of Tropical Meteorology (IITM) in Pune, dedicated to improving weather and climate forecasts across the country." The post Video: Data Driven Ocean & Atmosphere Sciences at MoES in India appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y4HA)

Researchers at ORNL are trying out their HPC codes on Wombat, a test bed cluster based on production Marvell ThunderX2 CPUs and NVIDIA V100 GPUs. The small cluster provides a platform for testing NVIDIA’s new CUDA software stack purpose-built for Arm CPU systems. "Eight teams successfully ported their codes to the new system in the days leading up to SC19. In less than 2 weeks, eight codes in a variety of scientific domains were running smoothly on Wombat." The post ORNL Tests Arm-based Wombat Platform with NVIDIA GPUs appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y3JC)

In this video from the DDN booth at SC19, Scot Schultz from Mellanox presents: Connecting Visions: HDR 200Gb/sec InfiniBand. "HDR 200Gb/s InfiniBand accelerates 31 percent of new InfiniBand-based systems on the current TOP500, including the fastest TOP500 supercomputer built this year. The results also highlight InfiniBand’s continued position in the top three supercomputers in the world and acceleration of six of the top 10 systems. Since the TOP500 List release in June 2019, InfiniBand’s presence has increased by 12 percent, now accelerating 141 supercomputers on the List." The post HDR 200Gb/sec InfiniBand for HPC & AI appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y3JE)

The Norwich Biosciences Institute Partnership (NBIP) is seeking a new Head of Research Computing in our Job of the Week. "We have an exciting opportunity for a strategic High-Performance Computing (HPC) leader to take accountability for the future development of research computing across one of the UK’s foremost research organisations. The Head of Research Computing will set the future technology strategy and service model for mission-critical research IT services at NBI." The post Job of the Week: Head of Research Computing at the Norwich Biosciences Institute appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y286)

Today Groq announced that the company's tensor processing hardware is now available on the Nimbix Cloud for select partners. The Groq processor is designed specifically for the performance requirements of computer vision, machine learning and other AI-related workloads, and is the first architecture in the world capable of 1 PetaOp/s performance on a single chip. Nimbix is the leading HPC cloud platform provider and offers purpose-built cloud computing for the world's most demanding workloads. The post Groq Hardware Now Available on Nimbix Cloud for AI Workloads appeared first on insideHPC.

|

|

by staff on (#4Y288)

In this Sciencetown podcast, we follow experts from around the world to the epicenter of supercomputing: the annual North American supercomputing conference, SC19. We ask them to weigh in on how the future of computers, artificial intelligence, machine learning and more are coming together to shape the way we explore and understand our world. The post Podcast: Sciencetown Investigates Extreme Computing at SC19 appeared first on insideHPC.

|

|

by staff on (#4Y1RD)

Today Mellanox announced that 200 Gigabit HDR InfiniBand has been selected by the European Centre for Medium-Range Weather Forecasts (ECMWF) to accelerate their new world-leading supercomputer, based on Atos’ latest BullSequana XH2000 technology. By leveraging HDR InfiniBand's fast data throughput, extremely low latency, and smart In-Network Computing engines, ECMWF will enhance, speed up, and increase the accuracy of weather forecasting and predictions. The post 200G HDR InfiniBand to Accelerate Weather Forecasting at ECMWF appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y28A)

Supercomputing Frontiers Europe 2020 is a platform for thought leaders from both academia and industry to interact and discuss visionary ideas, the most important global trends and substantial innovations in supercomputing. This year, SCFE 2020 will take place March 23-26, 2020 in Warsaw, Poland. The post Meet the Future at the Supercomputing Frontiers Europe 2020 Conference appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y28C)

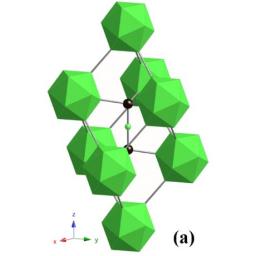

Researchers at the University of Florida are using XSEDE supercomputers to unlock the mysteries of boron carbide, one of the hardest materials on earth. The material is also very lightweight, which explains why it has been used in making vehicle armor and body protection for soldiers. The research, which primarily used the Comet supercomputer at the San Diego Supercomputer Center along with the Stampede and Stampede2 systems at the Texas Advanced Computing Center, may provide insight into better protective mechanisms for vehicle and soldier armor after further testing and development. The post Supercomputing the Mysteries of Boron Carbide appeared first on insideHPC.

|

|

by staff on (#4Y0D3)

Today Exxact Corporation announced the company is joining the Panasas Accelerate Channel Partner Program. Exxact will become a key distributor and integrator for the Panasas ActiveStor Ultra product line, providing installation, configuration and support services globally. The move broadens Exxact HPC solutions that can be customized to industry requirements and scaled to multiple sized organizations. "By partnering with Panasas, an industry leader in HPC storage, we are able to offer our customers a top tier, fully integrated and turnkey HPC storage solution, and ActiveStor Ultra with PanFS is a perfect fit for this," said Jason Chen, Vice President at Exxact Corporation. "ActiveStor Ultra’s focus on HPC performance, while also offering reliability and simplicity in a single package gives our customers an HPC storage solution that’s easy to deploy and manage." The post Exxact Teams with Panasas for Turnkey HPC Storage Clusters appeared first on insideHPC.

|

|

by staff on (#4Y0D4)

Today ScaleMP announced that it has achieved the best results ever recorded on the SPEC CPU 2017 benchmark for any system size of four processors and higher. ScaleMP’s results for 32-, 24- and 16-processor systems now hold the top three positions for best results ever. "We are extremely pleased with these new benchmark results, which show superiority across the board," said Shai Fultheim, founder and CEO of ScaleMP. "Customers can now scale their systems on demand for more computing or more memory, pay only for what they need, and be sure that they are buying the best and most cost-effective solution available that delivers the best performance." The post ScaleMP and AMD Benchmark Best-in-Class Large-System Performance on SPEC CPU 2017 appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y0D5)

In this podcast, the Radio Free HPC team lays out their tech predictions for 2020. "Henry predicts that we’ll see a RISC-V based supercomputer on the TOP500 list by the end of 2020 – gutsy call on that. This is a double down on a bet that Dan and Henry have, so he’s reinforcing his position. Dan also sees 2020 as the 'Year of the FPGA.'" The post 2020 Predictions from Radio Free HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#4Y0D6)

James Moawad and Greg Nash from Intel gave this talk at ATPESC 2019. "FPGAs are a natural choice for implementing neural networks as they can handle different algorithms in computing, logic, and memory resources in the same device. Performance is faster compared to competitive implementations, as the user can hardcode operations into the hardware. Software developers can use the OpenCL device C level programming standard to target FPGAs as accelerators to standard CPUs without having to deal with hardware level design." The post Video: FPGAs and Machine Learning appeared first on insideHPC.

|

|

by Rich Brueckner on (#4XZT4)

Today WekaIO announced that Genomics England (GEL) has selected the Weka File System (WekaFS) to accelerate genomics research for the 5 Million Genomes Project. "We needed a modern storage solution that could scale to 100s of petabytes while maintaining performance scaling, and it had to be simple to manage at that scale. With its clever combination of flash for performance and object store for scale, Weka has proven to be a great solution." The post WekaIO Accelerates 5 Million Genomes Project at Genomics England appeared first on insideHPC.

|

|

by Rich Brueckner on (#4XYR0)

Early career researchers and university students are encouraged to take advantage of the ISC Travel Grant and the ISC Student Volunteer programs. The programs are intended to enable these participants to attend the ISC 2020 conference in Frankfurt, Germany, from June 21-25. "The ISC High Performance Travel Grant Program is open to early career researchers and students who have never been to the conference and wish to be a part of it this year. For recipients traveling from Europe or North Africa, the maximum funding is 1500 euros per person; for the rest of the world, it is 2500 euros per person. ISC Group will also provide the grant recipients free registration for the entire conference, as well as mentorship during the event. The purpose of the grant is to enable university students and researchers who are highly interested in acquiring high performance computing knowledge and skills, but lack the necessary resources to obtain them, to participate in the ISC High Performance conference series." The post Apply Now for ISC Travel Grant and Student Volunteer Programs appeared first on insideHPC.

|

|

by Rich Brueckner on (#4XYR1)

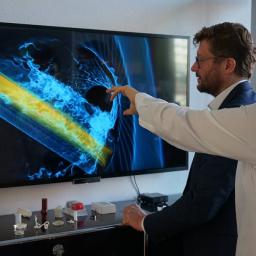

People with mechanical heart valves need blood thinners on a daily basis, because they have a higher risk of blood clots and stroke. With the help of the Piz Daint supercomputer, researchers at the University of Bern have identified the root cause of blood turbulence leading to clotting. Design optimization could greatly reduce the risk of clotting and enable these patients to live without life-long medication. The post Reducing the risk of blood clots by supercomputing turbulent flow appeared first on insideHPC.

|

|

by staff on (#4XTT3)

Today Atos announced an €80 million contract with the European Centre for Medium-Range Weather Forecasts (ECMWF) to supply its BullSequana XH2000 supercomputer. Powered by AMD EPYC 7742 processors, the new system will be one of the most powerful meteorological supercomputers in the world. "The Atos supercomputer will allow ECMWF to run its world-wide 15-day ensemble prediction at a higher resolution of about 10km, reliably predicting the occurrence and intensity of extreme weather events ahead of time." The post AMD to Power Atos Supercomputer at ECMWF appeared first on insideHPC.

|

|

by Rich Brueckner on (#4XYR3)

Dr. Jack Dongarra from the University of Tennessee has been named to receive the IEEE Computer Society’s 2020 Computer Pioneer Award. "The award is given for significant contributions to early concepts and developments in the electronic computer field, which have clearly advanced the state-of-the-art in computing. Dongarra is being recognized 'for leadership in the area of high-performance mathematical software.'" The post Jack Dongarra to Receive 2020 IEEE Computer Pioneer Award appeared first on insideHPC.

|

|

by staff on (#4XYR4)

Lex Fridman gave this talk as part of the MIT Deep Learning series. "This lecture is on the most recent research and developments in deep learning, and hopes for 2020. This is not intended to be a list of SOTA benchmark results, but rather a set of highlights of machine learning and AI innovations and progress in academia, industry, and society in general." The post Deep Learning State of the Art in 2020 appeared first on insideHPC.

|

|

by staff on (#4XYR6)

Vortex Bladeless presented the company’s design for a new wind energy technology. One of the key characteristics of this system is the reduction of mechanical elements that can be worn by friction. The company developed the technology using CFD tools provided by Altair, which helped the company study both the fluid-structure interaction and the behaviour of the magnetic fields in the alternator. The post Advanced simulation tools for Vortex Bladeless wind power appeared first on insideHPC.

|

|

by staff on (#4XX0Z)

Incooling has adopted GIGABYTE’s overclockable R161 Series server platform as the test-bed and prototype model for a new class of two-phase liquid cooled overclockable servers designed for the high frequency trading market. "Incooling's technology is capable of pushing temperatures far below the traditional data center air temperatures, unlocking a new class of turbocharged servers. It does this by leveraging specialized refrigerants using phase-change cooling, inside a pressure-controlled loop. This is a highly efficient method that allows exchange of the heat from the chip with the datacenter air with far less thermal resistance. Coupled with the R161 overclockable server from GIGABYTE, Incooling’s solutions are able to push performance further than ever before. First system tests showed up to 20°C lower core temperatures contributing up to 10% increase in boost clock-speed whilst lowering total power draw by 200 watts." The post GIGABYTE and Incooling to Develop Two-Phase Liquid Cooled Servers for High Frequency Trading appeared first on insideHPC.

|

|

by staff on (#4XX10)

The 2020 HiPEAC conference will kick off next week in Bologna to showcase the innovative made-in-Europe technologies driving computing systems from the edge to the cloud. This year, the conference will dive into radical new developments in European processor technology, including open source hardware, while building on HiPEAC’s long-established reputation for cutting-edge research into heterogeneous architectures, cross-cutting artificial intelligence themes, security and more. The post 2020 HiPEAC Conference to Showcase European Computing Technologies appeared first on insideHPC.

|

|

by Rich Brueckner on (#4XWQC)

The 19th Workshop on High Performance Computing in Meteorology has issued its Call for Abstracts. With a theme entitled "Towards Exascale Computing in Numerical Weather Prediction," the event takes place September 14-18 in Bologna, Italy. "The workshop will consist of keynote talks from invited speakers, 20-30 minute presentations, a panel discussion and a visit to the new data centre. Our aim is to provide a forum where users from our Member States and around the world can report on recent experience and achievements in the field of high performance computing; plans for the future and requirements for computing power will also be presented." The post Call for Abstracts: 19th Workshop on High Performance Computing in Meteorology appeared first on insideHPC.

|

|

by Rich Brueckner on (#4XWBJ)

The Spanish Supercomputing Network (RES) closed 2019 with a record number of processor hours allocated to Spanish researchers in areas including Astronomy, Space and Earth Sciences, Biomedicine and Life Sciences, Engineering, Physics, Mathematics, Solid State Chemistry, and Biological Systems Chemistry. Specifically, in 2019, 583.21 million processor hours were made available to Spanish researchers on the 12 supercomputers that are part of this Singular Scientific-Technical Infrastructure. This represents an 80% increase over 2018, almost doubling the total number of hours. The post Spanish Supercomputing Network serves up record computing hours for Science appeared first on insideHPC.
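
For a sense of scale, the 2018 total implied by these two numbers can be worked out directly. The short sketch below does the arithmetic, assuming the 80% figure is a year-over-year increase relative to the 2018 total; the implied 2018 value is derived here and is not stated in the announcement, and the names are illustrative.

```python
# Derive the prior-year total implied by a current total and a stated increase.
# Assumption: "80% increase" means hours_2019 = hours_2018 * (1 + 0.80).

HOURS_2019_MILLIONS = 583.21  # processor hours allocated in 2019 (from the text)
INCREASE_OVER_2018 = 0.80     # stated year-over-year increase


def implied_previous_total(current, fractional_increase):
    """Return the earlier total that grows to `current` after the given increase."""
    return current / (1.0 + fractional_increase)


hours_2018 = implied_previous_total(HOURS_2019_MILLIONS, INCREASE_OVER_2018)
growth_factor = HOURS_2019_MILLIONS / hours_2018

if __name__ == "__main__":
    print(f"implied 2018 total: {hours_2018:.1f} million processor hours")
    print(f"growth factor:      {growth_factor:.2f}x")
```

Under that reading, 2018 comes out at roughly 324 million processor hours, and the growth factor of 1.8 is consistent with "almost doubling".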

|

|

by Rich Brueckner on (#4XWQD)

In this Let’s Talk Exascale podcast, Katrin Heitmann from Argonne describes how the ExaSky project may be one of the first applications to reach exascale levels of performance. "Our current challenge problem is designed to run across the full machine [on both Aurora and Frontier], and doing so on a new machine is always difficult," Heitmann said. "We know from experience, having been first users in the past on Roadrunner, Mira, Titan, and Summit; and each of them had unique hurdles when the machine hit the floor." The post Podcast: Will the ExaSky Project be First to Reach Exascale? appeared first on insideHPC.

|

|

by Rich Brueckner on (#4XVAG)

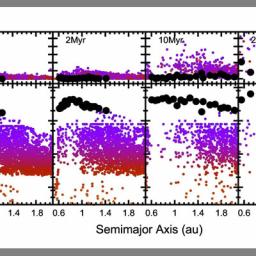

Researchers are using a novel approach to solving the mysteries of planet formation with the help of the Comet supercomputer at the San Diego Supercomputer Center on the UC San Diego campus. The modeling enabled scientists at the Southwest Research Institute (SwRI) to implement a new software package, which in turn allowed them to create a simulation of planet formation that provides a new baseline for future studies of this mysterious field. "The problem of planet formation is to start with a huge amount of very small dust that interacts on super-short timescales (seconds or less), and the Comet-enabled simulations finish with the final big collisions between planets that continue for 100 million years or more." The post Supercomputing Planet Formation at SDSC appeared first on insideHPC.

|

|

by Rich Brueckner on (#4XVAJ)

Today TMGcore announced that Facility Solutions Group (FSG) will be the first OTTO-Ready Electrical Contractor. OTTO is a line of highly efficient, high density, modular two-phase immersion cooled data center platforms with a fully integrated power, cooling, racking, networking and management experience, backed by partnerships with numerous industry leaders. "We first worked with FSG in 2018 when we were building our own headquarters and data center in Plano, Texas," said John-David Enright, CEO of TMGcore. "We trust them to handle our electrical needs daily and, therefore, we trust they can do the same for our customers now, helping them decide upon the best electrical solutions for the installation and creation of ideal environments for their OTTO platforms." The post TMGcore teams with FSG as Certified OTTO Ready Electrical Contractor appeared first on insideHPC.

|

|

by Rich Brueckner on (#4XV0V)

The MSST 2020 Mass Storage Conference has issued its Call for Papers. The event will be held May 4-8, 2020 at Santa Clara University, with the Research Track taking place May 7 and 8. "The conference focuses on current challenges and future trends in storage technologies. MSST 2020 will include a day of tutorials, two days of invited papers, and two days of peer-reviewed research papers. The conference will be held, once again, on the beautiful campus of Santa Clara University, in the heart of Silicon Valley." The post Call for Papers: MSST 2020 Mass Storage Conference in Santa Clara appeared first on insideHPC.

|

|

by Rich Brueckner on (#4XV0X)

In this video from ATPESC 2019, Rob Schreiber from Cerebras Systems looks back at historical computing advancements, Moore's Law, and what happens next. "A recent report by OpenAI showed that, between 2012 and 2018, the compute used to train the largest models increased by 300,000X. In other words, AI computing is growing 25,000X faster than Moore’s law at its peak. To meet the growing computational requirements of AI, Cerebras has designed and manufactured the largest chip ever built." The post Video: The Parallel Computing Revolution Is Only Half Over appeared first on insideHPC.

|

|

by Rich Brueckner on (#4XSRQ)

Darrin Johnson from NVIDIA gave this talk at the DDN User Group. "The NVIDIA DGX SuperPOD is a first-of-its-kind artificial intelligence (AI) supercomputing infrastructure that delivers groundbreaking performance, deploys in weeks as a fully integrated system, and is designed to solve the world's most challenging AI problems. When combined with DDN’s A3I data management solutions, NVIDIA DGX SuperPOD creates a real competitive advantage for customers looking to deploy AI at scale." The post NVIDIA DGX SuperPOD: Instant Infrastructure for AI Leadership appeared first on insideHPC.

|

|

by Rich Brueckner on (#4XSXQ)

Today Los Alamos National Laboratory announced that it is joining the cloud-based IBM Q Network as part of the Laboratory’s research initiative into quantum computing, including developing quantum computing algorithms, conducting research in quantum simulations, and developing education tools. "Joining the IBM Q Network will greatly help our research efforts in several directions, including developing and testing near-term quantum algorithms and formulating strategies for mitigating errors on quantum computers," said Irene Qualters, associate laboratory director for Simulation and Computation at Los Alamos. The post Los Alamos National Laboratory joins IBM Q Network for quantum computing appeared first on insideHPC.

|

|

by Rich Brueckner on (#4XRYK)

In this Let’s Talk Exascale podcast, Elaine Raybourn from Sandia National Laboratories describes how Productivity Sustainability Improvement Planning (PSIP) is bringing software development teams together at the Exascale Computing Project. PSIP brings software development activities together and enables partnerships and the adoption of best practices across aggregate teams. The post Podcast: PSIP Brings Software Development teams together at the Exascale Computing Project appeared first on insideHPC.

|

|

by Rich Brueckner on (#4XRYN)

UC San Diego is seeking a Research Systems Integration Engineer in our Job of the Week. "Information Technology Services uses world-class services and technologies to empower UC San Diego's mission to transform California and the world as a student-centered, research-focused, service-oriented public university. As a strategic member of the UC San Diego community, IT Services embraces innovation in their delivery of IT services, infrastructure, applications, and support. IT Services is customer-focused and committed to collaboration, continuous improvement, and accountability." The post Job of the Week: Research Systems Integration Engineer at UC San Diego appeared first on insideHPC.

|

|

by staff on (#4XQFR)

Today Sano Genetics announced the company is using Lifebit CloudOS to power their free DNA sequencing platform, achieving a 35% increase in the speed of imputation analyses. Imputation is a statistical technique that fills in the gaps between sites measured by genotyping arrays, and is very useful for genetic genealogy and other forms of ‘citizen science’. Any participant who uploads their DTC genetic data to the Sano platform can download their imputed data within about 15 minutes. The post Sano Genetics Deploys Lifebit CloudOS for Direct-to-Consumer Genetics appeared first on insideHPC.

|

|

by staff on (#4XQFS)

MIRIS has entered into an agreement with LiquidCool Solutions (LCS), a world leader in rack-based immersion cooling technology for datacenters. The agreement gives MIRIS the exclusive right to distribute LCS technology on heat recovery projects. "Together we will develop the next generation of datacenter racks, with the highest density and the most effective heat recovery that the industry has ever seen. LCS brings to the plan a technology that is uniquely able to recapture more than 90% of rack input energy in the form of a 60 degree C liquid, and an emphasis will be placed on reusing valuable energy that would otherwise be wasted." The post MIRIS Teams with LiquidCool Solutions for Datacenter Heat Recovery appeared first on insideHPC.

|