
by staff on (#4XQFV)

Researchers at the Department of Energy’s Oak Ridge National Laboratory have developed a quantum chemistry simulation benchmark to evaluate the performance of quantum devices and guide the development of applications for future quantum computers. “This work is a critical step toward a universal benchmark to measure the performance of quantum computers, much like the LINPACK metric is used to judge the fastest classical computers in the world.” The post ORNL Researchers Develop Quantum Chemistry Simulation Benchmark appeared first on insideHPC.

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Field | Value |
|---|---|
| Link | https://insidehpc.com/ |
| Feed | http://insidehpc.com/feed/ |
| Updated | 2026-03-02 17:00 |

by staff on (#4XQFX)

Thanks to clouds, the latest climate models predict more global warming than their predecessors. Researchers at LLNL, in collaboration with colleagues from the University of Leeds and Imperial College London, have found that the latest generation of global climate models predicts more warming in response to increasing carbon dioxide. "If global warming leads to fewer or thinner clouds, it causes additional warming above and beyond that coming from carbon dioxide alone. In other words, an amplifying feedback to warming occurs." The post Latest Climate Models Predict Thinner Clouds and More Global Warming appeared first on insideHPC.


by Rich Brueckner on (#4XP58)

Microway has deployed six NVIDIA DGX-2 supercomputer systems at Oregon State University. As an NVIDIA Partner Network HPC Partner of the Year, Microway installed the DGX-2 systems, integrated software, and transferred its extensive AI operational knowledge to the University team. "The University selected the NVIDIA DGX-2 platform for its immense power, technical support services, and the Docker images with NVIDIA's NGC containerized software. Each DGX-2 system delivers an unparalleled 2 petaFLOPS of AI performance." The post Microway Deploys NVIDIA DGX-2 supercomputers at Oregon State University appeared first on insideHPC.


by Rich Brueckner on (#4XNV4)

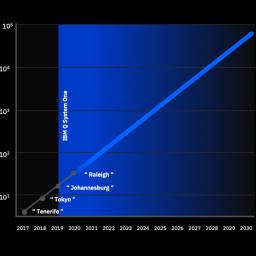

All qubits are not created equal. Over at the IBM Blog, Jerry Chow and Jay Gambetta write that the company's new 28-qubit Raleigh quantum computer has achieved IBM’s goal of doubling its Quantum Volume. The development marks a shift from experimentation toward building quantum computers with a systems approach. The post IBM Doubles Quantum Volume with 28 Qubit Raleigh System appeared first on insideHPC.


by staff on (#4XNJ5)

In October, BittWare and Achronix announced a strategic collaboration to introduce the S7t-VG6 PCIe accelerator, a PCIe card sporting the new Achronix 7nm Speedster7t FPGA. This new generation of accelerator products offers a range of capabilities, including low-cost and highly flexible GDDR6 memory that aims to offer HBM-class memory bandwidth, high-performance machine learning processors, and a new 2D network-on-chip for high-bandwidth, energy-efficient data movement. The post New Achronix Bittware FPGA Accelerator Speeds Cloud, AI, and Machine Learning appeared first on insideHPC.


by Rich Brueckner on (#4XNJ6)

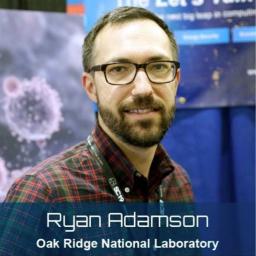

In this Let’s Talk Exascale podcast, Ryan Adamson from Oak Ridge National Laboratory describes how his role at the Exascale Computing Project revolves around software deployment and continuous integration at DOE facilities. “Each of the scientific applications that we have depends on libraries and underlying vendor software,” Adamson said. “So managing dependencies and versions of all of these different components can be a nightmare.” The post Podcast: Software Deployment and Continuous Integration for Exascale appeared first on insideHPC.


by staff on (#4XNJ8)

In this special guest feature, Robert Roe from Scientific Computing World writes that it is not always clear which HPC technology provides the most energy-efficient solution for a given application. "You need to understand your application as somebody that is coming into this from a greenfield perspective. If your application doesn’t parallelize well, or if it needs higher frequency processors, then the best thing you can do is pick the right processor and the right number of them so you are not wasting power on CPU cycles that are not being used." The post Technologies for Energy Efficient Supercomputing appeared first on insideHPC.
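To make the point about applications that do not parallelize well concrete, here is a minimal sketch using Amdahl's law, a standard scaling model that the article itself does not name. It assumes a fixed serial fraction, no communication overhead, and identical processors; the 95% parallel fraction and the processor counts are hypothetical values chosen for illustration.

```python
# Amdahl's law sketch: how idle processor time grows when a code does not
# parallelize perfectly. Assumptions: fixed serial fraction, no communication
# overhead, identical processors. PARALLEL_FRACTION is a hypothetical value.

def amdahl_speedup(parallel_fraction: float, procs: int) -> float:
    """Speedup over one processor when only parallel_fraction of the work scales."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / procs)

def idle_share(parallel_fraction: float, procs: int) -> float:
    """Fraction of aggregate processor time not spent on useful work (1 - efficiency)."""
    return 1.0 - amdahl_speedup(parallel_fraction, procs) / procs

PARALLEL_FRACTION = 0.95  # hypothetical: 95% of the runtime parallelizes

for procs in (1, 8, 64, 512):
    speedup = amdahl_speedup(PARALLEL_FRACTION, procs)
    idle = idle_share(PARALLEL_FRACTION, procs)
    print(f"{procs:4d} procs: speedup {speedup:6.2f}, idle share {idle:5.1%}")
```

Under these assumptions the speedup can never exceed 1 / (1 - 0.95) = 20, so at 512 processors more than 95% of the aggregate processor time sits idle. That idle capacity is the wasted power the quote warns about.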


by staff on (#4XM2G)

IDC MarketScape has recognized WekaIO as a Major Player in file-based storage. According to the 2019 IDC MarketScape, IDC believes that file-based storage (FBS) will continue to evolve to address the needs of traditional and next-generation workloads. The IDC MarketScape noted, “WekaFS was developed from the ground up to utilize the performance of NVMe flash technology to deliver the optimum performance and minimum latency for demanding and unpredictable AI workloads. WekaIO's customers claim satisfaction and that the offering holds to performance promises made by the vendor.” The post WekaIO Named Major Player in File-Based Storage by IDC MarketScape appeared first on insideHPC.


by Rich Brueckner on (#4XM2H)

Today IBM and Delta Air Lines announced a multi-year collaborative effort to explore the potential capabilities of quantum computing to transform experiences for customers and employees. "Delta joins more than 100 clients already experimenting with commercial quantum computing solutions alongside classical computers from IBM to tackle problems like risk analytics and options pricing, advanced battery materials and structures, manufacturing optimization, chemical research, logistics and more." The post Delta Partners with IBM to Explore Quantum Computing appeared first on insideHPC.


by Rich Brueckner on (#4XM2K)

Today AI chip startup Groq announced that its new Tensor processor has achieved 21,700 inferences per second (IPS) for ResNet-50 v2 inference. Groq’s level of inference performance exceeds that of other commercially available neural network architectures, with throughput that more than doubles the ResNet-50 score of the incumbent GPU-based architecture. ResNet-50 is an image-classification neural network whose inference throughput is often used as a standard benchmark for measuring the performance of machine learning accelerators. The post Groq AI Chip Benchmarks Leading Performance on ResNet-50 Inference appeared first on insideHPC.
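As a worked example of what the headline figure means, the sketch below converts steady-state throughput into an average interval between completed inferences and spells out what "more than doubles" implies about the incumbent's score. The helper name is hypothetical, and the interval is not single-image latency, which the announcement does not report and which depends on batching.

```python
# Throughput arithmetic for the announced ResNet-50 figure.
# Assumption: steady-state throughput; the mean interval between completed
# inferences is NOT the same as per-image latency.

GROQ_IPS = 21_700  # inferences per second, as announced

def mean_interval_us(inferences_per_second: float) -> float:
    """Average time between completed inferences, in microseconds (hypothetical helper)."""
    if inferences_per_second <= 0:
        raise ValueError("throughput must be positive")
    return 1_000_000 / inferences_per_second

# "More than doubles" the incumbent means the incumbent scored below half of 21,700.
incumbent_upper_bound = GROQ_IPS / 2

print(f"mean interval: {mean_interval_us(GROQ_IPS):.1f} us")
print(f"implied incumbent score: below {incumbent_upper_bound:,.0f} IPS")
```

At 21,700 IPS a result completes about every 46 microseconds on average, and the incumbent GPU score implied by the claim is below 10,850 IPS.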


by staff on (#4XKT2)

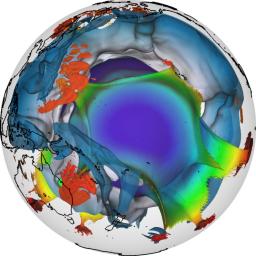

Scientists are taking advantage of a $2.5 million NSF grant to develop a new framework for integrated geodynamic models that simulate the Earth's molten core. “Most physical phenomena can be described by partial differential equations that explain energy balances or loss,” said Heister, an associate professor of mathematical sciences who will receive $393,000 of the overall funding. “My geoscience colleagues will develop the equations to describe the phenomena and I’ll write the algorithms that solve their equations quickly and accurately.” The post Simulating the Earth’s mysterious mantle appeared first on insideHPC.


by Rich Brueckner on (#4XM2N)

In this podcast, the Radio Free HPC team looks at how quantum computing is overhyped and underestimated at the same time. "The episode starts out with Henry being cranky. It also ends with Henry being cranky. But between those two events, we discuss quantum computing and Shahin’s trip to the Q2B quantum computing conference in San Jose." The post Podcast: The Overhype and Underestimation of Quantum Computing appeared first on insideHPC.


by staff on (#4XJBY)

Today Atos announced a 4-year contract to supply its BullSequana XH2000 supercomputer to the University of Luxembourg. "The BullSequana XH2000 supercomputer will give researchers 1.5 times more computing capacity with a theoretical peak performance of 1.7 petaflops which will complement the existing supercomputing cluster. It will be equipped with AMD EPYC processors and Mellanox InfiniBand HDR technology, connected to a DDN storage environment." The post Atos to deploy AMD-powered AION Supercomputer at the University of Luxembourg appeared first on insideHPC.


by Rich Brueckner on (#4XJBZ)

Today Hyperion Research announced that the company is staffing up with two new analysts for continuing growth and new business opportunities. The analyst firm provides thought leadership and practical guidance for users, vendors, and other members of the HPC community by focusing on key market and technology trends across government, industry, commerce, and academia. The post Hyperion Research Expands Analyst Team appeared first on insideHPC.


by Rich Brueckner on (#4XJC1)

Dr. Alice-Agnes Gabriel from LMU is the winner of the 2020 PRACE Ada Lovelace Award for HPC for her outstanding contributions to HPC in Europe. “Dr. Alice-Agnes Gabriel uses numerical simulations coupled to experimental observations to increase our understanding of the underlying physics of earthquakes. The work includes wide scales and can improve our knowledge and safety against these natural phenomena,” says Núria López, Chair of the PRACE Scientific Steering Committee. The post Dr. Alice-Agnes Gabriel from LMU wins Ada Lovelace Award for HPC appeared first on insideHPC.


by Rich Brueckner on (#4XJ1B)

In this video from SC19, Sam Mahalingam from Altair describes how the company is enhancing PBS Works software to ease the migration of HPC workloads to the Cloud. "Argonne National Laboratory has teamed with Altair to implement a new scheduling system that will be employed on the Aurora supercomputer, slated for delivery in 2021. PBS Works runs big — 50,000 nodes in one cluster, 10,000,000 jobs in a queue, and 1,000 concurrent active users." The post Altair PBS Works Steps Up to Exascale and the Cloud appeared first on insideHPC.


by staff on (#4XGSY)

Today Micron Technology announced that it has begun sampling DDR5 registered DIMMs, based on its industry-leading 1znm process technology, with key industry partners. DDR5, the most technologically advanced DRAM to date, will enable the next generation of server workloads by delivering more than an 85% increase in memory performance. DDR5 doubles memory density while improving reliability at a time when data center system architects are seeking to supply rapidly growing processor core counts with increased memory bandwidth and capacity. The post Micron steps up Memory Performance and Density with DDR5 appeared first on insideHPC.


by staff on (#4XGFT)

GIGABYTE is showcasing AI, Cloud, and Smart Applications this week at CES 2020 in Las Vegas. "GIGABYTE is renowned for its craftsmanship and dedication to innovating new technologies that are current with the time and helping humanity leap forward for more than 30 years. GIGABYTE's accomplishments in motherboards and graphics cards have set the standard for the industry to follow, and the quality and performance of its products have been the excellence that competitors look up to. GIGABYTE has leveraged the experience and know-how to establish a trusted reputation in data center expertise, and is responsible in supplying the hardware and support to some of the biggest companies involved in HPC and cloud & web hosting services, enabling their successes in the respective fields." The post GIGABYTE Brings AI and Cloud Solutions to CES 2020 appeared first on insideHPC.


by staff on (#4XGFW)

In this special guest feature, Joe Landman from Scalability.org writes that the move to cloud-based HPC is having some unexpected effects on the industry. "When you purchase a cloud HPC product, you can achieve productivity in time scales measurable in hours to days, where previously weeks to months was common. It cannot be overstated how important this is." The post Joe Landman on How the Cloud is Changing HPC appeared first on insideHPC.


by Rich Brueckner on (#4XG55)

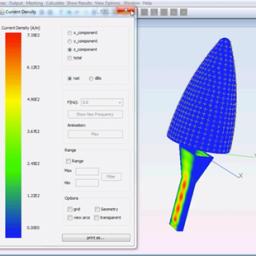

Today Altair announced the acquisition of newFASANT, offering leading technology in computational and high-frequency electromagnetics. “By combining its people and software into our advanced solutions offerings, we are clearly emerging as the dominant player in high-frequency electromagnetics – technology that is critical for solving some of the world’s toughest engineering problems.” The post Altair Acquires newFASANT for High-Frequency Electromagnetics appeared first on insideHPC.


by Rich Brueckner on (#4XG57)

Karen Willcox from the University of Texas gave this Invited Talk at SC19. "This talk highlights how physics-based models and data together unlock predictive modeling approaches through two examples: first, building a Digital Twin for structural health monitoring of an unmanned aerial vehicle, and second, learning low-dimensional models to speed up computational simulations for design of next-generation rocket engines." The post Predictive Data Science for Physical Systems: From Model Reduction to Scientific Machine Learning appeared first on insideHPC.


by Rich Brueckner on (#4XEYR)

NVIDIA will host a full-day HPC Summit this year at the GPU Technology Conference 2020. With a full day of plenary sessions and a developer track on high performance computing, the HPC Summit takes place Thursday, March 26, in San Jose, California. "The HPC Summit at GTC brings together HPC leaders, IT professionals, researchers, and developers to advance the state of the art of HPC. Explore content from different HPC communities, engage with experts, and learn about new trends and innovations." The post NVIDIA to host Full-Day HPC Summit at GPU Technology Conference appeared first on insideHPC.


by Rich Brueckner on (#4XEYT)

James Coomer from DDN gave this talk, “Analytics, Multicloud, and the Future of the Datasphere,” at the DDN User Group at SC19. “We are adding serious data management, collaboration and security capabilities to the most scalable file solution in the world. EXA5 gives you mission critical availability whilst consistently performing at scale,” said James Coomer, senior vice president of product, DDN. “Our 20 years’ experience in delivering the most powerful at-scale data platforms is all baked into EXA5. We outperform everything on the market and now we do so with unmatched capability.” The post Analytics, Multicloud, and the Future of the Datasphere appeared first on insideHPC.


by Rich Brueckner on (#4XDSF)

In this episode of Let’s Talk Exascale, Ulrike Meier Yang of LLNL describes the xSDK4ECP and hypre projects within the Exascale Computing Project. The increased number of libraries that exascale will need presents challenges. “The libraries are harder to build in combination, involving many variations of compilers and architectures, and require a lot of testing for new xSDK releases.” The post Podcast: Optimizing Math Libraries to Prepare Applications for Exascale appeared first on insideHPC.


by Rich Brueckner on (#4XDSH)

Stanford University is seeking a Research Computing Specialist in our Job of the Week. "As a Research Computing Specialist, you will draw on both deep technical knowledge and interpersonal skills to facilitate and accelerate academic research at the Stanford University Graduate School of Business (GSB). You will join a team of research analytics scientists, data engineers, and project managers on the Data, Analytics and Research Computing (DARC) team to support research at the GSB. Your clients will include GSB faculty and collaborators who are drawn from a broad spectrum of academic backgrounds, research interests, methodological specialties, and technical backgrounds. As a Research Computing Specialist, you will bring the ability to understand the ecosystem of research computing resources and partner with researchers to use these resources effectively. You should enjoy working directly with researchers, and be equally comfortable introducing novices to research computing systems and helping advanced users optimize their workflow." The post Job of the Week: Research Computing Specialist at Stanford University appeared first on insideHPC.


by Rich Brueckner on (#4XCG8)

In this Chip Chat podcast, Carey Kloss from Intel outlines the architecture and potential of the Intel Nervana NNP-T. He covers major issues such as memory and how the architecture was designed to avoid problems like becoming memory-locked, how the accelerator supports existing software frameworks like PaddlePaddle and TensorFlow, and what the NNP-T means for customers who want to keep an eye on power usage and lower their TCO. The post Podcast: Advancing Deep Learning with Custom-Built Accelerators appeared first on insideHPC.


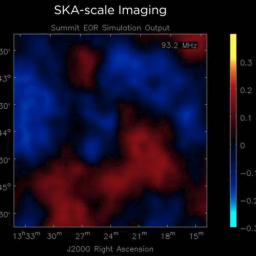

by Rich Brueckner on (#4XCGA)

Researchers are using the Summit supercomputer at ORNL to simulate the massive dataflow of the future SKA telescope. "The SKA simulation on Summit marks the first time radio astronomy data have been processed at such a large scale and proves that scientists have the expertise, software tools, and computing resources that will be necessary to process and understand real data from the SKA." The post Simulating SKA Telescope’s Massive Dataflow using the Summit Supercomputer appeared first on insideHPC.


by staff on (#4XB2E)

Sarah Middleton from GSK gave this talk at DOE CSGF 2019. "Advances in techniques for single-cell RNA sequencing have made it possible to profile gene expression in individual cells on a large scale, opening up the possibility to explore the heterogeneity of expression within and across cell types. This exciting technology is now being applied to almost every tissue in the human body, with some experiments generating expression profiles for more than 100,000 cells at a time." The post Single-Cell Sequencing for Drug Discovery: Applications and Challenges appeared first on insideHPC.


by staff on (#4XB2G)

In this Artificial Intelligence podcast with Lex Fridman, computer scientist Donald Knuth discusses Alan Turing, neural networks, machine learning, and other AI topics, from ant colonies to human cognition. The post Donald Knuth on Algorithms, Complexity, and The Art of Computer Programming appeared first on insideHPC.


by Rich Brueckner on (#4X9VK)

Scott Parker from Argonne gave this talk at ATPESC 2019. "Designed in collaboration with Intel and Cray, Theta is a 6.92-petaflops (Linpack) supercomputer based on the second-generation Intel Xeon Phi processor and Cray’s high-performance computing software stack. Capable of nearly 10 quadrillion calculations per second, Theta enables researchers to break new ground in scientific investigations that range from modeling the inner workings of the brain to developing new materials for renewable energy applications." The post Theta and the Future of Accelerator Programming at Argonne appeared first on insideHPC.


by Rich Brueckner on (#4X9JR)

"It’s become a tradition at Radio Free HPC to celebrate the holidays with a video of the holiday episode. The new logo launches the video: celebrating a truly family style dinner and dreaming big (maybe too creatively, however) as the team exchanges “if-only” gifts!" The post Radio Free HPC Rings in the New Year appeared first on insideHPC.


by Rich Brueckner on (#4X90G)

In this video from SC19, Thierry Pellegrino from Dell Technologies describes how the company's HPC solutions are designed to simplify and accelerate customers’ HPC and AI efforts. “There’s a lot of value in the data that organizations collect, and HPC and AI are helping organizations get the most out of this data,” said Thierry Pellegrino, vice president of HPC at Dell Technologies. “We’re committed to building solutions that simplify the use and deployment of these technologies for organizations of all sizes and at all stages of deployment.” The post Dell Technologies Democratizes HPC at SC19 appeared first on insideHPC.


by Rich Brueckner on (#4X8SJ)

The Barcelona Supercomputing Center has coordinated the manufacture of the first open source chip developed in Spain. "Lagarto is an important step in the search of the BSC, led by the center's director, Mateo Valero, to develop European computing technology. This project is based on the premise that the instruction set of the future processors must be open source to ensure transparency and minimize dependence." The post RISC-V Lagarto is First Open Source Chip Developed in Spain appeared first on insideHPC.


by Rich Brueckner on (#4X8SM)

Andrey Kudryavtsev from Intel gave this talk at the DDN User Group in Denver. "Intel Optane DC SSDs are proven technologies that have helped data centers remove storage bottlenecks and accelerate application performance for the past two years. Now, the launch of Intel Optane persistent memory and the integrated support of 2nd Generation Intel Xeon Scalable processors is further shrinking the gap between storage and DRAM. Together, Intel Optane DC persistent memory and Intel Optane SSDs deliver value across four crucial vectors." The post Reimagining HPC Compute and Storage Architecture with Intel Optane Technology appeared first on insideHPC.


by staff on (#4X7EB)

In this video, Oracle Sr. Director Leo Leung describes cloud computing options by workload in 2019. "Of course there's variance when it comes to clock speeds, when it comes to the amount of memory versus storage is on board. But generally they fall in these families: CPU based instances that have storage onboard, CPU instances that require network storage, and GPU instances that require network storage." The post Cloud Compute in 2019: Workloads and Options appeared first on insideHPC.


by staff on (#4X76M)

In this video, scientists describe IBM GRAF, a powerful new global weather forecasting system that will provide the most accurate local weather forecasts ever seen worldwide. The IBM Global High-Resolution Atmospheric Forecasting System (GRAF) is the first hourly-updating commercial weather system that is able to predict something as small as thunderstorms globally. Compared to existing models, it will provide a nearly 200% improvement in forecasting resolution for much of the globe (from 12 to 3 sq km). The post Video: Democratizing the World’s Weather Data with IBM GRAF appeared first on insideHPC.


by Rich Brueckner on (#4X67B)

In this Chip Chat podcast, Intel's Alexei Bastidas describes the technology behind making maps from satellite images. "Good maps rely on good data. The Red Cross’ Missing Maps Project leverages AI to provide governments and aid workers with the tools they need to navigate disasters like hurricanes and floods. Using a wealth of satellite imagery and machine learning, the Missing Maps Project is working to make villages, roads, and bridges more accessible in the wake of devastation." The post Mapping Disasters with Artificial Intelligence appeared first on insideHPC.


by Rich Brueckner on (#4X67D)

Adaptive Computing has released NODUS Cloud OS 4.0 to help organizations simplify the deployment of cloud-hosted resources and gain access to the virtually unlimited capacity of High-Performance Computing in the cloud. In addition to offering enhanced cloud bursting configurations and capabilities, NODUS Cloud OS 4.0 is breaking down the barriers to entry into the world of advanced computing for corporations and organizations of any size. The post Adaptive Computing Releases NODUS Cloud OS 4.0 appeared first on insideHPC.


by Rich Brueckner on (#4X560)

Anders Ynnerman from Linköping University gave this invited talk at SC19. "This talk will present and demonstrate the NASA funded open source initiative, OpenSpace, which is a tool for space and astronomy research and communication, as well as a platform for technical visualization research. OpenSpace is a scalable software platform that paves the path for the next generation of public outreach in immersive environments such as dome theaters and planetariums." The post SC19 Invited Talk: OpenSpace – Visualizing the Universe appeared first on insideHPC.


by Rich Brueckner on (#4X562)

ASRC Federal in Maryland is seeking a Sr. HPC System Administrator in our Job of the Week. "This position is a member of an HPC Support team focusing on storage hardware and software for two supercomputing clusters. You will specialize in both the monitoring and management of storage systems and storage-related network management for a large supercomputer." The post Job of the Week: Sr. HPC System Administrator at ASRC Federal appeared first on insideHPC.


by Rich Brueckner on (#4X417)

Intel's Mike Davies describes Intel’s Loihi, a neuromorphic research chip that contains over 130,000 “neurons.” "To be sure, neuromorphic computing isn’t biomimicry or about reconstructing the brain in silicon. Rather, it’s about understanding the processes and structures of neuroscience and using those insights to inform research, engineering, and technology." The post Podcast: The Evolution of Neuromorphic Computing appeared first on insideHPC.


by Rich Brueckner on (#4X3S3)

Andreas Dilger from Whamcloud gave this talk at LAD'19 in Paris. "For a number of years, a majority of the world’s 100 fastest supercomputers have relied on Lustre for their storage needs. If you need lots of data fast and reliably, and value the flexibility of using a wide choice of block storage and want to become part of a world-wide open community, then Lustre is a good choice." The post Video: Lustre Features and Future appeared first on insideHPC.


by Rich Brueckner on (#4X2PE)

A research team from the University of Pisa seeks your participation in their Data Science Survey. The survey is mainly addressed to those who work in the field of data science every day, either independently or for a company. "This questionnaire will provide the starting point for defining a common framework among those working in data science. We think we will make the results of the questionnaire public 100%, so that the community can benefit from it." The post Take the Data Science Survey from the University of Pisa appeared first on insideHPC.


by Rich Brueckner on (#4X2PG)

Glenn Lockwood from NERSC gave this talk at ATPESC 2019. "Systems are very different, but the APIs you use shouldn't be. Understanding performance is easier when you know what's behind the API. What really happens when you read or write some data?" The post Video: I/O Architectures and Technology appeared first on insideHPC.


by Rich Brueckner on (#4X1T3)

In this edition of Let's Talk Exascale, Christian Trott of Sandia National Laboratories shares insights about Kokkos, a programming model for numerous Exascale Computing Project applications. "Kokkos is a programming model being developed to deliver a widely usable alternative to programming in OpenMP. It is expected to be easier to use and provide a higher degree of performance portability, while integrating better into C++ codes." The post An Alternative to OpenMP and an On-Ramp to Future C++ Standards appeared first on insideHPC.


by Rich Brueckner on (#4X1T5)

Lin Gan from Tsinghua University gave this invited talk at SC19. "In recent years, many complex and challenging numerical problems, in areas such as climate modeling and earthquake simulation, have been efficiently resolved on Sunway TaihuLight, and have been successfully scaled to over 10 million cores with inspiringly good performance. To carefully deal with different issues such as computing efficiency, data locality, and data movement, novel optimizing techniques from different levels are proposed, including some specific ones that fit well with the unique architectural features of the system and significantly improve the performance." The post SC19 Invited Talk: HPC Solutions for Geoscience Application on the Sunway Supercomputer appeared first on insideHPC.


by Rich Brueckner on (#4X0XH)

Penguin Computing has upgraded the Corona supercomputer at LLNL with the newest AMD Radeon Instinct MI60 accelerators. Based on the Vega 7nm architecture, this upgrade is the latest example of Penguin Computing and LLNL’s ongoing collaboration aimed at providing additional capabilities to the LLNL user community. "With the MI60 upgrade, the cluster increases its potential PFLOPS peak performance to 9.45 petaFLOPS of FP32 peak performance. This brings significantly greater performance and AI capabilities to the research communities." The post Penguin Computing Upgrades Corona Cluster with 7nm AMD GPU Technology appeared first on insideHPC.


by staff on (#4X0PP)

A nationwide alliance of national labs, universities, and industry launched today to advance the frontiers of quantum computing systems designed to solve urgent scientific challenges and maintain U.S. leadership in next-generation information technology. “The Quantum Information Edge will accelerate quantum R&D by simultaneously pursuing solutions across a broad range of science and technology areas, and integrating these efforts to build working quantum computing systems that benefit the nation and science.” The post Sandia and LBNL to lead Quantum Information Edge Strategic Alliance appeared first on insideHPC.


by Rich Brueckner on (#4WZHG)

In this video from the DDN User Group at SC19, Gael Delbray from CEA presents: Optimizing Flash at Scale. "The major challenges that the HPC will face in the coming years are manifold, such as the development of hardware and software architectures able to deliver very high computing power, modelling methods combining different scales and physical models and the management of huge volumes of numerical data." The post Video: Optimizing Flash at Scale at CEA appeared first on insideHPC.


by Rich Brueckner on (#4WZ7T)

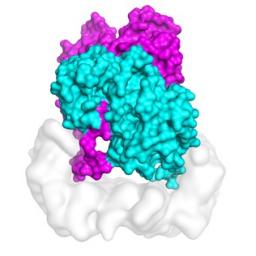

In this TACC podcast, UC Berkeley scientists describe how they are using powerful supercomputers to uncover the mechanism that activates cell mutations found in about 50 percent of melanomas. "The study's computational challenges involved molecular dynamics simulations that modeled the protein at the atomic level, determining the forces of every atom on every other atom for a system of about 200,000 atoms at time steps of two femtoseconds." The post XSEDE Supercomputers Advance Skin Cancer Research appeared first on insideHPC.
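The scale of that calculation is easy to check with back-of-the-envelope arithmetic. The sketch below uses only the two figures quoted above (about 200,000 atoms and 2 fs time steps) and assumes a naive all-pairs force evaluation. Production molecular dynamics codes use cutoffs and Ewald-style long-range methods, so the pair count is an upper bound rather than the work actually done per step; the helper names are hypothetical.

```python
# Back-of-the-envelope scale of the MD run described above.
# Assumption: naive all-pairs force evaluation; real MD codes use cutoffs
# and particle-mesh Ewald, so actual per-step cost is far lower.

N_ATOMS = 200_000   # "about 200,000 atoms" (from the article)
TIMESTEP_FS = 2.0   # "time steps of two femtoseconds" (from the article)

def unique_pairs(n: int) -> int:
    """Number of distinct atom pairs, n choose 2."""
    return n * (n - 1) // 2

def steps_per_nanosecond(timestep_fs: float) -> int:
    """Integration steps needed to cover 1 ns (1 ns = 1,000,000 fs)."""
    return round(1_000_000 / timestep_fs)

pairs = unique_pairs(N_ATOMS)
steps = steps_per_nanosecond(TIMESTEP_FS)
print(f"pairs per step: {pairs:,}")
print(f"steps per simulated ns: {steps:,}")
print(f"naive pair evaluations per ns: {pairs * steps:.2e}")
```

Under these assumptions, one simulated nanosecond takes 500,000 steps and roughly 10^16 naive pair evaluations, which is why such runs need supercomputer time.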
