by staff on (#4V7H6)

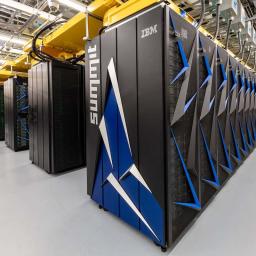

Researchers at the Georgia Institute of Technology have achieved world-record performance on the Summit supercomputer using a new algorithm for turbulence simulation. "The team identified the most time-intensive parts of a base CPU code and set out to design a new algorithm that would reduce the cost of these operations, push the limits of the largest problem size possible, and take advantage of the unique data-centric characteristics of Summit, the world’s most powerful and smartest supercomputer for open science." The post Tackling Turbulence on the Summit Supercomputer appeared first on insideHPC.

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |
|---|---|
| Feed | http://insidehpc.com/feed/ |
| Updated | 2026-03-02 18:45 |

by Rich Brueckner on (#4V7H8)

Washington State University is seeking an HPC Systems Administrator in our Job of the Week. "The Center for Institutional Research Computing at Washington State University seeks exceptional applicants for the position of High-Performance Computing Systems Administrator. This position will play a vital role in the administration of HPC clusters used by the research community at Washington State University." The post Job of the Week: HPC Systems Administrator at Washington State University appeared first on insideHPC.

by staff on (#4V4D7)

As we head into the biggest supercomputing event of the year, all eyes are on exascale. The frontrunners in the race to exascale, including our friends over at Altair, will convene at SC19 in Denver this November to share updates, address challenges, and help paint the picture of an exascale-fueled future for HPC. The post Are You Ready for the Exascale Era? Find Out at SC19 appeared first on insideHPC.

by Rich Brueckner on (#4V63Z)

"Altair and Oracle have teamed up to help customers quickly expand their engineering and high performance computing (HPC) capacity in Oracle's cloud. Altair HyperWorks Unlimited Virtual Appliance is a fully managed engineering service that provides modeling and visualization software, solvers, and post-processing tools—all on Oracle Cloud Infrastructure. The solution offers simplified administration, unlimited use of Altair HyperWorks™ applications, and full global support from Altair's team. Plus, it’s pre-configured and ready to use on day one." The post Altair Teams with Oracle Cloud Infrastructure for HPC appeared first on insideHPC.

by staff on (#4V641)

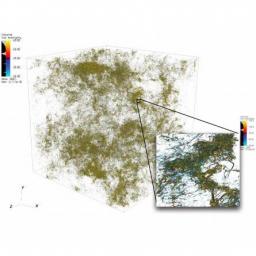

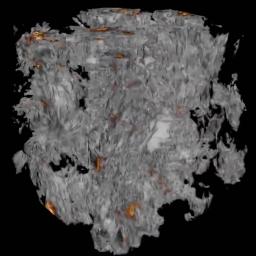

In this visualization, LRZ presents the largest interstellar turbulence simulations ever performed, unravelling key astrophysical processes concerning the formation of stars and the relative role of magnetic fields. "Besides revealing features of turbulence with an unprecedented resolution, the visualizations brilliantly showcase the stretching-and-folding mechanisms through which astrophysical processes such as supernova explosions drive turbulence and amplify the magnetic field in the interstellar gas, and how the first structures, the seeds of newborn stars, are shaped by this process." The post Visualizing the World’s Largest Turbulence Simulation appeared first on insideHPC.

by staff on (#4V643)

Today Mellanox introduced the Mellanox Quantum LongReach series of long-distance InfiniBand switches. Mellanox Quantum LongReach systems can seamlessly connect remote InfiniBand data centers, or provide high-speed, full RDMA (remote direct memory access) connectivity between remote compute and storage infrastructures. Based on the 200 gigabit HDR Mellanox Quantum InfiniBand switch, the LongReach solution provides up to two long-reach InfiniBand ports and eight local InfiniBand ports. The long-reach ports can deliver up to 100 Gb/s of data throughput over distances of 10 or 40 kilometers. The post Mellanox LongReach Appliance Extends InfiniBand Connectivity up to 40 Kilometers appeared first on insideHPC.

by staff on (#4V645)

Today DDN announced new infrastructure and multicloud solutions ahead of its return to SC19 in Denver. "We are adding serious data management, collaboration and security capabilities to the most scalable file solution in the world. EXA5 gives you mission critical availability whilst consistently performing at scale,” said James Coomer, senior vice president of product, DDN. “Our 20 years’ experience in delivering the most powerful at-scale data platforms is all baked into EXA5. We outperform everything on the market and now we do so with unmatched capability.” The post DDN Launches New Data Management Capabilities and Platforms for AI and HPC appeared first on insideHPC.

by staff on (#4V5VG)

"Polymer components in liquid cooling systems are attractive for several reasons: they are lightweight, typically less expensive than metal counterparts, and are impervious to corrosion that can render parts inoperable or introduce debris into flow paths. The challenges with many polymers used to date, however, are their abilities to handle high temperatures and physical stressors without deforming, cracking or creeping. These shortcomings become significant when leaks occur, leading to downtime or damage to equipment." The post The Use of High-Performance Polymers in HPC and Data Center Applications appeared first on insideHPC.

by Rich Brueckner on (#4V5VJ)

The OpenMP Architecture Review Board (ARB) has released Technical Report 8, the first preview for the future OpenMP API, version 5.1. "We are excited about this first public step towards OpenMP 5.1," said OpenMP Language Committee Chair Bronis R. de Supinski. "While 5.1 will include only a small set of new features, TR8 demonstrates that those additions, such as the assume directive, will provide important usability enhancements." The post OpenMP ARB Releases Technical Report 8 with New Usability Enhancements appeared first on insideHPC.

by Rich Brueckner on (#4V4CZ)

Today Mellanox introduced Mellanox Skyway, a 200 gigabit HDR InfiniBand-to-Ethernet gateway appliance. Mellanox Skyway offers a scalable, efficient way to connect a high-performance, low-latency InfiniBand data center to external Ethernet infrastructure. Mellanox Skyway is the next generation of the existing 56 gigabit FDR InfiniBand to 40 gigabit Ethernet gateway system, which is deployed in multiple data centers around the world. The post Mellanox Announces HDR InfiniBand-to-Ethernet Gateway Appliance for High Performance Data Centers appeared first on insideHPC.

by staff on (#4V4D1)

Today datacenter cooling vendor Iceotope announced that it ranked 14th and was named Midlands Regional Winner in the 2019 Deloitte UK Technology Fast 50, a ranking of the 50 fastest-growing technology companies in the UK. Rankings are based on percentage revenue growth over the last four years; Iceotope grew 2,331% during this period. The post Rapid Growth lands Iceotope on 2019 Deloitte Fast 50 appeared first on insideHPC.

by staff on (#4V4D2)

In this special guest feature, Dr. Rosemary Francis gives her perspective on what to look for at the SC19 conference next week in Denver. "There are always many questions circling the HPC market in the run up to Supercomputing. In 2019, the focus is even more focused on the cloud [than] in previous years. Here are a few of the topics that could occupy your coffee queue conversations in Denver this year." The post What to expect at SC19 appeared first on insideHPC.

by staff on (#4V4D3)

The Jülich Supercomputing Centre is adding a high-powered booster module to its JUWELS supercomputer. Designed in cooperation with Atos, ParTec, Mellanox, and NVIDIA, the booster module is equipped with several thousand GPUs designed for extreme computing power and artificial intelligence tasks. "With the launch of the booster in 2020, the computing power of JUWELS will be increased from currently 12 to over 70 petaflops." The post GPU-Powered Turbocharger coming to JUWELS Supercomputer at Jülich appeared first on insideHPC.

by staff on (#4V4D5)

Today award-winning Japanese HPC cloud company XTREME-D announced that its flagship product, XTREME-Stargate, will be available in the US in Q1. "XTREME-Stargate is a fully automated service for deploying, monitoring, and managing a virtual supercomputer on public or private cloud, and includes managed services via Infrastructure as a Service (IaaS), system software for automation, high performance storage, and specialized professional services provided by XTREME-D’s HPC architecture team." The post XTREME-D Now Accepting US Orders for Unique HPC Cloud Platform appeared first on insideHPC.

by Rich Brueckner on (#4V3MR)

"The next generation of PanFS on ActiveStor Ultra offers unlimited performance scaling in 4 GB/s building blocks, utilizing multi-tier intelligent data placement to maximize storage performance by placing metadata on low-latency NVMe SSDs, small files on high IOPS SSDs and large files on high-bandwidth HDDs. The system’s balanced node architecture optimizes networking, CPU, memory and storage capacity to prevent hot spots and bottlenecks, ensuring consistently high performance regardless of workload." The post Panasas to Showcase “Fastest HPC Parallel File System at any Price-Point” at SC19 appeared first on insideHPC.

by staff on (#4V30G)

Today Cortical.io announced the debut of a new class of high-performance enterprise applications based on “Semantic Supercomputing.” Semantic Supercomputing combines Cortical.io's AI-based natural language understanding (NLU) software, inspired by neuroscience, with hardware acceleration to create new solutions that understand and process streams of natural language content at massive scale in real time. "We are taking the concept of supercomputing to the next level with the introduction of Semantic Supercomputing and the ability to deliver real-time processing of semantic content." The post Cortical.io Demonstrates Real-time Semantic Supercomputing for NLU appeared first on insideHPC.

by staff on (#4V30J)

The OpenFabrics Alliance (OFA) has published a Call for Sessions for its 16th annual OFA Workshop. "The OFA Workshop 2020 Call for Sessions encourages industry experts and thought leaders to help shape this year’s discussions by presenting or leading discussions on critical high-performance networking issues. Session proposals are being solicited in any area related to high performance networks and networking software, with a special emphasis on the topics for this year’s Workshop. In keeping with the Workshop’s emphasis on collaboration, proposals for Birds of a Feather sessions and panels are particularly encouraged." The post Call for Sessions: OpenFabrics Alliance Workshop in March appeared first on insideHPC.

by Rich Brueckner on (#4V30K)

Oracle Cloud Services built on Intel technology offers best-in-class services across software as a service (SaaS), platform as a service (PaaS), and infrastructure as a service (IaaS), and even lets you put Oracle Cloud in your own data center. "With optimized Intel processors, solid state drives, and other Intel-based technologies such as Intel Resource Director, Oracle Cloud offers solutions that enable enterprises to architect long-term strategies and solutions to meet their unique business requirements. Intel technologies utilized by Oracle Cloud support the broadest set of workloads, including media processing, machine learning, data analytics, high-performance computing, and artificial intelligence." The post Enabling HPC with Intel on Oracle Cloud Infrastructure appeared first on insideHPC.

by Rich Brueckner on (#4V2CB)

Today Cray and Fujitsu announced a partnership to offer high performance technologies for the exascale era. Under the alliance agreement, Cray is developing the first-ever commercial supercomputer powered by the Fujitsu A64FX Arm-based processor with high-bandwidth memory (HBM), supported on the proven Cray CS500 supercomputer architecture and programming environment. The post Cray and Fujitsu to bring Game-Changing Arm A64FX Processor to Global HPC Market appeared first on insideHPC.

by staff on (#4V21R)

Today Intel unveiled new products designed to accelerate AI system development and deployment from cloud to edge. "In its key announcement, Intel demonstrated its Intel Nervana Neural Network Processors (NNP) for training (NNP-T1000) and inference (NNP-I1000) — Intel’s first purpose-built ASICs for complex deep learning with incredible scale and efficiency for cloud and data center customers. Intel also revealed its next-generation Intel Movidius Myriad Vision Processing Unit (VPU) for edge media, computer vision and inference applications." The post Intel showcases New Class of AI Hardware from Cloud to Edge appeared first on insideHPC.

by staff on (#4V1DC)

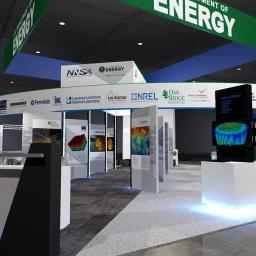

The DOE’s national laboratories will be showcased at SC19 next week in Denver, CO. "Computational scientists from DOE laboratories have been involved in the conference since it began in 1988 and this year’s event is no different. Experts from the 17 national laboratories will be sharing a booth featuring speakers, presentations, demonstrations, discussions, and simulations. DOE booth #925 will also feature a display of high performance computing artifacts from past, present and future systems. Lab experts will also contribute to the SC19 conference program by leading tutorials, presenting technical papers, speaking at workshops, leading birds-of-a-feather discussions, and sharing ideas in panel discussions." The post Department of Energy to Showcase World-Leading Science at SC19 appeared first on insideHPC.

by staff on (#4V1DE)

A team of researchers at ETH Zurich is working on a novel approach to solving increasingly large graph problems. "As the size of graph datasets grows larger, a question arises: Does one need to store and process the exact input graph datasets to ensure precise outcomes of important graph algorithms? After an extensive investigation into this question, the ETH researchers have been nominated for the Best Paper and Best Student Paper Awards at SC19." The post ETH Zurich up for Best Paper at SC19 with Lossy Compression for Large Graphs appeared first on insideHPC.

by Rich Brueckner on (#4V1DG)

Today Dell rolled out Dell Technologies On Demand, a set of consumption-based and as-a-service offerings. “Dell Technologies On Demand makes it possible for organizations to plan, deploy and manage their entire IT footprint. They can choose how they consume and pay for IT solutions that meet their needs with the freedom and flexibility to evolve as their needs change over time.” The post Get Infrastructure As-a-Service with new Dell Technologies On Demand appeared first on insideHPC.

by staff on (#4V1DJ)

In this special guest feature, Dan Olds from OrionX continues his Epic HPC Road Trip series with a stop at NERSC. "NERSC is unusual in that they receive more data than they send out. Client agencies process their raw data on NERSC systems and then export the results to their own organizations. This puts a lot of pressure on storage and network I/O, making them top priority at NERSC." The post Epic HPC Road Trip stops at NERSC for a look at Big Network and Storage Challenges appeared first on insideHPC.

by staff on (#4V1DK)

In this special guest feature from Scientific Computing World, Robert Roe interviews Loren Dean from MathWorks on the use of AI in modeling and simulation. "If you just focus on AI algorithms, you generally don’t succeed. It is more than just developing your intelligent algorithms, and it’s more than just adding AI - you really need to look at it in the context of the broader system being built and how to intelligently improve it." The post Keys to Success for AI in Modeling and Simulation appeared first on insideHPC.

by staff on (#4TZPT)

In early November, A*CRC, ICM, and Zettar conducted a production trial over the newly built Collaboration Asia Europe-1 (CAE-1) 100Gbps link connecting Europe and Singapore. "The project has established a historical first," said Zettar CEO Chin Fang. "For the first time over the newly built CAE-1 link, with a production setup at ICM end, it has shown that moving data at great speed and scale between Poland (and thus Eastern Europe) and Singapore is a reality. Furthermore, although the project was initiated only in mid-October, all goals have been reached and a few new grounds have also been broken as well. It is also a true international collaboration." The post Production Trial Shows Global Science Possible with CAE-1 100Gbps link appeared first on insideHPC.

by staff on (#4TZPW)

Today InAccel announced that it has integrated JupyterHub into the company's adaptive acceleration platform for FPGAs. InAccel provides an FPGA resource manager that allows instant deployment, scaling and virtualization of FPGAs, making it easier than ever to use FPGA clusters for applications such as machine learning, data processing, data analytics and many other HPC workloads. The post Accelerate Big Data and HPC applications with FPGAs using JupyterHub appeared first on insideHPC.

by staff on (#4TZPY)

Today the Barcelona Supercomputing Center (BSC) announced the European Laboratory for Open Computer Architecture (LOCA). LOCA’s mission is to design and develop energy-efficient, high-performance chips in Europe, based on open architectures such as RISC-V, OpenPOWER, and MIPS, for use within future exascale supercomputers and other high-performance domains. "We are launching it with great conviction, because it is another step in our philosophy of paving the way for the creation of European HPC architectures." The post New LOCA Facility to Develop Open Computer Architectures at BSC in Barcelona appeared first on insideHPC.

by Rich Brueckner on (#4TZE9)

In this video, Doug O'Flaherty from IBM describes how IBM Spectrum Scale storage (formerly GPFS) helps Oracle Cloud Infrastructure deliver high performance for HPC applications. "To deliver insights, an organization’s underlying storage must support new-era big data and artificial intelligence workloads along with traditional applications while ensuring security, reliability and high performance. IBM Spectrum Scale meets these challenges as a high-performance solution for managing data at scale with the distinctive ability to perform archive and analytics in place." The post Enabling Oracle Cloud Infrastructure with IBM Spectrum Scale appeared first on insideHPC.

by staff on (#4TZEB)

In this guest article, our friends at Intel discuss how CPUs prove better for some important deep learning workloads. Here’s why, and keep your GPUs handy! Heterogeneous computing ushers in a world where we must consider permutations of algorithms and devices to find the best platform solution. No single device will win all the time, so we need to constantly assess our choices and assumptions. The post Optimizing in a Heterogeneous World is (Algorithms x Devices) appeared first on insideHPC.

by staff on (#4TYNY)

Today education company Coding Dojo launched the Data Science Immersive bootcamp, a new program designed to teach and apply data science methodologies and tools so participants can solve real-world problems in business and academia. The 14-week course was developed to meet the growing employer demand for skilled data scientists, which has risen 31% since December 2018 and an astonishing 256% since December 2013, according to research by Indeed.com. The curriculum was designed by Isaac Faber Ph.D., the Chief Data Scientist for education and collaboration startup MatrixDS. The post Coding Dojo Launches Immersive Data Science Bootcamp appeared first on insideHPC.

by Rich Brueckner on (#4TYFR)

Amos Storkey from the University of Edinburgh gave this talk at HiPEAC CSW Edinburgh. "Storkey explores the demands of getting deep learning software to work on embedded devices, with challenges including real-time requirements, memory availability and the energy budget. He discusses work undertaken within the context of the European Union-funded Bonseyes project." The post Video: Deep Learning for resource-constrained systems appeared first on insideHPC.

by staff on (#4TXH4)

A research collaboration between LBNL, PNNL, Brown University, and NVIDIA has achieved exaflop (half-precision) performance on the Summit supercomputer with a deep learning application used to model subsurface flow in the study of nuclear waste remediation. Their achievement, which will be presented during the “Deep Learning on Supercomputers” workshop at SC19, demonstrates the promise of physics-informed generative adversarial networks (GANs) for analyzing complex, large-scale science problems. The post Deep Learning on Summit Supercomputer Powers Insights for Nuclear Waste Remediation appeared first on insideHPC.

by staff on (#4TXBX)

The Ohio Supercomputer Center is seeking an HPC Operations Manager in our Job of the Week. "The operations manager will manage a team of three systems administrators and two student employees. The person who fills this role will be responsible for both supervising operations staff as well as providing technical and organizational expertise for all aspects of the HPC operations. The ideal candidate will be an excellent problem solver, have strong communication skills, and be willing to work in a distributed, team-oriented work environment." The post Job of the Week: HPC Operations Manager at Ohio Supercomputing Center appeared first on insideHPC.

by staff on (#4TW5M)

Intersect360 Research has released a pair of new reports examining major technology trends in AI and machine learning, including the worldwide market, spending trends, and impact on HPC. "Machine learning has been in a very high growth stage,” says Intersect360 Research CEO Addison Snell. “In addition to that $10 billion, many systems not one hundred percent dedicated to machine learning are serving training needs as part of their total workloads, increasing the influence that machine learning has on spending and configuration.” The post Intersect360 Research Examines Spending Trends in Machine Learning Market appeared first on insideHPC.

by staff on (#4TW5P)

SC19 will host a BoF meeting on Americas HPC Collaboration. This third Americas HPC Collaboration BoF seeks to showcase collaboration opportunities and experiences among HPC networks and laboratories in countries across the Americas. "The goal of this BoF is to show the current state of the art in continental collaboration in HPC, latest developments of regional collaborative networks and, to update the roadmap for the next two years for the Americas HPC partnerships." The post SC19 to Host BoF Meeting on Americas HPC Collaboration appeared first on insideHPC.

by staff on (#4TVVY)

Today Altair announced the acquisition of DEM Solutions, maker of EDEM, the market leader in Discrete Element Method (DEM) software for bulk material simulation. "With this acquisition, Altair customers now have the ability to design and develop power machinery while simultaneously optimizing how this equipment processes and handles bulk materials." The post Altair acquires DEM Solutions for Discrete Element Method Analysis appeared first on insideHPC.

by Rich Brueckner on (#4TVW0)

In this video, Karan Batta from Oracle describes how the company built Oracle Cloud Infrastructure to deliver high performance for HPC applications. "Over the last 12 months, we have invested significantly, in both technology and partnerships, to make Oracle Cloud Infrastructure the best place to run your Big Compute and HPC workloads." The post How we built Oracle Cloud Infrastructure for HPC appeared first on insideHPC.

by staff on (#4TTEE)

This week IonQ joined Microsoft in launching the new Azure Quantum ecosystem at Microsoft's annual Ignite conference. Azure Quantum is a full-stack, open cloud ecosystem that will make IonQ’s trapped-ion quantum computers commercially available via the cloud, allowing users to leverage IonQ’s unique approach to quantum computing. "IonQ brings a unique approach to quantum computing with tremendous potential,” said Krysta Svore, General Manager of Quantum Systems at Microsoft. “This partnership brings world-class quantum computing capabilities to Azure Quantum, and we’re excited to continue working together to realize the full benefits of quantum computers.” The post IonQ Joins Azure Quantum Ecosystem appeared first on insideHPC.

by staff on (#4TT4N)

Lifebit CloudOS addresses a major barrier facing the genomics sector: data is often siloed and distributed, and therefore inaccessible to multiple parties for analysis. Without the ability to perform analyses across this distributed data, the industry is stalled, impeding critical progress in precision medicine and life sciences. "Because it is completely agnostic to the customer’s HPC and cloud infrastructure, workflows and data, Lifebit CloudOS is unlike any other genomics platforms in that it sits natively on one’s cloud/HPC and brings computation to the data instead of the other way around." The post Lifebit Launches Federated Genomics Cloud Operating System for Accelerated Discovery appeared first on insideHPC.

by Rich Brueckner on (#4TT4R)

In this podcast, the Radio Free HPC team reviews the full list of events leading up to SC19 in Denver. "There's a lot that happens before the exhibit floor opens on Monday night. Our old pal Rich Brueckner from insideHPC joins us to give us the full rundown. We even have a few parties to tell you about." The post Podcast: Full Rundown of SC19 Events in Denver appeared first on insideHPC.

by staff on (#4TT4S)

The QCT Platform on Demand (QCT POD) combines advanced technology with a unique user experience to help enterprises reach better performance and gain more insights. "With flexibility and scalability, QCT POD enables enterprises to address a broader range of HPC, Deep Learning, and Data Analytic demands that fulfill various applications." The post Data Center Transformation: Why a Workload-Driven and Scalable Architecture Matters appeared first on insideHPC.

by staff on (#4TT4Q)

Today NVIDIA posted the fastest results on new benchmarks measuring the performance of AI inference workloads in data centers and at the edge — building on the company’s equally strong position in recent benchmarks measuring AI training. "NVIDIA topped all five benchmarks for both data center-focused scenarios (server and offline), with Turing GPUs providing the highest performance per processor among commercially available entries." The post NVIDIA Tops MLPerf AI Inference Benchmarks appeared first on insideHPC.

by staff on (#4TRN5)

Today NVIDIA introduced Jetson Xavier NX, billed as "the world’s smallest, most powerful AI supercomputer for robotic and embedded computing devices at the edge." "With a compact form factor smaller than the size of a credit card, the energy-efficient Jetson Xavier NX module delivers server-class performance up to 21 TOPS for running modern AI workloads, and consumes as little as 10 watts of power." The post NVIDIA Launches $399 Jetson Xavier NX for AI at the Edge appeared first on insideHPC.

by staff on (#4TRN7)

Today the MLPerf consortium released over 500 inference benchmark results from 14 organizations. "Having independent benchmarks help customers understand and evaluate hardware products in a comparable light. MLPerf is helping drive transparency and oversight into machine learning performance that will enable vendors to mature and build out the AI ecosystem. Intel is excited to be part of the MLPerf effort to realize the vision of AI Everywhere,” stated Dr. Naveen Rao, corporate vice president and general manager of AI Products at Intel. The post MLPerf Releases Over 500 Inference Benchmarks appeared first on insideHPC.

by staff on (#4TRN8)

Advania and Stockholm Exergi have partnered to build a new HPC data center in Stockholm, Sweden. Advania Data Centers (ADC) will lease land in Stockholm Data Parks to build the data center, and the heat generated by its computing equipment will be used to heat homes in Stockholm. The arrangement is intended to sharply increase the data center's energy efficiency and to put heat that is traditionally wasted to use for the benefit of the local community. The post Advania to build sustainable HPC datacenter in Sweden appeared first on insideHPC.

by staff on (#4TRBY)

In this special guest feature, Robert Roe from Scientific Computing World interviews Mark Parsons on the strategy for HPC in the UK. "We are not part of EuroHPC, so we are not going to have access to the exascale systems that appear in Europe in 2023, they will also have some very large systems in 2021, around 150 to 200 Pflop systems, and we will not have access to that which will have a detrimental effect on our scientific and industrial communities’ ability to use the largest scale of supercomputing." The post Interview: Advancing HPC in the UK in the Age of Brexit appeared first on insideHPC.

by staff on (#4TRC0)

Today datacenter solution provider TMGcore announced it has developed a first-of-its-kind data center platform that utilizes immersion cooling and is ready to house Dell EMC PowerEdge C4140 servers. "Traditionally, data center platforms run in air-cooled environments that require a significant amount of power and air conditioning within the facility,” said John-David Enright, CEO of TMGcore. “However, companies are looking to be more environmentally friendly and reduce the amount of power consumption within their data center operations. We have developed a line of data center platforms that feature an immersion environment for the servers, and we are proud to introduce the products to the market with one customized for Dell Technologies servers.” The post TMGcore Teams with Dell EMC on Immersion Cooled Datacenter Platform appeared first on insideHPC.

by Rich Brueckner on (#4TRC1)

In this video, Taylor Newill from Oracle describes how the Oracle Cloud Infrastructure delivers high performance for HPC applications. "From the beginning, Oracle built their bare-metal cloud with a simple goal in mind: deliver the same performance in the cloud that clients are seeing on-prem." The post Building Oracle Cloud Infrastructure with Bare-Metal appeared first on insideHPC.

by staff on (#4TPYH)

Today Cray announced that the United Kingdom’s Atomic Weapons Establishment (AWE) has selected the Cray Shasta supercomputer to support security and defence of the U.K. The new machine, called Vulcan and powered by AMD EPYC processors, will deliver approximately 7 petaflops of performance and play an integral role in maintaining the U.K.’s nuclear deterrent. “Shasta will bring Exascale Era technologies to bear on AWE’s challenging modeling and simulation data-intensive workload and enable the convergence of AI and analytics into this same workload, on a single system.” The post AMD to Power Cray Shasta Supercomputer coming to AWE in the UK appeared first on insideHPC.
