|

by staff on (#35A1B)

The PEARC18 Conference has issued its Call for Contributions. The conference takes place June 22-27 in Pittsburgh. "The Practice & Experience in Advanced Research Computing (PEARC) annual conference series fosters the creation of a dynamic and connected community of advanced research computing professionals who advance leading practices at the frontiers of research, scholarship and teaching, and industry application."

The post Call for Contributions: PEARC18 in Pittsburgh appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| | |
|---|---|
| Link | https://insidehpc.com/ |
| Feed | http://insidehpc.com/feed/ |
| Updated | 2026-03-03 20:45 |

|

by staff on (#359SR)

Today ACM and the IEEE Computer Society named Jesús Labarta of the Barcelona Supercomputing Center as the recipient of the 2017 ACM-IEEE CS Ken Kennedy Award. Labarta is recognized for his seminal contributions to programming models and performance analysis tools for high performance computing. The award will be presented at SC17.

The post Jesús Labarta from BSC to receive Ken Kennedy Award appeared first on insideHPC.

|

|

by Rich Brueckner on (#359SS)

Michael Garris from NIST gave this talk at the HPC User Forum. "AI must be developed in a trustworthy manner to ensure reliability and safety. NIST cultivates trust in AI technology by developing and deploying standards, tests and metrics that make technology more secure, usable, interoperable and reliable, and by strengthening measurement science. This work is critically relevant to building the public trust of rapidly evolving AI technologies."

The post Video: The AI Initiative at NIST appeared first on insideHPC.

|

|

by staff on (#359PN)

OpenSFS today announced Lustre 2.10.1, the first Long Term Support (LTS) maintenance release of Lustre. "This latest advance, in effect, fulfills a commitment made by Intel last April to align its efforts and support around the community release, including efforts to design and release a maintenance version. This transition enables growth in the adoption and rate of innovation for Lustre."

The post OpenSFS Announces Maintenance Release 2.10.1 for Lustre appeared first on insideHPC.

|

|

by staff on (#359FT)

More than 200 researchers at the University of Pisa use high performance computing (HPC) systems for quantum chemistry, nanophysics, genome sequencing, engineering simulations, and big data analysis. The University's IT Center has moved to a pre-integrated HPC software stack designed to simplify software installation and maintenance. This sponsored post from Intel shows how a pre-integrated, validated and supported HPC software stack allows the University of Pisa to focus on research.

The post HPC Software Stacks: Making High Performance Computing More Accessible appeared first on insideHPC.

|

|

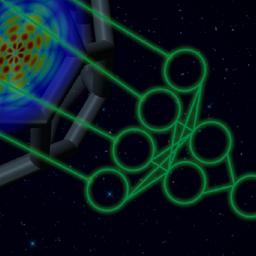

by staff on (#356VF)

In this podcast, Peter Nugent from Berkeley Lab explains how scientists confirmed the first-ever measurement of the merger of two neutron stars and its explosive aftermath. "Simulations succeeded in modeling what would happen in an incredibly complex phenomenon like a neutron star merger. Without the models, we probably all would have been mystified by exactly what we were seeing in the sky."

The post Video: Supercomputing Models Enable Detection of a Cosmic Cataclysm appeared first on insideHPC.

|

|

by staff on (#356EC)

Intel field programmable gate arrays (FPGAs) now power Alibaba Cloud's Acceleration-as-a-Service offering. "Intel FPGAs offer us a more cost-effective way to accelerate cloud-based application performance for our customers who are running business applications and demanding data and scientific workloads," said Jin Li, vice president of Alibaba Cloud. "Another key value of FPGAs is that they provide high performance at low power, and the flexibility for managing diverse computing workloads."

The post Intel FPGAs Power Acceleration-as-a-Service for Alibaba Cloud appeared first on insideHPC.

|

|

by staff on (#356HG)

ISC 2018 has issued its Call for Tutorials and Workshops. The conference takes place June 24-28 in Frankfurt, Germany. "If you possess knowledge and skills in a particular field in high performance computing, and enjoy sharing them, the ISC 2018 Tutorials Committee looks forward to hearing from you. Along the same lines, the ISC 2018 Workshops Committee also calls on the HPC community members to send in their proposals for workshops."

The post ISC 2018 Issues Call For Tutorials and Workshops appeared first on insideHPC.

|

|

by staff on (#356HH)

Tomorrow's exascale supercomputers will enable researchers to accurately simulate the ground motions of regional earthquakes quickly and in unprecedented detail. "Simulations of high frequency earthquakes are more computationally demanding and will require exascale computers," said David McCallen, who leads the ECP-supported effort. "Ultimately, we’d like to get to a much larger domain, higher frequency resolution and speed up our simulation time."

The post Supercomputing Earthquakes in the Age of Exascale appeared first on insideHPC.

|

|

by Rich Brueckner on (#3567K)

In this podcast, the Radio Free HPC team looks at Henry Newman’s recent proposal to use blockchain to combat fake news. Henry explains that his rant grew out of what he saw as an egregious story that was circulating and could easily have been quashed.

The post Radio Free HPC Looks at How Blockchain Could Prevent Fake News appeared first on insideHPC.

|

|

by Richard Friedman on (#3564F)

While most of the fundamental HPC system software building blocks are now open source, dealing with the sheer number of components and their inherently complex interdependencies has created a barrier to adoption of HPC for many organizations. This is the first article in a four-part series that explores using Intel HPC Orchestrator to solve HPC software stack management challenges.

The post Simplifying HPC Software Stack Management appeared first on insideHPC.

|

|

by Rich Brueckner on (#353R3)

Rupak Biswas from NASA gave this talk at the Argonne Training Program on Extreme-Scale Computing. "High performance computing is now integral to NASA’s portfolio of missions to pioneer the future of space exploration, accelerate scientific discovery, and enable aeronautics research. Anchored by the Pleiades supercomputer at NASA Ames Research Center, the High End Computing Capability (HECC) Project provides a fully integrated environment to satisfy NASA’s diverse modeling, simulation, and analysis needs."

The post Video: NASA Advanced Computing Environment for Science & Engineering appeared first on insideHPC.

|

|

by Rich Brueckner on (#353NQ)

In this Job of the Week, NREL in Golden, Colorado, is seeking a Scientific Computing Senior Engineer. "We have an immediate opening for a Systems Engineer (Job Req #R2314). This senior position is responsible for implementing and operating CSC systems and infrastructure in support of Science and Technical computing in support of NREL’s mission."

The post Job of the Week: Scientific Computing Senior Engineer at NREL in Colorado appeared first on insideHPC.

|

|

by staff on (#351MB)

As nuclear physicists delve ever deeper into the heart of matter, they require the tools to reveal the next layer of nature's secrets. Nowhere is that more true than in computational nuclear physics. A new research effort led by theorists at DOE's Jefferson Lab is now preparing for the next big leap forward in their studies thanks to funding under the 2017 SciDAC Awards for Computational Nuclear Physics.

The post SciDAC funding to Move Quantum Chromodynamics forward at Jefferson Lab appeared first on insideHPC.

|

|

by Rich Brueckner on (#351G7)

John Gustafson from A*STAR will host a BoF on posit arithmetic at SC17. Entitled "Improving Numerical Computation with Practical Tools and Novel Computer Arithmetic," the BoF will be co-hosted by Mike Lam and will include discussion of tools for measuring floating-point accuracy. "This approach obtains more accurate answers than floating-point arithmetic yet uses fewer bits in many cases, saving memory, bandwidth, energy, and power."

The post John Gustafson to host BoF on Posit Arithmetic at SC17 appeared first on insideHPC.

|

|

by staff on (#34YWJ)

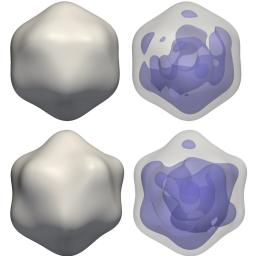

A team at Berkeley Lab is using innovative computational methods to enable new X-ray science. "The creation of XFEL facilities, including the Linac Coherent Light Source (LCLS) and the European X-FEL, have created opportunities for conducting new experiments which can overcome the limitations of traditional crystallography."

The post Supercomputing Virus Structures at Nanoscale with XFEL appeared first on insideHPC.

|

|

by Rich Brueckner on (#34YS4)

The PASC18 Organizing Team has posted its Call for Submissions. Proposals are now being accepted for minisymposia, papers, and posters. The conference takes place July 2-4 in Basel, Switzerland (the week after ISC 2018 in Frankfurt). We look forward to receiving your submissions through the online submission portal. Please note that the November 26th deadline for […]

The post Call for Submissions: PASC18 in Basel appeared first on insideHPC.

|

|

by staff on (#34YNC)

In this video, Jesús Labarta from the Barcelona Supercomputing Center describes the POP (Performance Optimization and Productivity) Centre of Excellence led by BSC. According to Labarta, POP’s performance analysis has led to performance improvements ranging from 10-15% to more than 10x. Best of all, POP services are free of charge to organizations, SMEs, ISVs, and companies in the EU!

The post How the POP Performance Optimization Centre at BSC is Speeding up HPC in Europe appeared first on insideHPC.

|

|

by Rich Brueckner on (#34YER)

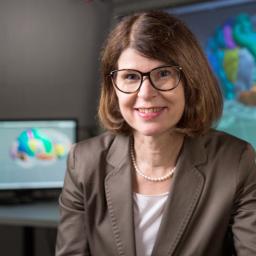

In this video from SC17, Katrin Amunts from Jülich highlights how the massive European-based Human Brain Project (HBP), comprising a veritable orchestra of scientists, collaborates to deliver the most exquisitely detailed human brain models ever created. "We have to create an ‘atlas’ (of the brain) that has a very large size in terms of bits and bytes," Amunts said.

The post HPC Connects: How Supercomputers Are Unraveling the Mystery of the Human Brain appeared first on insideHPC.

|

|

by Rich Brueckner on (#34YBH)

The Intel HPC Developer Conference at SC17 has posted its session agenda. The conference takes place Nov. 11-12 in Denver. "The Intel HPC Developer Conference is the premier technical training event to meet and hear from Intel architecture experts and connect with HPC industry leaders. Join in to learn what's next in HPC, attend technical sessions, hands-on tutorials, and poster chats that cover parallel programming, high productivity languages, artificial intelligence, systems, enterprise, visualization development and much more."

The post Agenda Posted for Intel HPC Developer Conference at SC17 appeared first on insideHPC.

|

|

by staff on (#34VMW)

The recent Tapia Conference on Diversity in Computing in Atlanta brought together some 1,200 undergraduate and graduate students, faculty, researchers and professionals in computing from diverse backgrounds and ethnicities to learn from leading thinkers, present innovative ideas and network with peers.

The post NERSC lends a hand to 2017 Tapia Conference on Diversity in Computing appeared first on insideHPC.

|

|

by staff on (#34VE5)

A new peer-reviewed paper is reportedly causing a stir in the climatology community. "The best hope for reducing long-standing global climate model biases, is through increasing the resolution to the kilometer scale. Here we present results from an ultra-high resolution non-hydrostatic climate model for a near-global setup running on the full Piz Daint supercomputer on 4888 GPUs."

The post GPUs Power Near-global Climate Simulation at 1 km Resolution appeared first on insideHPC.

|

|

by Rich Brueckner on (#34VB4)

"The European Extreme Data & Computing Initiative (EXDCI) objective is to support the development and implementation of a common strategy for the European HPC Ecosystem. One of the main goals of the meeting in Bologna was to set up a roadmap for future developments, and for other parties who would like to participate in HPC research."

The post Introducing the European EXDCI initiative for HPC appeared first on insideHPC.

|

|

by Richard Friedman on (#34V7K)

Intel® Cluster Checker, distributed as part of Intel® Parallel Studio XE 2018 Cluster Edition, provides a set of system diagnostics and analysis methods in a single tool to help manage clusters of any size. "Think of Intel Cluster Checker as a clinical system that detects signs that issues affecting the health of the cluster exist, diagnoses those issues, and suggests remedies. Using common diagnostic tools signs that may indicate symptoms leading to a diagnosis and a possible solution."

The post Diagnose Cluster Health with Intel® Cluster Checker appeared first on insideHPC.

|

|

by staff on (#34RF5)

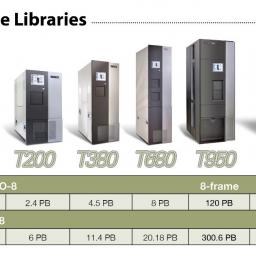

"LTO-8 tape technology doubles the capacity of LTO-7 to an astonishing 30 TB compressed (12 TB native) per cartridge, and improves performance by 20 percent, up to 360 MB/s. The additional capacity equates to fewer tape cartridges required to store the same amount of data while the performance boost translates into the need for fewer tape drives to do the same amount of work. In addition, the new LTO-8 drives are backward compatible with LTO-7 tape media, allowing users to read/write any LTO-7 media."

The post Spectra Logic Launches LTO-8 Pre-Purchase Program appeared first on insideHPC.

|

|

by staff on (#34RBS)

Jason Knight from Intel writes that the company has joined Microsoft, Facebook, and others to participate in the Open Neural Network Exchange (ONNX) project. "By joining the project, we plan to further expand the choices developers have on top of frameworks powered by the Intel Nervana Graph library and deployment through our Deep Learning Deployment Toolkit. Developers should have the freedom to choose the best software and hardware to build their artificial intelligence model and not be locked into one solution based on a framework. Deep learning is better when developers can move models from framework to framework and use the best hardware platform for the job."

The post Intel Joins Open Neural Network Exchange appeared first on insideHPC.

|

|

by staff on (#34R1E)

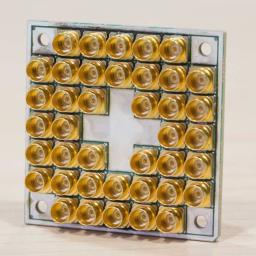

Today, Intel announced the delivery of a 17-qubit superconducting test chip for quantum computing to QuTech, Intel’s quantum research partner in the Netherlands. The new chip was fabricated by Intel and features a unique design to achieve improved yield and performance. "Our quantum research has progressed to the point where our partner QuTech is simulating quantum algorithm workloads, and Intel is fabricating new qubit test chips on a regular basis in our leading-edge manufacturing facilities."

The post Intel Delivers 17-Qubit Superconducting Chip with Advanced Packaging to QuTech appeared first on insideHPC.

|

|

by Rich Brueckner on (#34QYG)

In this video from HPE, researchers describe how Exascale will advance science and improve the quality of life for all. "Why is the U.S. government throwing down this gauntlet? Many countries are engaged in what has been referred to as a race to exascale. But getting there isn’t just for national bragging rights. Getting to exascale means reaching a new frontier for humanity, and the opportunity to potentially solve humanity’s most pressing problems."

The post Exascale: The Movie appeared first on insideHPC.

|

|

by Rich Brueckner on (#34QVM)

Ruud van der Pas from Oracle has co-authored a new book on OpenMP. It covers the OpenMP 4.5 specification, with a focus on the practical use of the language features and constructs. "We start where the specifications end and explain the rationale behind the features. In particular the functionality and how a feature may be used in an application."

The post New Book: Using OpenMP – The Next Step appeared first on insideHPC.

|

|

by Rich Brueckner on (#34QCC)

In this video, future HPC professionals discuss their participation in the ISC Student Cluster Competition. "Now in its seventh year, the Student Cluster Competition enables international teams to take part in a real-time contest focused on advancing STEM disciplines and HPC skills development. To take home top honors, the teams will have to showcase systems of their own design, adhering to strict power constraints and achieve the highest performance across a series of standard HPC benchmarks and applications."

The post Video: Why your school should enter the ISC Student Cluster Competition appeared first on insideHPC.

|

|

by staff on (#34N35)

Today TYAN showcased its latest GPU-optimized platforms for the high performance computing and artificial intelligence sectors at the GPU Technology Conference in Munich. "TYAN’s new GPU computing platforms are designed to provide efficient parallel computing for the analytics of vast amounts of data. By incorporating NVIDIA's latest Tesla V100 GPU accelerators, TYAN provides our customers with the power to accelerate both high performance and cognitive computing workloads," said Danny Hsu, Vice President of MiTAC Computing Technology Corporation's TYAN Business Unit.

The post NVIDIA Tesla V100 GPUs Power New TYAN Server appeared first on insideHPC.

|

|

by Rich Brueckner on (#34N0C)

Ed Seidel from the University of Illinois gave this talk at the 2017 Argonne Training Program on Extreme-Scale Computing. The theme of his talk centers around the need for interdisciplinary research. "Interdisciplinary research (IDR) is a mode of research by teams or individuals that integrates information, data, techniques, tools, perspectives, concepts, and/or theories from two or more disciplines or bodies of specialized knowledge to advance fundamental understanding or to solve problems whose solutions are beyond the scope of a single discipline or area of research practice."

The post Video: Revolution in Computer and Data-enabled Science and Engineering appeared first on insideHPC.

|

|

by staff on (#34MT8)

At GTC Europe this week, One Stop Systems (OSS) will exhibit two of the most powerful GPU accelerators for data scientists and deep learning researchers, the CA16010 and SCA8000. "NVIDIA GPU computing is helping researchers and engineers take on some of the world's hardest challenges," said Paresh Kharya, group product marketing manager of Accelerated Computing at NVIDIA. "One Stop Systems' customers can now tap into the power of our Volta architecture to accelerate their deep learning and high performance computing workloads."

The post OSS Showcases New HDCA Platforms with Volta GPUs at GTC Europe appeared first on insideHPC.

|

|

by staff on (#34MQ3)

Google Compute Engine now offers new VMs with the most Skylake vCPUs of any cloud provider. "Skylake in turn provides up to 20% faster compute performance, 82% faster HPC performance, and almost 2X the memory bandwidth compared with the previous generation Xeon. Need even more compute power or memory? We’re also working on a range of new, even larger VMs, with up to 4TB of memory."

The post Google Compute Engine offers VMs with 96 Skylake CPUs and 624GB of Memory appeared first on insideHPC.

|

|

by staff on (#34MGW)

In the pharmaceutical industry, drug discovery is a long and expensive process. This sponsored post from Nvidia explores how the University of Florida and the University of North Carolina developed ANAKIN-ME, a neural network engine that produces computationally fast quantum mechanical simulations with high accuracy at very low cost, to speed drug discovery and exploration.

The post Accelerating Quantum Chemistry for Drug Discovery appeared first on insideHPC.

|

|

by staff on (#34JGJ)

"Intel OPA, part of Intel Scalable System Framework, is a high-performance fabric enabling the responsiveness, throughput, and scalability required by today's and tomorrow's most-demanding high performance computing workloads. In this interview, Misage talks about market uptake in Intel OPA's first year of availability, reports on some of the first HPC deployments using the Intel Xeon Scalable platform and Intel OPA, and gives a sneak peek of what Intel OPA will be talking about at SC17."

The post Podcast: Intel Omni-Path adds Performance and Scalability appeared first on insideHPC.

|

|

by staff on (#34HW7)

Over at ALCF, Andrea Manning writes that the recent Argonne Training Program on Extreme-Scale Computing brought together HPC practitioners from around the world. "You can’t get this material out of a textbook," said Eric Nielsen, a research scientist at NASA’s Langley Research Center. Added Johann Dahm of IBM Research, "I haven’t had this material presented to me in this sort of way ever."

The post Future HPC Leaders Gather at Argonne Training Program on Extreme-Scale Computing appeared first on insideHPC.

|

|

by staff on (#34HPR)

Today the European PRACE initiative announced that the 46 awards from its recent 15th Call for Proposals total nearly 1.7 thousand million (1.7 billion) core hours. The 46 awarded projects are led by principal investigators from 12 different European countries. "Of local interest this time around, the awarded projects involve co-investigators from the USA (7) and Russia (2). All information and the abstracts of the projects awarded under the 15th PRACE Call for Proposals are now available online."

The post PRACE Awards 1.7 Thousand Million Core Hours for Research Projects in Europe appeared first on insideHPC.

|

|

by Rich Brueckner on (#34HG9)

In this podcast, the Radio Free HPC team looks at Smart Cities. As the featured topic this year at the SC17 Plenary, the Smart Cities initiative looks to improve the quality of life for residents using urban informatics and other technologies to improve the efficiency of services.

The post Radio Free HPC Previews the SC17 Plenary on Smart Cities appeared first on insideHPC.

|

|

by Rich Brueckner on (#34H4R)

Nikkei in Japan reports that Fujitsu is building a 37 Petaflop supercomputer for the National Institute of Advanced Industrial Science and Technology (AIST). "Targeted at Deep Learning workloads, the machine will power the AI research center at the University of Tokyo's Chiba Prefecture campus. The new Fujitsu system will comprise 1,088 servers, 2,176 Intel Xeon processors, and 4,352 NVIDIA GPUs."

The post Fujitsu to Build 37 Petaflop AI Supercomputer for AIST in Japan appeared first on insideHPC.

|

|

by Rich Brueckner on (#34F8F)

Scott Parker gave this talk at the Argonne Training Program on Extreme-Scale Computing. "Designed in collaboration with Intel and Cray, Theta is a 9.65-petaflops system based on the second-generation Intel Xeon Phi processor and Cray’s high-performance computing software stack. Capable of nearly 10 quadrillion calculations per second, Theta will enable researchers to break new ground in scientific investigations that range from modeling the inner workings of the brain to developing new materials for renewable energy applications."

The post Video: Argonne’s Theta Supercomputer Architecture appeared first on insideHPC.

|

|

by staff on (#34F6F)

Attendees of the COMSOL Conference in Boston this week were treated by Svante Littmarck, President and CEO of COMSOL, to a sneak preview of future developments in the popular multiphysics software. The conference featured a robust technical program with approximately 300 attendees. "Our customers are at the forefront of innovation behind the products that will shape our future," says Littmarck. "We work tirelessly to support their efforts by increasing the modeling power of the COMSOL software and by making collaboration among simulation experts and their colleagues the core of everything we do. This annual event is our opportunity to connect and exchange knowledge within the COMSOL community on multiphysics modeling."

The post COMSOL Conference Showcases Next-Gen Multiphysics appeared first on insideHPC.

|

|

by Rich Brueckner on (#34CZN)

The PDT team is seeking a highly talented Linux HPC Systems Administrator to enhance and support our research computing clusters. As part of the HPC/Grid team, you will be responsible for improving, extending, and maintaining the HPC/Grid infrastructure, and helping provide a world-class computing and big data environment for PDT’s Quantitative Researchers. You will interface closely with research teams using the Grid, the entire Linux engineering group, software engineers, and PDT’s in-house monitoring team. You will also have the opportunity to serve as PDT’s subject matter expert for various HPC technologies.

The post Job of the Week: HPC Systems Engineer at PDT Partners in NYC appeared first on insideHPC.

|

|

by Rich Brueckner on (#34CXN)

In this video, Norman Kutemperor from Scientel describes how his company ran a record-setting big data problem on the Owens supercomputer at OSC. "The Ohio Supercomputer Center recently displayed the power of its new Owens Cluster by running the single-largest scale calculation in the Center’s history. Scientel IT Corp used 16,800 cores of the Owens Cluster on May 24 to test database software optimized to run on supercomputer systems. The seamless run created 1.25 Terabytes of synthetic data."

The post Video: Scientel Runs Record Breaking Calculation on Owens Cluster at OSC appeared first on insideHPC.

|

|

by Rich Brueckner on (#34A2N)

Mark Sims (DoD) and Bob Sorensen from Hyperion Research gave this talk at the HPC User Forum in Milwaukee. Here, they demonstrate an exciting new tool that aims to map HPC centers across the USA.

The post Mapping of the Opportunities for Government, Academia, and Industry Engagement in HPC appeared first on insideHPC.

|

|

by staff on (#349ZN)

Today Advanced Clustering Technologies announced the deployment of a new supercomputer cluster at the University of South Dakota. The machine is named "Lawrence" after Nobel Laureate and University of South Dakota alumnus E. O. Lawrence. "Lawrence makes it possible for us to accelerate scientific progress while reducing the time to discovery," said Doug Jennewein, the University’s Director of Research Computing. "University researchers will be able to achieve scientific results not previously possible, and our students and faculty will become more engaged in computationally assisted research."

The post Advanced Clustering Technologies Deploys Lawrence Supercomputer at University of South Dakota appeared first on insideHPC.

|

|

by Rich Brueckner on (#349ZQ)

Today the Barcelona Supercomputing Center announced it has allocated 20 million processor hours to the LIGO project, whose work was recognized with the 2017 Nobel Prize in Physics. "The importance of MareNostrum for our work is very easy to explain: without it we could not do the kind of work we do; we would have to change our direction of research."

The post Video: MareNostrum Supercomputer Powers LIGO Project with 20 Million Processor Hours appeared first on insideHPC.

|

|

by Rich Brueckner on (#349R9)

Researchers are exploring the Tree of Life with the help of the CIPRES portal at the San Diego Supercomputer Center. "As a community-built resource, CIPRES addresses what the scientists really want and need to do in the real world of research," said Mishler. "Aside from increasing our understanding of the evolutionary relationships of this planet’s diverse range of species, the research also has yielded results of critical importance to the health and welfare of humans."

The post Exploring Evolutionary Relationships through CIPRES appeared first on insideHPC.

|

|

by Rich Brueckner on (#346VV)

Sunita Chandrasekaran and Guido Juckeland have published a new book on Programming with OpenACC. "Scientists and technical professionals can use OpenACC to leverage the immense power of modern GPUs without the complexity traditionally associated with programming them. OpenACC for Programmers integrates contributions from 19 leading parallel-programming experts from academia, public research organizations, and industry."

The post New Book: OpenACC for Programmers appeared first on insideHPC.

|

|

by Rich Brueckner on (#346RG)

Marius Stan from Argonne gave this talk at the 2017 Argonne Training Program on Extreme-Scale Computing. Famous for his part-time acting role on the Breaking Bad TV show, Marius Stan is a physicist and a chemist interested in non-equilibrium thermodynamics, heterogeneity, and multi-scale computational science for energy applications. The goal of his research is to discover or design materials, structures, and device architectures for nuclear energy and energy storage.

The post Dr. Marius Stan Presents: Uncertainty of Thermodynamic Data – Humans and Machines appeared first on insideHPC.

|