|

by Rich Brueckner on (#1GCM8)

“Dell is proud to collaborate with South Africa’s CSIR on the delivery of the fastest HPC system in Africa. The Lengau system will provide access and open doors to help drive new research, new innovations and new national economic benefits,” said Jim Ganthier, vice president and general manager, Engineered Solutions, HPC and Cloud at Dell. “While Lengau benefits from the latest technology advancements, from performance to density to energy efficiency, the most important benefit is that Lengau will enable new opportunities and avenues in research, the ability to help spur private sector growth in South Africa and, ultimately, help enable human potential.” The post Dell Powers 1 Petaflop Lengau Supercomputer for CHPC in South Africa appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-04 14:00 |

|

by staff on (#1GAJH)

Our in-depth series on Intel architects continues with this profile of Mark Seager, a key driver in the company's mission to achieve Exascale performance on real applications. "Creating and incentivizing an exascale program is huge. Yet more important, in Mark’s view, NSCI has inspired agencies to work together to spread the value from predictive simulation. In the widely publicized Project Moonshot sponsored by Vice President Biden, the Department of Energy is sharing codes with the National Institutes of Health to simulate the chemical expression pathway of genetic mutations in cancer cells with exascale systems." The post With the Help of Dijkstra’s Law, Intel’s Mark Seager is Changing the Scientific Method appeared first on insideHPC.

|

|

by Rich Brueckner on (#1GA7G)

Bret Weber from DDN presented this talk at the 2016 MSST Conference. "SSDs and all-flash arrays are being marketed as a panacea. This may be true if you’re a small to medium enterprise that simply needs more performance for email servers or wants to speed up just a few hundred VMs. But, for Enterprise At-scale and High Performance Computing environments, identifying and removing I/O bottlenecks is much more complex than simply exchanging spinning disk drives with flash devices. Aside from performance – efficiency, scalability and integration are also critical success factors in larger and non-standard environments. In this domain, selecting a partner with the tools, technology and experience to holistically examine and optimize your entire I/O path can deliver orders of magnitude greater acceleration and competitive advantage to your organization." The post Video: Adoption Trends for Solid State in Big Data Sites appeared first on insideHPC.

|

|

by Rich Brueckner on (#1GA4D)

Today Intel announced the new Intel Xeon processor E7-8800/4800 v4 family for High Performance Data Analytics. Designed with the enterprise in mind, the Intel Xeon processor E7 v4 family offers robust performance, the industry’s largest memory capacity per socket, advanced reliability and hardware-enhanced security for real-time analytics so that businesses can rapidly gain actionable insights from massive and complex data sets. The post Intel Ramps up HPDA with New Xeon E7 v4 Family of Processors appeared first on insideHPC.

|

|

by staff on (#1G9C5)

Today ThinkParQ from Germany announced certification of BeeGFS over Intel Omni-Path Architecture (OPA). “Without a doubt, Intel has made a big leap in performance with the new 100Gbps OPA technology compared to previous interconnect generations,” said Sven Breuner, CEO of ThinkParQ. “The fact that we didn’t need to modify even a single line of the BeeGFS source code to deliver this new level of throughput confirms that the BeeGFS internal design is really future-proof.” The post BeeGFS Certified on Intel Omni-Path at 12GB/s per server appeared first on insideHPC.

|

|

by Rich Brueckner on (#1G6TX)

Chris Mason from Acceleware presented this talk at GTC 2016. "This session will focus on real life examples including an RF powered contact lens, a wireless capsule endoscopy, and a smart watch. The session will also outline the basics of the subgridding algorithm along with the GPU implementation and the development challenges. Performance results will illustrate the significant reduction in computation times when using a localized subgridded mesh running on an NVIDIA Tesla GPU." The post Video: Using GPUs for Electromagnetic Simulations of Human Interface Technology appeared first on insideHPC.

|

|

by Rich Brueckner on (#1G6QV)

The MVAPICH User Group (MUG) meeting has issued its Call for Presentations. The event takes place August 15-17 in Columbus, Ohio. The post Call for Presentations: MVAPICH User Group (MUG) Meeting appeared first on insideHPC.

|

|

by Rich Brueckner on (#1G46P)

"Newton's explanation of planetary orbits is one of the greatest achievements of science. We will follow Feynman's approach to show how the motion of the planets around the sun can be calculated using computers and without using Newton's advanced mathematics. This talk will convince you that doing physics with Python is way more fun than the way you did physics in high school or university." The post Video: Computational Physics with Python appeared first on insideHPC.

|
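
The step-by-step orbit calculation mentioned in the Computational Physics with Python abstract above can be sketched roughly as follows. This is a minimal illustration, not the talk's code: the function name `simulate_orbit`, the leapfrog (kick-drift-kick) scheme, the fixed sun at the origin, and the units with GM = 1 are all assumptions made for the example.

```python
import math

def simulate_orbit(x, y, vx, vy, dt=0.001, steps=6283, gm=1.0):
    """Advance a planet around a fixed sun at the origin.

    Hypothetical helper: uses leapfrog (kick-drift-kick) steps and
    returns the list of (x, y) positions, including the start point.
    """
    def accel(px, py):
        # Inverse-square attraction toward the origin.
        r3 = (px * px + py * py) ** 1.5
        return -gm * px / r3, -gm * py / r3

    path = [(x, y)]
    ax, ay = accel(x, y)
    for _ in range(steps):
        vx += 0.5 * dt * ax          # half kick
        vy += 0.5 * dt * ay
        x += dt * vx                 # drift
        y += dt * vy
        ax, ay = accel(x, y)
        vx += 0.5 * dt * ax          # second half kick
        vy += 0.5 * dt * ay
        path.append((x, y))
    return path

# With GM = 1, starting at radius 1 with speed 1 gives a circular orbit.
path = simulate_orbit(1.0, 0.0, 0.0, 1.0)
radii = [math.hypot(px, py) for px, py in path]
print(f"radius stayed within [{min(radii):.6f}, {max(radii):.6f}]")
```

Only additions and multiplications are repeated in small time steps; no closed-form solution of the orbit is needed, which is the point the abstract makes.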

|

by Rich Brueckner on (#1G44S)

The Heterogeneous System Architecture (HSA) Foundation has released the HSA 1.1 specification, significantly enhancing the ability to integrate open and proprietary IP blocks in heterogeneous designs. The new specification is the first to define the interfaces that enable IP blocks from different vendors to communicate, interoperate and collectively compose an HSA system. The post HSA 1.1 Specification Adds Multi-Vendor Architecture Support appeared first on insideHPC.

|

|

by staff on (#1G16W)

In this special guest feature, Scot Schultz from Mellanox and Terry Myers from HPE write that the two companies are collaborating to push the boundaries of high performance computing. "So while every company must weigh the cost and commitment of upgrading its data center or HPC cluster to EDR, the benefits of such an upgrade go well beyond the increase in bandwidth. Only HPE solutions that include Mellanox end-to-end 100Gb/s EDR deliver efficiency, scalability, and overall system performance that results in maximum performance per TCO dollar." The post HPE and Mellanox: Advanced Technology Solutions for HPC appeared first on insideHPC.

|

|

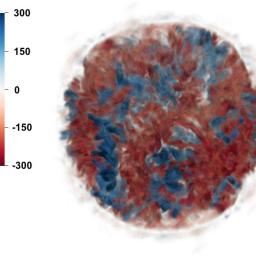

by Rich Brueckner on (#1G11S)

Researchers from Michigan State University are using the Mira supercomputer to perform large-scale 3-D simulations of the final moments of a supernova’s life cycle. While the 3-D simulation approach is still in its infancy, early results indicate that the models are providing a clearer picture than ever before of the mechanisms that drive supernova explosions. The post Supercomputing Supernova Explosions on Mira appeared first on insideHPC.

|

|

by Rich Brueckner on (#1G0YV)

"IBM has developed new scale-up and scale-out systems, with 16 million neurons, that will be presented in Dr Modha's pioneering research talk. Watson wins at Jeopardy and enters industrial applications while AlphaGo defeats the human Go champion. No day goes by without the doomsday prediction of AI-infused robots taking over our world coming up in the news. Visions of HAL and Terminator coming alive? Will Artificial Intelligence make us obsolete?" The post Video: Will AI & Robotics Make Humans Obsolete? appeared first on insideHPC.

|

|

by Rich Brueckner on (#1G0W1)

"Because water can transport heat 25 times more efficiently than air, ‘Triton’ can run high-performing components faster and more efficiently than traditional air-cooled systems. Its ability to sub-cool the processor and operate at higher frequencies means that Triton can deliver up to 59% greater performance than the popular Intel Xeon processor E5-2680v4 for similar costs." The post Dell Unveils Triton Liquid Cooling Technology appeared first on insideHPC.

|

|

by Rich Brueckner on (#1G0RD)

Today the Transaction Processing Performance Council (TPC) announced the immediate availability of the TPCx-BB benchmark. The benchmark is designed to measure the performance of Hadoop-based systems including MapReduce, Apache Hive, and Apache Spark Machine Learning Library (MLlib). The post New TPCx-BB Benchmark Compares Big Data Analytics Systems appeared first on insideHPC.

|

|

by Rich Brueckner on (#1FX8P)

Over at the Dell HPC Community, Jim Ganthier writes that TACC is planning to deploy its 18 Petaflop Stampede 2 supercomputer based on Dell servers running Intel Knights Landing processors. "Stampede 2 will do more than just meet growing demand from those who run data-intensive research. Imagine the discoveries that will be made as a result of this award and the new system. Now more than ever is an exciting time to be in HPC." The post Stampede 2 Supercomputer at TACC to Sport 18 Petaflops appeared first on insideHPC.

|

|

by Rich Brueckner on (#1FX1F)

Today LBNL released version 2.0 of its innovative Singularity container software. The post Singularity 2.0 Software Makes Linux Applications More Portable appeared first on insideHPC.

|

|

by Rich Brueckner on (#1FX01)

"One of the benefits of our ClusterStor modular architecture is its flexibility - we can deliver a very comparable performance with either Lustre or Spectrum Scale on the same extensible architecture. There are two key reasons for that balance of performance and flexibility. Firstly, we have a unique scale-out storage architecture with a distributed processing model, meaning you’re not tied to a centralized legacy RAID controller hardware. Secondly, there is no proprietary hardware or RAID firmware in the system. All the software runs in a standard Linux environment, so we are able to take our software stack and it is really agnostic as to whether we are running with Lustre or SS." The post Interview: Ken Claffey on Seagate HPC Innovation On Deck at ISC 2016 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1FWTC)

Today IBM expanded its portfolio of software-defined infrastructure solutions with cognitive features to help clients improve the management of computing resources to achieve faster results from data-driven applications and analytics. As part of the announcement, the company has rebranded its Platform computing software as IBM Spectrum Computing. "IBM Spectrum Computing software includes IBM Spectrum Conductor, IBM Spectrum LSF and IBM Spectrum Symphony. The expanded software-defined computing portfolio complements the IBM Spectrum Storage software-defined storage family." The post Platform Rebrands as IBM Spectrum Computing with Focus on HPDA appeared first on insideHPC.

|

|

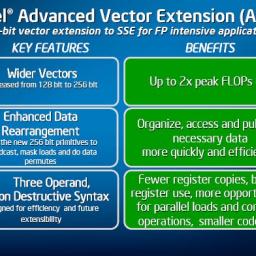

by MichaelS on (#1FWPG)

For maximum performance, data needs to flow into and out of the vectorization units. There are a few things to remember regarding laying out the data to gain high performance. These include data layout, alignment, prefetching, and store operations. "Prefetching is also extremely important in HPC applications that use coprocessors. If the vectors are aligned, then the data can be streamed to the math units very efficiently, with data being prefetched, rather than the system having to load registers from various memory storage." The post Data Layout for High Performance appeared first on insideHPC.
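
As a rough illustration of the layout and alignment points in this excerpt, the sketch below contrasts an interleaved "array of structures" with a "structure of arrays" in which each field is stored contiguously. It is not taken from the article; the helper names (`aos_stride_bytes`, `to_soa`, `is_aligned`) and the 64-byte boundary are assumptions chosen for the example, and it uses only Python's standard `array` module.

```python
from array import array

FIELDS = 3  # x, y, z per point (illustrative)

def aos_stride_bytes(itemsize, fields=FIELDS):
    """Bytes between consecutive x values when x, y, z are interleaved."""
    return itemsize * fields

def to_soa(points):
    """Split [(x, y, z), ...] into one contiguous array of doubles per field."""
    return tuple(array("d", column) for column in zip(*points))

def is_aligned(buf, boundary=64):
    """True if the buffer's start address is a multiple of `boundary` bytes."""
    address, _length = buf.buffer_info()
    return address % boundary == 0

points = [(1.0, 2.0, 3.0), (4.0, 5.0, 6.0), (7.0, 8.0, 9.0)]
xs, ys, zs = to_soa(points)

# In the SoA layout consecutive x values sit itemsize bytes apart;
# in the interleaved layout they sit FIELDS * itemsize bytes apart.
print("SoA stride:", xs.itemsize, "AoS stride:", aos_stride_bytes(xs.itemsize))
print("xs starts on a 64-byte boundary:", is_aligned(xs))
```

Unit-stride access of the kind the SoA layout gives is generally what lets hardware stream and prefetch data into vector units; whether a given buffer happens to be aligned depends on the allocator, which is why the alignment check is reported rather than assumed.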

|

|

by Rich Brueckner on (#1FSFD)

"In the past, researchers often had to wait in line for the computing power for scientific discovery. With Alces Flight, you can take your compute projects from 0 to 60 in a few minutes. Researchers and scientists can quickly spin up multiple nodes with pre-installed compilers, libraries, and hundreds of scientific computing applications. Flight comes with a catalog of more than 750 built-in scientific computing applications, libraries and versions, covering nearly everything from engineering, chemistry and genomics, to statistics and remote sensing." The post Alces Flight from AWS Lets You Build a “Self-service Supercomputer in Minutes” appeared first on insideHPC.

|

|

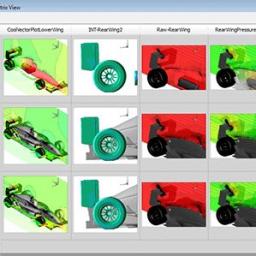

by staff on (#1FS19)

Denver-based Boom Technology is leveraging Rescale’s cloud-based simulation and optimization system to enable a rebirth of supersonic passenger travel. “Rescale’s cloud platform is a game-changer for engineering. It gives Boom computing resources comparable to building a large on-premise HPC center. Rescale lets us move fast with minimal capital spending and resources overhead,” said Joshua Krall, Co-founder & CTO. The post Rescale HPC Cloud to Foster Return of Supersonic Travel appeared first on insideHPC.

|

|

by Rich Brueckner on (#1FRX7)

Today Mellanox announced the BlueField family of programmable processors for networking and storage applications. “As a networking offload co-processor, BlueField will complement the host processor by performing wire-speed packet processing in-line with the network I/O, freeing the host processor to deliver more virtual networking functions (VNFs),” said Linley Gwennap, principal analyst at the Linley Group. “Network offload results in better rack density, lower overall power consumption, and deterministic networking performance.” The post Mellanox Introduces BlueField SoC Programmable Processors appeared first on insideHPC.

|

|

by Rich Brueckner on (#1FRQT)

The ISAV2016 Workshop has issued its Call for Participation. Held at SC16 in cooperation with SIGHPC, the In Situ Infrastructures for Enabling Extreme-Scale Analysis and Visualization Workshop takes place Sunday, November 13th, 2016. The considerable interest in the HPC community regarding in situ analysis and visualization is due to several factors. First is an I/O cost […] The post Call for Participation: ISAV2016 Workshop at SC16 appeared first on insideHPC.

|

|

by staff on (#1FRM1)

“As the industry’s leading server vendor, HPE is committed to bringing new infrastructure innovations to the market that enable organizations to derive more value from their data,” said Vikram K, Director, Servers, Hewlett Packard Enterprise India. “We are delivering on that commitment by delivering a complete Persistent Memory hardware and software ecosystem into our server portfolio, as well as high-performance computing enhancements that will allow customers to increase agility, protect critical information and deliver new applications and services more quickly than ever before.” The post Hewlett Packard Enterprise Adds Persistent Memory to Server Portfolio appeared first on insideHPC.

|

|

by Rich Brueckner on (#1FRE9)

This week, the Women in HPC organization announced a series of special events coming up at ISC 2016. To learn more, we caught up with WHPC Director Dr. Toni Collis from EPCC at the University of Edinburgh. "Most people don’t notice how un-diverse HPC really is. But when you start counting the number of women in the room, at the table, or in the C-suite, it is quite surprising." The post Interview: Women in HPC to Host Special Events at ISC 2016 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1FN4M)

The International Workshop on Communication Architectures at Extreme Scale has published its Advance Agenda. Now in its second year, Exacom 2016 will be held in conjunction with ISC 2016 in Frankfurt on Thursday, June 23, 2016. The post Agenda Posted for Exacom 2016 – Communication Architectures at Extreme Scale appeared first on insideHPC.

|

|

by Rich Brueckner on (#1FMMC)

The 13th IFIP International Conference on Network and Parallel Computing has issued its Call for Papers. The NPC 2016 event takes place October 28-29 in Xi'an, China. The post Call for Papers: International Conference on Network and Parallel Computing appeared first on insideHPC.

|

|

by Rich Brueckner on (#1FMJS)

In this video from PYCON 2016 in Portland, Lorena Barba from George Washington University presents: Beyond Learning to Program, Education, Open Source Culture, Structured Collaboration, and Language. "PyCon is the largest annual gathering for the community using and developing the open-source Python programming language." The post Video: Lorena Barba Keynote at PYCON 2016 appeared first on insideHPC.

|

|

by staff on (#1FMDG)

Today Univa announced the general availability of its Grid Engine 8.4.0 product. Enterprises can now automatically dispatch and run jobs in Docker containers, from a user-specified Docker image, on a Univa Grid Engine cluster. This significant update simplifies running complex applications in a Grid Engine cluster and reduces configuration and OS issues. Grid Engine 8.4.0 isolates user applications into their own container, avoiding conflict with other jobs on the system, and enables legacy applications in Docker containers and non-container applications to run in the same cluster. The post Univa Grid Engine Adds Support for Docker Containers & Knights Landing appeared first on insideHPC.

|

|

by Rich Brueckner on (#1FM92)

Today RAID Inc. announced a contract to provide Lawrence Livermore National Laboratory (LLNL) with a custom parallel file system solution for its unclassified computing environment. RAID will deliver a 17PB file system able to sustain up to 180 GB/s. These high-performance, cost-effective solutions are designed to meet LLNL’s current and future demands for parallel access data storage. The post Lustre and ZFS to Power New Parallel File System at LLNL appeared first on insideHPC.

|

|

by Rich Brueckner on (#1FH40)

"Computer simulations of complex systems provide an opportunity to study their time evolution under user control. Simulations of neural circuits are an established tool in computational neuroscience. Through systematic simplification on spatial and temporal scales they provide important insights in the time evolution of networks which in turn leads to an improved understanding of brain functions like learning, memory or behavior. Simulations of large networks are exploiting the concept of weak scaling where the massively parallel biological network structure is naturally mapped on computers with very large numbers of compute nodes. However, this approach is suffering from fundamental limitations. The power consumption is approaching prohibitive levels and, more seriously, the bridging of time-scales from millisecond to years, present in the neurobiology of plasticity, learning and development is inaccessible to classical computers. In the keynote I will argue that these limitations can be overcome by extreme approaches to weak and strong scaling based on brain-inspired computing architectures." The post Video: Neuromorphic Computing – Extreme Approaches to Weak and Strong Scaling appeared first on insideHPC.

|

|

by staff on (#1FH10)

Today Cavium announced ThunderX2, its second generation of Workload-Optimized ARM server SoCs. ThunderX2 targets high performance volume servers deployed by Public/Private Cloud and Telco data centers and high performance computing applications. "Optimized for key Data Center workloads, ThunderX2 will deliver comparable performance at a better total cost of ownership compared to the next generation of traditional server processors." The post Cavium Rolls Out ThunderX2 ARM Processor appeared first on insideHPC.

|

|

by Rich Brueckner on (#1FE0K)

In this video from the Perimeter Institute for Theoretical Physics in Ontario, Dr. Tim Palmer from the University of Oxford presents: Climate Change, Chaos, and Inexact Computing. "How well can we predict the climate future? This question is at the heart of Tim Palmer’s research into the links between chaos theory and the science of climate change. Palmer will discuss climate modeling, the emerging concept of inexact supercomputing, and chaos theory." The post Video: Climate Change, Chaos, and Inexact Computing appeared first on insideHPC.

|

|

by Rich Brueckner on (#1FDYE)

With ISC 2016 coming up in June, a number of ancillary events have been scheduled in Frankfurt to take advantage of this annual gathering of over 2500 supercomputing professionals. We've compiled a full listing for what looks to be an exciting week in the history of high performance computing. The post Full Listing of Ancillary Events at ISC 2016 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1FB9Q)

In this Programming Throwdown podcast, Mark Harris from NVIDIA describes CUDA programming for GPUs. "CUDA is a parallel computing platform and programming model invented by NVIDIA. It enables dramatic increases in computing performance by harnessing the power of the graphics processing unit (GPU). With millions of CUDA-enabled GPUs sold to date, software developers, scientists and researchers are finding broad-ranging uses for GPU computing with CUDA." The post Podcast: CUDA Programming for GPUs appeared first on insideHPC.

|

|

by Rich Brueckner on (#1FB9S)

Thanks to a grant from the National Science Foundation, Indiana University is developing an online service that will make it easier for university administrators to understand the importance of funding related to IT systems based at their institutions. The novel functionality will be available as a module for Open XDMoD (XD Metrics on Demand), which was developed by the University at Buffalo Center for Computational Research (CCR). The post XDMoD Tool to Measure Impact of Campus Cyberinfrastructure appeared first on insideHPC.

|

|

by Rich Brueckner on (#1F7X2)

In this video from the 2016 MSST Conference, Dave Anderson from Seagate presents: Whither Hard Disk Archives? The talk was part of a panel discussion on Data-intensive Workflows. The post Video: Whither Hard Disk Archives? appeared first on insideHPC.

|

|

by staff on (#1F7V5)

"One of the most recurrent themes is that of open-source vs. proprietary code. This debate is often painted with the idealistic open-source evangelists on one side, and the business-focused proprietary software advocates on the other. This is, of course, an unfair depiction of the topic. In reality, when debating open-source vs. proprietary, several issues tend to get conflated into one argument – open-source vs. closed-source, free vs. paid-for, restrictive vs. flexible licensing, supported vs. unsupported, code quality, and so on." The post Open-source vs. Proprietary – Keeping Ideology Out of the Equation appeared first on insideHPC.

|

|

by staff on (#1F7QB)

"The world of artificial intelligence is rapidly evolving and affecting every aspect of our daily life. And soon this progress will be felt in the pharmaceutical industry. We set up the Pharma.AI division to help pharmaceutical companies significantly accelerate their R&D and increase the number of approved drugs, but in the process we came up with over 800 strong hypotheses in oncology, cardiovascular, metabolic and CNS space and started basic validation. We are cautious about making strong statements, but if this approach works, it will uberize the pharmaceutical industry and generate an unprecedented number of QALY," said Alex Zhavoronkov, PhD, CEO of Insilico Medicine, Inc. The post Insilico Applies Deep Learning to Drug Discovery appeared first on insideHPC.

|

|

by Rich Brueckner on (#1F7K3)

Today Vela Software announced that it has acquired Tecplot, a leading provider of fluid dynamics visualization and analysis software for engineers and scientists in the aerospace and oil & gas vertical markets. The post Vela Software Acquires Tecplot Fluid Dynamics Software appeared first on insideHPC.

|

|

by Rich Brueckner on (#1F3Z3)

"The process of developing HPC software requires consideration of issues in software design as well as practices that support the collaborative writing of well-structured code that is easy to maintain, extend, and support. This presentation will provide an overview of development environments and how to configure, build, and deploy HPC software using some of the tools that are frequently used in the community." The post Video: Developing, Configuring, Building, and Deploying HPC Software appeared first on insideHPC.

|

|

by Rich Brueckner on (#1F3Q3)

The TERATEC Forum 2016 will host a June 29 workshop on HPC, Connected Objects, and IoT Infrastructures. The full event takes place June 28-29 in Palaiseau, France. "Many innovations and new generation systems are based on connected things equipped with massive instrumentation and integrated within Industrial Internet of Things (IIoT) infrastructure. This workshop will be focused on new HPC software and infrastructure technologies integrated within these future global smart systems." The post Teratec Forum to Spotlight HPC and IoT appeared first on insideHPC.

|

|

by Rich Brueckner on (#1F3N5)

"Weather prediction using high performance computing relies on having physically based models of the atmosphere that can deliver forecasts well in advance of the weather actually happening. ECMWF has embarked on a scalability program together with the NWP and climate modeling community in Europe. The talk will give an overview of the principles underlying numerical weather prediction as well as a description of the HPC related challenges that are facing the NWP and climate modeling communities today." The post Weather Prediction and the Scalability Challenge appeared first on insideHPC.

|

|

by MichaelS on (#1F3FT)

The process of vectorizing application code is very important and can result in major performance improvements when coupled with vector hardware. In many cases, incremental work can mean a large payoff in terms of performance. "Applications that have successfully been implemented on supercomputers or have made use of SIMD instructions such as SSE or AVX are excellent candidates for a methodology to take advantage of modern vector capabilities in servers today." The post Vectorization Steps appeared first on insideHPC.

|

|

by Rich Brueckner on (#1F0D8)

Ansys, a provider of engineering simulation technology, has announced the release of its SeaScape architecture to help engineers accelerate the optimization of designs using a combination of elastic computation, machine learning, big data analytics and simulation technology. The post Ansys SeaHawk Brings Big Data Analytics to EDA appeared first on insideHPC.

|

|

by Rich Brueckner on (#1EZQJ)

In this video, Moshe Rappoport of the IBM Research THINK Lab - Zurich takes us into the world of quantum computing. He explains why the recent steps that scientists have made in this field are very likely just the beginning of yet another quantum leap in the history of computing. "The IBM Quantum Experience is a virtual lab where you can design and run your own algorithms through the cloud on real quantum processors located in the IBM Quantum Lab at the Thomas J Watson Research Center in Yorktown Heights, New York." The post Video: Why Quantum Computing Matters appeared first on insideHPC.

|

|

by Rich Brueckner on (#1EZNB)

Today AMD announced the Multiuser GPU (MxGPU) for blade servers, a new graphics virtualization solution that provides a workstation-class experience. Now available on HPE ProLiant WS460c Gen9 blade servers, the AMD FirePro S7100X GPU is the industry’s first and only hardware-virtualized GPU that is compliant with the SR-IOV (Single Root I/O Virtualization) PCIe virtualization standard. The AMD FirePro S7100X GPU is […] The post AMD FirePro S7100X Hardware-Virtualized GPU Comes to HPE Blade Servers appeared first on insideHPC.

|

|

by Rich Brueckner on (#1EZJ2)

Today Bright Computing announced that Sri Sathya Sai Institute of Higher Learning (SSSIHL) in India has chosen Bright infrastructure management technology to manage its HPC environment. This heterogeneous and hybrid cluster at SSSIHL is the first step towards achieving a self-sufficient HPC facility for the Institute’s research work, and we are pleased that Bright is […] The post Sri Sathya Sai Institute in India Chooses Bright Technology appeared first on insideHPC.

|

|

by staff on (#1EZFW)

"We want to encourage and support that collaborative behavior in whatever way we can, because there are a multitude of problems in government agencies and commercial entities that seem to have high performance computing solutions. Think of bringing together the tremendous computational expertise you find from the DOE labs with the problems that someone like the National Institutes of Health is trying to solve. You couple those two together and you really can create something amazing that will affect all our lives. We want to broaden their exposure to the possibilities of HPC and help that along. It’s important, and it will allow all of us in HPC to more broadly impact the world with the large systems as well as the more moderate-scale systems." The post Disruptive Opportunities and a Path to Exascale: A Conversation with HPC Visionary Alan Gara of Intel appeared first on insideHPC.

|

|

by staff on (#1EWHX)

Today Symbolic IO emerged from stealth mode with a suite of products destined to change the way industry defines storage and computing architecture, by bringing to market the first truly computational-defined storage solution. "The company’s IRIS (the Intensified RAM Intelligent Server) product will be offered in three variations: IRIS Compute, IRISVault and IRIS Store. The server solution introduces a new approach to storage and computing by rethinking how binary bits are processed and ultimately changing the way data is utilized. Symbolic’s solutions ensure that enterprise storage and compute no longer rely on media or hardware, instead “materializing” and “dematerializing” data in real-time and providing greater flexibility, performance and security." The post Symbolic IO Emerges from Stealth with First Computational-Defined Storage Solution appeared first on insideHPC.

|