|

by Rich Brueckner on (#1MVAN)

Today GIGABYTE Technology and Cavium announced a new set of servers built on the industry-leading ThunderX family of workload-optimized ARM server SoCs. According to Cavium, the collaboration brings the world's most powerful 64-bit ARM-based servers to market to address increasingly demanding application and workload requirements. The post Cavium Rolls Out ThunderX Servers with GIGABYTE Technology appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-04 12:15 |

|

by staff on (#1MTXB)

“Private cloud storage enabled by BlackPearl provides the best option for balancing performance and cost; however, some customer workflows benefit greatly from incorporating a public cloud storage infrastructure,” said Matt Starr, Spectra Logic’s CTO. “Public cloud works best when a disaster recovery copy is needed; when wide data distribution is called for; to create geographic dispersion of data; and when other cloud-based features can be leveraged, such as automatic transcoding. The new Spectra hybrid storage ecosystem means that Spectra customers don’t have to choose between public and private cloud; BlackPearl brings them together seamlessly.” The post Spectra BlackPearl Offers Direct Archive to AWS appeared first on insideHPC.

|

|

by staff on (#1MTQ2)

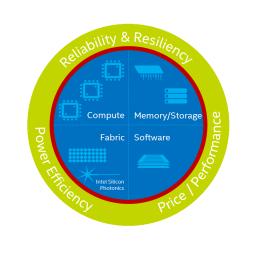

For decades, Intel has been enabling insight and discovery through its technologies and contributions to parallel computing and High Performance Computing (HPC). Central to the company’s most recent work in HPC is a new design philosophy for clusters and supercomputers called Intel® Scalable System Framework (Intel® SSF), an approach designed to enable sustained, balanced performance as the community pushes towards the Exascale era. The post Intel® Xeon Phi™ Processor—Highly Parallel Computing Engine for HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MTEC)

In this video from PASC16, Peter Bauer from ECMWF shares his perspectives on the conference and his work with high performance computing for weather forecasting. "ECMWF specializes in global numerical weather prediction for the medium range (up to two weeks ahead). We also produce extended-range forecasts for up to a year ahead, with varying degrees of detail. We use advanced computer modeling techniques to analyze observations and predict future weather." The post Interview: Peter Bauer from ECMWF at PASC16 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MTCD)

While SC16 isn't until November, the conference is already gaining momentum. A record 188 organizations have already signed up to exhibit on the industry floor. The post SC16 Exhibits Already Breaking Records appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MT8X)

The Argonne Leadership Computing Facility (ALCF) is now accepting proposals for its Aurora Early Science Program (ESP) through September 2, 2016. The program will award computing time to 10 science teams to pursue innovative research as part of pre-production testing on the facility’s next-generation system. Aurora is a massively parallel, many-core Intel-Cray supercomputer that will deliver 18 times the computational performance of Mira, ALCF’s current production system. The post Call for Proposals: Aurora Early Science Program at Argonne appeared first on insideHPC.

|

|

by MichaelS on (#1MQDR)

Cloud computing is growing and replacing many data centers for High Performance Computing (HPC) applications. However, the movement towards using a cloud infrastructure is not without challenges. This whitepaper discusses many of the challenges in moving from an on-premises HPC solution to an HPC cloud solution. The post Best In Class HPC Cloud Solutions appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MQ6R)

In this podcast, the Radio Free HPC team welcomes Shahin Khan from OrionX to a discussion on chip architectures for HPC. "More and more new alternative architectures were in evidence at ISC in Germany this year, but what does it take for a chip architecture to be a winner? Looking back, chips like DEC Alpha had many advantages over the competition, but it did not survive." The post Radio Free HPC Looks at What Chip Architectures Need to Succeed appeared first on insideHPC.

|

|

by staff on (#1MQ6S)

"Achieving the No. 1 ranking is significant for China’s economic and energy security, not to mention national security. With 125 petaFLOP/s (peak), China’s supercomputer is firmly on the path toward applying incredible modeling and simulation capabilities enabling them to spur innovations in the fields of clean energy, manufacturing, and yes, nuclear weapons and other military applications. The strong probability of China gaining advantages in these areas should be setting off loud alarms, but it is hard to see what the U.S. is going to do differently to respond." The post With China Kicking the FLOP Out of Us, the Gold Medal Prize is Future Prosperity appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MPWS)

Now that ARM has been acquired, the big question is how much the Softbank investment firm will invest in bolstering their chips for HPC. Meanwhile, ARM continues to gain traction as evidenced by

|

|

by staff on (#1MPS4)

The UK semiconductor company ARM may soon be acquired by Japan’s Softbank for £24.3 billion. The Cambridge-based firm is best known for designing microchips used in most smartphones, including Apple's and Samsung's. However, this year it was announced that the upgrade to the K computer, Japan’s flagship supercomputer housed at RIKEN, would also use ARM-based processors. The post Softbank to Acquire ARM appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MPNY)

Today One Stop Systems (OSS) announced that it has completed a merger with Magma, with OSS as the surviving entity. Both companies are market leaders in PCIe expansion technology used to create high-end compute accelerators and flash storage arrays. Together they become a dominant technology leader of PCIe expansion appliances. The post One Stop Systems Merges with Magma appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MM0K)

Today, Allinea announced that the company will be exhibiting at XSEDE16 July 17-21 in Miami. The conference will attract an audience across industry and academia to discuss the key themes of diversity, big data and science at scale. “Our tools are used extensively across the XSEDE user base so we’re delighted to be extending the value they bring by giving practical advice for getting the best out of infrastructure capabilities through software tuning, especially given the addition of support for the full Intel Xeon Phi family in our new v6.1 software release,” said Rob Rick, VP Americas for Allinea. The post Allinea to Share Top Tips for Code Modernization at XSEDE16 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MKZE)

As the first recipient of the PRACE Ada Lovelace Award for HPC, Dr Zoe Cournia was selected for her outstanding contributions and impact on HPC in Europe on a global level. “Using the PRACE HPC resources and recent advances in computer-aided drug design allow us to develop drugs specifically designed for a given protein, shortening the time for development of new drugs,” says Dr Cournia. “I believe that our work is a good example of how computers help develop candidate drugs that have the potential to save millions of lives worldwide. I am honored to receive this prestigious award and hope that this serves as inspiration to other female researchers in the field.” The post Video: Dr. Zoe Cournia on the PRACE Ada Lovelace Award for HPC appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MHGE)

In this video, Steven Pawson discussed how NASA uses computer models to build up a complete three-dimensional picture of El Niño in the ocean and atmosphere. Pawson is an atmospheric scientist and the chief of the Global Modeling and Assimilation Office at NASA's Goddard Space Flight Center in Greenbelt, Maryland. The post Video: A NASA Perspective on El Niño appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MHEK)

The Intel HPC Developer Conference has issued its Call for Proposals. Held in conjunction with SC16, the event takes place Nov. 12-13 in Salt Lake City. The post Call for Proposals: Intel HPC Developer Conference at SC16 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MENB)

Do you have new technology that could disrupt HPC in the near future? There's still time to get free exhibit space at SC16 in November. "At the SC16 Emerging Technologies Showcase, we invite submissions from industry, academia, and government researchers." The post Got Disruptive HPC Technology? Get Free Exhibit Space at SC16 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MEH0)

Are you looking at how to deliver HPC services through the Cloud? The CloudLightning Project in Europe is seeking your input on a new survey. The post CloudLightning Project Seeks Your Input with New Survey appeared first on insideHPC.

|

|

by staff on (#1MEF9)

The ASCR Leadership Computing Challenge (ALCC) has awarded 26 projects a total of 1.7 billion core-hours at the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science User Facility. The one-year awards began July 1. The post DOE Awards 1.7 Billion Core-hours on Argonne Supercomputers appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MEC3)

"The data that I presented from the Sanger Institute is typical of the profiles that we come across: a mix of good streaming IO (i.e., the larger reads), but unexpectedly high numbers of small reads and writes. These small reads and writes are potentially harmful to the file system. We've profiled HPC applications in various different life sciences organizations, not just the Sanger Institute, and we've found these IO patterns throughout. We've also seen similar IO patterns in EDA and oil and gas applications." The post Video: Profiling Genome Pipeline I/O with DDN and the Sanger Institute appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MEA9)

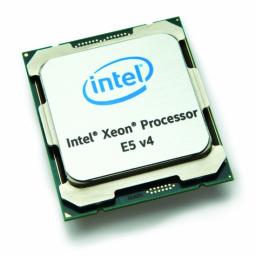

NASA Ames reports that SGI has completed an important upgrade to the Pleiades supercomputer. "As of July 1, 2016, all of the remaining racks of Intel Xeon X5670 (Westmere) processors were removed from Pleiades to make room for an additional 14 Intel Xeon E5-2680v4 (Broadwell) racks, doubling the number of Broadwell nodes to 2,016 and increasing the system’s theoretical peak performance to 7.25 petaflops. Pleiades now has a total of 246,048 CPU cores across 161 racks containing four different Intel Xeon processor types, and provides users with more than 900 terabytes of memory." The post NASA Boosts Pleiades Supercomputer with Broadwell CPUs and LTO Tape appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MBGT)

Hadoop has a reputation for being extremely difficult to install and configure, but that seems to be changing. In this video, Bright Cluster Manager for Big Data is used to install Cloudera 5.3.1 with YARN failover in just five minutes. The post Video: 5-Minute Hadoop Cluster Setup using Bright Cluster Manager appeared first on insideHPC.

|

|

by staff on (#1MBF2)

CocoLink, a subsidiary of Seoul National University, in collaboration with Orange Silicon Valley, has upgraded its KLIMAX 210 server with 20 of the latest GeForce 1080 GPUs – with the eventual goal of scaling the single 4U rack to more than 200 teraflops. The post CocoLink Using Consumer GPUs for Deep Learning appeared first on insideHPC.

|

|

by Rich Brueckner on (#1MBDD)

Today SGI announced Zero Watt Storage, a powerful extension to its SGI DMF data management platform designed to meet growing customer demands for managing critical data. SGI developed the solution to offer its DMF customers fast, disk-based access to their near-line data that significantly outperforms cloud storage in cost and access speed, by up to 5X. The post Managing Critical Data with SGI’s New Zero Watt Storage appeared first on insideHPC.

|

|

by MichaelS on (#1MAJK)

Intel® Cilk™ Plus is an extension to C and C++ that offers a quick and easy way to harness the power of both multicore and vector processing. The three Intel Cilk Plus keywords provide a simple yet surprisingly powerful model for parallel programming, while runtime and template libraries offer a well-tuned environment for building parallel applications. The post Cilk Plus from Intel Offers Easy Access to Performance appeared first on insideHPC.

|

|

by staff on (#1MAGC)

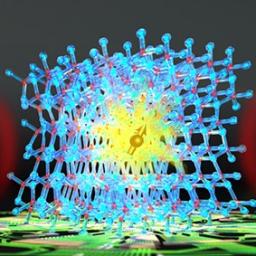

An eye-popping visualization of two black holes colliding demonstrates 3D Adaptive Mesh Refinement volume rendering on next-generation Intel® Xeon Phi™ processors. “It simplifies things when you can run on a single processor and not have to offload the visualization work,” says Juha Jäykkä, system manager of the COSMOS supercomputer. Dr. Jäykkä holds a doctorate in theoretical physics and also serves as a scientific consultant to the system’s users. “Programming is easier. The Intel Xeon Phi processor architecture is the next step for getting more performance and more power efficiency, and it is refreshingly convenient to use.” The post Faster Route to Insights with Hardware and Visualization Advances from Intel appeared first on insideHPC.

|

|

by Rich Brueckner on (#1M7G4)

Today Mellanox announced the availability of new software drivers for RoCE (RDMA over Converged Ethernet). The new drivers are designed to simplify RDMA (Remote Direct Memory Access) deployments on Ethernet networks and enable high-end performance using RoCE, without requiring the network to be configured for lossless operation. This enables cloud, storage, and enterprise customers to deploy RoCE more quickly and easily while accelerating application performance, improving infrastructure efficiency and reducing cost. The post Mellanox Enhances RoCE Software to Simplify RDMA Deployments appeared first on insideHPC.

|

|

by Rich Brueckner on (#1M7G5)

Today the Open MPI Team announced the release of Open MPI version 2.0.0. Version 2 is a major new release series containing many new features and bug fixes. The post Open MPI 2.0.0 Released appeared first on insideHPC.

|

|

by staff on (#1M77C)

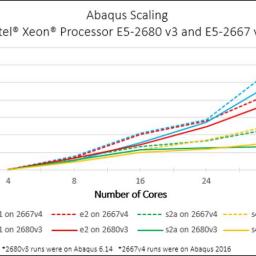

Today Simulationclusters.com announced it is now running Intel’s E5-2600 v4 processors. A site for real-time demonstration of the ROI of upgrading from a workstation to an HPC cluster, Simulationclusters.com is a collaboration between Nor-Tech, Intel, and Dassault Systèmes. Its benchmarks with the new processors show dramatic performance increases and significant cost benefits. The post Simulationclusters.com Shows ROI from Intel v4 Processors appeared first on insideHPC.

|

|

by Rich Brueckner on (#1M73V)

In this video from PASC16, Peter Messmer from Nvidia gives his perspectives on the conference and his work on co-design for high performance computing. "Using a combination of specialized rather than one type fits all processing elements offers the advantage of providing the most economical hardware for each task in a complex application. In order to produce optimal codes for such heterogeneous systems, application developers will need to design algorithms with the architectural options in mind." The post Video: Nvidia’s Peter Messmer on Co-Design at PASC16 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1M708)

The Federal University of Rio de Janeiro is embarking on ground-breaking energy research powered by a new SGI Computer. The high performance computing system will be installed through the SGI valued partner, Versatus HPC. The post SGI Powers Energy Research at UFRJ in Brazil appeared first on insideHPC.

|

|

by staff on (#1M6WQ)

"With an imminent switchover to a new Cray system with next-generation Intel Xeon Phi Processors (codenamed Knights Landing) planned for October, the ACCMS team at Kyoto University is eagerly looking forward to a potential two-fold application performance improvement from its new system. But the lab is also well aware that there is significant recoding work ahead before the promise of the new manycore technology can be realized." The post Kyoto University Thinks Widening SIMD Will be Key to Performance Gains in New Intel Xeon Phi processor-based Cray System appeared first on insideHPC.

|

|

by Rich Brueckner on (#1M3SZ)

In this podcast from ISC 2016 in Frankfurt, Steve Pawlowski from Micron discusses the latest memory technology trends for high performance computing. "When you look at a technology like 3D XPoint and some of the new materials the industry is looking at, those latencies are becoming more DRAM-like, which makes them a more attractive option to look at. Is there a way we can actually inject persistent memory that’s fairly high-performance so we don’t take a performance hit but we can certainly increase the capacity on a cost-per-bit basis versus what we have today?" The post Podcast: Micron’s Steve Pawlowski on the Latest Memory Trends for HPC appeared first on insideHPC.

|

|

by staff on (#1M3JZ)

In this special guest feature, Robert Roe from Scientific Computing World writes that the new #1 system on the TOP500 is using home-grown processors to shake up the supercomputer industry. "While the system does have a focus towards computation, as opposed to the more data-centric computing strategies that we have begun to see implemented in the US and Europe, it is most certainly not just a Linpack supercomputer. The report explains that there are already three applications running on the Sunway TaihuLight system which are finalists for the Gordon Bell Award at SC16." The post China Leads TOP500 with Home-grown Technology appeared first on insideHPC.

|

|

by Rich Brueckner on (#1M3DK)

"Data Science and Information Systems researchers at UQ are tackling the challenges of big data, real-time analytics, data modeling and smart information use. The cutting-edge solutions developed at UQ will lead to user empowerment at an individual, corporate and societal level. Our researchers are making a sustained and influential contribution to the management, modeling, governance, integration, analysis and use of very large quantities of diverse and complex data in an interconnected world." The post Video: Data Storage Infrastructure at University of Queensland appeared first on insideHPC.

|

|

by Rich Brueckner on (#1M39D)

Today the Institute for Research in Biomedicine and the Barcelona Supercomputing Center unveiled Nostrum BioDiscovery, a new biotech company that applies computational simulation to help new drugs and biotech molecules reach the market. The post Nostrum BioDiscovery to Apply Supercomputing for Drug Development appeared first on insideHPC.

|

|

by Rich Brueckner on (#1M2CP)

In this video, CoolIT Systems CEO & CTO, Geoff Lyon, reveals details on the company’s next generation liquid cooling solutions for HPC, Cloud and Enterprise markets during ISC HPC 2016 in Frankfurt. Discussion highlights include OEM server solutions, customer case studies, product launches and more. "To learn more about how CoolIT Systems products and solutions maximize data center performance and efficiency whilst significantly reducing OPEX and overall TCO, visit www.coolitsystems.com." The post The Liquid Cooling Revolution Continues for HPC with CoolIT Systems appeared first on insideHPC.

|

|

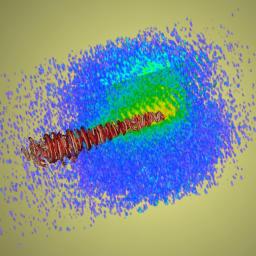

by Rich Brueckner on (#1M0AJ)

In this podcast, researchers from the University of Texas at Austin discuss how they are using TACC supercomputers to find a new way to make controlled beams of gamma rays. "The simulations done on the Stampede and Lonestar systems at TACC will guide a real experiment later this summer in 2016 with the recently upgraded Texas Petawatt Laser, one of the most powerful in the world. The scientists say the quest for producing gamma rays from non-radioactive materials will advance basic understanding of things like the inside of stars. What's more, gamma rays are used by hospitals to eradicate cancer, image the brain, and they're used to scan cargo containers for terrorist materials. Unfortunately no one has yet been able to produce gamma ray beams from non-radioactive sources. These scientists hope to change that." The post Podcast: Supercomputing Better Ways to Produce Gamma Rays appeared first on insideHPC.

|

|

by Rich Brueckner on (#1KZR1)

The LAD'16 conference has issued its Call for Presentations. Hosted jointly by CEA, EOFS, and OpenSFS, the Lustre Administrator & Developer Conference will take place Sept. 20-21 in Paris. The post Call for Lustre Presentations: LAD’16 in Paris appeared first on insideHPC.

|

|

by Rich Brueckner on (#1KZKZ)

In this video from the Transtec booth at ISC 2016, Piotr Wachowicz from Bright Computing shows how the company's cluster management software enables customers to configure and monitor OpenStack systems. "Bright Cluster Manager provides a unified enterprise-grade solution for provisioning, scheduling, monitoring and management of HPC and Big Data systems in your data center and in the cloud. Our dynamic cloud provisioning optimizes cloud utilization by automatically creating servers when they’re needed and releasing them when they’re not. Bright OpenStack provides a complete cloud solution that is easy to deploy and manage." The post Video: Deploying OpenStack Solutions with Bright Computing & Transtec appeared first on insideHPC.

|

|

by Rich Brueckner on (#1KZG3)

Registration is now open for a free Workflows Workshop to be held August 9-10 at multiple institutions across the country. Sponsored by the Blue Waters sustained-petascale computing project, this workshop will provide an overview of workflows and how they can enhance research productivity. The post Register Now for HPC Workflow Workshops appeared first on insideHPC.

|

|

by MichaelS on (#1KZEH)

Organizations that implement high-performance computing (HPC) technologies have a wide range of requirements. From small manufacturing suppliers to national research institutions, using significant computing technologies is critical to creating innovative products and leading-edge research. No two HPC installations are the same. For maximum return, budget, software requirements, performance and customization all must be considered before installing and operating a successful environment. The post Successful Implementations That Demand Flexible HPC appeared first on insideHPC.

|

|

by staff on (#1KWM3)

Businesses in the north west of the UK are being helped to develop new products faster and more cheaply. The advanced engineering technology centre was officially opened today at the Science and Technology Facilities Council’s (STFC) Daresbury Laboratory in Cheshire. It will provide UK businesses of all sizes, including small start-ups, with affordable access to more than £2 million of advanced engineering technology, including advanced 3D printing and rapid prototype assistance. The post STFC in the UK Opens New Technology Center appeared first on insideHPC.

|

|

by Rich Brueckner on (#1KWK7)

"This talk will describe one new effort to embed best practices for reproducible scientific computing into traditional university curriculum. In particular, a set of open source, liberally licensed, IPython (now Jupyter) notebooks are being developed and tested to accompany a book “Effective Computation in Physics.” These interactive lecture materials lay out in-class exercises for a project-driven upper-level undergraduate course and are accordingly intended to be forked, modified and reused by professors across universities and disciplines." The post Video: A Computational Future for Science Education appeared first on insideHPC.

|

|

by Rich Brueckner on (#1KT3V)

A new world record was set by the Huazhong University team at the Student Cluster Competition at ISC 2016. Using Nvidia Tesla K80 GPUs, the team recorded 12.56 teraflops on the LINPACK benchmark while staying within a 3 kW power consumption limit. The post China Team Sets New World Record appeared first on insideHPC.

|

|

by Rich Brueckner on (#1KT2M)

In this video from the Women in HPC Workshop at ISC 2016, Kim McMahon moderates a panel discussion on Diversity in the Workplace. The panel discusses methods to improve workplace diversity, along with the challenges, successes and pitfalls the panelists have experienced. The post WHPC Panel Looks at Diversity in the Workplace appeared first on insideHPC.

|

|

by staff on (#1KQG1)

DDN has opened a research and development center in Paris in response to strong demand in the French market and across Europe for high-performance big data storage solutions. DDN’s newly created Research and Development Centre, located in the Meudon area of Paris, will develop advanced end-to-end data lifecycle management technology and provide a local technical presence for its European customer base, increasing scope for innovation and collaboration. The post DDN Opens New Techology Center in Paris appeared first on insideHPC.

|

|

by Rich Brueckner on (#1KPZJ)

In this video from ISC 2016, Karl Schultz from the OpenHPC Community provides an update on software releases and other milestones. "OpenHPC is a Linux Foundation Collaborative Project whose mission is to provide an integrated collection of HPC-centric components that can be used to provide full-featured reference HPC software stacks. Provided components should range across the entire HPC software ecosystem including provisioning and system administration tools, resource management, I/O services, development tools, numerical libraries, and performance analysis tools." The post OpenHPC Moves Forward with New Software Release appeared first on insideHPC.

|

|

by staff on (#1KPVY)

Today Bright Computing announced that Linvision has become the latest company to join the Bright Computing partner network, to serve customers across Benelux, France and Germany. "At Linvision, we see a high demand from organizations for state of the art HPC systems that enable projects to be carried out efficiently and cost-effectively," said Karin Peeters, CEO at Linvision. "I’m excited that Bright and Linvision can enter this market together, to see how we can support our customers with their evolving business challenges." The post Linvision in the Netherlands Joins Bright Partner Community appeared first on insideHPC.

|

|

by staff on (#1KPVZ)

The Department of Energy’s Energy Sciences Network (ESnet) has published a 3D timeline celebrating thirty years of service. With the launch of an interactive timeline, viewers can explore ESnet’s history and contributions. The post ESnet Timeline Celebrates 30 Years of Servicing Science appeared first on insideHPC.

|