|

by MichaelS on (#1DGJA)

"The next step is to look at using OpenMP directives to create multiple threads and distribute the work over many cores. A key OpenMP directive, #pragma omp for collapse, will collapse the inner two loops into one. The developer can then set the number of threads and cores to use and rerun the application to determine the performance. In one test case, three threads per physical core shows the best performance, by a wide margin compared to using just one or two threads per core." The post Diffusion Optimization on Intel Xeon Phi appeared first on insideHPC.
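
The collapse pattern described above can be sketched in C as follows. This is a minimal illustration under stated assumptions, not the article's code: the grid layout (x varying fastest), the 7-point explicit stencil, the clamped (reflecting) boundary handling, and the names `diffusion_step`, `cc`, and `ce` are all hypothetical, and the collapsed pair is taken here to be the z and y loops of the spatial nest. `collapse(2)` merges those two loops into one iteration space, giving the runtime more chunks to spread over many hardware threads, while the unit-stride x loop stays innermost for the vectorizer.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical layout: nx * ny * nz doubles, x varies fastest. */
static size_t idx(int x, int y, int z, int nx, int ny)
{
    return ((size_t)z * ny + y) * nx + x;
}

/* One explicit diffusion step with a 7-point stencil.
   cc weights the center point, ce weights each of the six neighbors.
   Neighbors outside the grid are clamped to the center point (assumption). */
void diffusion_step(const double *restrict f1, double *restrict f2,
                    int nx, int ny, int nz, double cc, double ce)
{
    assert(nx > 0 && ny > 0 && nz > 0);
    const size_t plane = (size_t)nx * ny;

    /* Fuse the z and y loops into a single parallel iteration space. */
    #pragma omp parallel for collapse(2)
    for (int z = 0; z < nz; z++) {
        for (int y = 0; y < ny; y++) {
            /* Unit-stride inner loop, left for the compiler to vectorize. */
            for (int x = 0; x < nx; x++) {
                size_t c = idx(x, y, z, nx, ny);
                size_t w = (x == 0)      ? c : c - 1;
                size_t e = (x == nx - 1) ? c : c + 1;
                size_t n = (y == 0)      ? c : c - (size_t)nx;
                size_t s = (y == ny - 1) ? c : c + (size_t)nx;
                size_t b = (z == 0)      ? c : c - plane;
                size_t t = (z == nz - 1) ? c : c + plane;
                f2[c] = cc * f1[c]
                      + ce * (f1[w] + f1[e] + f1[n] + f1[s] + f1[b] + f1[t]);
            }
        }
    }
}
```

Thread count and placement are then chosen at run time rather than in the code, for example `OMP_NUM_THREADS=180 OMP_PLACES=cores OMP_PROC_BIND=close ./diffusion` for three threads on each of 60 cores (the core count and binary name are illustrative). Choosing weights with `cc + 6 * ce == 1` keeps a uniform field unchanged from step to step.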

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-04 14:00 |

|

by Rich Brueckner on (#1DD6P)

The OpenPOWER Foundation is pleased to announce the first OpenPOWER Europe Summit taking place on June 16-17 in Frankfurt. The post OpenPOWER Summit Coming to Frankfurt June 16-17 appeared first on insideHPC.

|

|

by staff on (#1DCV9)

A new paper outlining NERSC's Burst Buffer Early User Program and the center’s pioneering efforts in recent months to test-drive the technology using real science applications on Cori Phase 1 has won the Best Paper award at this year’s Cray User Group (CUG) meeting. The post NERSC Paper on Burst Buffers Recognized at Cray User Group appeared first on insideHPC.

|

|

by staff on (#1DCRM)

Today D-Wave Systems announced the launch of Quantum for Quants, an online community designed specifically for quantitative analysts and other experts focused on complex problems in finance. Launched at the Global Derivatives Trading & Risk Management conference in Budapest, the online community will allow quantitative finance and quantum computing professionals to share ideas and insights regarding quantum technology and to explore its application to the finance industry. Through this community, financial industry experts will also be granted access to quantum computing software tools, simulators, and other resources and expertise to explore the best ways to tackle the most difficult computational problems in finance using entirely new techniques. The post D-Wave Systems Launches Quantum for Quants Online Community appeared first on insideHPC.

|

|

by Rich Brueckner on (#1DCMR)

In this video, Oklahoma State Director of HPC Dana Brunson describes how the Cowboy supercomputer powers research. "High performance computing is often used for simulations that may be too big, too small, too fast, too slow, too dangerous or too costly. Another thing it's used for involves data. You may remember the Human Genome Project: it took nearly a decade and cost a billion dollars. These sorts of things can now be done over a weekend for under a thousand dollars. Our current supercomputer is named Cowboy; it was funded by a 2011 National Science Foundation grant, and it has been serving us very well." The post Cowboy Supercomputer Powers Research at Oklahoma State appeared first on insideHPC.

|

|

by staff on (#1DADB)

Today SGI announced the deployment of its largest SGI UV 300 supercomputer to date at The Genome Analysis Centre (TGAC) in the UK. As one of the largest Intel SSD for PCIe* deployments worldwide, TGAC’s new supercomputing platform gives the research institute access to the next generation of SGI UV technology for genomics. This will enable TGAC researchers to store, categorize and analyze more genomic data in less time for decoding living systems and answering crucial biological questions. "The combination of processor performance, memory capacity and one of the largest deployments of Intel SSD storage worldwide makes this a truly powerful computing platform for the life sciences." The post TGAC Installs World's Largest SGI UV 300 Supercomputer for Life Sciences appeared first on insideHPC.

|

|

by staff on (#1DACK)

Today Fujitsu announced an order for a 25 Petaflop supercomputer system from the University of Tokyo and the University of Tsukuba. Powered by Intel Knights Landing processors, the "T2K Open Supercomputer" will be deployed at the Joint Center for Advanced High-Performance Computing (JCAHPC), which the two universities jointly operate. "The new supercomputer will be an x86 cluster system consisting of 8,208 of the latest FUJITSU Server PRIMERGY x86 servers running next-generation Intel Xeon Phi processors. Due to be completely operational in December 2016, the system is expected to be Japan's highest-performance supercomputer." The post Fujitsu to Build 25 Petaflop Supercomputer at JCAHPC in Japan appeared first on insideHPC.

|

|

by Rich Brueckner on (#1D9HY)

In this video from the 2016 OpenPOWER Summit, Stephen Bates of Microsemi presents: Enabling high-performance storage on OpenPOWER Systems. "Non-Volatile Memory (NVM), and the low-latency access to storage it provides, is changing the compute stack. NVM Express is the de facto protocol for communicating with local NVM attached over the PCIe interface. In this talk we will demonstrate performance data for extremely low-latency NVMe devices operating inside OpenPOWER systems. We will discuss the implications of this for applications like in-memory databases, analytics and cognitive computing. In addition, we will present data on the emerging NVMe over Fabrics (NVMf) protocol running on OpenPOWER systems." The post Video: Enabling High-Performance Storage on OpenPOWER Systems appeared first on insideHPC.

|

|

by Rich Brueckner on (#1D9C8)

The TERATEC Forum has posted the agenda for its 11th annual meeting. The event takes place June 28-29 in Palaiseau, France. "TERATEC brings together top international experts in high performance numerical design, simulation and Big Data, making it the major event in France and in Europe in this domain." The post Teratec Forum to Put Spotlight on Big Data & HPC appeared first on insideHPC.

|

|

by staff on (#1D95H)

Today the ISC Group, promoter of the TOP500 list, is pleased to announce the appointment of high performance computing industry journalist Michael Feldman to the position of managing editor of TOP500 News. In his new role, Feldman will expand the TOP500 project’s information portal into a comprehensive news site reporting on the HPC industry. "We are thrilled to bring Michael on board as he is a respected voice in the industry. With the addition of a comprehensive news site managed by him, the TOP500 project will offer a valuable new resource for HPC vendors and users," said ISC Group managing director and TOP500 co-author Martin Meuer. The post Michael Feldman Joins TOP500.org as Managing Editor appeared first on insideHPC.

|

|

by Rich Brueckner on (#1D941)

David Bonnie from LANL presented this talk at the 2016 MSST Conference. "As we continue to scale system memory footprint, it becomes more and more challenging to scale the long-term storage systems with it. Scaling tape access for bandwidth becomes increasingly challenging and expensive when single files are in the many terabytes to petabyte range. Object-based scale-out systems can handle the bandwidth requirements we have, but are not ideal for storing very large files as objects. MarFS sidesteps this while still leveraging the large pool of object storage systems already in existence by striping large files across many objects." The post MarFS – A Near-POSIX Namespace Leveraging Scalable Object Storage appeared first on insideHPC.
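
Striping a large file across many objects comes down to mapping each logical byte offset to an object and an offset inside it. The C sketch below assumes the simplest possible scheme, fixed-size chunks laid out in order; the chunk size, the `stripe_loc` struct, and the function names are hypothetical and are not meant to describe MarFS's actual layout.

```c
#include <assert.h>
#include <stdint.h>

/* Where one logical file byte lives under fixed-size chunking:
   object k holds bytes [k * chunk_size, (k + 1) * chunk_size). */
typedef struct {
    uint64_t object_index;   /* which object in the stripe */
    uint64_t object_offset;  /* byte offset within that object */
} stripe_loc;

stripe_loc locate(uint64_t file_offset, uint64_t chunk_size)
{
    assert(chunk_size > 0);
    stripe_loc loc = { file_offset / chunk_size, file_offset % chunk_size };
    return loc;
}

/* Number of objects needed to hold file_size bytes (ceiling division,
   written to avoid overflow near UINT64_MAX). */
uint64_t objects_needed(uint64_t file_size, uint64_t chunk_size)
{
    assert(chunk_size > 0);
    return file_size / chunk_size + (file_size % chunk_size != 0);
}
```

With 1 GiB chunks, a 1 PiB file maps to 2^20 = 1,048,576 objects, and byte offset 2^30 + 5 lands at offset 5 of object 1. A real system would also need metadata recording the chunk size and object names so that reads can be routed without scanning.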

|

|

by Douglas Eadline on (#1A3H4)

Today's High Performance Computing (HPC) systems offer the ability to model everything from proteins to galaxies. The insights and discoveries offered by these systems are nothing short of astounding. Indeed, the ability to process, move, and store data at unprecedented levels, often reducing jobs from weeks to hours, continues to move science and technology forward at an accelerating pace. This article series offers guidance to those considering HPC, both users and managers, on the best way to deploy an HPC solution. The post InsideHPC Guide to Technical Computing appeared first on insideHPC.

|

|

by staff on (#1D8S1)

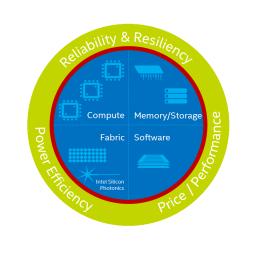

Intel has been working on a new design philosophy for HPC systems called Intel® Scalable System Framework (Intel® SSF), an approach designed to enable sustained, balanced performance in HPC as the community pushes towards the Exascale computing era. Central to Intel SSF performance is the Lustre* scalable, parallel file system (PFS). Intel® Enterprise Edition for Lustre software (Intel® EE for Lustre software) is the Intel distribution of the well-known PFS, which is used by the majority of the fastest supercomputers around the world. The post Intel® Enterprise Edition for Lustre* Software – High-performance File System appeared first on insideHPC.

|

|

by Rich Brueckner on (#1D6YG)

Today the Association for Computing Machinery’s Special Interest Group on Algorithms and Computation Theory (SIGACT) and the European Association for Theoretical Computer Science (EATCS) announced that Stephen Brookes and Peter W. O’Hearn are the recipients of the 2016 Gödel Prize for their invention of Concurrent Separation Logic. The post ACM Awards 2016 Gödel Prize to Inventors of Concurrent Separation Logic appeared first on insideHPC.

|

|

by staff on (#1D6DT)

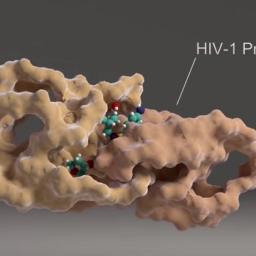

In what is being cited as a step towards personalized medicine, the Barcelona Supercomputing Center (BSC) and IrsiCaixa have developed a bioinformatics method to predict how mutations affect the virus's resistance to HIV drugs. An article published in the Journal of Chemical Information and Modeling explains how the method effectively predicted whether viruses carrying mutations in the HIV-1 protease, a protein essential for viral replication, are resistant to the drugs amprenavir and darunavir. The method could easily be applied to other drugs and proteins. The post Personalized Medicine steps forward with Bioinformatics Method from BSC appeared first on insideHPC.

|

|

by Rich Brueckner on (#1D6C4)

In this video from the 2016 MSST Conference, Yoonho Park from IBM presents: Storage Performance Modeling for Future Systems. "The burst buffer is an intermediate, high-speed layer of storage that is positioned between the application and the parallel file system (PFS), absorbing the bulk data produced by the application at a rate a hundred times higher than the PFS, while seamlessly draining the data to the PFS in the background." The post Storage Performance Modeling for Future Systems appeared first on insideHPC.
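
The benefit described in the quote can be estimated with back-of-the-envelope arithmetic. The C sketch below is a deliberately simplified model under stated assumptions: the burst buffer absorbs a checkpoint `speedup` times faster than the PFS (100 in the quote), draining runs at full PFS bandwidth while the application computes, and contention between the drain and the next write is ignored. All names and example numbers are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

typedef struct {
    double stall_without_bb_s;  /* app blocked writing straight to the PFS */
    double stall_with_bb_s;     /* app blocked only while the BB absorbs data */
    double drain_s;             /* background time to move data BB -> PFS */
    bool   drain_hidden;        /* drain finishes before the next checkpoint */
} bb_estimate;

/* ckpt_gb: checkpoint size in GB; pfs_gbps: PFS bandwidth in GB/s;
   speedup: BB absorb rate relative to the PFS; compute_s: compute time
   between checkpoints. Simplified model, see assumptions above. */
bb_estimate estimate(double ckpt_gb, double pfs_gbps, double speedup,
                     double compute_s)
{
    assert(pfs_gbps > 0.0 && speedup > 0.0);
    bb_estimate e;
    e.stall_without_bb_s = ckpt_gb / pfs_gbps;
    e.stall_with_bb_s    = ckpt_gb / (pfs_gbps * speedup);
    e.drain_s            = ckpt_gb / pfs_gbps;
    e.drain_hidden       = e.drain_s <= compute_s;
    return e;
}
```

For a 1,000 GB checkpoint and a 10 GB/s PFS, writing directly stalls the application for 100 s, whereas a 100× burst buffer absorbs it in 1 s; the 100 s drain stays hidden only if at least 100 s of computation separate checkpoints.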

|

|

by Rich Brueckner on (#1D660)

In this podcast, the Radio Free HPC team recaps the ASC16 Student Cluster Competition in China and the 2016 MSST Conference in Santa Clara. Dan spent a week in Wuxi interviewing ASC16 student teams and came back impressed with the Linpack benchmark tricks from the team at Zhejiang University, who set a new student LINPACK record with 12.03 TFlop/s. Meanwhile, Rich was in Santa Clara for the MSST conference, where he captured two days of talks on Mass Storage Technologies. The post Radio Free HPC Trip Reports from ASC16 & MSST appeared first on insideHPC.
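
For context on the 12.03 TFlop/s figure: HPL, the standard LINPACK implementation, derives its reported rate from the nominal operation count of solving a dense system of order N, 2/3·N³ + 3/2·N², divided by wall-clock time. A small C helper for that arithmetic follows; the function names are illustrative, and the matrix order used by the Zhejiang team is not given in the post.

```c
#include <assert.h>

/* Nominal HPL operation count for a dense N x N solve: 2/3 N^3 + 3/2 N^2. */
double hpl_flops(double n)
{
    return (2.0 / 3.0) * n * n * n + 1.5 * n * n;
}

/* Reported rate in GFlop/s for a run of order n taking `seconds`. */
double hpl_gflops(double n, double seconds)
{
    assert(seconds > 0.0);
    return hpl_flops(n) / seconds / 1.0e9;
}

/* Wall-clock time a run of order n needs at a sustained rate in GFlop/s. */
double hpl_seconds(double n, double gflops)
{
    assert(gflops > 0.0);
    return hpl_flops(n) / (gflops * 1.0e9);
}
```

At 12.03 TFlop/s (12,030 GFlop/s), a hypothetical N = 200,000 run would take roughly 443 s, which shows why teams balance N against available memory and time.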

|

|

by Douglas Eadline on (#1D5V1)

Successful HPC depends on choosing the architecture that addresses both application and institutional needs. In particular, finding a simple path to leading-edge HPC and data analytics is not difficult if you consider the capabilities and limitations of various approaches to HPC performance, scaling, ease of use, and time to solution. Careful analysis and consideration of the following questions will help lead to a successful and cost-effective HPC solution. Here are three questions to ask to ensure HPC success. The post Three Questions to Ensure Your HPC Success appeared first on insideHPC.

|

|

by Rich Brueckner on (#1D3VP)

In this video from the 2016 MSST Conference, Harriet Coverston from Versity presents: Versity - Archiving to Objects. "Introducing Versity Storage Manager - an enterprise-class storage virtualization and archiving system that runs on Linux. It offers comprehensive data management for tiered storage environments and the ability to preserve and protect your data forever. Maximum protection at a minimum cost. Versity supports nearly unlimited volumes of storage and offers the most robust archive policy engine on the market." The post Video: Versity HSM – Archiving to Objects appeared first on insideHPC.

|

|

by Rich Brueckner on (#1D3TB)

Today Flow Science announced the speakers for its annual FLOW-3D European Users Conference that will take place on June 15-16, 2016 in Kraków, Poland. The post Agenda Posted for FLOW-3D European User Conference appeared first on insideHPC.

|

|

by Rich Brueckner on (#1D1X4)

In this video from the GPU Hackathon at the University of Delaware, attendees tune their code to accelerate their application performance. The 5-day intensive GPU programming Hackathon was held in collaboration with Oak Ridge National Lab (ORNL). "Thanks to a partnership with NASA Langley Research Center, Oak Ridge National Laboratory, National Cancer Institute, National Institutes of Health (NIH), Brookhaven National Laboratory and the UD College of Engineering, UD students had access to the world's second-largest supercomputer, Titan, to help solve real-world problems." The post Video: Accelerating Code at the GPU Hackathon in Delaware appeared first on insideHPC.

|

|

by Rich Brueckner on (#1D1TZ)

SGI is seeking an HPC Pre-Sales Engineer in our Job of the Week. "The HPC Pre-Sales Engineer role provides in-depth technical and architectural expertise in Federal Sales opportunities in the DC area, primarily working with DOD and Civilian Agencies. As the primary technical interface with the customer, you must be able to recognize customer needs, interpret them and produce comprehensive solutions." The post Job of the Week: HPC Pre-Sales Engineer at SGI appeared first on insideHPC.

|

|

by Rich Brueckner on (#1D071)

There is still time to take advantage of Early Bird registration rates for ISC 2016. You can save more than 45 percent compared with on-site registration rates if you sign up by May 11. "ISC 2016 takes place June 19-23 in Frankfurt, Germany. With an expected attendance of 3,000 participants from around the world, ISC will also host 146 exhibitors from industry and academia." The post Register for ISC 2016 by May 11 for Early Bird Discounts appeared first on insideHPC.

|

|

by Rich Brueckner on (#1CZT0)

In this video from the 2016 Percona Data Performance Conference, Brendan Gregg, Senior Performance Architect from Netflix, presents: Linux Systems Performance. “Systems performance provides a different perspective for analysis and tuning, and can help you find performance wins for your databases, applications, and the kernel. However, most of us are not performance or kernel engineers, […] The post Video: Brendan Gregg Looks at Tools & Methodologies for Linux System Performance appeared first on insideHPC.

|

|

by staff on (#1CZRW)

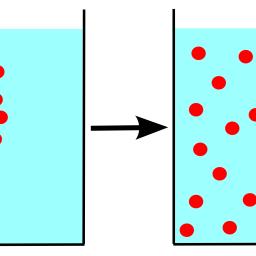

In this TACC Podcast, Jorge Salazar looks at how researchers are using the Stampede supercomputer to shed light on the microscale world of colloidal gels: liquids dispersed in a solid medium as a gel. "Colloidal gels are actually soft solids, but we can manipulate their structure to produce 'on-demand' transitions from liquid-like to solid-like behavior that can be reversed many times," said Zia, an Assistant Professor of Chemical and Biomolecular Engineering at Cornell University. The post Podcast: Supercomputing Gels with Stampede appeared first on insideHPC.

|

|

by Rich Brueckner on (#1CZJY)

Kirill Malkin from SGI presented this talk at the 2016 MSST conference. "Malkin will talk about the impact of power management on the reliability of hard disk drives, based on field experience with SGI’s MAID product (formerly COPAN). The presentation will explore statistical analysis of support data covering a decade of MAID deployment (2005-2015), briefly cover the history and basic principles of the technology, and provide an update on the future direction of the product line." The post A Perspective on Power Management for Hard Disks appeared first on insideHPC.

|

|

by Rich Brueckner on (#1CZHC)

Today the ISC Group announced that the Green500 will be integrated with the TOP500 project with a single submission process. "This merger is of great significance to the high performance computing community," said Erich Strohmaier, co-founder of TOP500. "Both projects will now be maintained under a common set of rules for data submission, which will simplify the process for submitters and provide a consistent set of data for the historical record." The post Submissions Open for Newly Merged TOP500 and Green500 appeared first on insideHPC.

|

|

by staff on (#1CXDZ)

IBM has introduced a new way for organizations of all sizes to acquire a full HPC management suite, including a community edition for those just starting out. The new IBM Platform LSF Suites are packages that include more than IBM Platform LSF; they provide additional functionality designed to simplify HPC for users, administrators and the IT organization. The post IBM Makes HPC Easier appeared first on insideHPC.

|

|

by Rich Brueckner on (#1CWW4)

Katie Antypas from NERSC presented this talk at the 2016 MSST conference. Katie is the Project Lead for the NERSC-8 system procurement, a project to deploy NERSC's next-generation supercomputer in mid-2016. The system, named Cori (after Nobel Laureate Gerty Cori), will be a Cray XC system featuring 9,300 Intel Knights Landing processors. The Knights Landing processors will have over 60 cores with 4 hardware threads each and a 512-bit vector unit width. It will be crucial that users can exploit both threading and SIMD vectorization to achieve high performance on Cori. The post Superfacility – How New Workflows in the DOE Office of Science are Changing Storage Requirements appeared first on insideHPC.
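
Exploiting both levels of parallelism mentioned above typically means splitting a loop across threads and vectorizing each thread's share. A minimal C sketch using the OpenMP 4.0 `parallel for simd` construct follows; the `daxpy` kernel and the constant name are generic illustrations, not code from the talk. A 512-bit vector unit holds 512 / 64 = 8 double-precision values per instruction.

```c
#include <assert.h>
#include <stddef.h>

/* 512-bit vector register / 64-bit double = 8 lanes. */
enum { DOUBLES_PER_512BIT_VECTOR = 512 / 64 };

/* y = a*x + y. `parallel for` splits the index range across threads
   (cores and hardware threads); `simd` asks the compiler to vectorize
   each thread's chunk. */
void daxpy(size_t n, double a, const double *restrict x, double *restrict y)
{
    assert(n == 0 || (x != NULL && y != NULL));
    #pragma omp parallel for simd
    for (size_t i = 0; i < n; i++)
        y[i] = a * x[i] + y[i];
}
```

With over 60 cores and 4 hardware threads each, a run might use around 240 threads (for example `OMP_NUM_THREADS=240`), each issuing 8-wide double-precision operations; whether all four threads per core pay off is application-dependent and worth measuring.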

|

|

by staff on (#1CWN8)

While much noise is being made about the race to exascale, building productive supercomputers really comes down to people and ingenuity. In this special guest feature, Donna Loveland profiles supercomputer architect Robert Wisniewski from Intel. "In combining the threading and memory challenges, there’s an increased need for the hardware to perform synchronization operations, especially intranode ones, efficiently. With more threads utilizing less memory with wider parallelism, it becomes important that they synchronize among themselves efficiently and have access to efficient atomic memory operations. Applications also need to be vectorized to take advantage of the wider FPUs on the chip. While much of the vectorization can be done by compilers, application developers can follow design patterns that aid the compiler’s task." The post Peta-Exa-Zetta: Robert Wisniewski and the Growth of Compute Power appeared first on insideHPC.
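
The need for efficient atomic memory operations shows up whenever many threads update shared data. A minimal C sketch using OpenMP's `atomic` construct on a shared histogram follows; the function and its binning over [0, 1) are hypothetical illustrations of the pattern, not code from the feature.

```c
#include <assert.h>
#include <stddef.h>

/* Count values into nbins equal buckets over [0, 1); out-of-range values
   are clamped to the first or last bin. The caller zeroes bins beforehand.
   Many threads may hit the same bin, so each increment is an atomic update
   instead of a lock around the whole array. */
void histogram(const double *restrict v, size_t n, long *restrict bins, int nbins)
{
    assert(nbins > 0);
    #pragma omp parallel for
    for (size_t i = 0; i < n; i++) {
        int b = (int)(v[i] * nbins);
        if (b < 0) b = 0;
        if (b >= nbins) b = nbins - 1;
        #pragma omp atomic
        bins[b]++;
    }
}
```

When bins are few and threads are many, contention on the same counters can dominate; a common alternative is per-thread private histograms merged at the end, for example with an OpenMP 4.5 array-section reduction such as `reduction(+: bins[0:nbins])`.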

|

|

by MichaelS on (#1CWS7)

"The Intel Xeon Phi coprocessor is an example of a many-core system that can greatly increase the performance of an application when used correctly. Simply taking a serial application and expecting tremendous performance gains will not work. Rewriting parts of the application will be necessary to take advantage of the architecture of the Intel Xeon Phi coprocessor." The post Basics For Coprocessors appeared first on insideHPC.

|

|

by Douglas Eadline on (#1CWG2)

Cloud computing has become another tool for the HPC practitioner. For some organizations, the ability of cloud computing to shift costs from capital to operating expenses is very attractive. Because all cloud solutions require use of the Internet, a basic analysis of data origins and destinations is needed. Here's an overview of when local or cloud HPC makes the most sense. The post Local or Cloud HPC? appeared first on insideHPC.

|

|

by staff on (#1CSM5)

A newly released report commissioned by the National Science Foundation (NSF) and conducted by the National Academies of Sciences, Engineering, and Medicine examines priorities and associated trade-offs for advanced computing investments and strategy. "We are very pleased with the National Academy's report and are enthusiastic about its helpful observations and recommendations," said Irene Qualters, NSF Advanced Cyberinfrastructure Division Director. "The report has had a wide range of thoughtful community input and review from leaders in our field. Its timing and content give substance and urgency to NSF's role and plans in the National Strategic Computing Initiative." The post New Report Charts Future Directions for NSF Advanced Computing Infrastructure appeared first on insideHPC.

|

|

by staff on (#1CSBS)

Today ACM announced that Ron Perrott, an international leader in the development and promotion of parallel computing, will receive ACM’s prestigious Distinguished Service Award. The goal of ACM’s Awards and Recognition Program is to highlight outstanding technical and professional achievements and contributions in computer science and IT. Perrott will be formally honored at the ACM Awards Banquet on June 11 in San Francisco. The post Ron Perrott to Receive ACM Distinguished Service Award appeared first on insideHPC.

|

|

by staff on (#1CS7T)

The Gauss Centre for Supercomputing (GCS) in Germany has allocated a record 1,648 million core hours of computing time to 21 scientifically outstanding national research projects as part of its Call for Large-Scale Projects. "GCS is excited to support simulation projects of these excelling scopes as they clearly underline our claim of Germany being a world leader in High Performance Computing. Beyond dispute, they produce proof of us being at eye level with the largest international research projects such as the INCITE Program supported by the Office of Science of the U.S. Department of Energy," states Prof. Thomas Lippert of JSC, GCS Chairman of the Board. The post GCS in Germany Allocates Record Number of Core Hours for Research appeared first on insideHPC.

|

|

by Rich Brueckner on (#1CS5V)

In its latest move to build a practical quantum computer, IBM Research, for the first time ever, is making quantum computing available in the cloud to anyone interested in hands-on access to the company’s advanced experimental quantum system. "The cloud-enabled quantum computing platform, called IBM Quantum Experience, will allow users to run algorithms and experiments on IBM’s quantum processor, work with the individual quantum bits (qubits), and explore tutorials and simulations around what might be possible with quantum computing." The post Kick the Tires on Quantum Computing with the IBM Cloud appeared first on insideHPC.

|

|

by Rich Brueckner on (#1CRYR)

In this video from the 2016 MSST Conference, Ian Corner from CSIRO in Australia presents: A Journey to a Holistic Framework for Data-intensive Workflows. "At CSIRO, we are Australia's national science organization and one of the largest and most diverse scientific research organizations in the world. Our research focuses on the biggest challenges facing the nation. We also manage national research infrastructure and collections." The post Video: A Journey to a Holistic Framework for Data-intensive Workflows at CSIRO appeared first on insideHPC.

|

|

by staff on (#1CR7G)

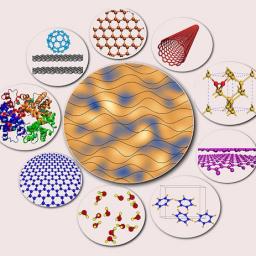

Over at ALCF, Katie Jones writes that researchers are using the Mira supercomputer to validate a new "wave-like" model of the van der Waals force, a weak attraction that has strong ties to function and stability in materials and biological systems. The post Supercomputing van der Waals Forces on Mira appeared first on insideHPC.

|

|

by Rich Brueckner on (#1CPMG)

Jeff Bonwick from EMC DSSD presented this talk at the MSST conference. "Jeff Bonwick is co-founder and CTO of DSSD, where he co-invented both the system hardware architecture and the Flood software stack. His talk will focus on extracting maximum performance from flash at scale. Jeff has a long history of developing at-scale storage, starting with leading the team that developed the ZFS filesystem, which powers Oracle’s ZFS storage line as well as numerous startups including Nexenta, Delphix, Joyent, and Datto." The post Video: DSSD Scalable High Performance FLASH Systems appeared first on insideHPC.

|

|

by staff on (#1CMZE)

Altair is making a big investment toward uniting the whole HPC community to accelerate the state of the art (and the state of actual production operations) for HPC scheduling. Altair is joining the OpenHPC project with PBS Pro. They are focused on longevity – creating a viable, sustainable community to focus on job scheduling software that can truly bridge the gap in the HPC world. The post An Open Letter to the HPC Community appeared first on insideHPC.

|

|

by staff on (#1CNN6)

The MJO (Madden-Julian Oscillation) occurs on its own timetable, every 30 to 60 days, but its worldwide impact spurs scientists to unlock its secrets. The ultimate goal? Timely preparation for the precipitation havoc it brings, and insight into how it will behave when pressured by a warming climate. The post Edison Supercomputer Helps Find Roots of MJO Modeling Mismatches appeared first on insideHPC.

|

|

by Rich Brueckner on (#1CNHD)

In this video, technicians install a new supercomputer at the UK Met Office. The Met Office is the National Weather Service for the UK, providing internationally renowned weather and climate science and services to support the public, government and businesses. The post Time-lapse Video: Cray Install at UK Met Office appeared first on insideHPC.

|

|

by Rich Brueckner on (#1CN76)

In this special guest feature from Scientific Computing World, Darren Watkins from Virtus Data Centres explains the importance of building a data centre from the ground up to support the requirements of HPC users while maximizing productivity and efficiency and optimizing energy usage. "The reality for many IT users is they want to run analytics that, with the growth of data, have become too complex and time-critical for normal enterprise servers to handle efficiently." The post HPC and the HDC Datacenter appeared first on insideHPC.

|

|

by Rich Brueckner on (#1CHT5)

The Women in HPC organization will host a Workshop and a BoF at ISC 2016 in Frankfurt. "Once again we will bring together women from across the international HPC community, providing opportunities to network, showcasing the work of inspiring women and discussing how we can all work towards improving the under-representation of women in supercomputing." The post Women in HPC to Host Events at ISC 2016 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1CHJ3)

In this video from LUG 2016 in Portland, Steve Simms from Indiana University presents: Lustre 101 - A Quick Overview. Now in its 14th year, the Lustre User Group is the industry’s primary venue for discussion and seminars on the Lustre parallel file system and other open source file system technologies. The post Lustre 101: A Quick Overview appeared first on insideHPC.

|

|

by Rich Brueckner on (#1CHDX)

Today the Barcelona Supercomputing Center released version 1.4 of the COMPSs programming environment. COMPSs 1.4 includes new features that improve runtime performance, a new tracing infrastructure, and support for Docker and the Chameleon infrastructure. The post Announcing the COMPSs 1.4 Programming Environment from BSC appeared first on insideHPC.

|

|

by Rich Brueckner on (#1CH90)

Mark Seamans from SGI presented this talk at the HPC User Forum in Tucson. "As the trusted leader in high performance computing, SGI helps companies find answers to the world’s biggest challenges. Our commitment to innovation is unwavering and focused on delivering market-leading solutions in Technical Computing, Big Data Analytics, and Petascale Storage. Our solutions provide unmatched performance, scalability and efficiency for a broad range of customers." The post Video: SGI Production Supercomputing appeared first on insideHPC.

|

|

by staff on (#1CH5C)

Today PRACE announced that Dr Zoe Cournia is the recipient of the 1st PRACE Ada Lovelace Award for HPC. As a Computational Chemist, Investigator – Assistant Professor level at the Biomedical Research Foundation, Academy of Athens in Greece, Dr Cournia was selected for her outstanding contributions and impact on HPC in Europe on a global level. "Using the PRACE HPC resources and recent advances in computer-aided drug design allow us to develop drugs specifically designed for a given protein, shortening the time for development of new drugs," says Dr Cournia. "I believe that our work is a good example of how computers help develop candidate drugs that have the potential to save millions of lives worldwide. I am honored to receive this prestigious award and hope that this serves as inspiration to other female researchers in the field." The post Dr Zoe Cournia to Receive 1st PRACE Ada Lovelace Award for HPC appeared first on insideHPC.

|

|

by staff on (#1CE66)

Over at the Nvidia Blog, George Millington writes that, for the fourth consecutive year, the Nvidia Tesla Accelerated Computing Platform helped set new milestones in the Asia Student Supercomputer Challenge, the world’s largest supercomputer competition. The post GPU-Powered Systems Take Top Spot & Set Performance Records at ASC16 appeared first on insideHPC.

|

|

by Rich Brueckner on (#1CE52)

"Single Root I/O Virtualization (SR-IOV) technology has been steadily gaining momentum for high-performance interconnects such as InfiniBand. SR-IOV can deliver near-native performance but lacks locality-aware communication support. This talk presents an efficient approach to building HPC clouds based on MVAPICH2 over OpenStack with SR-IOV. We discuss the high-performance design of a virtual-machine-aware MVAPICH2 library over OpenStack-based HPC Clouds with SR-IOV. A comprehensive performance evaluation with micro-benchmarks and HPC applications has been conducted on an experimental OpenStack-based HPC cloud and Amazon EC2. The evaluation results show that our design can deliver near bare-metal performance." The post Building Efficient HPC Clouds with MVAPICH2 and OpenStack over SR-IOV Enabled InfiniBand Clusters appeared first on insideHPC.

|