|

by Rich Brueckner on (#4A82D)

Liquid cooling has long been an enabling technology for high performance computing, but cost, complexity, and facility requirements continue to be concerns. Enter the Aquila Group, whose cold-plate technology looks to offer the advantages of liquid cooling with less risk. Based on a 10-month trial, a new paper gives an overview of the Aquarius fixed cold plate cooling technology and provides results from early energy performance evaluation testing. The post NREL Report Looks at Aquila’s Cold Plate Cooling System for HPC appeared first on insideHPC.

|

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Link | https://insidehpc.com/ |

| Feed | http://insidehpc.com/feed/ |

| Updated | 2026-03-03 03:30 |

|

by Rich Brueckner on (#4A7ZY)

Argonne National Laboratory is seeking an Assistant Computational Scientist in our Job of the Week. "The Computational Scientist assists in the development and implementation of machine learning strategies for the development of new materials. Principal effort includes the identification of appropriate data science techniques for accelerating the development of materials, developing and validating software to perform these methods, and working with materials engineers to put the tools into practice." The post Job of the Week: Assistant Computational Scientist at Argonne appeared first on insideHPC.

|

|

by staff on (#4A5T2)

The OpenMP community has issued its Call for Submissions for OpenMPCon 2019 and IWOMP 2019. The events take place September 9-13 in Auckland, New Zealand. "OpenMPCon is the annual conference for OpenMP developers to discuss all aspects of parallel programming with OpenMP. The International Workshop on OpenMP (IWOMP) is an annual workshop dedicated to the promotion and advancement of all aspects of parallel programming with OpenMP." The post Call for Submissions: OpenMPCon and IWOMP 2019 in New Zealand appeared first on insideHPC.

|

|

by staff on (#4A5N7)

In this special guest feature from Scientific Computing World, Robert Roe reports on developments in multiphysics simulation at the Global Altair Technology Conference. "This year’s event focused on sharing applications of simulation-driven innovation from technology leaders and industry executives from all over the world, including keynotes from Ferrari, Jaguar Land Rover and Zaha Hadid Architects." The post Simulation Driven Design at the Altair Technology Conference appeared first on insideHPC.

|

|

by Rich Brueckner on (#4A5GQ)

Nick Nystrom from the Pittsburgh Supercomputing Center gave this talk at the Stanford HPC Conference. "To address the demand for scalable AI, PSC recently introduced Bridges-AI, which adds transformative new AI capability. In this presentation, we share our vision in designing HPC+AI systems at PSC and highlight some of the exciting research breakthroughs they are enabling." The post Pioneering and Democratizing Scalable HPC+AI at the Pittsburgh Supercomputing Center appeared first on insideHPC.

|

|

by staff on (#4A35M)

Today Univa announced that it will now provide support services to users in their open source community to assist them with installation, configuration and troubleshooting. Supported products include Open Source Grid Engine 6.2U5 and variants of Open Grid Scheduler and Son of Grid Engine (SGE) 8.1.9. "The Open Source Grid Engine community is comprised of an active and diverse group of people committed to helping each other succeed and is integral to Univa’s success as a company," said Gary Tyreman, president and CEO of Univa. "Grid Engine has been deployed thousands of times in business critical clusters. We want to ensure this community of active users has access to the support services they need to give them the peace-of-mind to continue using open source software without the need to upgrade critical systems." The post Univa steps up with Support Services for Open Source Grid Engine Users appeared first on insideHPC.

|

|

by staff on (#4A35N)

Today Rescale announced a strategic collaboration with Siemens PLM Software. Siemens PLM Software has partnered with Rescale to enable SaaS delivery of Siemens’ Simcenter portfolio on Rescale’s ScaleX platform. "Leveraging cloud infrastructure and flexible licensing models allows customers to explore their digital twin like never before, and Siemens’ cloud strategy is a key enabler for their customers to get faster time to results and iterate more quickly on designs," says Joris Poort, Founder and CEO of Rescale. The post Siemens Simcenter comes to Rescale ScaleX Platform for HPC in the Cloud appeared first on insideHPC.

|

|

by staff on (#4A2ZZ)

Pattern recognition tasks such as classification, localization, object detection and segmentation have remained challenging problems in the weather and climate sciences. Now, a team at the Lawrence Berkeley National Laboratory is developing ClimateNet, a project that will bring the power of deep learning methods to identify important weather and climate patterns via expert-labeled, community-sourced open datasets and architectures. The post ClimateNet Looks to Machine Learning for Global Climate Science appeared first on insideHPC.

|

|

by staff on (#4A300)

With the demand for intelligent vision solutions increasing everywhere from edge to cloud, enterprises of every type are demanding visually-enabled – and intelligent – applications. Up till now, most intelligent computer vision applications have required a wealth of machine learning, deep learning, and data science knowledge to enable simple object recognition, much less facial recognition or collision avoidance. That’s changed with the introduction of Intel’s Distribution of OpenVINO toolkit. The post Making Computer Vision Real Today – For Any Application appeared first on insideHPC.

|

|

by staff on (#4A35P)

Today ISC 2019 announced that its lineup of keynote speakers will include John Shalf from LBNL and Thomas Sterling from Indiana University. The event takes place June 16-20 in Frankfurt, Germany. "On June 18, John Shalf, from Lawrence Berkeley National Laboratory will offer his thoughts on how the slowdown and eventual demise of Moore’s Law will affect the prospects for high performance computing in the next decade. On June 19, Thomas Sterling will present his annual retrospective of the most important developments in HPC over the last 12 months." The post John Shalf and Thomas Sterling to Keynote ISC 2019 in Frankfurt appeared first on insideHPC.

|

|

by Rich Brueckner on (#4A180)

Today Sylabs announced that Singularity 3.1.0 is now generally available. With this release, Singularity is fully compliant with standards established by the Open Containers Initiative (OCI), and benefits from enhanced management of cached images. Open source based Singularity continues to systematically incorporate code-level changes specific to the Darwin operating environment, as it progresses towards support for macOS platforms. These, and […] The post Singularity 3.1.0 brings in Full OCI Compliance appeared first on insideHPC.

|

|

by staff on (#4A181)

The National Science Foundation (NSF) recently awarded funding to a team led by the Ohio Supercomputer Center (OSC) for further development of Open OnDemand, an open-source software platform supporting web-based access to high performance computing services. "The Open OnDemand 2.0 project will deliver an improved open-source platform for HPC, cloud and remote computing access," said David Hudak, Ph.D., executive director of OSC. "Additionally, interaction with a growing user base has generated requests for new technical capabilities and more engagements with the science community to extend this platform and deepen its science impact." The post NSF funds second round for OSC’s Open OnDemand appeared first on insideHPC.

|

|

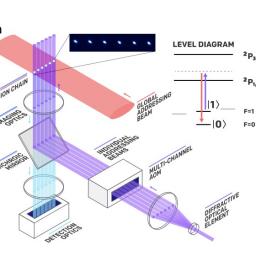

by staff on (#4A0XT)

Today IonQ announced that its quantum computer has achieved a critical milestone: simulating the water molecule with accuracy approaching what is needed for practical applications in the promising field of computational chemistry. "A manuscript released today describes how IonQ scientists calculated the energy of the water molecule in its lowest or natural state. The results from IonQ’s machine approached the precision a computer model would need to make an accurate chemical prediction, known as chemical accuracy." The post IonQ Performs First Quantum Simulation of a Water Molecule appeared first on insideHPC.

|

|

by Rich Brueckner on (#4A0SE)

Rob Neely from LLNL gave this talk at the Stanford HPC Conference. "This talk will give an overview of the Sierra supercomputer and some of the early science results it has enabled. Sierra is an IBM system harnessing the power of over 17,000 NVIDIA Volta GPUs recently deployed at Lawrence Livermore National Laboratory and is currently ranked as the #2 system on the Top500. Before being turned over for use in the classified mission, Sierra spent months in an “open science campaign” where we got an early glimpse at some of the truly game-changing science this system will unleash – selected results of which will be presented." The post Video: Sierra – Science Unleashed appeared first on insideHPC.

|

|

by staff on (#4A0C4)

Today D-Wave Systems released a preview of its next-generation quantum computing platform incorporating hardware, software and tools to accelerate and ease the delivery of quantum computing applications. "Quantum computing is only as valuable as the applications customers can run," said Alan Baratz, chief product officer, D-Wave. "With the next-generation platform, we are making investments in things like connectivity and hybrid software and tools to allow customers to solve even more complex problems at greater scale, bringing new emerging quantum applications to life." The post D-Wave previews Next-Gen Quantum Computing Platform appeared first on insideHPC.

|

|

by Richard Friedman on (#49Y28)

One of the big surprises of the past few years has been the spectacular rise in the use of Python in high-performance computing applications. With the latest releases of Intel® Distribution for Python, included in Intel® Parallel Studio XE 2019, the numerical and scientific computing capabilities of high-performance Python now extend to machine learning and data analytics. The post Intel High-Performance Python Extends to Machine Learning and Data Analytics appeared first on insideHPC.

|

|

by staff on (#49YBS)

Today Tachyum announced its participation and support for the Open Euro High Performance Computing Project (OEUHPC). "Tachyum is supporting the OEUHPC Project due to its ability to satisfy the need for both scalable and converged computing, which, in part, will ultimately enable users to cost effectively simulate, in real-time, human brain-sized Neural Networks. Tachyum has been working to develop its ultra-low power Prodigy Universal Processor Chip to allow system integrators to build a 32 Tensor ExaFLOPS AI supercomputer in 2020, well ahead of the scheduled EU goal to achieve 1 ExaFLOPS in 2028." The post Tachyum Joins Open Euro HPC Project appeared first on insideHPC.

|

|

by Rich Brueckner on (#49YBV)

Nominations are now open for the PRACE Ada Lovelace Award for HPC. The award honors excellence in computational science by early-career women in Europe. "The winner of the Award will be invited to speak at PRACEdays19 and will receive a cash prize of €1,000 as well as a certificate and an engraved crystal trophy." The post Nominations Open for 2019 PRACE Ada Lovelace Award for HPC appeared first on insideHPC.

|

|

by staff on (#49Y70)

In this Big Compute podcast, host Gabriel Broner from Rescale interviews Mike Woodacre, HPE Fellow, to discuss the shift from CPUs to an emerging diversity of architectures. They discuss the evolution of CPUs, the advent of GPUs with increasing data parallelism, memory driven computing, and the potential benefits of a cloud environment with access to multiple architectures. The post Big Compute Podcast Looks at New Architectures for HPC appeared first on insideHPC.

|

|

by staff on (#49Y26)

The Air Force’s top training official said it is moving toward a new “paradigm” for how it teaches airmen to fly airplanes. A new special report from insideHPC, courtesy of Dell EMC and NVIDIA, explores current machine learning applications in government. This excerpt covers recent research on the potential of AI technology in the U.S. Federal government, as well as how government AI is being used in U.S. Air Force pilot training strategies. The post Augmenting & Automating Operations in Government appeared first on insideHPC.

|

|

by staff on (#49W2N)

NERSC recently hosted a successful GPU Hackathon event in preparation for their next-generation Perlmutter supercomputer. Perlmutter, a pre-exascale Cray Shasta system slated to be delivered in 2020, will feature a number of new hardware and software innovations and is the first supercomputing system designed with both data analysis and simulations in mind. Unlike previous NERSC systems, Perlmutter will use a combination of nodes with only CPUs, as well as nodes featuring both CPUs and GPUs. The post NERSC Hosts GPU Hackathon in Preparation for Perlmutter Supercomputer appeared first on insideHPC.

|

|

by staff on (#49VR6)

Today Univa announced that Western Digital is using the company's Navops Launch and Univa Grid Engine cloud software for large-scale deployments on AWS. "This successful collaboration with Univa and AWS shows the extreme scale, power and agility of cloud-based HPC to help us run complex simulations for future storage architecture analysis and materials science explorations. Using AWS to easily shrink simulation time from 20 days to 8 hours allows Western Digital R&D teams to explore new designs and innovations at a pace unimaginable just a short time ago." The post Univa Powers Million-core Cluster on AWS for Western Digital appeared first on insideHPC.

|

|

by Rich Brueckner on (#49VKX)

Ebru Taylak from the UberCloud gave this talk at the Stanford HPC Conference. "A scalable platform such as the cloud provides more accurate results, while reducing solution times. In this presentation, we will demonstrate recent examples of innovative use cases of HPC in the Cloud, such as “Personalized Non-invasive Clinical Treatment of Schizophrenia and Parkinson’s” and “Deep Learning for Steady State Fluid Flow Prediction”. We will explore the challenges for the specific problems, demonstrate how HPC in the Cloud helped overcome these challenges, look at the benefits, and share the learnings." The post Innovative Use of HPC in the Cloud for AI, CFD, & LifeScience appeared first on insideHPC.

|

|

by staff on (#49VR7)

Today Mellanox announced that its 200 Gigabit HDR InfiniBand solutions are now shipping worldwide to deliver leading efficiency and scalability to HPC, AI, cloud, storage and other data intensive applications. "The world-wide strategic race to Exascale supercomputing, the exponential growth in data we collect and need to analyze, and the new performance levels needed to support new scientific investigations and innovative product designs, all require the fastest and most advanced HDR InfiniBand interconnect technology. HDR InfiniBand solutions enable breakthrough performance levels and deliver the highest return on investment, enabling the next generation of the world’s leading supercomputers, hyperscale, Artificial Intelligence, cloud and enterprise datacenters." The post Now Shipping: Mellanox HDR 200G InfiniBand Solutions for Accelerating HPC & Ai appeared first on insideHPC.

|

|

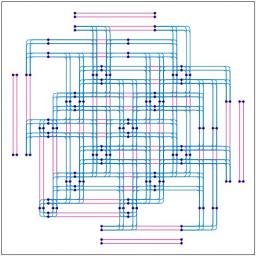

by staff on (#49VKZ)

Software-Defined Networking (SDN) is a concept that has emerged in recent years to decouple network control and forwarding functions. The one network type that does not require special effort to adjust it to the computational needs of high-performance computing, deep learning or any other data intensive application is InfiniBand. In this guest article, Mellanox Technologies' Gilad Shainer and Eitan Zahavi explore InfiniBand networks and the benefits and potential of software-defined networking. The post The Best Software-Defined Network for the Best Network Efficiency (or Getting Ready for Exascale) appeared first on insideHPC.

|

|

by staff on (#49SQG)

In this video from the IBM Think conference, Anthony J. Annunziata leads a panel discussion on the State of Quantum Computing in 2019. "Since launching the world's first quantum cloud service in 2016, IBM has been at the forefront of advancing the quantum industry and making this exciting technology accessible to organizations worldwide. Get a firsthand update from two of IBM's quantum executives on how this area is rapidly moving forward and what's next on the horizon." The post Video: The State of Quantum Computing in 2019 appeared first on insideHPC.

|

|

by Rich Brueckner on (#49SMG)

In this Intel podcast, Adnan Khaleel describes how Dell EMC is making enterprise AI implementation fast and easy with its Dell EMC Ready Solutions for AI. Adnan also illustrates how Dell EMC Ready Solutions are well suited for many different industries including financial services, insurance, pharmaceuticals and many more. The post Podcast: How Intel powers Dell EMC Ready Solutions for Ai appeared first on insideHPC.

|

|

by Rich Brueckner on (#49RA7)

Brett Newman from Microway gave this talk at the Stanford HPC Conference. "Figuring out how to map your dataset or algorithm to the optimal hardware design is one of the hardest tasks in HPC. We’ll review what helps steer the selection of one system architecture from another for AI applications. Plus the right questions to ask of your collaborators – and a hardware vendor. Honest technical advice, no fluff." The post Architecting the Right System for Your AI Application – without the Vendor Fluff appeared first on insideHPC.

|

|

by Rich Brueckner on (#49R1S)

The Milwaukee School of Engineering is seeking an HPC System Administrator in our Job of the Week. "The HPC Systems Administrator will lead efforts related to the daily operation of a small to medium-sized GPU-based high-performance computing (HPC) cluster. The individual in this position will provide engineering and administration support for HPC hardware and software." The post Job of the Week: HPC System Administrator at the Milwaukee School of Engineering appeared first on insideHPC.

|

|

by staff on (#49P8C)

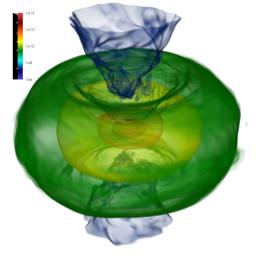

Over at XSEDE, Kimberly Mann Bruch & Jan Zverina from the San Diego Supercomputer Center write that researchers are using supercomputers to create detailed simulations of neutron star structures and mergers to better understand gravitational waves, which were detected for the first time in 2015. "XSEDE resources significantly accelerated our scientific output," noted Paschalidis, whose group has been using XSEDE for well over a decade, when they were students or post-doctoral researchers. "If I were to put a number on it, I would say that using XSEDE accelerated our research by a factor of three or more, compared to using local resources alone." The post Supercomputing Neutron Star Structures and Mergers appeared first on insideHPC.

|

|

by Rich Brueckner on (#49P8E)

Atos recently announced two new flash accelerators for HPC workloads as part of Atos Smart Data Management Suite. These two new solutions – Smart Burst Buffer (SBB) and Smart Bunch of Flash (SBF) – increase the performance and productivity of HPC I/O intensive applications. They are the most flexible and cost-effective flash accelerators on the […] The post Atos steps up with NVMeOF Flash Accelerator Solutions appeared first on insideHPC.

|

|

by Rich Brueckner on (#49P33)

Michael Aguilar from Sandia National Laboratories gave this talk at the Stanford HPC Conference. "This talk will discuss the Sandia National Laboratories Astra HPC system as a mechanism for developing and evaluating large-scale deployments of alternative and advanced computational architectures. As part of the Vanguard program, the new Arm-based system will be used by the National Nuclear Security Administration (NNSA) to run advanced modeling and simulation workloads for addressing areas such as national security, energy and science." The post ASTRA: A Large Scale ARM64 HPC Deployment appeared first on insideHPC.

|

|

by staff on (#49KR7)

Today the UberCloud announced that it has been recognized in three leading HPC industry awards for two of its innovative engineering projects in the cloud. "We are proud and humbled for the two prestigious Hyperion Innovation Excellence Awards and the HPCwire Editors’ Choice Award," said Wolfgang Gentzsch, President of UberCloud. "Our innovative projects on UberCloud’s Cloud Simulation Platform show engineers how they can benefit from the cloud to accelerate their simulations and innovate." The post UberCloud Recognized with three HPC Industry Awards appeared first on insideHPC.

|

|

by Rich Brueckner on (#49KR8)

"When we are optimizing, our objective is to determine which hardware resource the code is exhausting (there must be one, otherwise it would run faster!), and then see how to modify the code to reduce its need for that resource. It is therefore essential to understand the maximum theoretical performance of that aspect of the machine, since if we are already achieving the peak performance we should give up, or choose a different algorithm." The post Improving HPC Performance with the Roofline Model appeared first on insideHPC.

|

|

by Rich Brueckner on (#49KJX)

Addison Snell gave this talk at the Stanford HPC Conference. "Intersect360 Research returns with an annual deep dive into the trends, technologies and usage models that will be propelling the HPC community through 2017 and beyond. Emerging areas of focus and opportunities to expand will be explored along with insightful observations needed to support measurably positive decision making within your operations." The post The New HPC appeared first on insideHPC.

|

|

by staff on (#49KJZ)

Today Atos announced the deployment of a BullSequana X1000 supercomputer at CALMIP, one of the biggest multi-scale inter-university supercomputing centers in France. Called Olympe, the supercomputer will be used for over 200 research projects in materials, fluid mechanics, universe sciences and chemistry. "The supercomputer has a peak performance of 1.3 petaflop/s bringing 5 times more computing and processing power than that of the previous supercomputer, for the same energy consumption." The post Video: Atos Olympe Supercomputer Powers Research at CALMIP appeared first on insideHPC.

|

|

by Sarah Rubenoff on (#49KE4)

High performance computing has gone through numerous shifts in the past few years. The new HPC, inclusive of analytics and AI, and with its wide range of technology components and choices, presents significant challenges to a commercial enterprise. A new report from Lenovo explores the intersection of HPC and AI, as well as tips on how to choose the right HPC provider for your enterprise. The post Advancement in AI & Machine Learning Calls For New HPC Solutions appeared first on insideHPC.

|

|

by staff on (#49H5J)

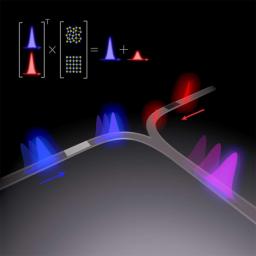

In this video, IBM researchers describe an all-optical approach to developing direct in-memory multiplication on an integrated photonic device based on non-volatile multilevel phase-change memories. Using integrated photonic technology will potentially offer attractive solutions for using light to carry out computational tasks on a chip in future. The post Video: In-Memory Computing Using Photonic Memory Devices appeared first on insideHPC.

|

|

by staff on (#49H5M)

Researchers at the Barcelona Supercomputing Centre have created a new artificial intelligence-based computational method that accelerates the identification of new genes related to cancer. Prof. Pržulj highlights that this new method to analyze cells "enables the identification of perturbed genes in cancer that do not appear as perturbed in any data type alone. This discovery emphasizes the importance of integrative approaches to analyze biological data and paves the way towards comparative integrative analyses of all cells." The post Ai allows for identification of new cancer genes appeared first on insideHPC.

|

|

by staff on (#49H5P)

Today Excelero announced that it has appointed HPC pioneer Sven Breuner as Field Chief Technical Officer (CTO). With over a decade of leadership in the HPC industry, Sven will help expand the innovative capabilities in Excelero’s award-winning NVMesh solution by anticipating customers' future requirements while bringing deep technical and product knowledge to the field teams. The post Sven Breuner Joins Excelero as Field CTO appeared first on insideHPC.

|

|

by Rich Brueckner on (#49H0D)

The PASC19 conference will feature a Public Lecture by Keren Bergman on Flexibly Scalable High Performance Architectures with Embedded Photonics. The event takes place June 12-14 in Zurich, Switzerland, the week before ISC 2019. "Integrated silicon photonics with deeply embedded optical connectivity is on the cusp of enabling revolutionary data movement and extreme performance capabilities." The post PASC19 to feature talk on Scalable High Performance Architectures with Embedded Photonics appeared first on insideHPC.

|

|

by Rich Brueckner on (#49H0F)

Greg Kurtzer from Sylabs gave this talk at the Stanford HPC Conference. "Singularity is a widely adopted container technology specifically designed for compute-based workflows making application and environment reproducibility, portability and security a reality for HPC and AI researchers and resources. Here we will describe a high-level overview of Singularity and demonstrate how to integrate Singularity containers into existing application and resource workflows as well as describe some new trending models that we have been seeing." The post Singularity: Container Workflows for Compute appeared first on insideHPC.

|

|

by Rich Brueckner on (#49EMK)

The Arm Research Summit has issued its Call for Submissions. The event takes place September 15-18 in Austin, Texas. As a one-of-a-kind forum for topics that are shaping our world, the Summit focuses on presentations and discussions, and welcomes research at all stages of development and/or publication. The committee encourages submissions of early-stage, high-impact ideas seeking feedback, new […] The post Call for Submissions: Arm Research Summit in Austin appeared first on insideHPC.

|

|

by staff on (#49EMN)

Today the 25 Gigabit Ethernet Consortium announced the availability of a low-latency forward error correction (FEC) specification for 50 Gbps, 100 Gbps and 200 Gbps Ethernet networks. "Five years ago, only HPC developers cared about low latency, but today latency sensitivity has come to many more mainstream applications," said Rob Stone, technical working group chair of the 25G Ethernet Consortium. "With this new specification, the consortium is improving the single largest source of packet processing latency, which improves the performance that high-speed Ethernet brings to these applications." The post 25 Gigabit Ethernet Consortium Offers Low Latency Specification for 50GbE, 100GbE and 200GbE HPC Networks appeared first on insideHPC.

|

|

by staff on (#49EFC)

Today Hyperion Research launched a new Cloud Application Assessment Tool. Available free of charge to the public, this tool is an interactive grading system that assesses the characteristics of HPC applications to help users understand whether they fit better on an on-premise HPC system or are well suited to running in a public cloud. "By using this tool, end users will be able to see which characteristics of their applications drive or restrict the ability to run them in clouds, and what future improvements in public clouds may make their application fit better in the cloud. It also shows the characteristics that drive certain applications to stay on-premise." The post Hyperion Research Launches Cloud Application Assessment Tool appeared first on insideHPC.

|

|

by Rich Brueckner on (#49EFE)

Steve Oberlin from NVIDIA gave this talk at the Stanford HPC Conference. "Clearly, AI has benefited greatly from HPC. Now, AI methods and tools are starting to be applied to HPC applications to great effect. This talk will describe an emerging workflow that uses traditional numeric simulation codes to generate synthetic data sets to train machine learning algorithms, then employs the resulting AI models to predict the computed results, often with dramatic gains in efficiency, performance, and even accuracy." The post HPC + Ai: Machine Learning Models in Scientific Computing appeared first on insideHPC.

|

|

by staff on (#49EFF)

A new special report from insideHPC, courtesy of Dell EMC and NVIDIA, explores current machine learning applications in government. This excerpt breaks down solutions for AI in government, including Dell EMC Ready Solutions. Dell EMC Ready Solutions for AI are validated hardware and software stacks optimized to accelerate AI initiatives, shortening the time to architect a new solution by six to 12 months. The post Finding a Solution for Ai in Government appeared first on insideHPC.

|

|

by Rich Brueckner on (#49CFA)

Michael Jennings from LANL gave this talk at the Stanford HPC Conference. "As containers initially grew to prominence within the greater Linux community, particularly in the hyperscale/cloud and web application space, there was very little information out there about using Linux containers for HPC at all. In this session, we'll confront this problem head-on by clearing up some common misconceptions about containers, bust some myths born out of misunderstanding and marketing hype alike, and learn how to safely (and securely!) navigate the Linux container landscape with an eye toward what the future holds for containers in HPC and how we can all get there together!" The post Video: Container Mythbusters appeared first on insideHPC.

|

|

by staff on (#49C7J)

A single goal, accelerating Python execution performance, led to the creation of Intel Distribution for Python, a set of tools designed to provide Python application performance right out of the box, usually with no code changes required. This sponsored post from Intel highlights how Intel SDK can enhance Python development and execution, as Python continues to grow in popularity. The post Python Power: Intel SDK Accelerates Python Development and Execution appeared first on insideHPC.

|

|

by Rich Brueckner on (#49APW)

The Creative Destruction Lab is now accepting applications for their 2019 Quantum Machine Learning and Blockchain-AI Incubator Streams. As a seed-stage program for massively scalable, science-based companies, the mission of the CDL is to enhance the prosperity of humankind. "The CDL Blockchain-AI Incubator Stream is a 10-month incubator program which gives blockchain founders personalized mentorship from blockchain thought leaders, successful tech entrepreneurs, scientists, economists and venture capitalists. Founders are also eligible for up to US$100K in investment, in exchange for equity." The post Seeking Seed Money? Creative Destruction Lab offers Incubator Streams for Quantum, Blockchain, and Ai appeared first on insideHPC.

|